Earlier this month, the Russian weapons manufacturer Kalashnikov Group made a low-key announcement with frightening implications. The company revealed it had developed a range of combat robots that are fully automated and use artificial intelligence to identify targets and make independent decisions. The revelation rekindled the simmering, and controversial, debate over autonomous weaponry and raised the question: at what point do we hand control of lethal weapons over to artificial intelligence?

In 2015, over one thousand robotics and artificial intelligence researchers, including Elon Musk and Stephen Hawking, signed an open letter urging the United Nations to impose a ban on the development and deployment of weaponized AI. The wheels of bureaucracy move slowly though, and the UN didn't respond until December 2016. The UN has now formally convened a group of government experts as a step towards implementing a formal global ban, but realistically speaking this could still be several years away.

The fully-automated Kalashnikov

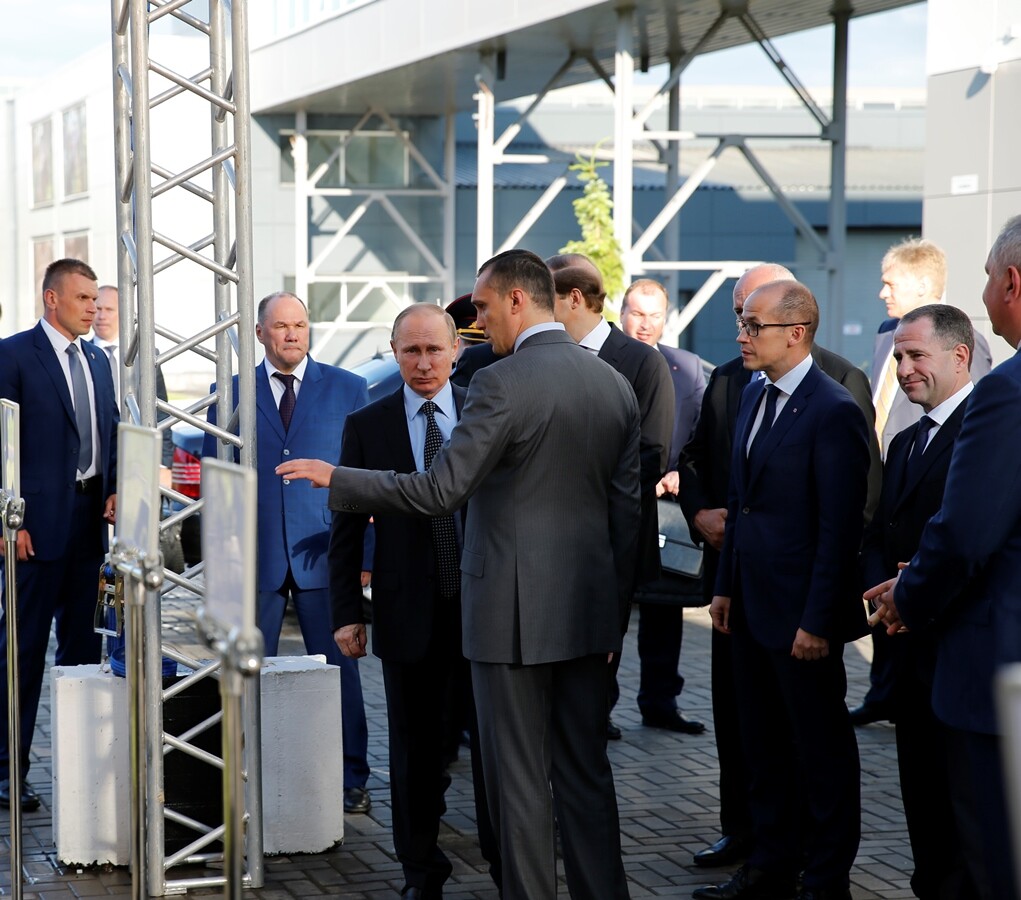

While the United Nations is currently forming a group to discuss a potential ban on AI-controlled weaponry, Russia is already about to demonstrate actual autonomous combat robots. A few days after Russian President Vladimir Putin visited the weapons manufacturer Kalashnikov Group, infamous for inventing the AK-47, known as the most effective killing machine in human history, came the following announcement:

"In the imminent future, the Group will unveil a range of products based on neural networks," said Sofiya Ivanova, the Group's Director for Communications. "A fully automated combat module featuring this technology is planned to be demonstrated at the Army-2017 forum," she added, in a short statement to the state-run news agency TASS.

The brevity of the comments makes it unclear exactly what has been produced or how it would be deployed, but the language is unambiguous. The company has developed a "fully automated" system that is based on "neural networks." This weaponized "combat module" can apparently identify targets and make decisions on its own. And we'll be seeing it soon.

The "Terminator conundrum"

The question of whether we should remove human oversight from any automated military operation has been hotly debated for some time. In the US there is no official consensus on the dilemma. Known informally inside the corridors of the Pentagon as "the Terminator conundrum," the question being asked is whether stifling the development of these types of weapons would actually allow other, less ethically minded countries to leap ahead. Or is it a greater danger to ultimately allow machines the ability to make life-or-death decisions?

Currently the United States' official stance on autonomous weapons is that a human must be in the loop to approve any engagement that involves lethal force. Autonomous systems can only be deployed for "non-lethal, non-kinetic force, such as some forms of electronic attack."

In a compelling essay co-authored by retired US Army Colonel Joseph Brecher, the argument against the banning of autonomous weaponry is starkly presented. A scenario is described whereby two combatants are facing off. One holds an arsenal of fully autonomous combat robots, while the other has similar weaponry with only semi-autonomous capabilities that keep a human in the loop.

In this scenario the combatant with the semi-autonomous capability is at two significant disadvantages. Speed of course is an obvious concern. An autonomous system will inherently be able to act faster and defeat a system that needs to pause for a human to approve its lethal actions.

The second disadvantage of a human-led system is its vulnerability to hacking. A semi-autonomous system, be it on the ground or in the air, requires a communications link that could ultimately be compromised. Turning a nation's combat robots on itself would be the ultimate act of future cyberwarfare, and the more independent a system is, the more closed off and secure it can be against these kinds of outside compromises.

The confronting conclusion to this line of thinking is that restraining the development of lethal, autonomous weapon systems would actually strengthen the military force of those less-scrupulous countries that pursue those technologies.

Could AI remove human error?

Putting aside the frightening mental image of autonomous robot soldiers for a moment, some researchers are arguing that a more thorough implementation of artificial intelligence into military processes could actually improve accuracy and reduce accidental civilian fatalities.

Human error or indiscriminate targeting often results in those awful news stories showing civilians bloodied by bombs that hit urban centers by mistake. What if artificially intelligent weapons systems could not only find their own way to a specific target, but also accurately identify the person and hold off on weapons deployment before autonomously going in for the kill at a time they deem appropriate and safer for non-combatants?

In a report supported by the Future of Life Institute, ironically bankrolled by Elon Musk, research scientist Heather Roff examines the current state of autonomous weapon systems and considers where future developments could be headed. Roff writes that there are two current technologies sweeping through new weapons development.

"The two most recent emerging technologies are Target Image Discrimination and Loitering (i.e. self-engagement)," writes Roff. "The former has been aided by improvements in computer vision and image processing and is being incorporated on most new missile technologies. The latter is emerging in certain standoff platforms as well as some small UAVs. They represent a new frontier of autonomy, where the weapon does not have a specific target but a set of potential targets, and it waits in the engagement zone until an appropriate target is detected. This technology is on a low number of deployed systems, but is a heavy component of systems in development."

Of course, these systems would still currently require a "human in the loop" to trigger any lethal action, but at what point is the human actually holding back the efficiency of the system?

These are questions that no one currently has good answers for.

With the Russian-backed Kalashnikov Group announcing the development of a fully automated combat system, and the United Nations skirting around the issue of a global ban on autonomous weaponry, we are quickly going to need to figure those answers out.

The "Terminator conundrum" may have been an amusing thought experiment for the last few years, but the science fiction is quickly becoming science fact. Are we ready to give machines the authority to make life or death decisions?