Most people wouldn't think twice about the best way to stab a piece of food on their plate, but when viewed through the eyes of a robot this simple action is surprisingly complex. Now, researchers at the University of Washington have developed an autonomous robot arm that can figure out the best way to pick up food of any shape and bring it up to a user's mouth, which should make mealtimes easier for people with limited mobility.

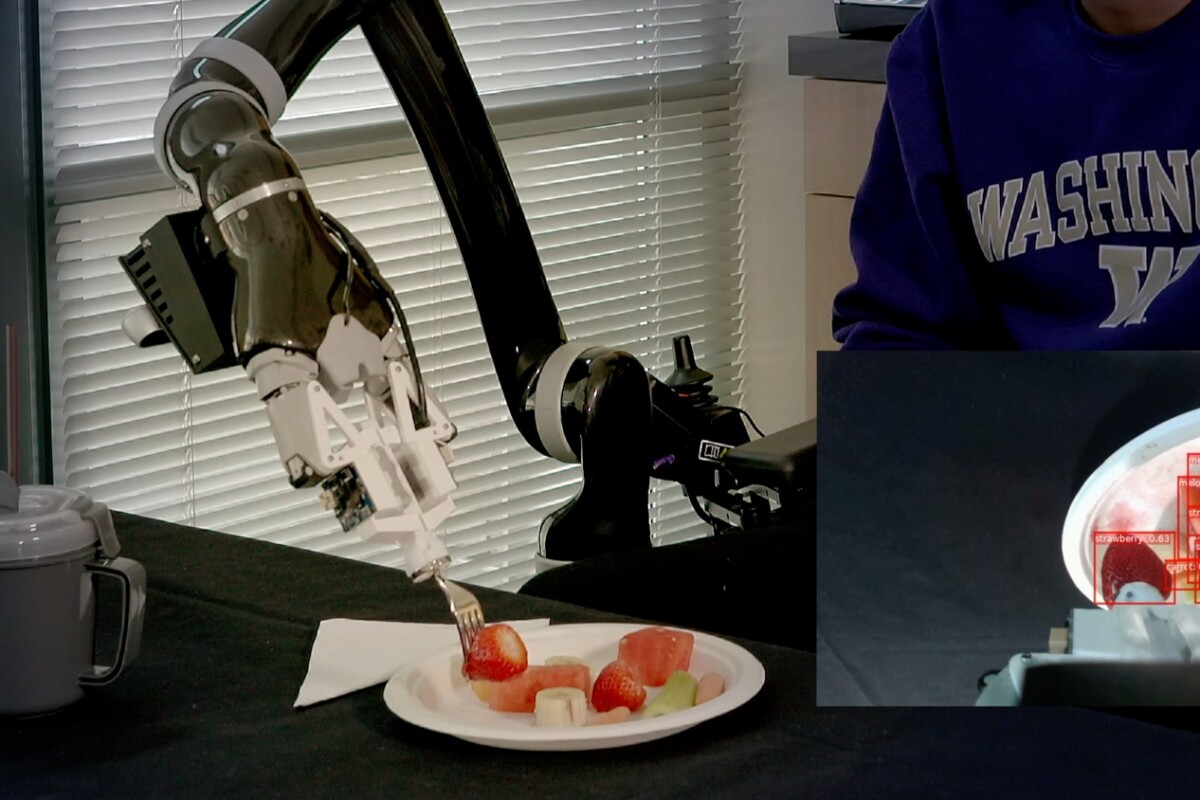

The robot, known as ADA for Assistive Dexterous Arm, is designed to attach to a person's wheelchair, and help them eat on demand. Built into the arm are haptic sensors to help it feel how much force it's applying, as well as a camera to identify what's on a plate and the face of the person it's feeding.

The idea is that when it's time to chow down, the arm scans the plate with the camera to figure out the shape and size of each piece of food. It then works out how best to skewer each item, picks it up, and uses the camera to find the diner's face so it can move the fork within easy biting range.

But the underlying algorithms the team developed are more complicated than you might expect. The first one is called RetinaNet, and it's designed to scan the plate, identify each food item and place a virtual frame around it. The second, known as SPNet, analyzes the type of food in each frame and figures out the best way to pick it up.

"Many engineering challenges are not picky about their solutions, but this research is very intimately connected with people," says Siddhartha Srinivasa, corresponding author of the study. "If we don't take into account how easy it is for a person to take a bite, then people might not be able to use our system. There's a universe of types of food out there, so our biggest challenge is to develop strategies that can deal with all of them."

These algorithms were developed by first observing how human test subjects handled different types of food, including relatively hard foods like carrots, soft ones like bananas, and those with thick skins but soft innards like grapes. The subjects were given force-sensing forks and asked to skewer and pick up bits of food as they normally would.

These tests recorded how much force needs to be applied to foods with different consistencies, such as carrots and bananas. On top of that, they revealed a few tricks that we don't even realize we're using but that are invaluable to a robot trying to learn. The test subjects, for example, tended to spear soft foods like bananas at an angle so they didn't slide off the fork. And for longer items like carrot sticks, they targeted one end so it was easier for the diner to take a bite.
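To make those two tricks concrete, here is a minimal Python sketch of how they might be written down as rules. It is not the team's SPNet, which learns its strategies from data. Every name and number in it (`plan_skewer`, `SOFT_FOODS`, the 30-degree tilt, the aspect-ratio threshold, the end offset) is an assumption made up for illustration.

```python
from dataclasses import dataclass
from typing import Tuple

# All values below are illustrative assumptions, not figures from the study.
SOFT_FOODS = {"banana"}      # labels treated as soft
TILT_ANGLE_DEG = 30.0        # assumed fork tilt for soft foods
LONG_ASPECT_RATIO = 2.0      # assumed threshold for calling an item "long"
END_OFFSET = 0.25            # aim this fraction of the length in from one end


@dataclass
class Box:
    """Axis-aligned frame around one food item, in pixels."""
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float


@dataclass
class SkewerPlan:
    target: Tuple[float, float]  # point the fork should land on, in pixels
    tilt_deg: float              # 0.0 means the fork comes straight down


def plan_skewer(label: str, box: Box) -> SkewerPlan:
    """Pick a fork target and tilt using the two observed tricks."""
    # Default target: the center of the frame.
    cx = box.x + box.width / 2
    cy = box.y + box.height / 2

    long_side = max(box.width, box.height)
    short_side = min(box.width, box.height)

    # Trick 1: for long items, aim near one end so a bite is easy to take.
    if short_side > 0 and long_side / short_side >= LONG_ASPECT_RATIO:
        if box.width >= box.height:
            cx = box.x + box.width * END_OFFSET
        else:
            cy = box.y + box.height * END_OFFSET

    # Trick 2: tilt the fork for soft foods so they don't slide off.
    tilt = TILT_ANGLE_DEG if label in SOFT_FOODS else 0.0
    return SkewerPlan(target=(cx, cy), tilt_deg=tilt)


if __name__ == "__main__":
    print(plan_skewer("carrot", Box(0, 0, 100, 20)))
    print(plan_skewer("banana", Box(10, 10, 40, 40)))
```

In this toy version a carrot stick in a 100-by-20-pixel frame gets a straight-down fork aimed a quarter of the way along its length, while a banana slice gets a tilted fork aimed at its center. A real system would need far more than a label and a frame, which is presumably why the team turned to a learned model.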

These tricks were all incorporated into ADA's algorithms, to make it more user friendly. The end result sounds like a more advanced version of other mobility aids like the Obi arm.

"Ultimately our goal is for our robot to help people have their lunch or dinner on their own," says Srinivasa. "But the point is not to replace caregivers: We want to empower them. With a robot to help, the caregiver can set up the plate, and then do something else while the person eats."

The research was published in the journal IEEE Robotics and Automation Letters. The team demonstrates ADA in the video below.

Source: University of Washington