While we're seeing some exciting leaps forward in virtual reality and augmented reality right now, the human body is still adjusting to these types of immersive experiences. Now scientists may have found a fix for one of the recurring problems of VR/AR: eye fatigue.

Strap on a VR headset or some AR glasses for an extended period of time, and you might start to feel the strain on your eyes as you would when looking at any electronic screen. The long-term effects on the eyes are still unknown, but you're basically looking at an artificial representation of a 3D world rather than real life.

The use of a stereoscopic display (showing the left and the right eye slightly different images to create the illusion of depth) creates what's technically known as a vergence-accommodation conflict. Ordinarily our eyes converge and focus at the same distance, whether they're looking at something far off or nearby. In VR, they're asked to converge on virtual objects at continually changing depths even though the image they have to focus on stays at a fixed distance, on a screen only a few inches away, and that means a disconnect between where our eyes are looking and where they're focusing.
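To put rough numbers on that disconnect, here is a minimal Python sketch. It is not taken from the researchers' work: the function names, the 63 mm interpupillary distance and the 2 m focal distance are illustrative assumptions. It relies only on standard geometry, with the vergence angle computed from the triangle formed by the two eyes and the fixation point, and focusing demand expressed in diopters (1 divided by the distance in metres).

```python
import math

# Assumed values for illustration only; neither comes from the article.
IPD_M = 0.063            # typical adult interpupillary distance, in metres
FOCAL_DISTANCE_M = 2.0   # fixed distance at which the headset image is in focus


def vergence_angle_deg(distance_m, ipd_m=IPD_M):
    """Angle between the two lines of sight when both eyes fixate
    a point straight ahead at distance_m."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def accommodation_diopters(distance_m):
    """Focusing demand for an object at distance_m (diopters = 1 / metres)."""
    return 1.0 / distance_m


def va_conflict_diopters(virtual_distance_m, focal_distance_m=FOCAL_DISTANCE_M):
    """Mismatch between where the eyes converge (the virtual object)
    and where they must focus (the fixed image plane)."""
    return abs(accommodation_diopters(virtual_distance_m)
               - accommodation_diopters(focal_distance_m))


if __name__ == "__main__":
    for d in (0.5, 1.0, 2.0, 10.0):
        print(f"object at {d:>4} m: "
              f"vergence {vergence_angle_deg(d):.2f} deg, "
              f"conflict {va_conflict_diopters(d):.2f} D")
```

Under these assumptions the mismatch is zero only when the virtual object sits at the fixed focal distance, and it grows quickly as objects move closer to the viewer.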

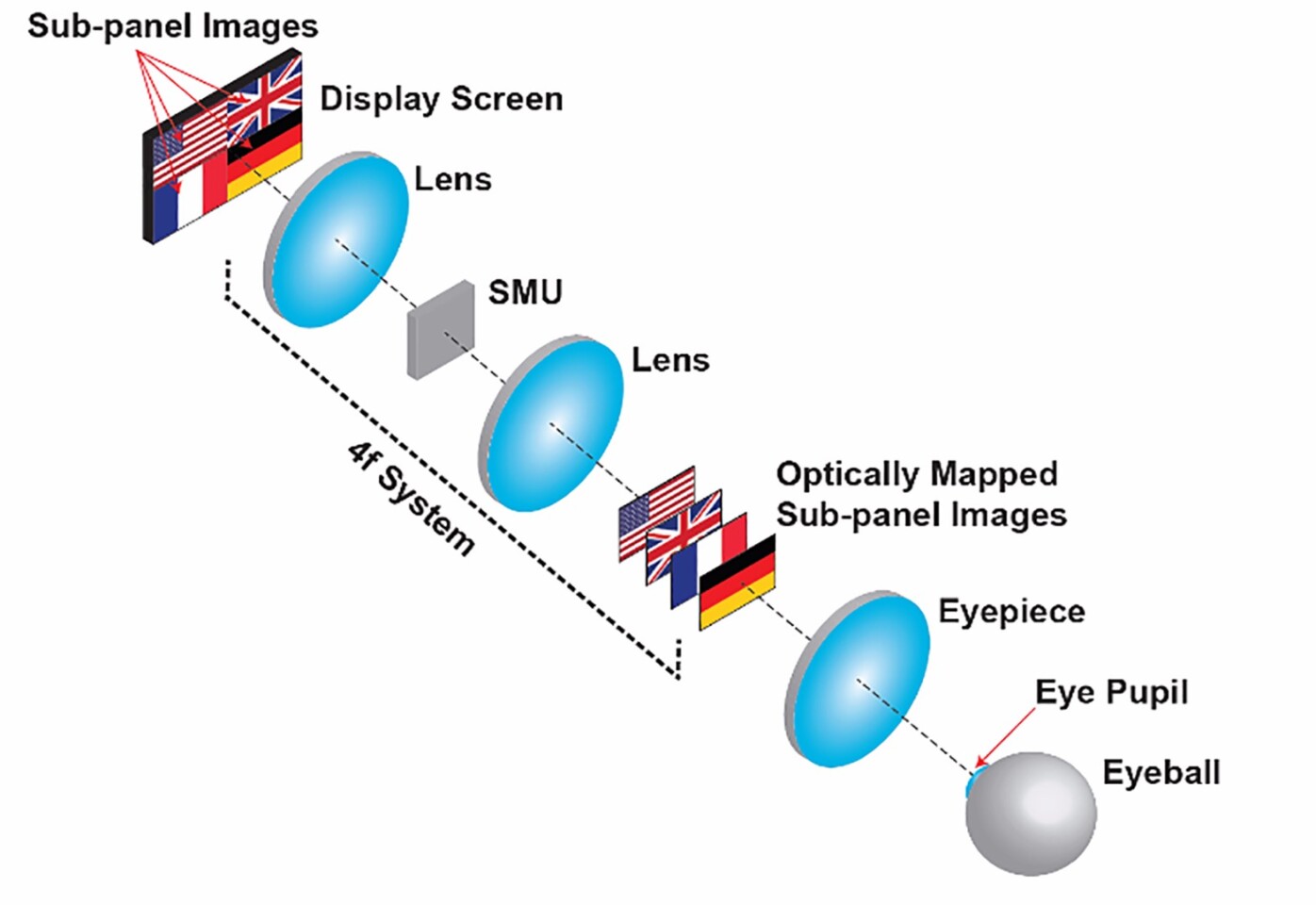

Enter a new type of display module developed by scientists from the University of Illinois at Urbana-Champaign. Using an approach called optical mapping, realistic 3D images can be created from a series of 2D subpanel images. Even though the display remains very close to the eyes, genuine depth cues are created.

Thanks to algorithms created by the team behind the optical mapping near-eye (OMNI) technology, the eyes get their natural focus cues just as they do in the real world, limiting the vergence-accommodation conflict. In other words, where our eyes are pointing matches up with where our eyes are focusing again.

"People have tried methods similar to ours to create multiple plane depths, but instead of creating multiple depth images simultaneously, they changed the images very quickly," explains Liang Gao, one of the researchers on the team. "However, this approach comes with a trade-off in dynamic range, or level of contrast, because the duration each image is shown is very short."

The researchers built the new OMNI technology into an OLED screen divided into four subpanels, using a spatial multiplexing technique to set the depth of each subpanel while keeping objects aligned along the same viewing axis.
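One way to picture the content side of a four-plane display is as a sorting problem: every pixel in a scene has to be drawn on whichever plane lies closest to its true depth. The Python sketch below illustrates that idea only. It is not the team's algorithm, and the function name, the plane distances and the choice to measure closeness in diopters are all assumptions made for the example.

```python
# Hypothetical plane distances in metres, one per subpanel (not from the article).
PLANE_DISTANCES_M = [0.25, 0.5, 1.0, 4.0]


def assign_to_planes(depth_map_m, plane_distances_m=PLANE_DISTANCES_M):
    """Split a depth map (rows of distances in metres) into one binary
    mask per depth plane. Each pixel goes to the plane nearest to it in
    diopters (1 / metres), an assumed measure of focusing effort."""
    plane_diopters = [1.0 / d for d in plane_distances_m]
    masks = [[[0] * len(row) for row in depth_map_m]
             for _ in plane_distances_m]
    for y, row in enumerate(depth_map_m):
        for x, depth_m in enumerate(row):
            pixel_diopters = 1.0 / depth_m
            nearest = min(range(len(plane_diopters)),
                          key=lambda i: abs(plane_diopters[i] - pixel_diopters))
            masks[nearest][y][x] = 1
    return masks


if __name__ == "__main__":
    # A tiny 2x2 "scene": near object, far background, two mid-range points.
    scene = [[0.3, 5.0],
             [1.0, 0.6]]
    for distance, mask in zip(PLANE_DISTANCES_M, assign_to_planes(scene)):
        print(f"plane at {distance} m: {mask}")
```

In a sketch like this, each mask would select the part of the image to show on one subpanel, so that near and far objects end up at different optical depths at the same moment rather than being flashed one after another.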

With the help of a demo black-and-white video of parked cars close to and far from the camera, the team was able to generate depth perception in a way that fits naturally with the way the human eye perceives depth.

The early results are promising, but it's going to be some time before we see these modules inside an HTC Vive or an Oculus Rift. The developers are working on reducing the size, weight and power consumption of the technology before they can start talking to commercial partners about mass production.

A paper discussing the new 3D mapping approach has been published in Optics Letters.

Source: The Optical Society