As Germany and France move towards elections in 2017, pressure has been mounting on tech giants such as Google and Facebook to tackle the challenge of fake news. But in an age of highly politicized news cycles, the question everyone is asking is: how can truth be safely separated from fiction without censoring genuine alternative news sources?

In the first part of our investigation, we looked at the history of fake news and found that this is not a new problem. We also examined the fake news economy that has arisen as digital communication fostered a more fragmented media landscape.

The Facebook and Google approach

After a contentious 2016 US election, Facebook faced strong criticism for failing to police the torrent of fake news articles circulating on its platform. Chief executive Mark Zuckerberg had long insisted that Facebook was not a media publisher but a technology company, and that users were primarily responsible for the content spread over the platform. But by late 2016, the problem was too great to ignore, and Facebook announced a raft of measures to tackle the spread of misinformation.

Zuckerberg was careful to state that "we don't want to be arbiters of truth," before laying out a series of measures under development. These included disrupting the economics of fake news websites by blocking ad sales to disputed sites, and introducing a third-party verification process to label certain articles as false or disputed.

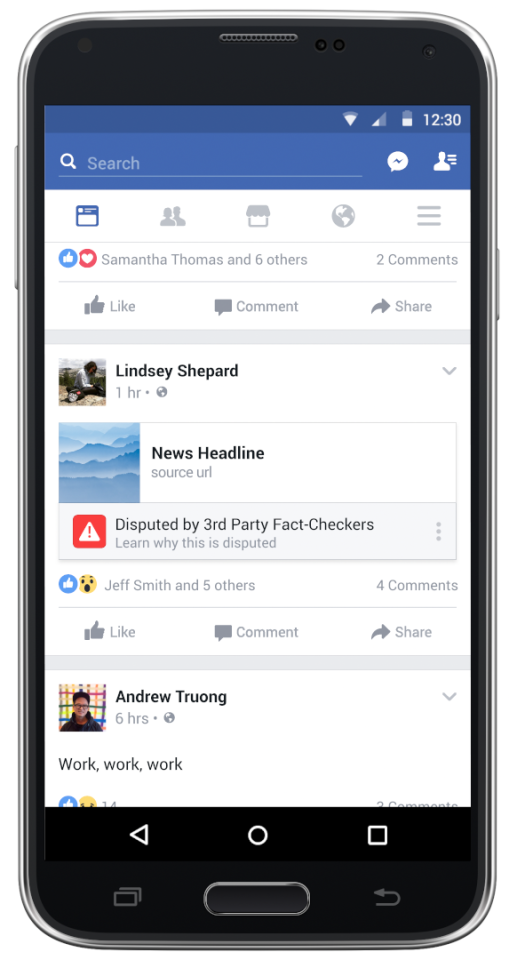

From early 2017, Facebook began testing these new measures in the US and rolling them out more widely in Germany and France. Users can flag articles they suspect are fake news, which forwards the story to a third-party fact-checking organization that evaluates the accuracy of the piece. If the story is found to be false, it is prominently flagged as "Disputed by 3rd Party Fact-Checkers," and users see a warning before they choose to share it further.

Google has also launched an initiative dubbed "CrossCheck," which will initially run in France in early 2017, in the lead-up to the country's presidential election. CrossCheck partners with a number of French news organizations to fact-check articles that users submit as suspected falsehoods. Much like Facebook's plan, articles deemed false by at least two independent news organizations will be flagged as fake news in users' feeds.

The post-truth era

While these strategies are a welcome step from the tech sector, we face an increasingly existential crisis over what even constitutes fake news. When the President of the United States accuses an organization such as CNN of being fake news, it allows certain sectors of the community to attack the credibility of whoever is ultimately determining what is and isn't true.

Oxford Dictionaries declared "post-truth" to be their word of the year for 2016, defining it as an adjective, "relating to or denoting circumstances in which objective facts are less influential in shaping public opinion than appeals to emotion and personal belief."

It seemed 2016 was not only a year of political destabilization but also one of fundamental philosophical destabilization. Even objectively credible sources were undermined, as previously trustworthy scientific institutions were fiercely politicized. For every scientist saying one thing, someone was sure to be dredged up to counter the point, with no regard for their qualifications, or lack thereof. Climate data, immunizations, and even crowd-size estimates were all treated as up for debate in some circles, as scientists were accused of harboring political agendas. Any unreported information that contradicted the general consensus was immediately claimed to have been suppressed.

We have now reached a point where it's fair to ask: how can we even determine whether a news story is factually verified when the sources of the facts are themselves in question?

Can AI help?

Many organizations are now looking to technology to help separate truth from fiction more clearly. ClaimBuster, from the University of Texas, is an algorithm that analyzes sentences in real time for the key structures and words that correspond with factual statements. The system does not rate whether a statement is true, but it scores how check-worthy a statement is on a scale of 0 to 1.0. It is an early step towards a system that can identify when someone is making a factual claim and then verify that claim automatically.

While AI systems such as this are undoubtedly useful for live fact-checking of verifiable statements, they fall short because a great deal of news reporting consists of firsthand accounts of witnessed events or newly compiled statistics, which have no existing record to be checked against.

Additionally, the interpretive quality of journalism cannot easily be replaced by artificial intelligence. Discussing how news can be shaped by the language used to recount it, Guardian reporter Martin Robbins uses the example of President Trump's recent executive order temporarily barring entry to the United States for people from certain countries. Some news organizations have referred to the order as a "Muslim ban." While the order itself targets specific countries rather than a religion, Robbins explains, "To say that Trump's order is a ban on Muslims is technically false, but to suggest that it doesn't target Muslims is equally disingenuous."

Robbins describes the current climate as producing "a sort of quantum news," in which stories can be both true and false at the same time. When language can be so heavily loaded with an agenda, how can we trust any third party to verify stories for us? The danger is that political agendas can easily be slipped into articles that still count as truthful reporting. Would Facebook judge a story about Trump's immigration ban that uses the phrase "Muslim ban" to be fake news? And what hope could an algorithm have of deciphering the intricacies of human language?

It's up to all of us

In a conversation with Twitter CEO Jack Dorsey in December 2016, controversial whistleblower Edward Snowden voiced concern that these attempts by technology platforms to classify the truthfulness of content could lead to censorship.

"The problem of fake news isn't solved by hoping for a referee," Snowden said. "We have to exercise and spread the idea that critical thinking matters now more than ever, given the fact that lies seem to be getting very popular."

Regardless of our political leanings, we all need to approach information with a critical and engaged perspective. The issue of fake news is not necessarily a partisan one. In addition to fake news peddlers who are purely profit-driven, recent reports suggest that, with President Trump in power, fake news has begun to skew further left, and that liberals as well as conservatives are responsible for spreading misinformation.

Critical thinking is an apolitical act and ultimately we are all responsible for the information we consume and share.

Fake news has existed as long as people have told stories, but with the internet dramatically democratizing how information is transmitted, the onus is now more than ever on all of us to become smarter, more skeptical and more critical.
