Although humans perform intricate hand movements like rolling, pivoting, bending and grabbing differently shaped objects without a second thought, such dexterity is still beyond the grasp of most robots. But a team of computer scientists at the University of Washington has upped the dexterity stat of a five-fingered robotic hand that can ape human movements and learn to improve on its own.

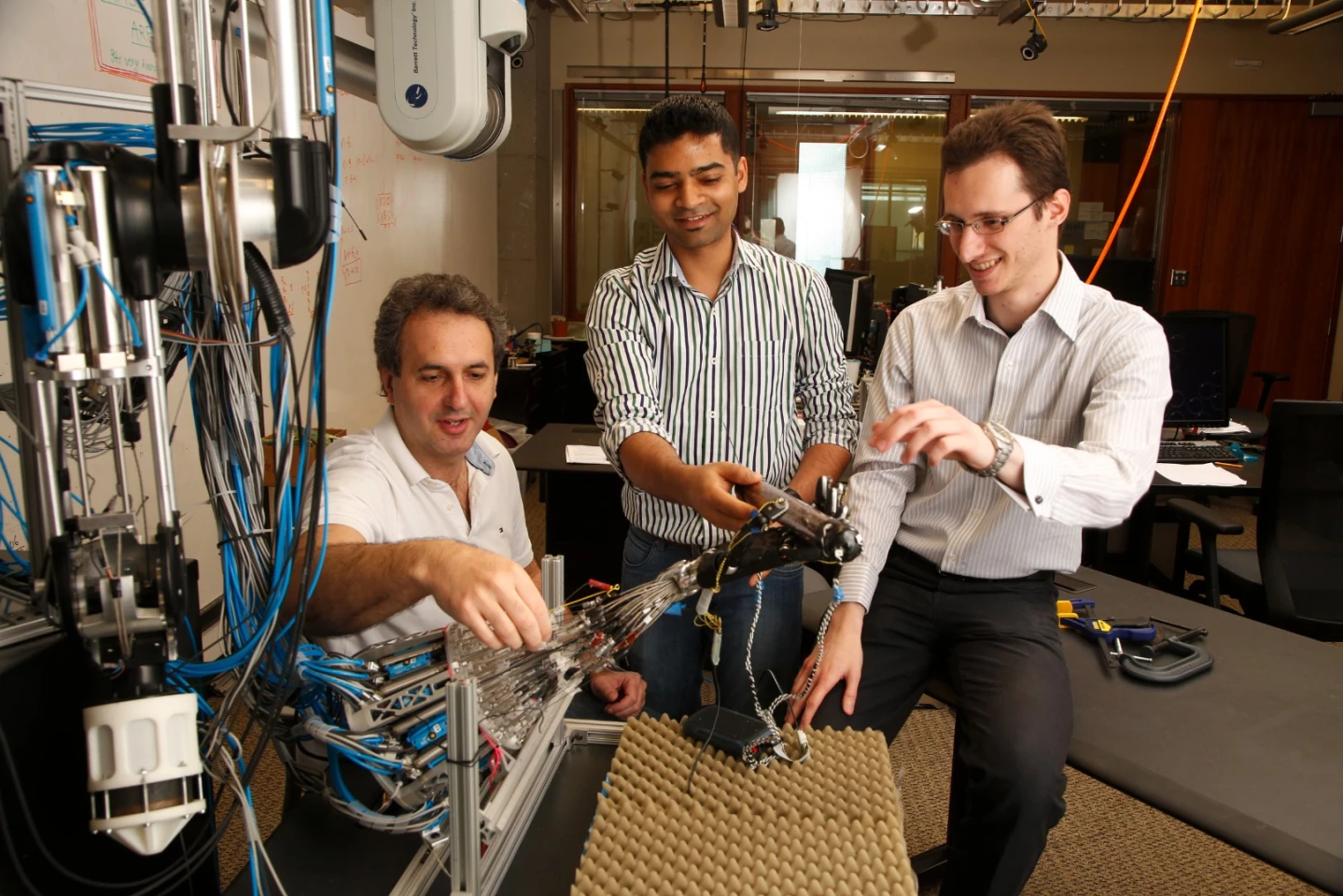

Built at a cost of around US$300,000, the University of Washington's design is based on a Shadow Hand skeleton, which is actuated with a custom pneumatic system that gives it the ability to move faster than a human hand. Although the hand is currently too expensive for commercial or industrial use, it does allow researchers to test control strategies that wouldn't otherwise be possible.

"Usually people look at a motion and try to determine what exactly needs to happen — the pinky needs to move that way, so we'll put some rules in and try it and if something doesn't work, oh the middle finger moved too much and the pen tilted, so we'll try another rule," says senior author and lab director Emo Todorov. "It's almost like making an animated film — it looks real but there was an army of animators tweaking it. What we are using is a universal approach that enables the robot to learn from its own movements and requires no tweaking from us."

The team had previously developed an algorithm that could model complex five-fingered behaviors, such as typing on a keyboard or catching a falling object, and simulate in real time the movements needed to achieve a desired outcome.

This computer model has now been applied to the robotic hand hardware to see how it performs in the real world. The robot hand has also been given the ability to learn and improve its performance without human input, thanks to a range of sensors and motion capture cameras that feed data to machine learning algorithms. These algorithms model both the basic physics involved and the actions required to achieve a goal. This contrasts with other approaches, which require each individual movement of a robotic hand to be programmed manually.

Currently, the autonomous learning system can improve at a specific task that involves manipulating one particular object in much the same way it has done before. The team is now aiming to demonstrate global learning, which would allow the hand to work out how to effectively manipulate unfamiliar objects using its 40 tendons and 21 joints.

The research was funded by the National Science Foundation and the National Institutes of Health.

A video of the hand in action is below. To see the robot learn how to twist an object, skip to 1:47.

Source: University of Washington