While we've looked at a couple of efforts to upgrade the humble white cane's capabilities, such as the ultrasonic Ultracane and the laser scanning cane, the decidedly low-tech white cane is still one of the most commonly used tools to help the visually impaired get around without bumping into things. Now, through their project called NAVI (Navigation Aids for the Visually Impaired), students at Germany's Universität Konstanz have leveraged the 3D imaging capabilities of Microsoft's Kinect camera to detect objects that lie outside a cane's small radius. The system alerts the wearer to the location of obstacles through audio and vibro-tactile feedback.

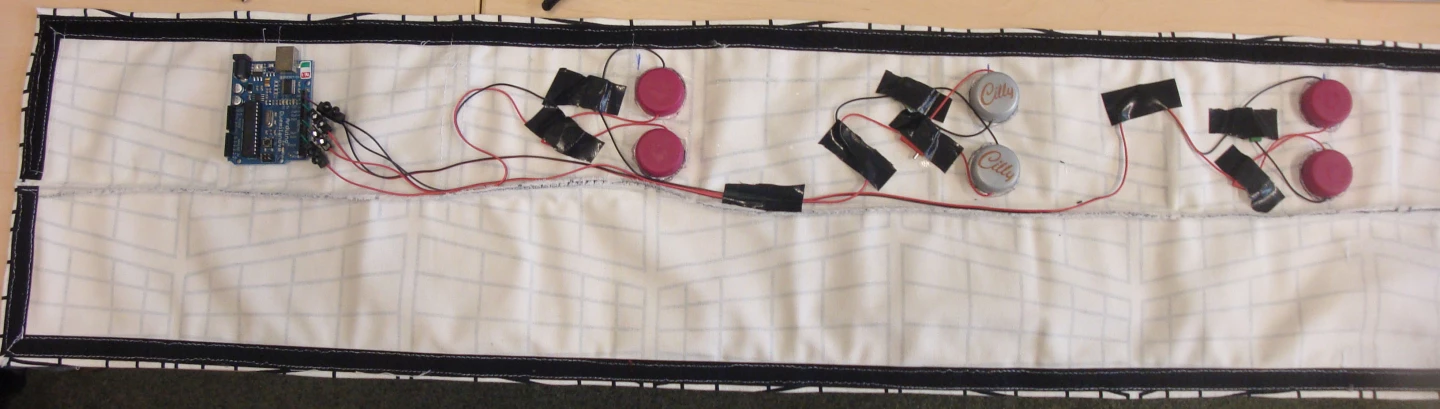

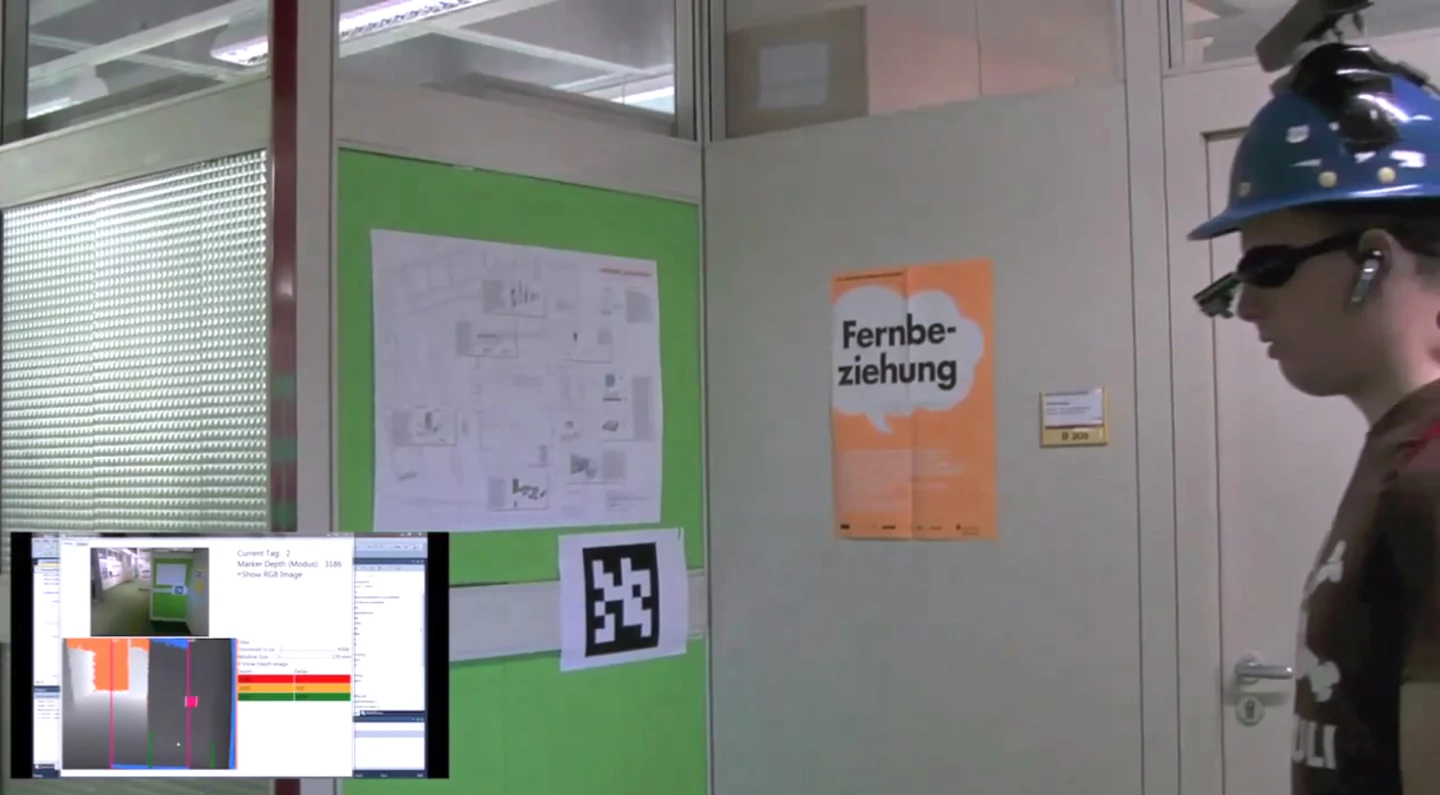

That's right, I said "wearer" because the system created by Master's students Michael Zöllner and Stephan Huber places the Kinect camera atop the visually impaired person's head thanks to a hard hat, some Sugru and a liberal application of duct tape. The image and depth information captured by the Kinect camera is sent to a Dell laptop mounted in a backpack, which is connected via USB to an Arduino 2009 board glued to a fabric belt worn around the waist.

The depth information captured by the Kinect camera is processed by software on the laptop and mapped onto three pairs of Arduino LilyPad vibration motors located at the upper and lower left, center and right of the fabric belt. When a potential obstacle is detected, its location is conveyed to the wearer by the vibration of the relevant motor.
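To give a feel for how such a depth-to-vibration mapping might work, here is a minimal Python sketch. It is not the students' code (theirs is written in C#). The function name `motor_levels`, the `near_mm` and `far_mm` thresholds, the linear ramp and the rule of using the nearest valid reading in each region are all assumptions made for illustration. The sketch splits a depth frame into a 2 x 3 grid matching the belt layout and turns the nearest reading in each cell into a 0 to 255 motor level.

```python
def motor_levels(depth, near_mm=500, far_mm=2500):
    """Map a depth frame to levels for a 2 x 3 grid of vibration motors.

    depth is a list of rows of distances in millimetres; 0 is treated
    as "no reading". near_mm and far_mm are hypothetical thresholds.
    Returns [[upper-left, upper-center, upper-right],
             [lower-left, lower-center, lower-right]], each 0..255.
    """
    rows, cols = len(depth), len(depth[0])
    levels = []
    for r in range(2):                      # upper half, lower half
        r0, r1 = r * rows // 2, (r + 1) * rows // 2
        row_levels = []
        for c in range(3):                  # left, center, right
            c0, c1 = c * cols // 3, (c + 1) * cols // 3
            valid = [d for line in depth[r0:r1] for d in line[c0:c1] if d > 0]
            if not valid:
                row_levels.append(0)        # nothing measured: motor off
                continue
            nearest = min(valid)
            # Linear ramp: far_mm or beyond -> 0, near_mm or closer -> 255.
            t = (far_mm - nearest) / (far_mm - near_mm)
            row_levels.append(round(255 * max(0.0, min(1.0, t))))
        levels.append(row_levels)
    return levels


# A tiny 4 x 6 "frame": everything far away except two obstacles.
frame = [[3000] * 6 for _ in range(4)]
frame[0][0] = 500     # very close, upper left
frame[3][5] = 1500    # mid-range, lower right
levels = motor_levels(frame)
```

In a setup like the one described, the six resulting values would then be sent over USB to the Arduino, which would drive the motors accordingly.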

A Bluetooth headset also provides audio cues and can be used to provide navigation instructions and read signs using ARToolKit markers placed on walls and doors. The Kinect's depth detection capabilities allow navigation instructions to vary based on the distance to a marker. For example, as the person walks towards a door they will hear "door ahead in 3, 2, 1, pull the door."
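The distance-dependent countdown can be pictured with another small Python sketch. Again, this is only an illustration: the names `door_cue` and `spoken_cues`, the one-metre step and the half-metre "within reach" threshold are assumptions, not details published by the students. The idea is simply to pick a phrase from the measured distance to the marker and to speak it only when it changes.

```python
import math


def door_cue(distance_m, step_m=1.0, reach_m=0.5):
    """Choose a phrase for a door marker at distance_m metres.

    step_m and reach_m are hypothetical values chosen for illustration.
    """
    if distance_m <= reach_m:
        return "pull the door"
    count = math.ceil(distance_m / step_m)  # remaining steps, rounded up
    if count > 3:
        return "door ahead"
    return str(count)


def spoken_cues(distances):
    """Return the cues to speak, skipping repeats of the previous cue."""
    cues, last = [], None
    for d in distances:
        cue = door_cue(d)
        if cue != last:
            cues.append(cue)
            last = cue
    return cues


# Simulated distance readings as the wearer approaches the door.
cues = spoken_cues([4.0, 3.6, 2.8, 2.5, 1.7, 0.9, 0.4])
```

Each returned phrase would be handed to a speech synthesizer in turn, producing a sequence along the lines of the example in the article.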

The students see their system as having advantages over other point-to-point navigation approaches: GPS doesn't work indoors, while seeing-eye dogs must be trained for certain routes, cost a lot of money and get tired.

For their NAVI project, the Universität Konstanz students wrote the software in C# and .NET, using the ManagedOpenNI wrapper for the Kinect and a managed wrapper for ARToolKitPlus for marker tracking. The voice instructions are synthesized using Microsoft's Speech API and all input streams are glued together using Reactive Extensions for .NET.

Via SlashGear