When augmented reality hits the market at full strength, putting digital overlays over the physical world through transparent glasses, it will intertwine itself deeper into the fabric of your life than any technology that's come before it. AR devices will see the world through your eyes, constantly connected, always trying to figure out what you're up to and looking for ways to make themselves useful.

Facebook is already leaps and bounds ahead in the VR game with its groundbreaking Oculus Quest 2 wireless headset, and it's got serious ambitions in the augmented reality space too. In an online "Road to AR glasses" roundtable for global media, the Facebook Reality Labs (FRL) team laid out some of the eye-popping next-gen AR technology it's got up and running on the test bench. It also called on the public to get involved in the discussion around privacy and ethics, with these devices just a few scant years away from changing our world as completely as the smartphone did.

Wrist-mounted neuro-motor interfaces

Presently, our physical interactions with digital devices are crude, and they frequently bottleneck our progress. The computer mouse, the graphical user interface, the desktop metaphor and the touchscreen have all been great leaps forward, but world-changing breakthroughs in human-machine interface (HMI) technology come along once in a blue moon.

And previous approaches won't work for augmented reality; you won't want to be holding a controller all day just to interact with your smart glasses. Camera-based hand tracking of the kind the Quest headsets already do will always be a little clunky, and for AR to take off as the next great leap in human-machine relations, it needs to operate with total precision, at the speed of thought, so smoothly and effortlessly that you can interact with it without breaking your stride or interrupting your conversations.

To make that happen, the FRL team has been working on a next-level wrist-mounted controller that quietly sits there reading neural impulses as they go down your arm. These neural impulses don't even need to be strong enough to lift a finger; the mere intention is enough for the wristband to pick up and translate into a command.

Why the wrist? "It's not just a convenient place to put these devices," says TR Reardon, FRL's Director of Neuromotor Interfaces. "It's actually where all your human skill resides, it's how you do adaptive tasks in the world. Your brain has more neurons dedicated to controlling your wrist and your hand than any other [part] of your body ... You have a huge reservoir of untapped talent of motor talents that you barely use when you actually interact with the machine today."

Today's human-machine interfaces, says Reardon, have a lot of unnecessary steps that can be eliminated. An action begins as an intention in the motor cortex of the brain, travels down through the motor neurons in the arms as an electrical impulse, then becomes a muscle contraction that moves a hand or a finger to operate a mouse or keyboard. The FRL team can intercept that impulse at the wrist and cut out the middleman.

"We've had a big breakthrough here," says Reardon. "That's why we think there's an immense future for this technology. We're actually able to resolve these signals at the level of single neurons, and the single zeros and ones that they send. And that gives us a huge opportunity to allow you to control things in ways you've never done it before."

The neuro-motor interface, currently just a lab prototype that looks like a big, chunky watch on a wristband, can read neural activity down to such a fine resolution that it can clearly pick up millimeter-level deflections in the motion of a single finger. "I wish I could show you," says Reardon, "because you really could try all of this." The system requires each user to train the device for a repertoire of commands, developing its own intelligent mapping of how your particular nerves get a given job done.
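FRL hasn't said how that per-user training works under the hood, but the general idea of calibrating a device to one person's signals can be sketched in a few lines. The Python below is purely illustrative: the function names, the three-number "feature vectors" and the nearest-centroid method are all assumptions made for the example, not anything FRL has described.

```python
from math import dist
from statistics import fmean


def train(samples):
    """Average each command's calibration readings into one centroid.

    `samples` maps a command name to a list of equal-length feature
    vectors recorded from a single user (a hypothetical format).
    """
    return {
        command: [fmean(column) for column in zip(*vectors)]
        for command, vectors in samples.items()
    }


def classify(centroids, reading):
    """Return the command whose centroid is closest to a new reading."""
    return min(centroids, key=lambda command: dist(centroids[command], reading))


# Made-up calibration data for one user: two readings per gesture.
calibration = {
    "pinch": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "thumb_tap": [[0.1, 0.9, 0.2], [0.2, 0.8, 0.1]],
}
centroids = train(calibration)
print(classify(centroids, [0.85, 0.15, 0.05]))
```

The point of the sketch is simply that the mapping is learned from your own readings, so two people making the "same" gesture can produce quite different signals and still get the same command.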

The FRL team is already using the neuro-motor interface as a game controller, a "six dimensional joystick built into your body." It's already operating with a latency of just 50 milliseconds, providing responses that are snappy enough to game with, and even a guy who has never had a fully developed left hand has been able to get up to speed in a couple of minutes and use the controller as easily as anyone else.

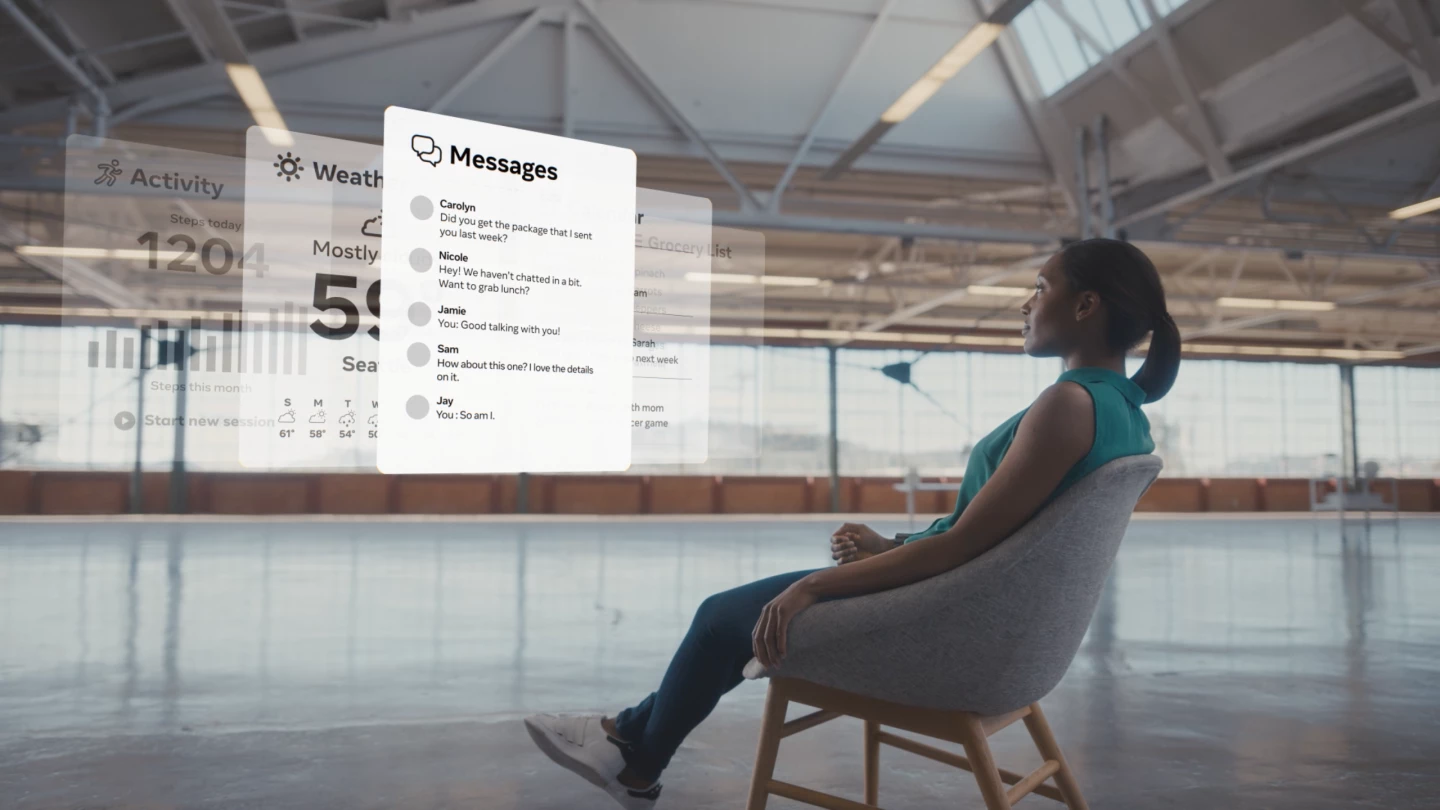

Interactions would start out simple, says the team, with things like finger pinches and simple thumb movements for clicks or yes/no type responses. This would let you deal with contextually-generated AR prompts without getting distracted from a conversation; if your friend talks about meeting you at a particular place and time, the system might throw up a discreet popup asking if you want to stick that in your calendar, and a simple finger motion could say yes or no and clear the popup without you even having to take your hands out of your pockets.

Things would expand from there, including any kind of subtle gesture control you can dream up, as well as the ability to control 3D AR objects with your hands. The FRL team has also been designing and refining keyboardless keyboard technology that lets you tap away on any old surface, refining its predictive algorithms as it gets to know you better. "This will be faster than any mechanical typing interface," says the FRL team, "and it will be always available because you are the keyboard."
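As a rough illustration of what "refining its predictive algorithms as it gets to know you" could mean, here's a toy next-word predictor that learns from whatever its user has typed before. It's a minimal sketch under stated assumptions: the `AdaptivePredictor` class and its simple word-pair counting are hypothetical, and FRL has not published how its own typing prediction works.

```python
from collections import Counter, defaultdict


class AdaptivePredictor:
    """Toy next-word predictor that adapts to one user's typing history."""

    def __init__(self):
        # For each word, count which words this user has typed after it.
        self.next_words = defaultdict(Counter)

    def learn(self, text):
        """Update the counts from a piece of text the user has typed."""
        words = text.lower().split()
        for previous, following in zip(words, words[1:]):
            self.next_words[previous][following] += 1

    def suggest(self, previous_word, k=3):
        """Return up to k of the user's most frequent follow-on words."""
        counts = self.next_words.get(previous_word.lower(), Counter())
        return [word for word, _ in counts.most_common(k)]


predictor = AdaptivePredictor()
predictor.learn("see you at the cafe")
predictor.learn("meet me at the cafe at noon")
predictor.learn("at the office")
print(predictor.suggest("the"))
```

The more this user types, the more the suggestions lean toward their own habits, which is the flavor of adaptation the FRL team is describing, if not the mechanism.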

This opens up the potential of a full multi-screen AR computing setup you can use anywhere, anytime, bringing nothing along but your AR glasses and wrist controllers. That, friends, would be even more Minority Report than Minority Report itself – and I say this in full knowledge of the sanctions this will earn me from the New Atlas editorial team, which has a long-standing policy of dishing out wedgies and noogies to any writer that mentions Minority Report after a rash of overuse in the late 2000s.

Advanced haptics

Haptic feedback is another area the FRL team is working hard to advance, and the latest prototype, which would be built into a wrist-mounted neurosensor like the ones described above, combines six "vibrotactile actuators," presumably similar to the force feedback units in a game console controller, with a "novel wrist squeeze mechanism."

The TASBI (Tactile And Squeeze Bracelet Interface) might not sound that interesting compared to a nerve-hijacking controller, but the addition of physical feedback will be a vital part of AR systems that integrate with your life while demanding the least attention possible.

"We have now tried tons of virtual interactions in both VR and AR with the vibration and squeeze capabilities of TASBI," said FRL Research Science Manager Nicholas Colonnese. "This includes simple things like push, turn and pull buttons, feeling textures, and moving virtual objects in space. We've also tried more exotic examples like climbing a ladder or using a bow and arrow. We tested to see if the vibration and squeeze feedback could make it feel like you're naturally interacting with these virtual objects. Amazingly, due to sensory substitution, where your body integrates the visual, audio and haptic information, the answer can be yes. It can result in a surprisingly compelling experience that is natural and easy to interpret."

Adaptive Interface AI and the ethical and privacy implications

Before an augmented reality system can make itself truly indispensable, it will need to know and understand your behavior – perhaps better than you know or understand yourself. It will need to constantly analyze your environment and your activities, figuring out where you are and what you're doing. It'll need to get to know you, building up a history of behaviors it can use to start predicting your actions and desires. This is going to require a ton of data crunching, and when it comes to crunching video feeds and working out what's going on, AI and deep learning technology will be indispensable.

Once it has a pretty good idea what you might want, it'll need to jump in and offer to help, in a way that's helpful rather than annoying. FRL calls this the Adaptive Interface.

"The underlying AI has some understanding of what you might want to do in the future," explains FRL Research Science Manager Tanya Jonker. "Perhaps you head outside for a jog and, based on your past behavior, the system thinks you're most likely to want to listen to your running playlist. It then presents that option to you on the display: 'Play running playlist?' That's the adaptive interface at work. Then you can simply confirm or change that suggestion using a microgesture. The intelligent click gives you the ability to take these highly contextual actions in a very low-friction manner because the interface surfaces something that's relevant based on your personal history and choices, and it allows you to do that with minimal input gestures."

How might it work? Well, look at a smart lamp and you might get the option to turn it on or off, or change its color or brightness. Pull out a packaged food item and you might get the cooking instructions popping up in a little window, complete with pop-ups offering to start a timer just as you reach a critical step. Walk into a familiar café, and it might ask if you want to put in your regular order. Pick up your running shoes and it might ask if you want to start your workout playlist. These are things that could conceivably be achieved in the short to medium term – beyond that, the sky's the limit.
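For the programmers in the audience, the core loop of those examples (notice a context, check what you usually do in it, offer one suggestion only when it's a fairly safe bet) can be sketched like this. Everything here is an assumption made for illustration: the `AdaptiveInterface` class, the context labels and the confidence thresholds are hypothetical, and a real system would be working from far richer signals than a lookup table.

```python
from collections import Counter, defaultdict


class AdaptiveInterface:
    """Toy model: suggest the action a user most often takes in a context."""

    def __init__(self, min_confidence=0.6, min_observations=3):
        self.min_confidence = min_confidence      # share of history required
        self.min_observations = min_observations  # don't guess from too little data
        self.history = defaultdict(Counter)

    def record(self, context, action):
        """Log that the user chose `action` while in `context`."""
        self.history[context][action] += 1

    def suggest(self, context):
        """Return one suggested action, or None to stay out of the way."""
        counts = self.history.get(context)
        if not counts:
            return None
        total = sum(counts.values())
        if total < self.min_observations:
            return None
        action, times = counts.most_common(1)[0]
        return action if times / total >= self.min_confidence else None


ui = AdaptiveInterface()
for _ in range(4):
    ui.record("picked_up_running_shoes", "play running playlist")
ui.record("picked_up_running_shoes", "start podcast")
print(ui.suggest("picked_up_running_shoes"))
```

Returning nothing when the history is thin or mixed is a deliberate choice in this sketch: it's one simple way to aim for help that is, in the article's terms, "helpful rather than annoying."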

All of which would be nice and handy, but clearly, privacy and ethics are going to be a big issue for people – particularly when a company like Facebook is behind it. Few people in the past would ever have lived a life so thoroughly examined, catalogued and analyzed by a third party. The opportunities for tailored advertising will be total, and so will the opportunities for bad-faith actors to abuse this treasure trove of minute detail about your life.

But this tech is coming down the barrel. It's still a few years off, according to the FRL team, but as far as the team is concerned, the technology and the experience are proven. They work, they'll be awesome, and now it's a matter of working out how to build them into a foolproof product for the mass market. So, why is FRL telling us about it now? Well, this could be the greatest leap in human-machine interaction since the touchscreen, and frankly Facebook doesn't want to be seen to be making decisions about this kind of thing behind closed doors.

"I want to address why we're sharing this research," said Sean Keller, FRL Director of Research. "Today, we want to open up an important discussion with the public about how to build these technologies responsibly. The reality is that we can't anticipate or solve all the ethical issues associated with this technology on our own. What we can do is recognize when the technology has advanced beyond what people know is possible and make sure that the information is shared openly. We want to be transparent about what we're working on, so people can tell us their concerns about this technology."

This is not a Neuralink, stresses Facebook CTO Mike Schroepfer. "We're not anywhere near the brain, you can't read thoughts or senses or anything like that." And other devices, he says, like the Facebook Portal – which sits in people's homes and uses AI tracking to move a camera and keep you in frame as you wander around a room while on a video call – have had to deal with some of the same issues in the past. "That system," he explains, "was built from the start from day one, to run locally on the device, so that we could provide the feature but protect people's privacy. So that's one example of us trying to use on-device AI to keep data local, which is another form of protection for this data. So there's lots of things like that, that specifically this team is looking at."

How can you join in on this conversation? Well, one way is through us. Put your questions, comments, concerns and ideas in the comments below. We'll look at following up with the FRL team in an interview shortly.

Whether you believe we're already living in a post-privacy world in which people freely exchange information about themselves for convenience, or you feel that tech like this is one step too far into the digital unknown, it's coming, and it'll change the world.

Source: Facebook Reality Labs