Last year we looked at an interesting research project from scientists at Cornell University seeking to use wearable cameras to track facial expressions, and the technology has now taken on a more practical form. The team has developed a necklace-like device that uses infrared cameras to image full facial movements, which they believe could become a useful tool for tracking a wearer's emotions across the course of a day.

The first iteration of the technology was dubbed C-Face, and was conceived as a way of "seeing" people's facial expressions when they are wearing a mask. The system consisted of a pair of cameras fixed to the ends of a set of headphones, positioning them beneath the subject's ears.

By observing the contours of the cheeks peeking out beyond the mask, the system could deduce the position of 42 facial features and therefore determine the shape of the eyes, eyebrows and mouth, and ultimately the wearer's overall facial expression. The C-Face system was able to reflect the wearer's actual facial expression with an accuracy of 88 percent, and displayed one of eight corresponding emojis on a computer screen.
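For readers curious how a set of 42 feature points might be turned into one of eight emojis, here is a minimal sketch of the idea. It is not the researchers' method, which the description above does not detail: it swaps in a simple nearest-centroid classifier, runs on made-up synthetic landmark data, and every name in it (`fit_centroids`, `predict`, the `expression_0` to `expression_7` labels) is hypothetical.

```python
import math
import random

NUM_LANDMARKS = 42   # feature points the article says C-Face deduces
NUM_EXPRESSIONS = 8  # one class per emoji shown on screen


def synthetic_face(template, noise, rng):
    """Return a noisy copy of a flattened (x, y) landmark template."""
    return [v + rng.gauss(0.0, noise) for v in template]


def fit_centroids(samples_by_label):
    """Average the training vectors of each label into one centroid."""
    centroids = {}
    for label, samples in samples_by_label.items():
        n = len(samples)
        centroids[label] = [sum(col) / n for col in zip(*samples)]
    return centroids


def predict(centroids, face):
    """Pick the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], face))


def accuracy(centroids, labelled_faces):
    """Fraction of (label, face) pairs that are classified correctly."""
    hits = sum(1 for label, face in labelled_faces if predict(centroids, face) == label)
    return hits / len(labelled_faces)


def demo(seed=0, noise=0.02):
    """Train and score the classifier on synthetic data (an assumption, not real faces)."""
    rng = random.Random(seed)
    # Hypothetical expression templates: 42 landmarks x 2 coordinates each.
    templates = {
        f"expression_{i}": [rng.random() for _ in range(NUM_LANDMARKS * 2)]
        for i in range(NUM_EXPRESSIONS)
    }
    train = {
        label: [synthetic_face(t, noise, rng) for _ in range(20)]
        for label, t in templates.items()
    }
    test = [
        (label, synthetic_face(t, noise, rng))
        for label, t in templates.items()
        for _ in range(10)
    ]
    return accuracy(fit_centroids(train), test)


if __name__ == "__main__":
    print(f"accuracy on synthetic data: {demo():.2f}")
```

The accuracy figure here is computed the same way a headline number like 88 percent would be, as the share of expressions labelled correctly, but the synthetic data is far cleaner than real camera footage, so the score it prints says nothing about the actual device.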

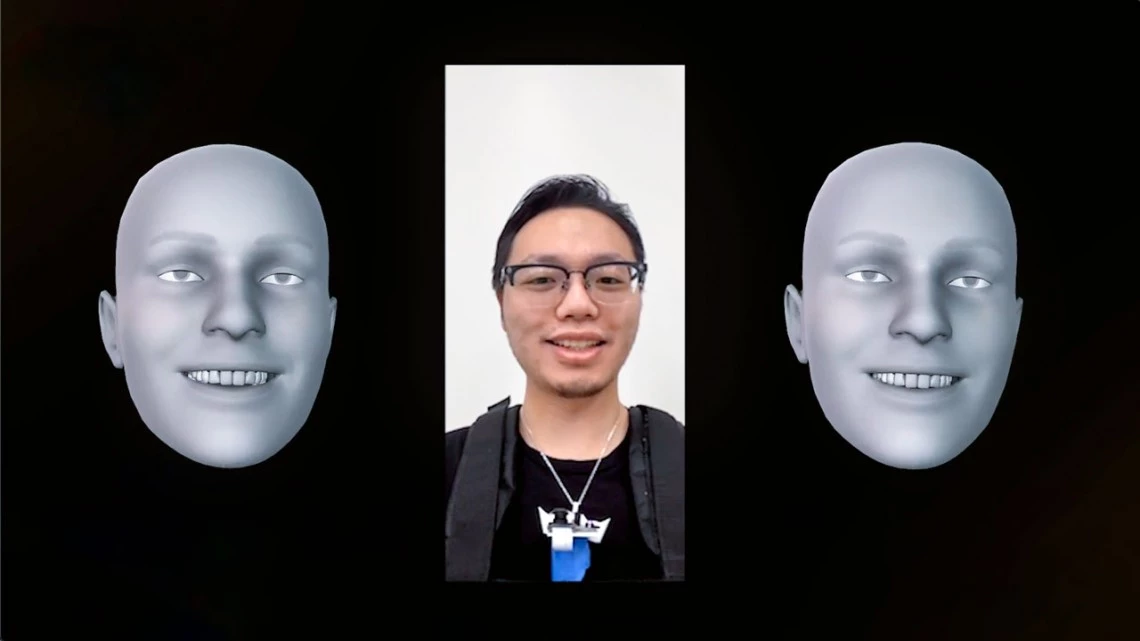

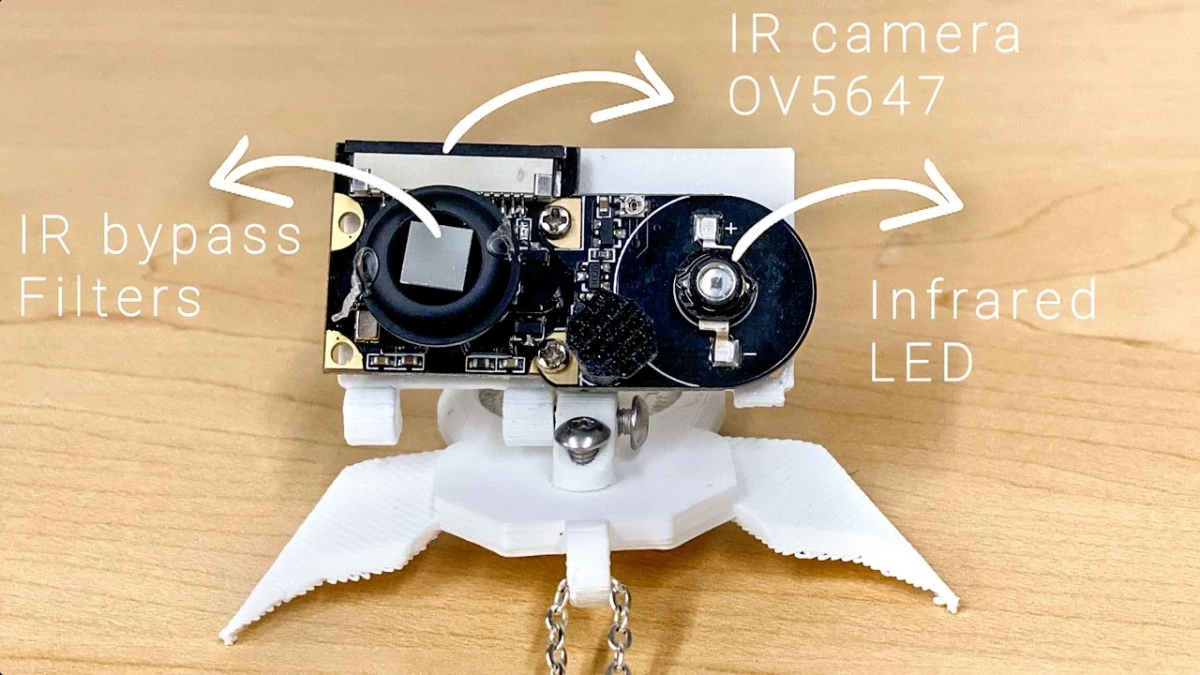

To bring this tech closer to real-world applications, the researchers have now fashioned it into a necklace-like device they call NeckFace. It works on the same principle, but uses an infrared camera system instead of the RGB cameras seen in the original, and captures images of the chin and neck from underneath. From this, the system can track the wearer's facial movements and produce a 3D reconstruction of their expressions.

This was demonstrated through a study involving 13 participants, who were asked to perform different facial expressions while sitting and while walking around. The subjects also rotated their heads and even removed and remounted the device during the tests, which covered 52 different facial shapes in all.

The performance of the NeckFace system was then compared to the TrueDepth 3D camera found on the iPhone X, and was found to be nearly as accurate. The team also tested out a neckband device like that used in the original C-Face system, which proved more accurate than the single, pendant-like device worn on the upper chest, likely due to the greater spacing between its cameras.

While they continue to optimize the technology, the scientists imagine a variety of uses for it. These could include virtual reality, video conferencing where a typical front-facing camera isn't possible, and mental health care, where it could monitor facial expressions and, in turn, fluctuating emotions throughout the day.

“Can we actually see how your emotion varies throughout a day?” says team leader Cheng Zhang. “With this technology, we could have a database on how you’re doing physically and mentally throughout the day, and that means you could track your own behaviors. And also, a doctor could use the information to support a decision.”

The video below offers a demonstration of the NeckFace technology, while the research was published in the journal Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies.

Source: Cornell University