While there are already AI systems that generate sound effects to match silent images of city streets (and other places), an experimental new technology does just the opposite. It generates images that match audio recordings of streets, with uncanny accuracy.

Developed by Asst. Prof. Yuhao Kang and colleagues from the University of Texas at Austin, the "Soundscape-to-Image Diffusion Model" was trained on a dataset of 10-second audio-visual clips.

Those clips consisted of still images and ambient sound taken from YouTube videos of urban and rural streets in North America, Asia and Europe. Using deep learning algorithms, the system learned not only which sounds corresponded to which items within the images, but also which sound qualities corresponded to which visual environments.

Once its training was complete, the system was tasked with generating images based solely on the recorded ambient sound of 100 other street-view videos – it produced one image per video.

A panel of human judges was subsequently shown each of those images alongside two generated images of other streets, while listening to the soundtrack of the video the image was based on. When asked to identify which of the three images corresponded to the soundtrack, they chose correctly 80% of the time on average.
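To put that figure in context, a judge guessing at random among three images would be right about one time in three. The short Python sketch below shows how such a three-way matching test could be scored. It is only an illustration: the function name, the `CHANCE_LEVEL` constant and the five example trials are made up here, not taken from the study.

```python
from typing import Sequence


def forced_choice_accuracy(chosen: Sequence[int], correct: Sequence[int]) -> float:
    """Return the fraction of trials in which the judge picked the right image."""
    if len(chosen) != len(correct) or len(chosen) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    hits = sum(c == k for c, k in zip(chosen, correct))
    return hits / len(chosen)


# With three candidate images per trial, random guessing succeeds 1 time in 3.
CHANCE_LEVEL = 1 / 3

# Made-up example: index (0, 1 or 2) of the image that truly matches each
# soundtrack, and the index the hypothetical judge picked.
correct = [0, 2, 1, 1, 0]
chosen = [0, 2, 1, 0, 0]

accuracy = forced_choice_accuracy(chosen, correct)
print(f"accuracy: {accuracy:.0%}, chance level: {CHANCE_LEVEL:.0%}")
```

In this toy run the judge gets four of five trials right, so the score happens to equal the 80% average reported for the real panel.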

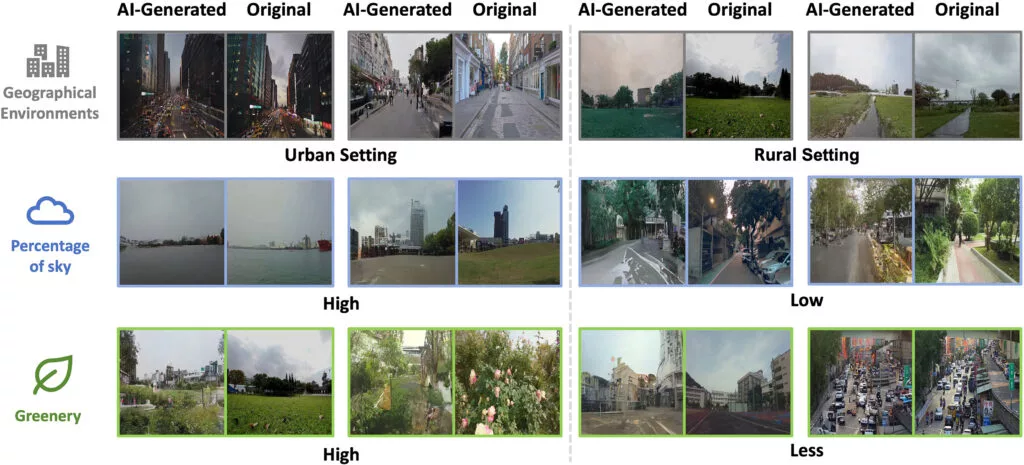

What's more, when the generated images were computer-analyzed, their relative proportions of open sky, greenery and buildings were found to "strongly correlate" with those in the original videos.
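As a rough idea of what such a comparison can involve, the Python sketch below computes the share of each scene element in a labeled image, then correlates the sky share across pairs of original and generated images. The article does not say which segmentation tool or correlation statistic the researchers used, so the Pearson coefficient, the function names and all of the numbers here are assumptions made for illustration.

```python
from collections import Counter
from math import sqrt


def class_proportions(label_mask):
    """Return the fraction of pixels carrying each label in a 2D grid of labels."""
    labels = [label for row in label_mask for label in row]
    counts = Counter(labels)
    return {name: n / len(labels) for name, n in counts.items()}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length number sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Made-up 2x2 "segmented image": half sky, a quarter buildings, a quarter greenery.
tiny_mask = [["sky", "sky"], ["building", "greenery"]]
props = class_proportions(tiny_mask)

# Made-up sky proportions for four original frames and their generated counterparts.
sky_original = [0.10, 0.30, 0.50, 0.70]
sky_generated = [0.15, 0.25, 0.55, 0.65]
r = pearson(sky_original, sky_generated)
print(props, f"r = {r:.2f}")
```

A coefficient near 1 would mean that generated images tend to show more sky wherever the source videos did, and the same check could be repeated for greenery and buildings.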

In fact, in many cases the generated images also reflected the lighting conditions of the source videos, such as sunny, cloudy or nighttime skies. This may have been made possible by factors such as decreased traffic noise at night, or the sound of nocturnal insects.

Although the technology could have forensic applications such as getting a rough idea of where an audio recording was made, the study is aimed more at exploring how sound contributes to our sense of place.

"The results may enhance our knowledge of the impacts of visual and auditory perceptions on human mental health, may guide urban design practices for place-making, and may improve the overall quality of life in communities," the scientists state in a paper that was recently published in the journal Computers, Environment and Urban Systems.

Source: University of Texas at Austin