A number of research institutions are developing systems in which autonomous robots could be sent into places such as burning buildings to map the floor plan for waiting emergency response teams. For now, however, we still have to rely on humans to perform that sort of dangerous reconnaissance work. New technology being developed by MIT splits the difference: it’s a wearable device that creates a digital map in real time as the wearer walks through a building.

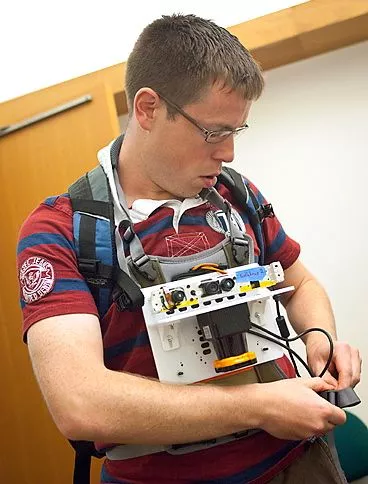

The prototype device, which is about the size of an iPad and worn on the user’s chest, wirelessly transmits data to a laptop in a distant room. That data comes from a variety of sensors, including accelerometers, gyroscopes, a stripped-down Microsoft Kinect camera, and a laser rangefinder. Much of the system reuses technology from a previous project, in which a wheeled robot was equipped to perform a similar mapping function.

The laser rangefinder creates a 3D profile of its surroundings by sweeping its beam across the immediate area in a 270-degree arc, measuring the amount of time that it takes for its light pulses to be reflected back by the surfaces around it. When used with the robot, it could get fairly accurate readings as it was able to operate from a relatively level, stable platform.
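
To make the time-of-flight idea concrete, here is a minimal Python sketch of how one sweep might be turned into points around the sensor. It is not MIT's code: the function names, the evenly spaced beams and the choice to centre the 270-degree arc on the forward direction are all assumptions made for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_to_distance(round_trip_s: float) -> float:
    """Convert a pulse's round-trip time to a one-way distance in metres."""
    # The pulse travels to the surface and back, so halve the path length.
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def sweep_to_points(round_trip_times, arc_deg: float = 270.0):
    """Turn one sweep of round-trip times into (x, y) points in the sensor frame.

    Assumption: beams are evenly spaced across the arc, and angle 0 points
    straight ahead (x axis), with positive angles to the left (y axis).
    """
    n = len(round_trip_times)
    arc = math.radians(arc_deg)
    start = -arc / 2.0
    step = arc / (n - 1) if n > 1 else 0.0
    points = []
    for i, t in enumerate(round_trip_times):
        angle = start + i * step
        r = tof_to_distance(t)
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points
```

A pulse that returns after about 33 nanoseconds, for instance, corresponds to a surface roughly five meters away.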

When worn by a person who’s walking, however, the rangefinder is constantly tipping back and forth – not at all ideal conditions for its use. That’s where the gyroscopes come in. By detecting when and in what way the mapping device is tilted, they provide data that is applied to the information gathered by the rangefinder, so that the user’s movements can be corrected for in the final profile.
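
As a rough illustration of that correction, the sketch below rotates a single rangefinder point by the roll and pitch reported by the gyroscopes, so that it is expressed in a level frame. The axis convention (x forward, y left, z up), the rotation order and the function name are assumptions; the actual system's math is not described in the source.

```python
import math


def level_point(point, roll_rad: float, pitch_rad: float):
    """Rotate a sensor-frame point (x, y, z) into a gravity-aligned frame.

    Assumed convention: x forward, y left, z up; roll is a right-handed
    rotation about x, pitch a right-handed rotation about y. Roll is
    applied first, then pitch.
    """
    x, y, z = point

    # Roll: rotate about the x axis.
    y, z = (y * math.cos(roll_rad) - z * math.sin(roll_rad),
            y * math.sin(roll_rad) + z * math.cos(roll_rad))

    # Pitch: rotate about the y axis.
    x, z = (x * math.cos(pitch_rad) + z * math.sin(pitch_rad),
            -x * math.sin(pitch_rad) + z * math.cos(pitch_rad))

    return (x, y, z)


def horizontal_range(point, roll_rad: float, pitch_rad: float) -> float:
    """Distance to the point along the floor plane, after levelling."""
    x, y, _ = level_point(point, roll_rad, pitch_rad)
    return math.hypot(x, y)
```

With the device pitched by 30 degrees, a surface measured at one meter straight ahead is only about 0.87 meters away along the floor, which is the kind of error the tilt data lets the system remove.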

The accelerometers, meanwhile, provide data on how fast the person is walking – a job that, on the robot, was handled by sensors in its wheels. The accelerometers also provide information on changes in altitude, such as when the user moves from one floor of the building to another. In some experiments, a barometer was added to the setup, which also proved good at indicating changes in altitude. By knowing when the person has moved to another floor, the system avoids merging two or more floors into one.
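
The barometer's role can be sketched in a few lines of Python. The conversion below uses the standard international barometric formula; the three-meter floor height, the reference-altitude approach and the function names are assumptions for illustration, not details from the MIT system.

```python
SEA_LEVEL_PA = 101_325.0  # standard sea-level pressure in pascals


def pressure_to_altitude(pressure_pa: float, reference_pa: float = SEA_LEVEL_PA) -> float:
    """Approximate altitude in metres from air pressure.

    Uses the international barometric formula, which is a good
    approximation near the ground.
    """
    return 44_330.0 * (1.0 - (pressure_pa / reference_pa) ** (1.0 / 5.255))


def floor_index(altitude_m: float, ground_altitude_m: float, floor_height_m: float = 3.0) -> int:
    """Guess which floor the wearer is on, relative to where they started.

    Assumption: every floor is roughly floor_height_m tall. Readings are
    rounded to the nearest floor so small bobbing does not change the result.
    """
    return round((altitude_m - ground_altitude_m) / floor_height_m)
```

Scan data could then be filed under the floor index it was captured on, which is one simple way to keep two floors from being merged into a single map.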

The camera comes into play every few meters, as it snaps a photo of its surroundings. For each image, software takes note of approximately 200 unique visual details such as color patterns, contours or shapes. These details are matched to the map location at which each shot is taken. Subsequently, should the user return to a spot that they’ve already traveled through, the system will be able to identify it not only by its relative position, but also by comparing the newest snapshot of the area to one taken previously.
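
Here is a simplified sketch of that "have I been here before?" check. It assumes each snapshot has already been reduced to a set of feature IDs, which is a stand-in for the roughly 200 visual details mentioned above; real systems compare richer descriptors. The thresholds and function names are likewise hypothetical.

```python
import math


def jaccard(a, b) -> float:
    """Share of features two snapshots have in common (0.0 to 1.0)."""
    a, b = set(a), set(b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def find_revisit(new_features, new_position, snapshots,
                 min_similarity: float = 0.5, max_distance_m: float = 5.0):
    """Return the index of the best-matching earlier snapshot, or None.

    snapshots is a list of (position, features) pairs, where position is
    an (x, y) estimate on the map. A candidate must be both near the
    current position estimate and visually similar.
    """
    best_index = None
    best_score = min_similarity
    for index, (position, features) in enumerate(snapshots):
        # Skip places the position estimate says are too far away.
        if math.dist(new_position, position) > max_distance_m:
            continue
        score = jaccard(new_features, features)
        if score >= best_score:
            best_index, best_score = index, score
    return best_index
```

Combining the two checks mirrors the idea in the text: position narrows down the candidates, and the image comparison confirms the match.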

In the current prototype, a push button is used to “flag” certain locations. After the initial reconnaissance, users can then go back through the finished map, and add annotations to those flags. In a future version, however, the developers would like to see a function whereby users could make location-tagged speech or text annotations live on site.

Ultimately, it is hoped that the device could be shrunk to about the size of a coffee mug.

Source: MIT