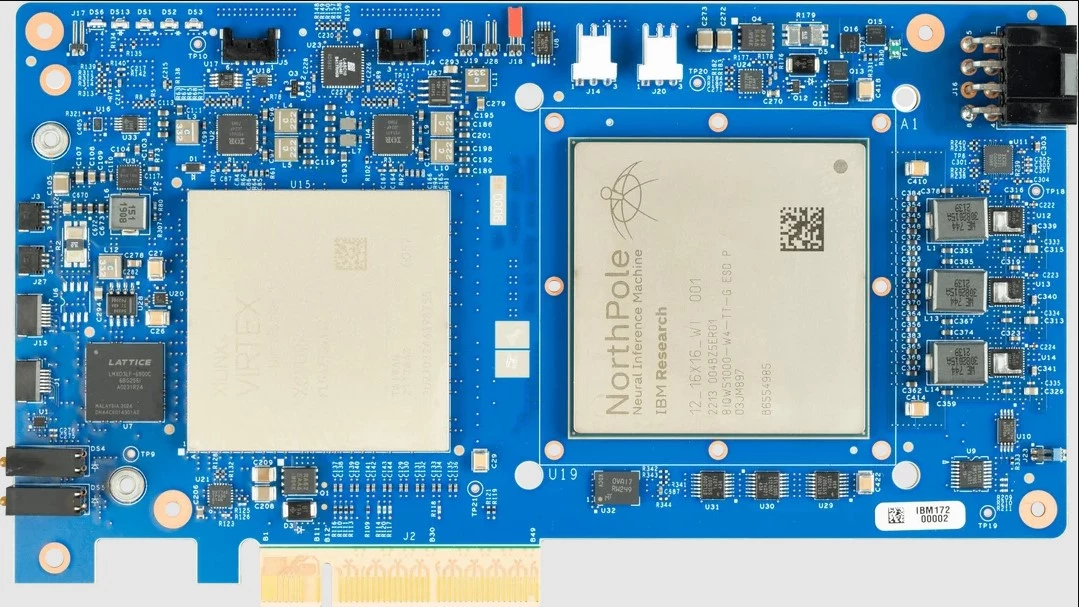

IBM Research’s lab in California is developing a prototype chip called NorthPole based on the architecture of the human brain. It holds the promise of greatly improving computer efficiency and producing systems that do not rely on cloud computing.

It's surprising how much of our world is tied to the past, and the result is all sorts of puzzles. Look at a computer keyboard and you're faced with a seemingly random jumble of letters that arguably makes typing harder. That layout is a holdover from the days of mechanical typewriters, when the keys were arranged to keep the mechanism from jamming.

You can easily find many other examples. Bridges stand where fords once served as river crossings. We still call devices "phones" even though voice calls are now only one of the many things they do. And some people still put the milk in the cup before the tea because pouring the hot tea first once caused cheap porcelain to crack.

The same is true of computers. The gigantic early mainframes were a complex mix of technologies, and the only way to get them to work properly was to divide functions like control, logic, and memory into separate units, with the system shifting data from one unit to the next as part of the process.

As a result, nearly eight decades later, engineers are still building computers on this historical design, now known as the von Neumann architecture after computing pioneer John von Neumann. It also created what is known as the von Neumann bottleneck: because data must constantly shuttle between the separate memory and processing units, the processor spends much of its time and energy waiting on that traffic instead of computing.

According to IBM, the prototype NorthPole chip took two decades to develop, and it gets around the von Neumann bottleneck through a couple of clever innovations. The first was to model the architecture on the human brain, which is far more energy efficient than any computer, creating what IBM calls "a faint representation of the brain in the mirror of a silicon wafer."

Essentially, project leader Dharmendra Modha and his team used a neural inference architecture, with elements that act like digital synapses, to combine what were once separate functions. This allowed the engineers to move the memory onto the processing chip itself and intertwine it with the compute cores, blurring the line between memory and processing.

The chip packs 22 billion transistors into 256 cores. According to IBM, the new architecture not only speeds it up, making it 25 times more energy efficient than comparable conventional chips, but also cuts down on wasted energy, eliminating the need for the elaborate cooling systems that high-performance systems usually require.

Currently, NorthPole is not a general-purpose chip: some of its gains come at the cost of specialization. However, it has the potential to double or even quadruple its operations, opening the way to a network on a chip that can carry out all manner of tasks that today require access to huge cloud servers.

Because of its design, NorthPole isn't suitable (yet) for training AI models, but its tremendous inference power opens up a wide variety of military and civilian applications. These include standalone systems that can process large amounts of data on their own for face recognition, image classification, natural language processing, and speech recognition, as well as potentially much more reliable autonomous driving systems. In addition, the improved thermal efficiency means that Moore's Law may have been given a temporary reprieve, opening the way to smaller, more powerful chips.

The research was published in Science.

Source: IBM