It's a novel idea, using light diffracted through numerous plates instead of electrons. And to some, it might seem a little like replacing a computer with an abacus, but researchers at UCLA have high hopes for their quirky, shiny, speed-of-light artificial neural network.

A term coined by Rina Dechter in 1986, deep learning is one of the fastest-growing methodologies in the machine learning community. It is often used in face, speech and audio recognition, language processing, social network filtering and medical image analysis, as well as in more specific tasks such as solving inverse imaging problems.

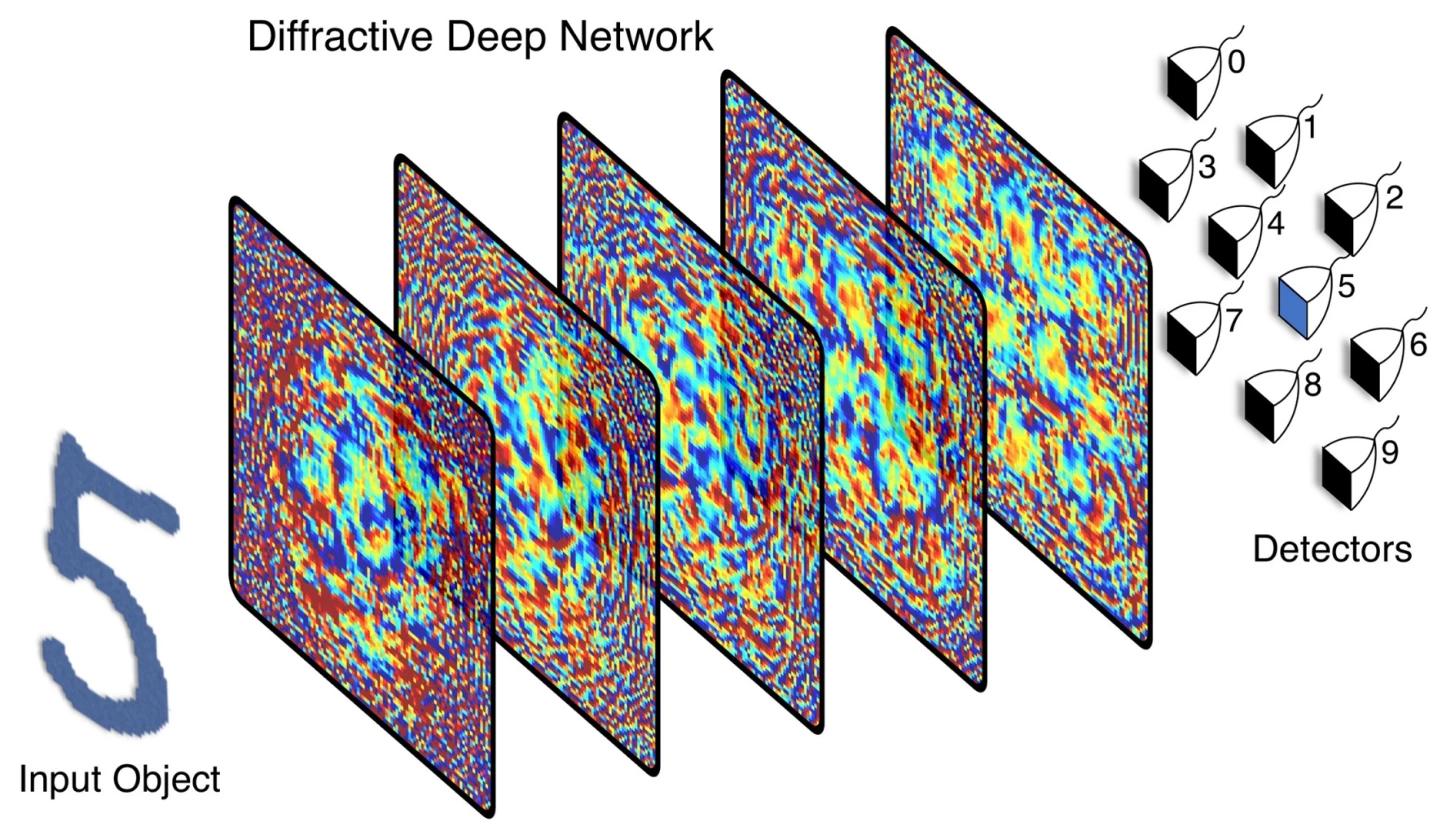

Traditionally, deep learning systems are implemented on a computer to learn data representation and abstraction and to perform tasks on par with – or better than – humans. However, the team led by Dr. Aydogan Ozcan, the Chancellor's Professor of electrical and computer engineering at UCLA, didn't use a traditional computer setup, instead choosing to forgo all those energy-hungry electrons in favor of light waves. The result was the team's all-optical Diffractive Deep Neural Network (D2NN) architecture.

The setup uses 3D-printed translucent sheets, each with thousands of raised pixels that diffract light as it passes from one panel to the next, and the pattern of pixels determines which task the stack performs. Those tasks are carried out without any power source other than the input light beam itself.
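For readers who want a feel for how a stack of passive sheets can compute anything, here is a minimal numerical sketch in Python. It is not the UCLA team's code. It assumes each sheet only delays the phase of the light at each pixel, and it models the gaps between sheets with the angular spectrum method, a standard way of simulating free-space diffraction. The function names, the random phase patterns and every numeric value (wavelength, pixel size, sheet spacing, number of sheets) are illustrative assumptions rather than figures from the research.

```python
import numpy as np

def propagate(field, wavelength, pixel_pitch, distance):
    """Diffract a square complex field over `distance` (angular spectrum method)."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=pixel_pitch)            # spatial frequencies, cycles per metre
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    # Squared longitudinal frequency; values <= 0 are evanescent and dropped.
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def d2nn_forward(input_field, phase_masks, wavelength, pixel_pitch, spacing):
    """Send light through a stack of phase-only sheets and return detector intensity."""
    field = propagate(input_field, wavelength, pixel_pitch, spacing)
    for phase in phase_masks:
        field = field * np.exp(1j * phase)           # passive sheet: per-pixel phase delay
        field = propagate(field, wavelength, pixel_pitch, spacing)
    return np.abs(field) ** 2                        # a detector records intensity only

# Illustrative run: a square aperture of light and five untrained (random) sheets.
rng = np.random.default_rng(0)
n = 32
input_field = np.zeros((n, n), dtype=complex)
input_field[12:20, 12:20] = 1.0
masks = [rng.uniform(0, 2 * np.pi, size=(n, n)) for _ in range(5)]
intensity = d2nn_forward(input_field, masks,
                         wavelength=0.75e-3,         # assumed value, metres
                         pixel_pitch=1e-3,           # assumed value, metres
                         spacing=30e-3)              # assumed value, metres
```

In a trained network the phase patterns would be optimized on a computer so that light from each class of input concentrates on a different region of the detector. The random masks here only show the forward pass, which in the physical device is done by the light itself.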

The UCLA team's all-optical deep neural network – which looks like the guts of a solid gold car battery – literally operates at the speed of light, and will find applications in image analysis, feature detection and object classification. Researchers on the team also envisage possibilities for D2NN architectures performing specialized tasks in cameras. Perhaps your next DSLR might identify your subjects on the fly and post the tagged image to your Facebook timeline.

"Using passive components that are fabricated layer by layer, and connecting these layers to each other via light diffraction created a unique all-optical platform to perform machine learning tasks at the speed of light," said Dr. Ozcan.

For now, this is a proof of concept, but it shines a light on some unique opportunities for the machine learning industry.

The research has been published in the journal Science.

Source: The Ozcan Research Group