Artificial intelligence is starting to combine with smartphone technology in ways that could have profound impacts on the way we monitor health, from tracking blood volume changes in diabetics to detecting concussions by filming the eyes. Spotting melanoma in its early stages is another exciting possibility, and a new deep-learning system developed by Harvard and MIT scientists promises a new level of sophistication by borrowing an approach dermatologists commonly use, known as the “ugly duckling” criteria.

Using smartphones to detect skin cancers is an idea that scientists have been exploring for more than a decade. Back in 2011 we looked at an iPhone app that used the device’s camera and image-based pattern recognition software to provide risk assessments of unusual moles and freckles. In 2017, we looked at another exciting example, in which an AI was able to use deep learning to detect potential skin cancers with the accuracy of a trained dermatologist.

The new system developed by the MIT and Harvard researchers again leverages deep learning algorithms to take aim at skin cancer, but with some key differences. Algorithms built to automatically detect skin cancers so far have been trained to analyze individual skin lesions for odd features that could be indicative of melanoma, which is a little different to how dermatologists operate.

To better assess which moles may be cancerous, the team instead turned to the “ugly duckling” criteria, which rest on the concept that most moles on an individual look similar, and that those that don't, the so-called ugly ducklings, are a warning sign of melanoma. The researchers say their system is the first of its kind to replicate this process, and they started by building a database of more than 33,000 wide-field images containing not just a patient’s skin, but other objects and backgrounds.

Within these images were both suspicious and non-suspicious lesions, which were labeled by three trained dermatologists. The deep learning algorithm was trained on this database and, after a period of refinement and testing, was able to distinguish the dangerous lesions from the benign ones, though this was still based on its assessments of the individual objects.

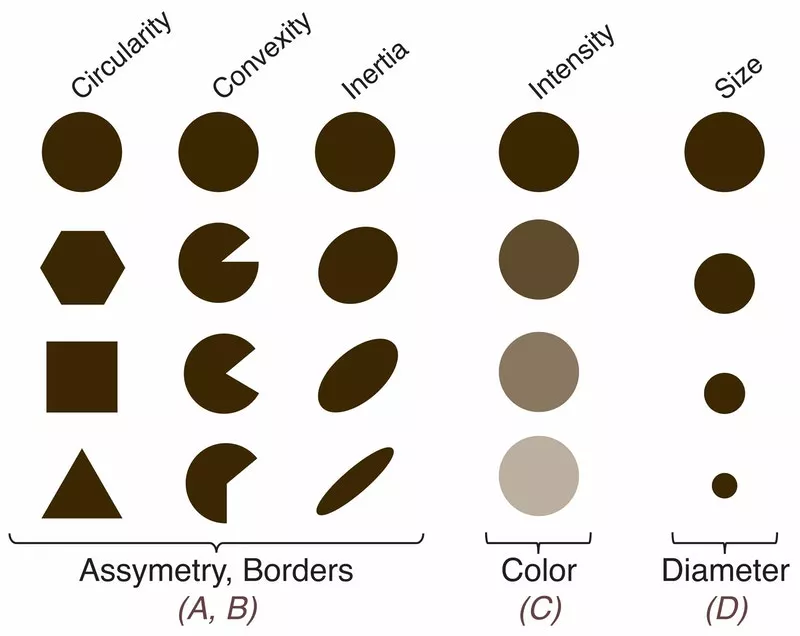

The team brought the ugly duckling method into the mix by building 3D maps of all the lesions in a given image – spread across a patient’s back, for example – and running calculations on how odd the features of each lesion were. By measuring how unusual these features were relative to those on the other lesions in the same image, the system was able to assign values and determine which lesions were dangerous.
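To make the comparison idea concrete, here is a minimal sketch of scoring each lesion against the others on the same patient. It is not the researchers' method: the feature vectors, the `oddness_scores` function, and the use of a leave-one-out distance are all assumptions chosen for illustration.

```python
import math


def oddness_scores(features):
    """Score each lesion by its distance from the mean of all *other* lesions.

    `features` is a list of equal-length numeric vectors, one per lesion.
    This leave-one-out distance is an illustrative stand-in, not the
    calculation used by the published system.
    """
    n = len(features)
    if n < 2:
        raise ValueError("need at least two lesions to compare")
    dims = len(features[0])
    # Column totals, so each leave-one-out mean is cheap to compute.
    totals = [sum(f[d] for f in features) for d in range(dims)]
    scores = []
    for f in features:
        others_mean = [(totals[d] - f[d]) / (n - 1) for d in range(dims)]
        scores.append(math.dist(f, others_mean))
    return scores


# Hypothetical two-number descriptions (say, size and color variation)
# for four lesions on one patient; the values are made up.
lesions = [
    [1.0, 0.20],
    [1.1, 0.25],
    [0.9, 0.20],
    [3.0, 0.90],  # deliberately unlike the rest
]
scores = oddness_scores(lesions)
ugliest = max(range(len(scores)), key=scores.__getitem__)
print(f"Most unusual lesion: index {ugliest}")
```

The point of the sketch is that the score is relative: the same lesion could look ordinary on one patient and stand out on another, depending on what its neighbors look like.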

This is described as the first quantifiable definition of the ugly duckling criteria, and the technology was put to the test identifying suspicious lesions in 68 different patients using 135 wide-field photos. Individual lesions were given an oddness score based on how concerning their features were, with the assessments compared to those made by three trained dermatologists. The algorithm agreed with the consensus of the dermatologists 88 percent of the time, and with the individual dermatologists 86 percent of the time.
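As a rough illustration of what agreement with a consensus means, the sketch below takes a majority vote across three raters for each lesion and counts how often a set of algorithm flags matches it. The article does not say how the consensus was actually formed, so the majority vote, the function names, and the sample votes are all assumptions.

```python
from collections import Counter


def majority_label(votes):
    """Return the most common vote; unambiguous for three yes/no raters."""
    return Counter(votes).most_common(1)[0][0]


def agreement_rate(predictions, reference):
    """Fraction of lesions where the prediction matches the reference label."""
    if len(predictions) != len(reference):
        raise ValueError("inputs must be the same length")
    matches = sum(p == r for p, r in zip(predictions, reference))
    return matches / len(reference)


# Made-up votes: True means "suspicious". One tuple per lesion,
# one entry per dermatologist.
dermatologist_votes = [
    (True, True, False),
    (False, False, False),
    (True, True, True),
    (False, True, False),
]
algorithm_flags = [True, False, True, True]

consensus = [majority_label(v) for v in dermatologist_votes]
rate = agreement_rate(algorithm_flags, consensus)
print(f"Agreement with consensus: {rate:.0%}")
```

Comparing the algorithm with each dermatologist separately, rather than with the consensus, would use the same `agreement_rate` function on one rater's column of votes at a time.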

“This high level of consensus between artificial intelligence and human clinicians is an important advance in this field, because dermatologists’ agreement with each other is typically very high, around 90 percent,” says co-author Jim Collins. “Essentially, we’ve been able to achieve dermatologist-level accuracy in diagnosing potential skin cancer lesions from images that can be taken by anybody with a smartphone, which opens up huge potential for finding and treating melanoma earlier.”

The team has made the algorithm open source and will continue developing it in hope of conducting clinical trials further down the track. One area they will focus on is enabling the algorithm to work across the full breadth of human skin tones, to ensure it is a universally applicable clinical tool.

The research was published in the journal Science Translational Medicine.

Source: Wyss Institute