Imagine speaking to an individual a hundred feet away in a noisy crowd of thousands – and hearing their reply – without using your phone. Now imagine sitting in the cheap seats at the back corner of a concert hall, but hearing everything just as well as someone front and center.

Researchers at the universities of Sussex and Bristol in the UK are working towards making scenarios like these – and more – possible by employing "acoustic metamaterials" to manipulate sound in the same way that lenses manipulate light. Think sniper scopes and narrow-beam torches, but with sound.

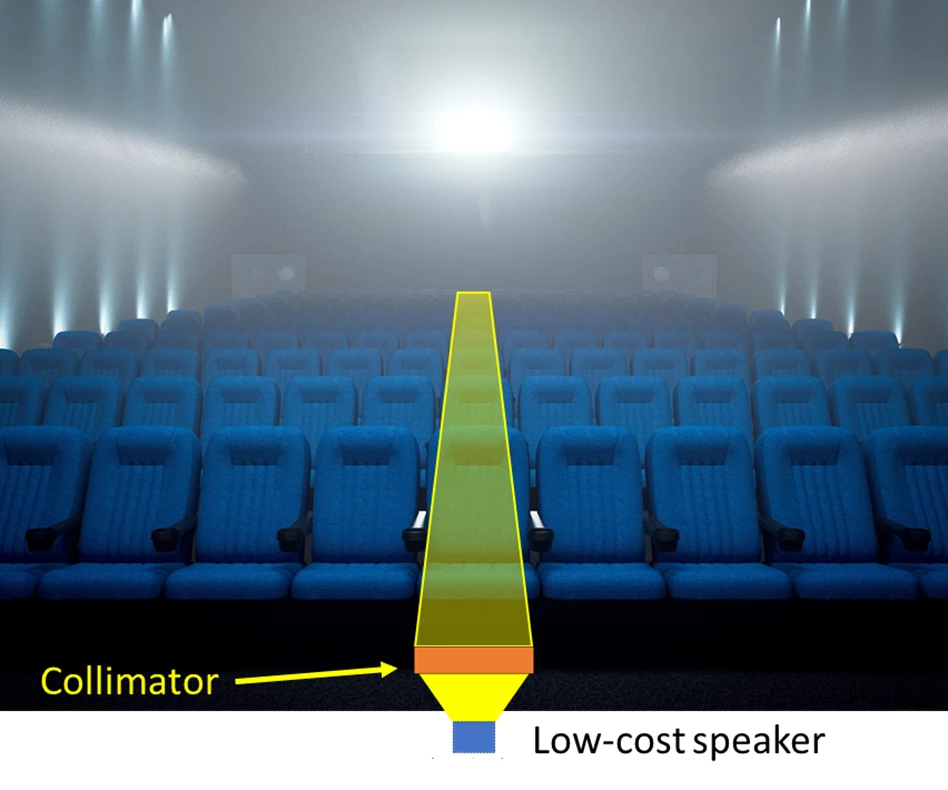

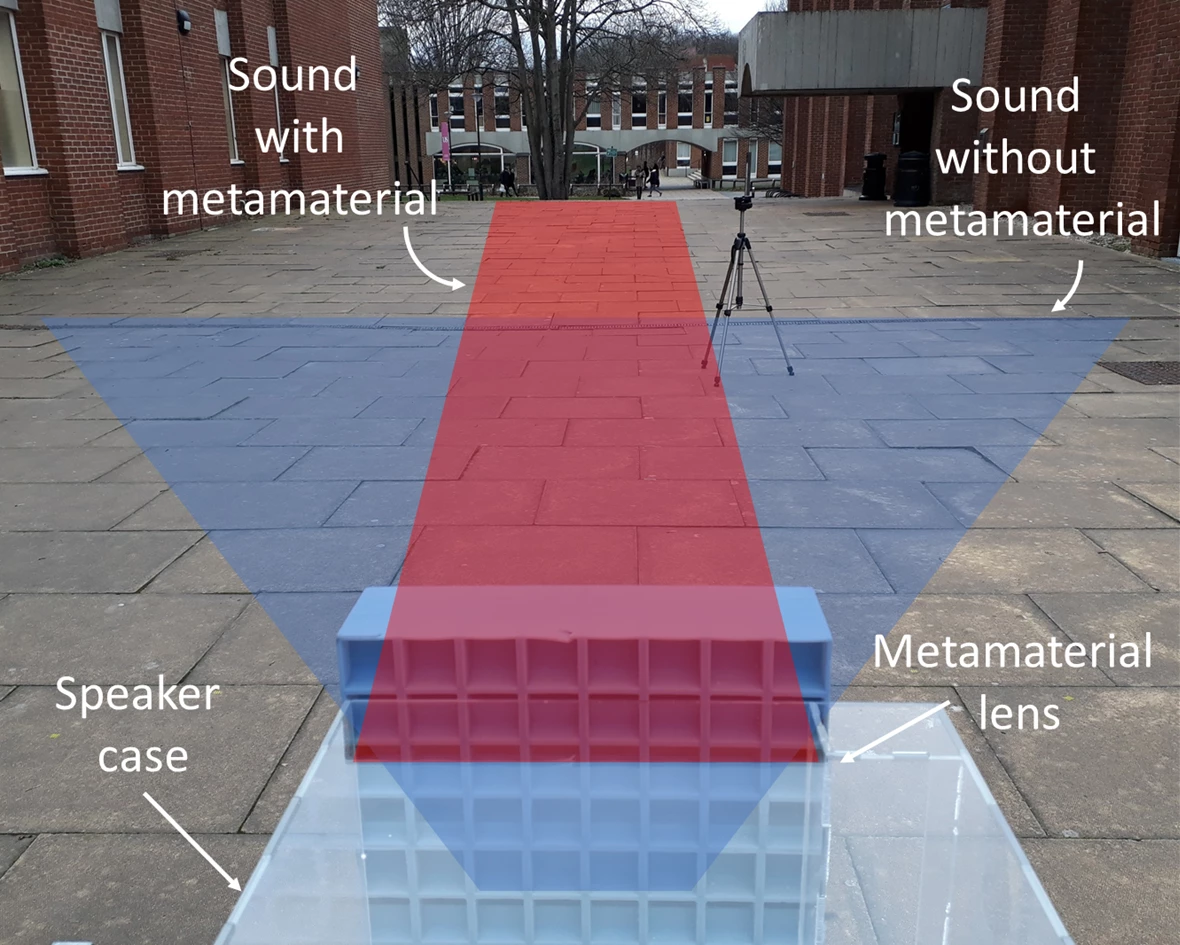

The research team has demonstrated the first dynamic metamaterial device with the zoom capability of a varifocal lens for sound, dubbed "Vari-sound." The team also built a collimator (a device that produces a parallel beam of rays or radiation) capable of transmitting sound as a narrow, directional beam from a standard speaker. An acoustic collimator could be used for the individual-in-the-crowd scenario mentioned above, or perhaps to aim a signal up the aisles of a cinema to deter people from sitting on the aisle steps.

"Acoustic metamaterials are normal materials, like plastic, paper, wood or rubber, but engineered so that their internal geometry sculpts the sound going through," says Dr. Gianluca Memoli, who leads this research at the University of Sussex. "The idea of acoustic lenses has been around since the 1960s and acoustic holograms are starting to appear for ultrasound applications, but this is the first time that sound systems with lenses of practical sizes, similar to those used for light, have been explored."

The research team sees a multitude of opportunities for this technology, from using an acoustic lens as a highly focused, directional microphone that pinpoints fatigue within vulnerable machinery parts to distinguishing between a burglar breaking your glass door and your dog barking at the cat outside. Personal assistant devices (e.g. Amazon Echo) could also employ this technology to focus on commands issued from specific locations within a house, making them less sensitive to background noise.

In short, the potentially game-changing benefits for large-scale and home entertainment, public communication, safety, manufacturing and even the health industry are huge.

"In the future, acoustic metamaterials may change the way we deliver sound in concerts and theaters, making sure that everyone really gets the sound they paid for," says Letizia Chisari, who contributed to the work while at the University of Sussex. "We are developing sound capability that could bring even greater intimacy with sound than headphones, without the need for headphones."

This new approach has significant advantages over previous technologies.

Surround-sound setups – in cinemas and in the home – work best for those sitting plumb in the middle, and current acoustic lenses (e.g. in high-end home audio and ultrasonic transducers) have scale issues that restrict their use to higher frequencies.

Production is simpler too, as metamaterials are smaller, cheaper and easier to build than phased speaker arrays, and can be fabricated from simple, everyday substances – even recycled materials. Metamaterial devices also lead to fewer aberrations than speaker arrays.

Most of this is possible using existing speakers – which the team has shown – as the acoustic metamaterial sits in front of the sound source, between the source and the recipient (or receiver).

"Using a single speaker, we will be able to deliver alarms to people moving in the street, like in the movie Minority Report," says Jonathan Eccles, a Computer Sciences undergraduate at the University of Sussex. "Using a single microphone, we will be able to listen to small parts of a machinery to decide everything is working fine. Our prototypes, while simple, lower the access threshold to designing novel sound experiences: devices based on acoustic metamaterials will lead to new ways of delivering, experiencing and even thinking of sound."

The paper was presented at the ACM CHI Conference on Human Factors in Computing Systems (CHI 2019) in Glasgow on Monday, and has been published in the ACM Digital Library.

Source: University of Sussex