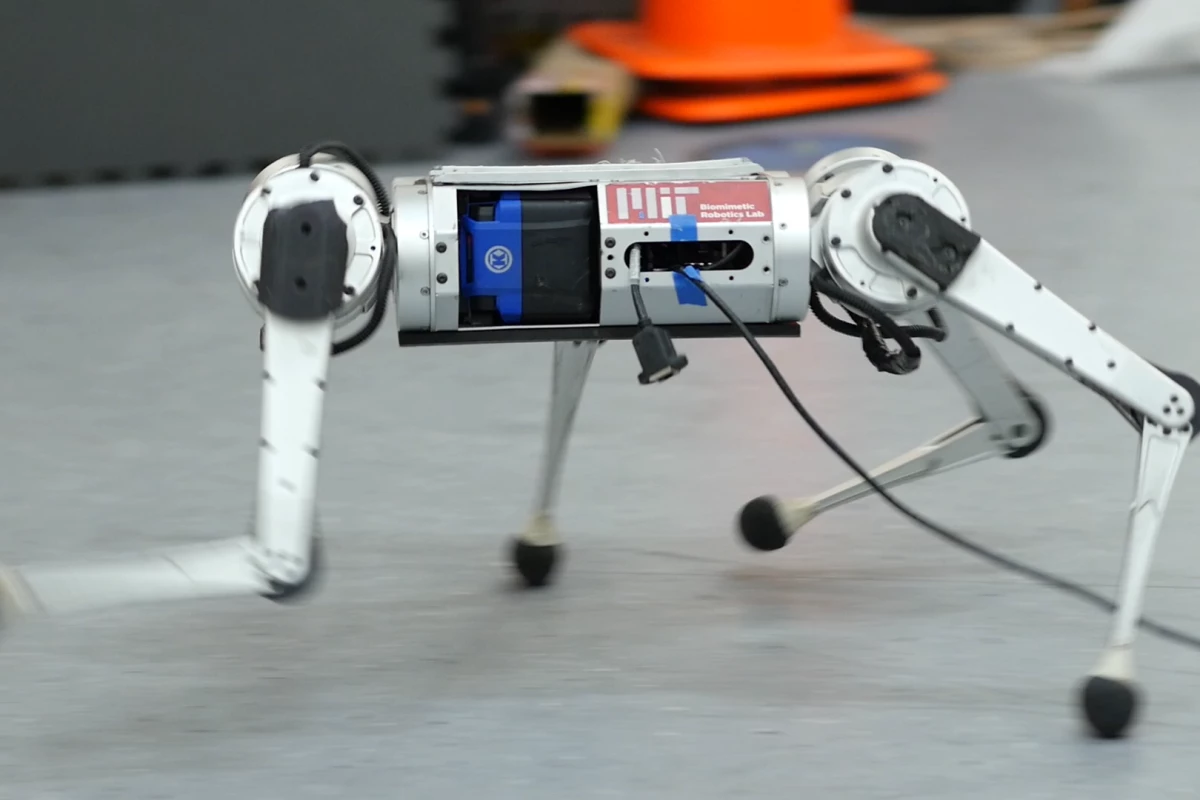

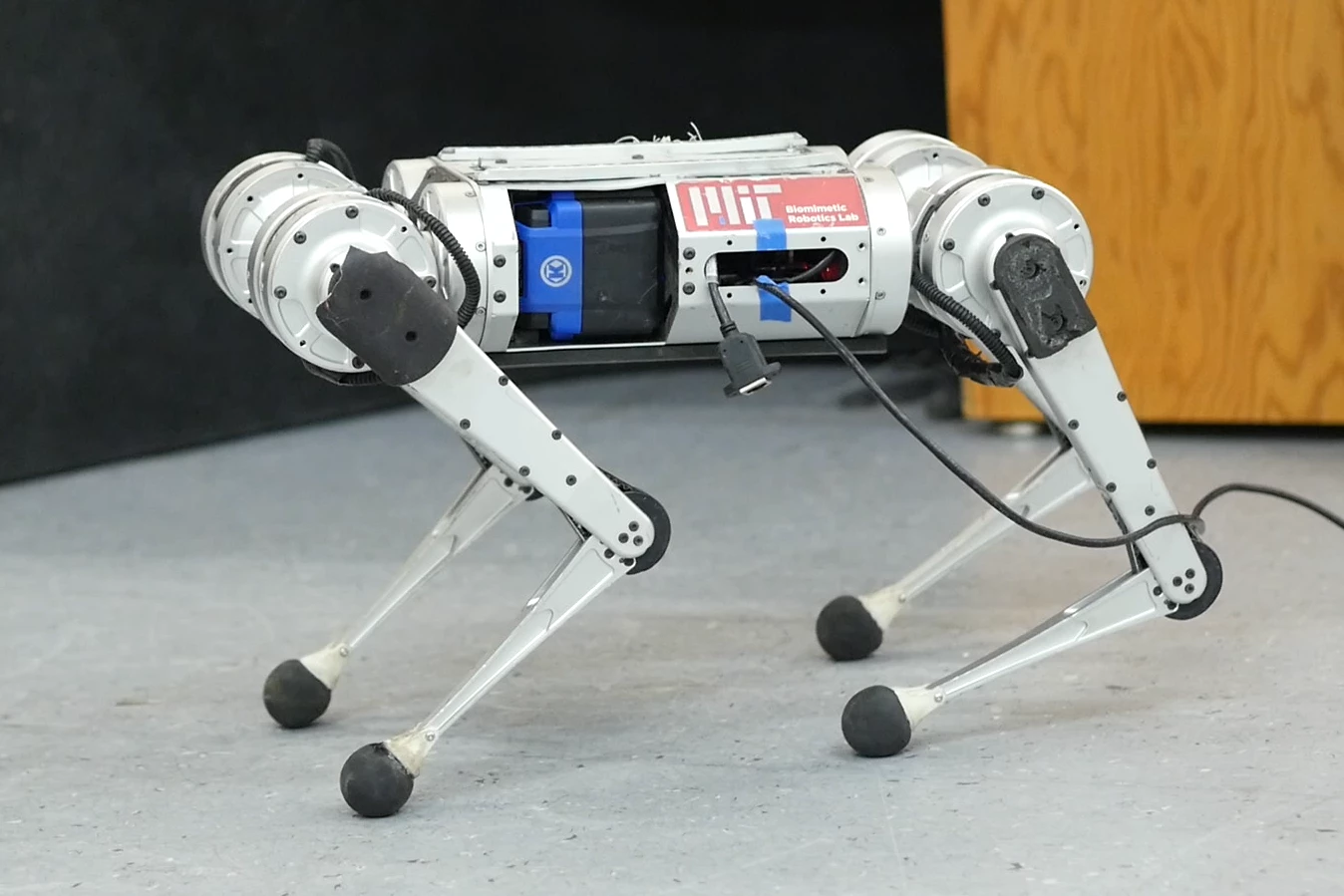

MIT's mini cheetah robot has broken its own personal best (PB) speed, hitting 8.72 mph (14.04 km/h) thanks to a new model-free reinforcement learning system that lets the robot work out for itself the best way to run and adapt to different terrain, without relying on human analysis.

The mini cheetah isn't the fastest quadruped robot going around. In 2012, its larger Cheetah sibling reached a top speed of 28.3 mph (45.5 km/h), but the mini cheetah being developed by MIT’s Improbable AI Lab and the National Science Foundation's Institute of AI and Fundamental Interactions (IAIFI) is much more agile and is able to learn without even taking a step.

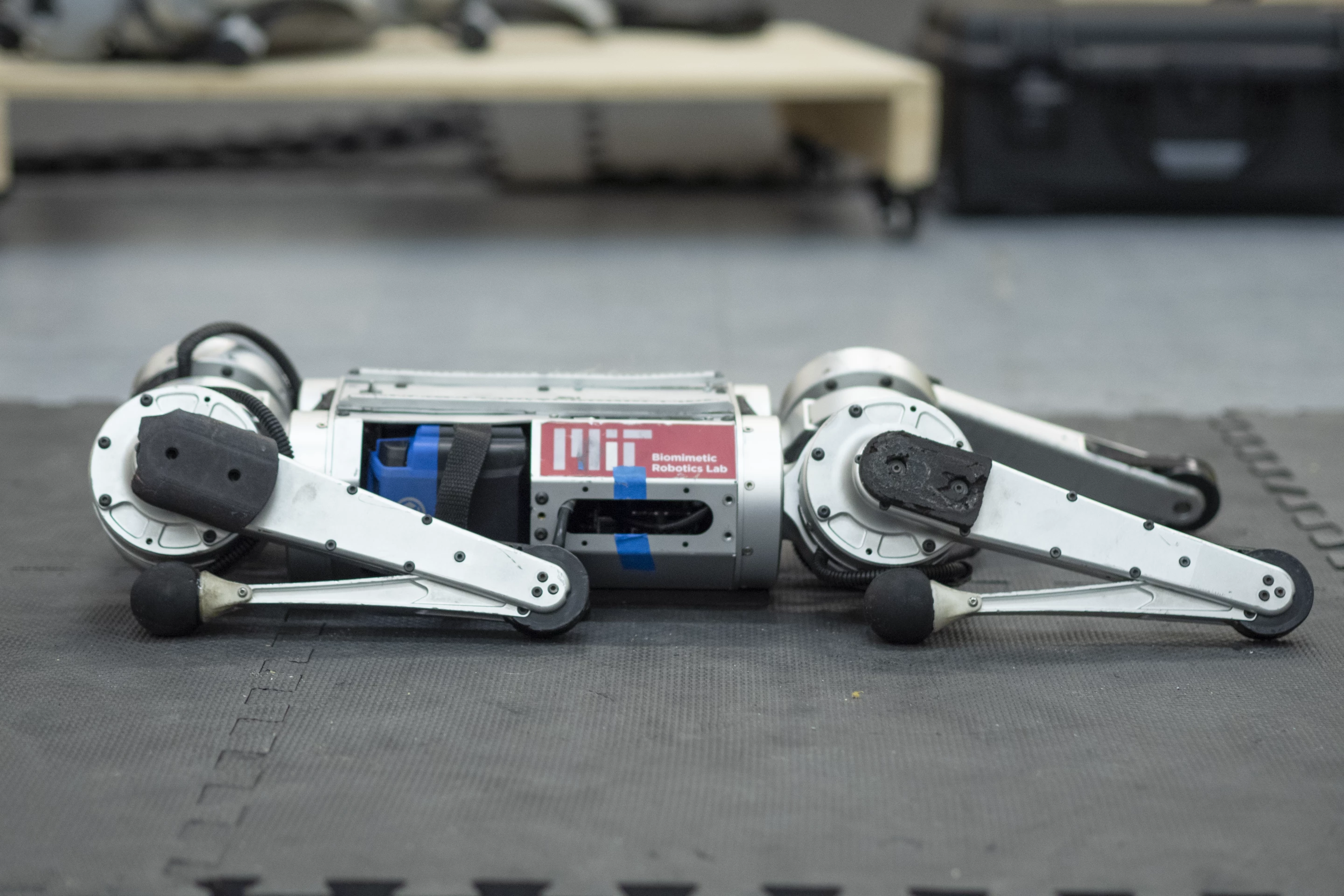

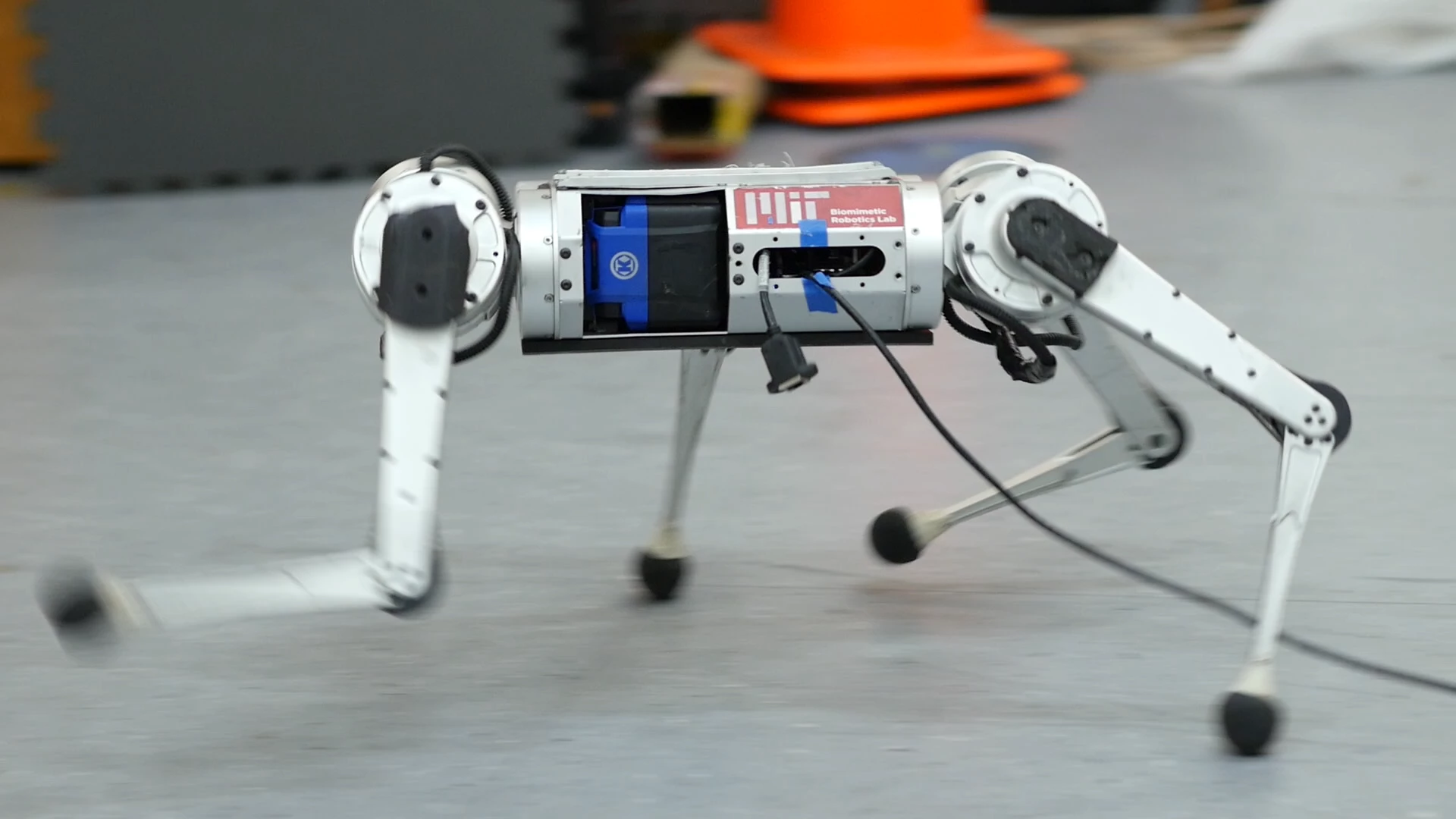

In a new video, the quadruped robot can be seen crashing into barriers and recovering, racing through obstacles, running with one leg out of action, and adapting to slippery, icy terrain as well as hills of loose gravel. This adaptability is thanks to a simple neural network that can make assessments of new situations that may put its hardware under high stress.

Normally, how a robot moves is controlled by a system that uses data based on an analysis of how mechanical limbs move to create models that serve as guides. However, these models are often inefficient and inadequate because it isn't possible to anticipate every contingency.

When a robot is running at top speed, it's operating at the limits of its hardware, which makes it very hard to model, so the robot has trouble adapting quickly to sudden changes in its environment. Analytically designed robots, such as Boston Dynamics' Spot, rely on humans analyzing the physics of movement and manually configuring the robot's hardware and software. To overcome this limitation, the MIT team has instead opted for a robot that learns by experience.

In this approach, the robot learns by trial and error without a human in the loop. If the robot has enough experience of different terrains, it can be made to automatically improve its behavior. And this experience doesn't even need to be in the real world. According to the team, using simulations, the mini cheetah can accumulate 100 days' worth of experience in three hours while standing still.
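To put the quoted figures in perspective, 100 days of experience gathered in three hours works out to roughly 800 times real time. The short Python sketch below shows the arithmetic; the function name `speedup_factor` is purely illustrative, and the article does not say whether the gain comes from faster-than-real-time simulation, many simulated robots running in parallel, or both.

```python
HOURS_PER_DAY = 24


def speedup_factor(simulated_days: float, wall_clock_hours: float) -> float:
    """Return the ratio of simulated experience to wall-clock time.

    Both quantities are compared in hours. This is only a back-of-the-envelope
    figure; it says nothing about how the simulator achieves the speedup.
    """
    if wall_clock_hours <= 0:
        raise ValueError("wall_clock_hours must be positive")
    return simulated_days * HOURS_PER_DAY / wall_clock_hours


# Figures quoted by the team: 100 days of experience in three hours.
print(speedup_factor(100, 3))  # 800.0
```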

"We developed an approach by which the robot’s behavior improves from simulated experience, and our approach critically also enables successful deployment of those learned behaviors in the real world," said MIT PhD student Gabriel Margolis and IAIFI postdoc Ge Yang. "The intuition behind why the robot’s running skills work well in the real world is: Of all the environments it sees in this simulator, some will teach the robot skills that are useful in the real world. When operating in the real world, our controller identifies and executes the relevant skills in real-time."

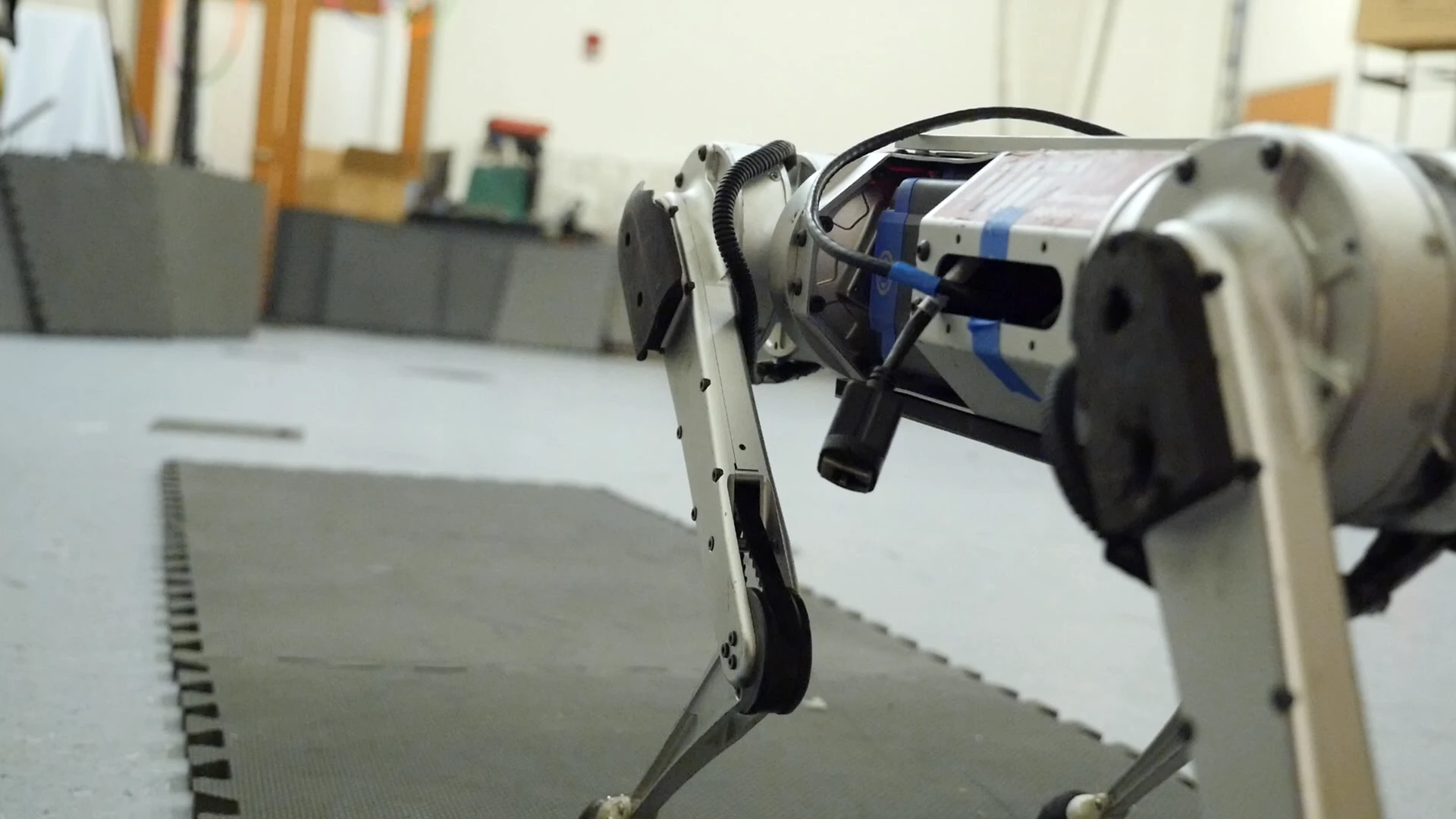

The researchers claim that such a system makes it possible to scale up the technology, something the traditional paradigm can't readily do.

"A more practical way to build a robot with many diverse skills is to tell the robot what to do and let it figure out the how," added Margolis and Yang. "Our system is an example of this. In our lab, we’ve begun to apply this paradigm to other robotic systems, including hands that can pick up and manipulate many different objects."

The video below is of the mini cheetah showing what it's learned.

Source: MIT