Researchers in South Korea have developed an ultra-small, ultra-thin LiDAR device that splits a single laser beam into 10,000 points covering an unprecedented 180-degree field of view. It's capable of 3D depth-mapping an entire hemisphere of vision in a single shot.

Autonomous cars and robots need to be able to perceive the world around them incredibly accurately if they're going to be safe and useful in real-world conditions. In humans, and other autonomous biological entities, this requires a range of different senses and some pretty extraordinary real-time data processing, and the same will likely be true for our technological offspring.

LiDAR – short for Light Detection and Ranging – has been around since the 1960s, and it's now a well-established rangefinding technology that's particularly useful in developing 3D point-cloud representations of a given space. It works a bit like sonar, but instead of sound pulses, LiDAR devices send out short pulses of laser light, and then measure the light that's reflected or backscattered when those pulses hit an object.

The time between the initial light pulse and the returned pulse, multiplied by the speed of light and divided by two, tells you the distance between the LiDAR unit and a given point in space. If you measure a bunch of points repeatedly over time, you get yourself a 3D model of that space, with information about distance, shape and relative speed. That model can be combined with data streams from cameras, ultrasonic sensors and other systems to flesh out an autonomous system's understanding of its environment.
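The time-of-flight arithmetic is simple enough to sketch. The snippet below is a minimal illustration, not code from any real LiDAR unit: it converts a round-trip time into a range using d = c·t/2, then places that return in 3D using the beam's pointing direction. The function names and the angle convention (azimuth measured in the horizontal plane, elevation measured up from it) are assumptions made for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact by definition)

def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0

def to_point(round_trip_s: float, azimuth_deg: float, elevation_deg: float):
    """Hypothetical helper: turn one timed return into an (x, y, z) point in metres."""
    r = range_from_round_trip(round_trip_s)
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = r * math.cos(el) * math.cos(az)
    y = r * math.cos(el) * math.sin(az)
    z = r * math.sin(el)
    return (x, y, z)

# A return arriving 100 nanoseconds after the pulse left is roughly 15 m away.
print(range_from_round_trip(100e-9))

# Repeating this for many beam directions builds up a point cloud, one point per return.
cloud = [to_point(66.7e-9, az, 0.0) for az in range(0, 360, 90)]
print(cloud)
```

Timing precision matters a lot here: one nanosecond of round-trip error corresponds to about 15 cm of range error.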

According to researchers at the Pohang University of Science and Technology (POSTECH) in South Korea, one of the key problems with existing LiDAR technology is its field of view. If you want to image a wide area from a single point, the only way to do it is to mechanically rotate your LiDAR device, or rotate a mirror to direct the beam. This kind of gear can be bulky, power-hungry and fragile. It tends to wear out fairly quickly, and the speed of rotation limits how often you can measure each point, reducing the frame rate of your 3D data.

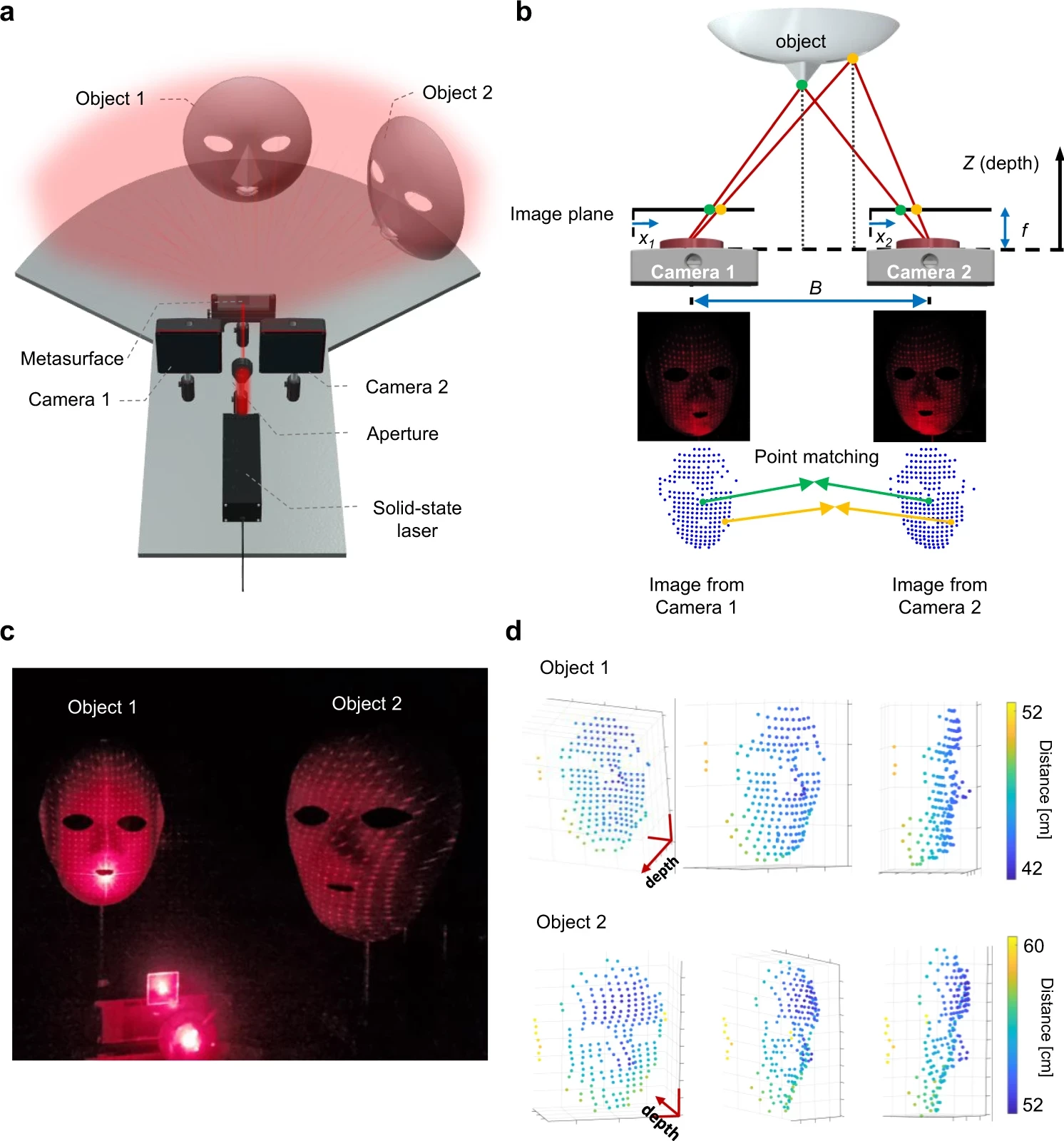

Solid-state LiDAR systems, on the other hand, have no moving parts. Some of them, according to the researchers, project a whole array of dots at once and look for distortions in the dot pattern to work out shape and distance. One example is the depth sensor Apple uses in the iPhone's Face ID unlock system, which makes sure you can't fool it by holding up a flat photo of the owner's face. But the field of view and resolution of these systems are limited, and the team says they're still relatively large devices.
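Structured-light sensors generally turn those dot distortions into depth by triangulation: with the projector and camera a fixed distance apart, a dot's sideways shift in the camera image (its disparity, relative to where it would appear for a surface at infinity) is inversely proportional to the depth of the surface it landed on. The sketch below shows only that textbook relation, z = f·b/d, for an idealized, rectified projector-camera pair. It is not Apple's pipeline, and the focal length, baseline and disparity values are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulated depth in metres for an idealized, rectified projector-camera pair.

    focal_px:     camera focal length expressed in pixels (hypothetical below)
    baseline_m:   distance between projector and camera
    disparity_px: how far the dot has shifted in the image
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero would mean a surface at infinity")
    return focal_px * baseline_m / disparity_px

# Hypothetical numbers: 500 px focal length, 2 cm projector-camera baseline.
for d in (20.0, 10.0, 5.0):
    print(f"disparity {d:>4} px -> depth {depth_from_disparity(500.0, 0.02, d):.2f} m")
```

Halving the disparity doubles the depth, so a one-pixel measurement error costs far more depth accuracy on distant surfaces than on near ones. This is one reason short-baseline dot projectors work best at close range.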

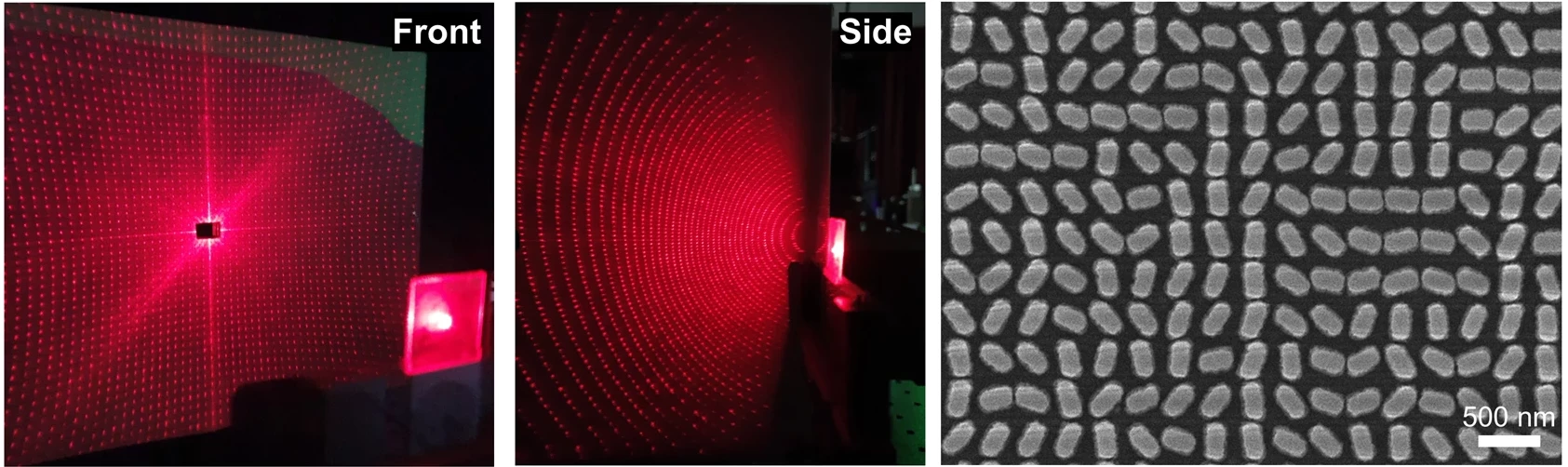

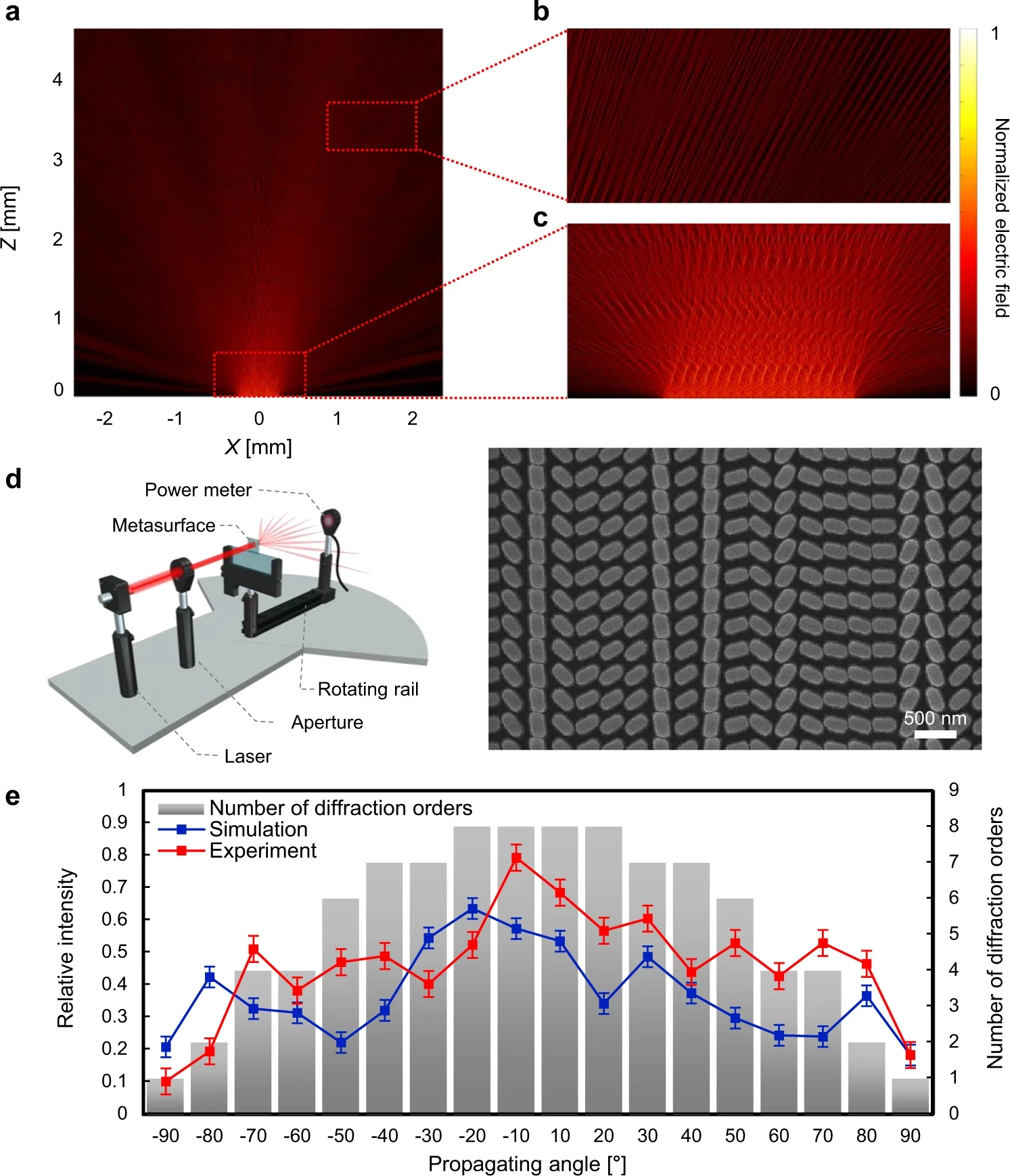

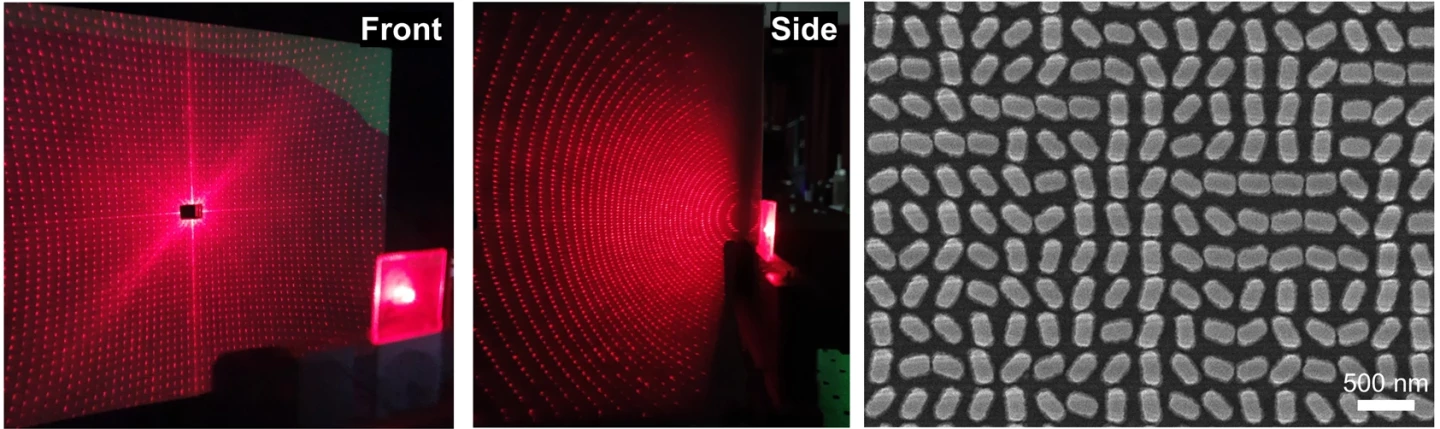

The Pohang team decided to shoot for the tiniest possible depth-sensing system with the widest possible field of view, using the extraordinary light-bending abilities of metasurfaces. These 2-D nanostructures, one thousandth the width of a human hair, can effectively be viewed as ultra-flat lenses, built from arrays of tiny and precisely shaped individual nanopillar elements. Incoming light is split into several directions as it moves through a metasurface, and with the right nanopillar array design, portions of that light can be diffracted to an angle of nearly 90 degrees. A completely flat ultra-fisheye, if you like.
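One way to see why bending light to nearly 90 degrees takes such fine structures is the grating equation for a periodic diffractive surface at normal incidence, sin θ = mλ/Λ. Here λ is the wavelength, Λ is the period of the repeating pattern and m is the diffraction order. The sketch below assumes a simple one-dimensional periodic structure and a hypothetical 940 nm near-infrared laser; the article doesn't state the actual wavelength or nanopillar layout. It shows that the first order only swings out close to 90 degrees when the period shrinks to barely more than one wavelength, and that an order with |mλ/Λ| ≥ 1 doesn't propagate at all.

```python
import math
from typing import Optional

def diffraction_angle_deg(wavelength_m: float, period_m: float, order: int = 1) -> Optional[float]:
    """Angle of the given diffraction order at normal incidence, or None if it cannot propagate."""
    s = order * wavelength_m / period_m
    if abs(s) >= 1.0:
        return None  # sin(theta) would reach or exceed 1: no propagating beam at this order
    return math.degrees(math.asin(s))

def period_for_angle(wavelength_m: float, angle_deg: float, order: int = 1) -> float:
    """Grating period needed to send the given order out at angle_deg."""
    return order * wavelength_m / math.sin(math.radians(angle_deg))

WAVELENGTH = 940e-9  # hypothetical near-infrared laser wavelength, in metres

for angle in (10, 45, 80, 89):
    period_nm = period_for_angle(WAVELENGTH, angle) * 1e9
    print(f"{angle:>2} deg needs a period of about {period_nm:.0f} nm")

# A period shorter than the wavelength leaves no propagating first order.
print(diffraction_angle_deg(WAVELENGTH, 900e-9))
```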

The researchers designed and built a device that shoots laser light through a metasurface whose nanopillars are tuned to split it into around 10,000 dots, covering an extreme 180-degree field of view. A camera then captures the light reflected or backscattered from objects in the scene, and the device works out distance measurements from that image.
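As a rough illustration of how a dot count relates to the field of view, assume that the dots are the diffraction orders of a pattern repeating with period Λ in both x and y, lit at normal incidence. This is an illustrative assumption; the article doesn't give the device's actual design. Under that model, order (m, n) leaves with direction cosines (mλ/Λ, nλ/Λ), and it only propagates if that point falls inside the unit circle. Counting integer points in the circle gives roughly π(Λ/λ)² dots, and the outermost ones approach the horizon, so the pattern fills the whole hemisphere. The function name and the Λ/λ ratios below are hypothetical.

```python
import math

def propagating_orders(period_over_wavelength: float):
    """List (m, n, polar_angle_deg) for every propagating 2D diffraction order."""
    r = period_over_wavelength
    k = math.floor(r)
    orders = []
    for m in range(-k, k + 1):
        for n in range(-k, k + 1):
            # Order (m, n) has direction cosines (m / r, n / r); it propagates only
            # if that point lies strictly inside the unit circle.
            if m * m + n * n < r * r:
                sin_polar = min(1.0, math.hypot(m, n) / r)
                orders.append((m, n, math.degrees(math.asin(sin_polar))))
    return orders

for ratio in (1.5, 10.0, 56.0):
    dots = propagating_orders(ratio)
    widest = max(p for _, _, p in dots)
    print(f"period/wavelength = {ratio}: {len(dots)} dots "
          f"(pi*ratio^2 estimate ~{math.pi * ratio ** 2:.0f}), widest at {widest:.1f} deg")
```

Under this simple model, a pattern period of a few dozen wavelengths is enough to produce on the order of 10,000 dots.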

"We have proved that we can control the propagation of light in all angles by developing a technology more advanced than the conventional metasurface devices," said Professor Junsuk Rho, co-author of a new study published in Nature Communications. "This will be an original technology that will enable an ultra-small and full-space 3D imaging sensor platform."

The light intensity does drop off as diffraction angles become more extreme: a dot bent to a 10-degree angle reached its target with four to seven times the power of one bent closer to 90 degrees. With the equipment in their lab setup, the researchers got the best results within a maximum viewing angle of 60° (a 120° field of view) and at distances of less than 1 m (3.3 ft) between the sensor and the object. They say higher-powered lasers and more precisely tuned metasurfaces will widen the sweet spot of these sensors, but high resolution at greater distances will always be a challenge with ultra-wide lenses like these.
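Simple geometry shows one reason why resolution at range is hard with a fixed number of dots: the dots fan out from the sensor, so the gap between adjacent dots grows linearly with distance. The sketch below assumes a hypothetical 1-degree angle between adjacent dots and a flat surface facing the sensor head-on. It is an illustration only, not a figure from the study.

```python
import math

def dot_spacing_m(distance_m: float, angular_pitch_deg: float) -> float:
    """Gap between two adjacent dots whose rays straddle the line of sight
    symmetrically and hit a flat surface facing the sensor at distance_m."""
    return 2.0 * distance_m * math.tan(math.radians(angular_pitch_deg) / 2.0)

PITCH_DEG = 1.0  # hypothetical angle between adjacent dots

for d in (0.5, 1.0, 5.0, 10.0):
    print(f"{d:>4} m: dots about {dot_spacing_m(d, PITCH_DEG) * 100:.1f} cm apart")
```

At 1 m the dots land under 2 cm apart, but at 10 m any object narrower than about 17 cm could slip between two dots entirely. For rays further off-axis, the gap on a head-on surface is larger still.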

Another potential limitation here is image processing. The "coherent point drift" algorithm used to decode the sensor data into a 3D point cloud is computationally demanding, and processing time rises with the point count. High-resolution full-frame captures with 10,000 points or more will therefore place a heavy load on processors, and getting such a system running at 30 frames per second or more will be a big challenge.
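To show where that cost comes from, here is a simplified version of textbook rigid Coherent Point Drift, the point-set registration method published by Myronenko and Song, written in NumPy. It is a sketch under stated assumptions, not the POSTECH team's decoder. Scale is fixed at 1, the uniform outlier term is dropped, and the function name and test data are made up. The important line is the E-step, which builds an M × N matrix comparing every reference point with every detected point on every iteration. In this naive form, matching a 10,000-dot frame against a 10,000-dot pattern means 100 million distance evaluations per iteration.

```python
import numpy as np

def rigid_cpd(X, Y, max_iter=100, tol=1e-12):
    """Align moving points Y (M x D) to fixed points X (N x D).

    Simplified rigid Coherent Point Drift: scale fixed to 1, no outlier term.
    Returns (R, t) such that Y @ R.T + t approximately overlays X.
    """
    N, D = X.shape
    M, _ = Y.shape
    R = np.eye(D)
    t = np.zeros(D)
    # Initial variance: mean squared distance over all M*N pairs, per dimension.
    sigma2 = np.sum((X[None, :, :] - Y[:, None, :]) ** 2) / (D * M * N)

    for _ in range(max_iter):
        TY = Y @ R.T + t
        # E-step: the M x N squared-distance matrix, the O(M*N) cost per iteration.
        d2 = np.sum((TY[:, None, :] - X[None, :, :]) ** 2, axis=2)
        # Subtracting each column's minimum avoids underflow and cancels on normalizing.
        K = np.exp(-(d2 - d2.min(axis=0)) / (2.0 * sigma2))
        P = K / K.sum(axis=0, keepdims=True)  # P[m, n]: posterior that x_n came from y_m

        # M-step: weighted Procrustes problem solved with an SVD.
        Np = P.sum()
        Pt1 = P.sum(axis=0)  # total weight on each x_n
        P1 = P.sum(axis=1)   # total weight on each y_m
        mu_x = Pt1 @ X / Np
        mu_y = P1 @ Y / Np
        Xc = X - mu_x
        Yc = Y - mu_y
        A = Xc.T @ P.T @ Yc  # D x D weighted cross-covariance
        U, _, Vt = np.linalg.svd(A)
        C = np.eye(D)
        C[-1, -1] = np.linalg.det(U @ Vt)  # forbid reflections
        R = U @ C @ Vt
        t = mu_x - R @ mu_y

        prev = sigma2
        sigma2 = (np.sum(Pt1 * np.sum(Xc ** 2, axis=1))
                  - 2.0 * np.trace(A.T @ R)
                  + np.sum(P1 * np.sum(Yc ** 2, axis=1))) / (Np * D)
        sigma2 = max(sigma2, 1e-12)  # guard against round-off driving it to zero or below
        if abs(prev - sigma2) < tol:
            break
    return R, t

# Synthetic check: rotate and shift a random 2D dot pattern, then recover the motion.
rng = np.random.default_rng(0)
Y_ref = rng.random((40, 2))
theta = np.deg2rad(15.0)
R_true = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
t_true = np.array([0.3, -0.2])
X_obs = Y_ref @ R_true.T + t_true
R_est, t_est = rigid_cpd(X_obs, Y_ref)
print(np.round(R_est, 4), np.round(t_est, 4))
```

The CPD paper itself discusses speeding up the dense matrix step with approximations such as the fast Gauss transform. Without something similar, real-time rates at 10,000 points are hard to reach.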

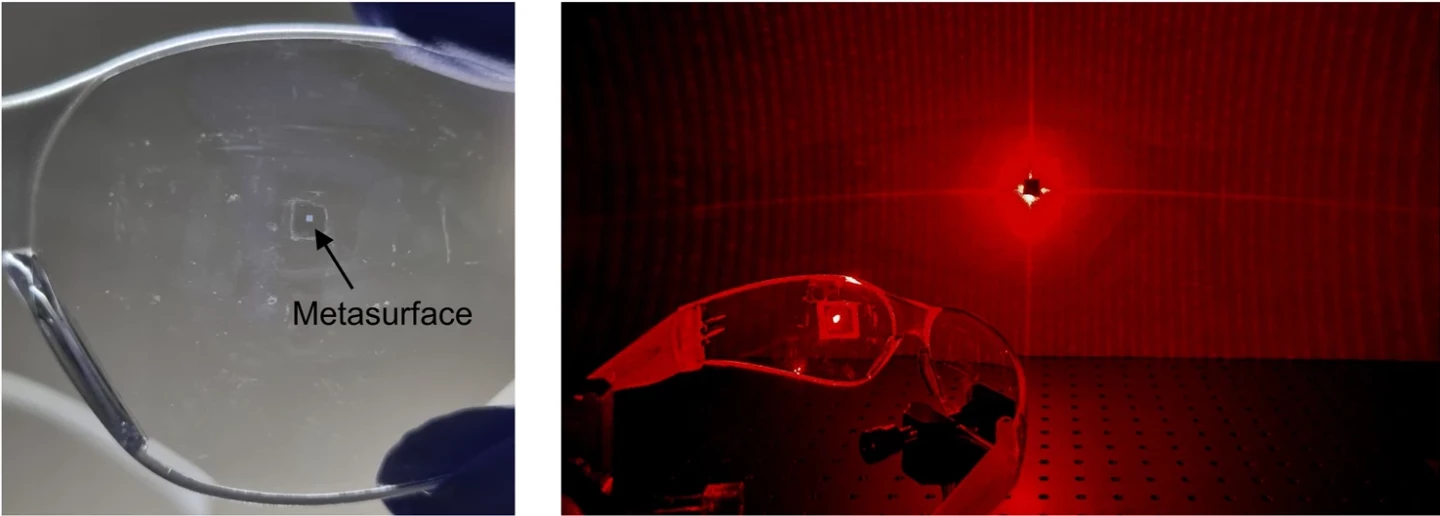

On the other hand, these things are incredibly tiny, and metasurfaces can be easily and cheaply manufactured at enormous scale. The team printed one onto the curved surface of a set of safety glasses. It's so small you'd barely distinguish it from a speck of dust. And that's the potential here; metasurface-based depth mapping devices can be incredibly tiny and easily integrated into the design of a range of objects, with their field of view tuned to an angle that makes sense for the application.

The team sees these devices as having huge potential in mobile devices, robotics, autonomous cars and VR/AR glasses. Very neat stuff!

The research is open access in the journal Nature Communications.

Source: POSTECH