Inspired by how the human eye sees, processes, and sends information to the brain, researchers have created a tiny device that captures, recognizes, and memorizes images, enabling it to make quick, real-time decisions based on what it sees. The device could one day be used in self-driving cars.

Vision-based machine systems with real-time situational awareness represent the next generation in smart technologies. Currently, these systems are bulky and, because their processing and memory units are separate, must execute a series of computational steps to detect, process, and store images. Mimicking the way the human eye sees and processes images, an approach called neuromorphic vision, is one way of revolutionizing vision-based systems.

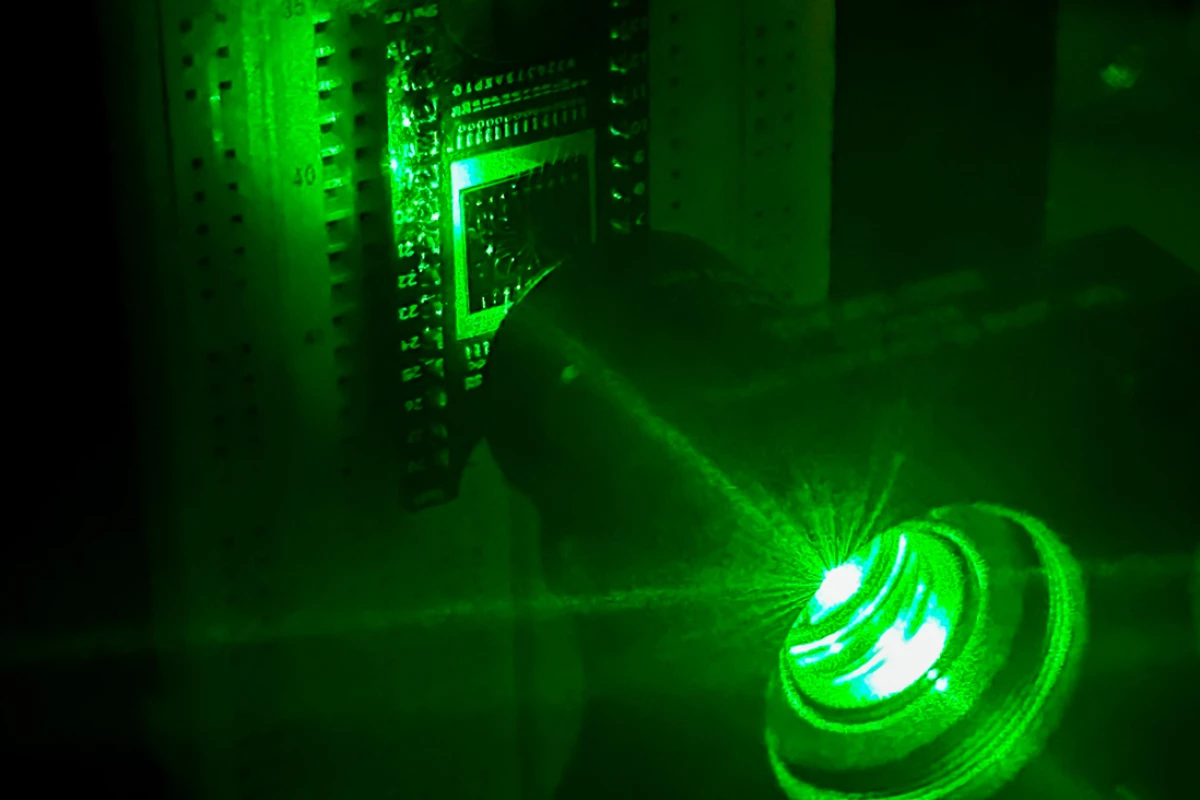

Researchers at Australia’s RMIT University led the development of a proof-of-concept neuromorphic device, with contributions from researchers at Deakin University and the University of Melbourne. Just as the human eye sends information to the brain for processing and storage, the device captures light, creates and processes information, and classifies and stores it in its memory.

Neuromorphic essentially means something that takes the shape or form of nerves or the nervous system.

“The human eye is exceptionally adept at responding to changes in the surrounding environment in a faster and much more efficient way than cameras and computers can,” said Sumeet Walia, corresponding author of the study. “Taking inspiration from the eye, we have been working for several years on creating a camera that possesses similar abilities through the process of neuromorphic engineering.”

The device comprises a single chip with a sensing layer made from an array of antimony-doped indium oxide less than 3 nm thick, thousands of times thinner than a human hair. The sensors mimic the eye’s retina, storing and processing visual information on one platform.

In developing their device, the researchers aimed to emulate synaptic function, the transmission of impulses between nerve cells (neurons) in the body. Synapses connect one neuron to another and transmit messages from the nerves to the brain and vice versa. The researchers employed analog processing, similar to what our brains use, enabling the device to process information quickly and efficiently with minimal energy.

“By contrast, digital processing is energy- and carbon-intensive and inhibits rapid information gathering and processing,” said Aishani Mazumder, lead author of the study. “Neuromorphic vision systems are designed to use similar analog processing to the human brain, which can greatly reduce the amount of energy needed to perform complex visual tasks compared with today’s technologies.”

The researchers shone ultraviolet (UV) light onto a pattern that was recognized and memorized by the device’s sensors. They found that their device could retain information longer than previously reported devices without needing frequent electrical signals to refresh its memory, significantly reducing energy consumption and enhancing performance.
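To see why longer retention can translate into lower energy use, consider a toy model, which is not the researchers' own. It assumes each sensor's stored value fades exponentially with a retention time constant and must be refreshed by an electrical pulse whenever it falls below a readout threshold. The exponential decay, the threshold of 0.5, the time constants, and all function names are illustrative assumptions rather than figures or methods from the study.

```python
import math

# Toy model only: exponential decay, threshold and time constants are
# assumptions made for illustration, not values reported in the study.

def decayed(value: float, elapsed_s: float, retention_s: float) -> float:
    """Stored value after elapsed_s seconds, assuming exponential decay."""
    return value * math.exp(-elapsed_s / retention_s)

def read_pattern(stored, elapsed_s: float, retention_s: float, threshold: float = 0.5):
    """Read a 2D pattern back as 0/1 after it has faded for elapsed_s seconds."""
    return [
        [1 if decayed(v, elapsed_s, retention_s) >= threshold else 0 for v in row]
        for row in stored
    ]

def refreshes_needed(retention_s: float, hold_s: float, threshold: float = 0.5) -> int:
    """Approximate number of refresh pulses needed to hold a value for hold_s seconds."""
    # Time for a freshly written value of 1.0 to fade down to the threshold.
    time_to_threshold = -retention_s * math.log(threshold)
    return math.floor(hold_s / time_to_threshold)

# A 2x2 pattern written by light exposure (1.0 = lit sensor, 0.0 = dark sensor).
pattern = [[1.0, 0.0], [0.0, 1.0]]

if __name__ == "__main__":
    # Hypothetical comparison: a short-retention and a long-retention sensor.
    for retention_s in (1.0, 50.0):
        print(
            f"retention {retention_s:>5.1f} s:",
            "pattern after 10 s =", read_pattern(pattern, 10.0, retention_s),
            "| refreshes over 100 s =", refreshes_needed(retention_s, 100.0),
        )
```

In this sketch, the sensor with the longer time constant still holds the pattern after 10 seconds and needs far fewer refresh pulses over the same period. That is the intuition behind the energy saving described above, although the real device physics is more complex than a single exponential.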

“Performing all of these functions on one small device had proven to be a big challenge until now,” Walia said. “We’ve made real-time decision-making a possibility with our invention, because it doesn’t need to process large amounts of irrelevant data, and it’s not being slowed down by data transfer to separate processors.”

The researchers plan to continue working on their device, expanding the technology to use visible and infrared (IR) light. Given its tiny size and ability to quickly detect and process visual information in real time, the researchers consider the device useful for a range of potential applications, including bionic vision, assessing the shelf-life of food and advanced forensics.

“Imagine a self-driving car that can see and recognize objects on the road in the same way that a human driver can or being able to rapidly detect and track space junk,” said Walia. “This would be possible with neuromorphic vision technology. Neuromorphic robots have the potential to run autonomously for long periods, in dangerous situations where workers are exposed to possible cave-ins, explosions and toxic air.”

The study was published in the journal Advanced Functional Materials.

Source: RMIT University