Screening callers of crisis or suicide helplines for their current level of suicide risk is crucial to detecting and preventing suicide.

Speech conveys useful information about a person’s mental and emotional state, providing clues about whether they’re sad, angry or fearful. Research into suicidal speech dates back more than 30 years, with studies identifying objective acoustic markers that can be used to differentiate various mental states and psychiatric disorders, including depression.

However, identifying suicide risk from someone’s speech can be challenging for the human listener, because callers to these hotlines are about as emotionally unstable as people get, and their speech characteristics can change rapidly.

Perhaps a real-time emotional 'dashboard' might help. Alaa Nfissi, a PhD student at Concordia University in Montreal, Canada, has trained an AI model to perform speech emotion recognition (SER) as an aid to suicide prevention. He presented a paper about his work at this year’s IEEE International Conference on Semantic Computing in California, where it won the award for Best Student Paper.

“Traditionally, SER was done manually by trained psychologists who would annotate speech signals, which requires high levels of time and expertise,” said Nfissi. “Our deep learning model automatically extracts speech features that are relevant to emotion recognition.”

To train the model, Nfissi used a database of recordings of actual calls made to suicide hotlines, combined with recordings of actors expressing particular emotions. The recordings were broken into segments, and each segment was annotated with a specific mental state: angry, sad, neutral, or fearful/concerned/worried. The actors’ recordings were merged with the real-life recordings because anger and fear/concern/worry were underrepresented in the real calls.

Nfissi’s model could accurately recognize all four emotions. On the merged dataset, it correctly identified fearful/concerned/worried segments 82% of the time, sad 77%, angry 72%, and neutral 78%. The model was particularly good at identifying segments from the real-life calls, with a 78% success rate for sadness and 100% for anger.

Nfissi hopes to see his model used to develop a real-time dashboard that will assist crisis line operators in choosing appropriate intervention strategies for callers.

“Many of these people are suffering, and sometimes just a simple intervention from a counsellor can help a lot,” said Nfissi. “This [AI model] will hopefully ensure that the intervention will help them and ultimately prevent a suicide.”

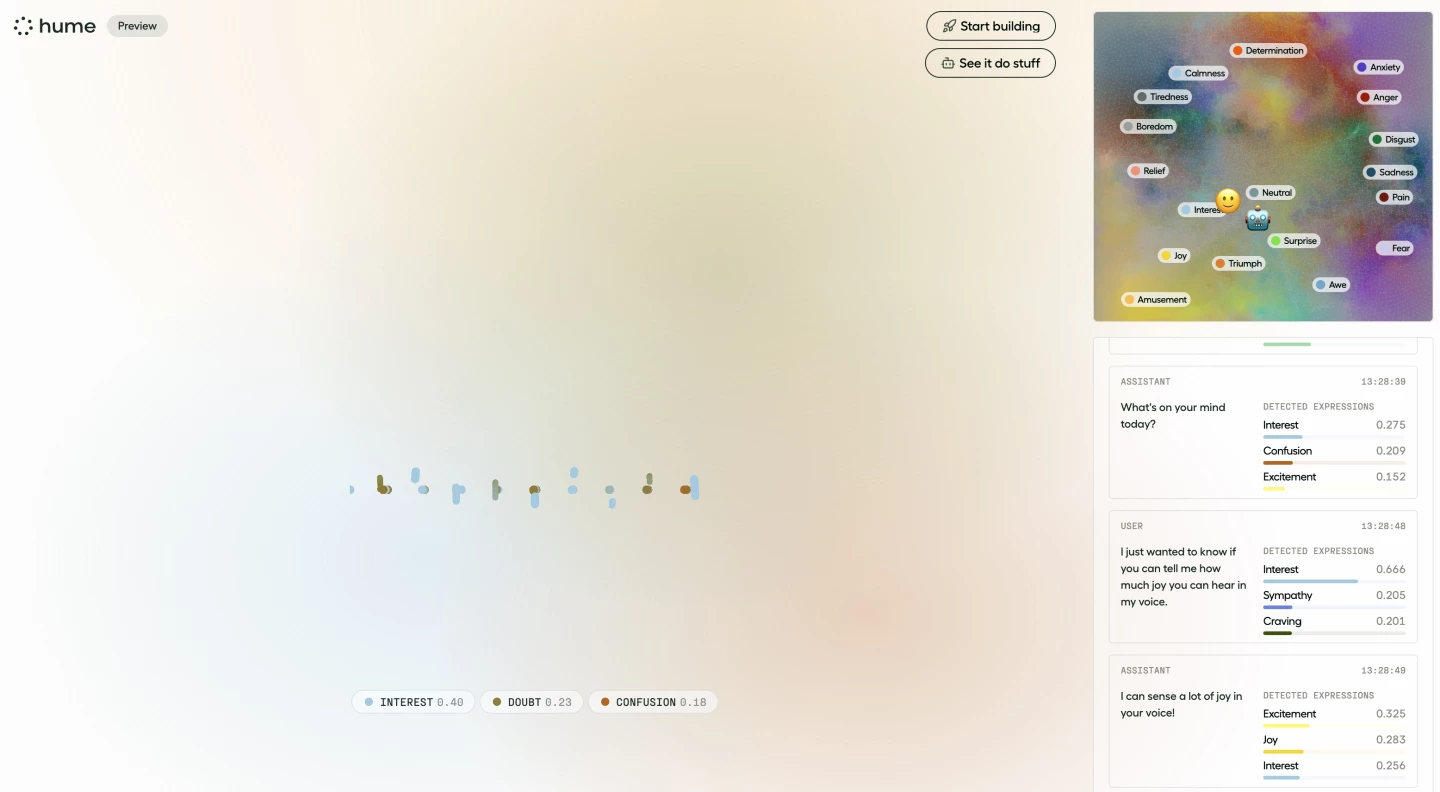

Ultimately, these kinds of empathic AIs may well take over entire suicide hotlines. Consider Hume, touted as the first voice-to-voice AI designed to interpret emotions and generate empathic responses. You can test it out on the Hume website, where the AI tells you which emotions it detects in your voice and attempts to adjust the tone of its response accordingly.

And even without this emotionally responsive tech, conversational AIs are beginning to take over elsewhere in the call center industry; the Financial Times recently ran an article in which K. Krithivasan, the CEO and managing director of the Indian IT company Tata Consultancy Services, said that AI could eventually eliminate the need for call centers that employ many people in countries like the Philippines and India.

So job losses are clearly a concern, but empathic AI raises further issues. Last month, New Atlas reported that when OpenAI’s GPT-4 was given a person’s gender, age, race, education, and political orientation and asked to use that information to tailor its arguments in a debate with that person, the odds of it shifting their opinion were a staggering 81.7% higher than those of human debaters.

AI has already shown how adept it is at inferring a vast amount of information – things like ethnicity, body weight, personality traits, drug consumption habits, fears and sexual preferences – from eye-tracking data. You need some special gear to track eyes – but if your voice can betray so much about your emotions, well, there's a device sitting right there in your pocket that could be listening.

It doesn't seem like too much of a stretch to imagine a world where AI-equipped smartphones track emotion in our voices and on our faces, then use our current state, along with everything else they know about us, to recommend an item, meal, movie, or song that will match or buoy our mood. Or manipulate us into buying life insurance, a new car, a dress, or an overseas trip.

You're spot on if you detected cynicism (I’m sure the AI would have, too). Of course, the less cynical flip side is that empathic AI could be used in healthcare to interact responsively with patients, particularly those who have no loved ones around them or who have dementia. We could see this sort of thing sooner rather than later. In March 2024, tech giant NVIDIA announced a partnership with Hippocratic AI to produce AI-powered ‘healthcare agents’ that the companies claim can outperform human nurses on some tasks while costing less.

As with most things related to rapidly evolving AI, time will tell where we end up.

Nfissi’s paper was presented at the 2024 IEEE 18th International Conference on Semantic Computing (ICSC).

Source: Concordia University