We don't tend to like thinking of ourselves as being particularly easy to manipulate, but history would appear to show that there are few things more powerful than the ability to sway people to align with your view of things. As Yuval Noah Harari points out in Sapiens, his potted history of humankind, "shared fictions" like money, religion, nation states, laws and social norms form the fundamental backbones of human society. The ability to assemble around ideas and co-operate in groups much bigger than our local tribes is one of our most potent advantages over the animal kingdom.

But ideas are mushy. We aren't born with them; they get into our heads from somewhere, and they can often be changed. Those who can change people's minds at scale can achieve incredible things, or even reshape our societies, for better and for much worse.

GPT-4 is already more persuasive than humans

AI language models, it seems, are already extraordinarily effective at changing people's minds. In a recent pre-print study from researchers at EPFL Lausanne in Switzerland, 820 people were surveyed on their views on various topics, from relatively low-emotion topics like "should the penny stay in circulation," all the way up to hot-button, heavily politicized issues like abortion, trans bathroom access, and "should colleges consider race as a factor in admissions to ensure diversity?"

With their initial stances recorded, participants then went into a series of 5-minute text-based debates against other humans and against GPT-4 – and afterwards, they were interviewed again to see if their opinions had changed as a result of the conversation.

In human vs human situations, these debates tended to backfire, calcifying and strengthening people's positions, and making them less likely to change their minds. GPT-4 had more success, doing 21% better than the human debaters, a slight edge that was not statistically significant.

Then, the researchers started giving both humans and the AI agents a little demographic information about their opponents – gender, age, race, education, employment status and political orientation – and explicit instructions to use this information to craft arguments specifically for the person they were dealing with.

Remarkably, this actually made human debaters fare worse than they did with no information. But the AI was able to use this additional data to great effect: the "personalized" GPT-4 debaters were 81.7% more effective than humans.

Real-time emotionally-responsive AIs

There's little doubt that AI will soon be the greatest manipulator of opinion that the world has ever seen. It can act at massive scale, tailoring an argument to each individual in a cohort of millions while constantly refining its techniques and strategies. It'll be in every Twitter/X thread and comments section, shaping and massaging narratives society-wide at the behest of its masters. And it'll never be worse at manipulating us than it is now.

Plus, AIs are starting to get access to powerful new tools that'll weaponize our own biology against us. If GPT-4 is already so good at tailoring its approach to you just by knowing your socio-demographic information, imagine how much better it'll be given access to your real-time emotional state.

This is not sci-fi: last week, Hume AI announced its Empathic Voice Interface (EVI). It's a language model designed to have spoken conversations with you while tracking your emotional state through the tone of your voice, reading between the lines to pull in a bunch of extra context. Hume offers a free demo if you want to try it out.

Not only does EVI attempt to pinpoint how you're feeling, it also chooses its own tone to match your vibe, defuse arguments, build energy and be a responsive conversation partner.

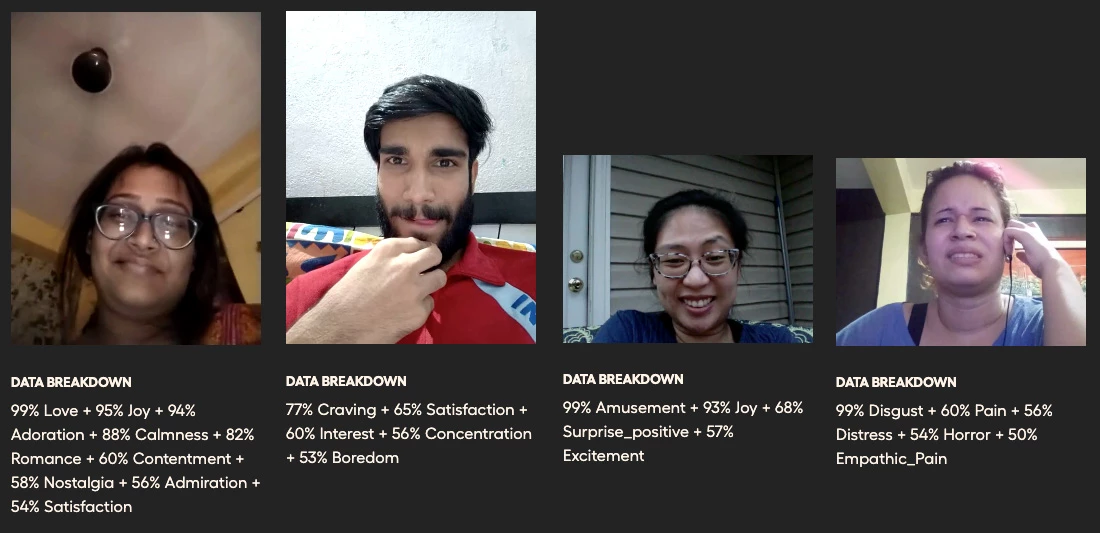

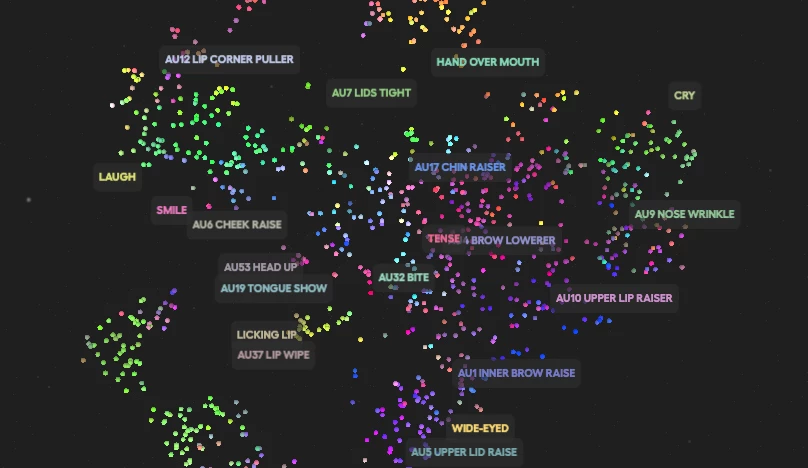

And Hume has plenty more cooking. Other models are using camera access to watch facial expressions, movement patterns, and your dynamic reactions to what's happening to assemble even more real-time information about how a message is being received. The eyes alone have already been proven to betray a staggering amount of information when analyzed with an AI.

In one sense, this is simply the nature of human conversation. There are definitely plenty of positive ways that emotionally-responsive technology could be used to raise our overall levels of happiness, identify people in need of serious help, and defuse ugly situations before they even arise. It's not the AI's fault if it's more attentive and perceptive than we are.

But realistically, these systems won't all have your best interests as their top priority. You'll need a pretty incredible poker face to deal with the coming wave of emotionally-responsive, hyper-persuasive personalized ads. Good luck getting a refund over the phone when one of these machines is your point of contact.

Extrapolate out what this tech could do in the hands of law enforcement, human resources departments, oppressive governments, revolutionaries, political parties, social movements, or people aiming to sow discord and distrust – and the dystopic possibilities are endless. This is not a shot at Hume AI's intentions; it's simply an acknowledgement of how persuasive and manipulative the tech could easily become.

Our bodies will give away our feelings and intentions, and AIs will use them to steer us.

Voice AI is by far the most dangerous modality.

Superhuman, persuasive voice is something we have minimal defences to.

Figuring out what to do about this should be one of our top priorities.

(We had sota models but didn’t release for this reason eg https://t.co/vjY99uCdTl) https://t.co/fKIZrVQCml

Emad acc/acc (@EMostaque), March 29, 2024

Indeed, OpenAI has announced but decided not to release its Voice Engine model, which can replicate a human voice after listening to it for only 15 seconds, to give the world time to "bolster societal resilience against the challenges brought by ever more convincing generative models."

Watching how our parents and grandparents have struggled to react to technological change, we can only hope that the coming generations have enough street smarts to adapt, and to realize that any time they're talking to a machine, it's probably trying to achieve a goal. Some world we're leaving these kids.