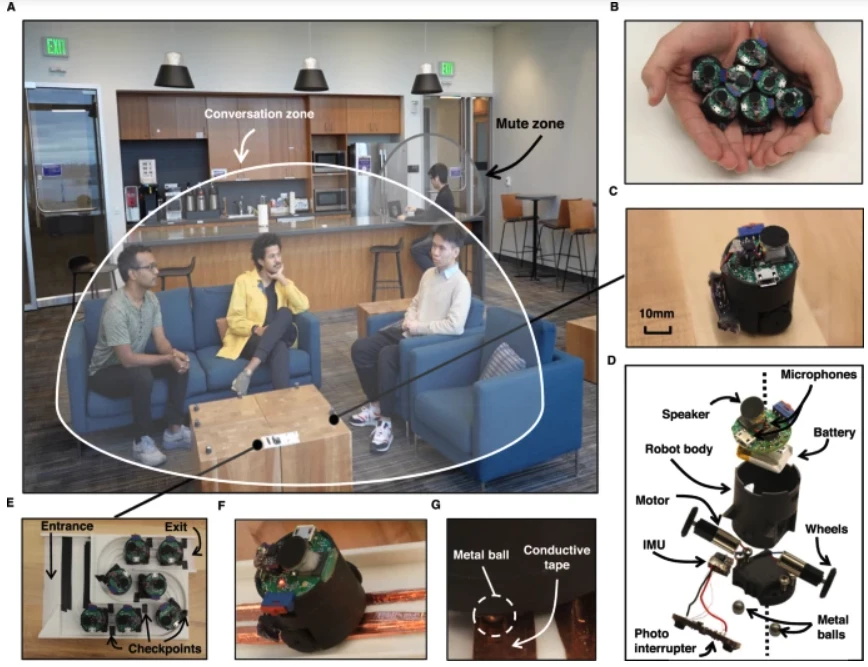

University of Washington (UW) researchers say they can now mute different parts of a noisy room, or isolate one conversation in a chaotic environment, thanks to a swarm of small audio robots that auto-position themselves to pinpoint and follow multiple moving sound sources.

We humans have a rudimentary ability to locate sound sources with our eyes shut, thanks to the slightly distributed two-mic array and audio-shaping screening effects provided by our ears. But when audio environments become complex, things get extremely confusing – which doesn't jibe well with our weird tendency to seek out noisy, crowded and high-energy spaces, like Sunday-morning cafes, and then try to have conversations in them.

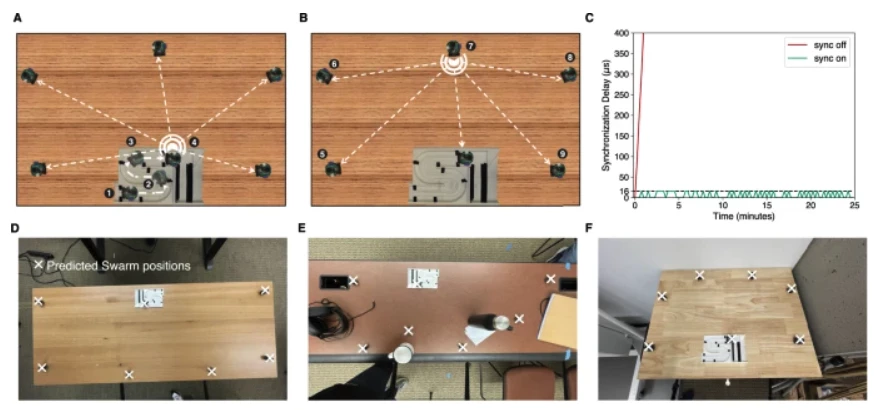

In these more chaotic audio spaces, the only way to isolate single audio sources and mute the rest is to deploy larger arrays of microphones, and process all the audio streams together to create a spatial map, triangulating the location of each sound by measuring tiny differences in its arrival time as it propagates through the air and reaches each mic. You can then re-process all your audio streams together using inscrutable deep-learning algorithms to create separate audio streams for each sound source, with all the noise from the others canceled out.
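To make the timing idea concrete, here is a minimal Python sketch of how the arrival-time difference between two mics can be estimated by cross-correlating their recordings. It is an illustration only, not the UW team's method: the function names, the simulated noise burst, the 48 kHz sample rate and the 343 m/s speed of sound are all assumptions made for the example.

```python
import numpy as np

SPEED_OF_SOUND_M_S = 343.0  # assumed: approximate speed of sound in room-temperature air


def estimate_delay_seconds(mic_a, mic_b, sample_rate_hz):
    """Estimate how much later a sound reaches mic_b than mic_a, in seconds.

    A positive result means mic_b heard the sound after mic_a.
    """
    # Slide one recording across the other and find the best alignment.
    correlation = np.correlate(mic_b, mic_a, mode="full")
    # In "full" mode, index len(mic_a) - 1 corresponds to zero lag.
    lag_samples = int(np.argmax(correlation)) - (len(mic_a) - 1)
    return lag_samples / sample_rate_hz


def path_difference_metres(delay_seconds):
    """Convert an arrival-time difference into the extra distance travelled."""
    return delay_seconds * SPEED_OF_SOUND_M_S


# Simulated example (hypothetical data): the same burst of noise
# reaches mic B seven samples later than mic A.
rng = np.random.default_rng(0)
sample_rate_hz = 48_000
burst = rng.standard_normal(1000)
mic_a = burst
mic_b = np.concatenate([np.zeros(7), burst[:-7]])

delay = estimate_delay_seconds(mic_a, mic_b, sample_rate_hz)
print(f"delay: {delay * 1e6:.1f} microseconds")
print(f"extra path to mic B: {path_difference_metres(delay) * 100:.1f} cm")
```

A seven-sample lag at 48 kHz is about 146 microseconds, or roughly 5 cm of extra travel, which gives a sense of how small these differences are. One mic pair only narrows a source down to a curve of possible positions; a spatial map needs many pairs, which is why the mics are spread out.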

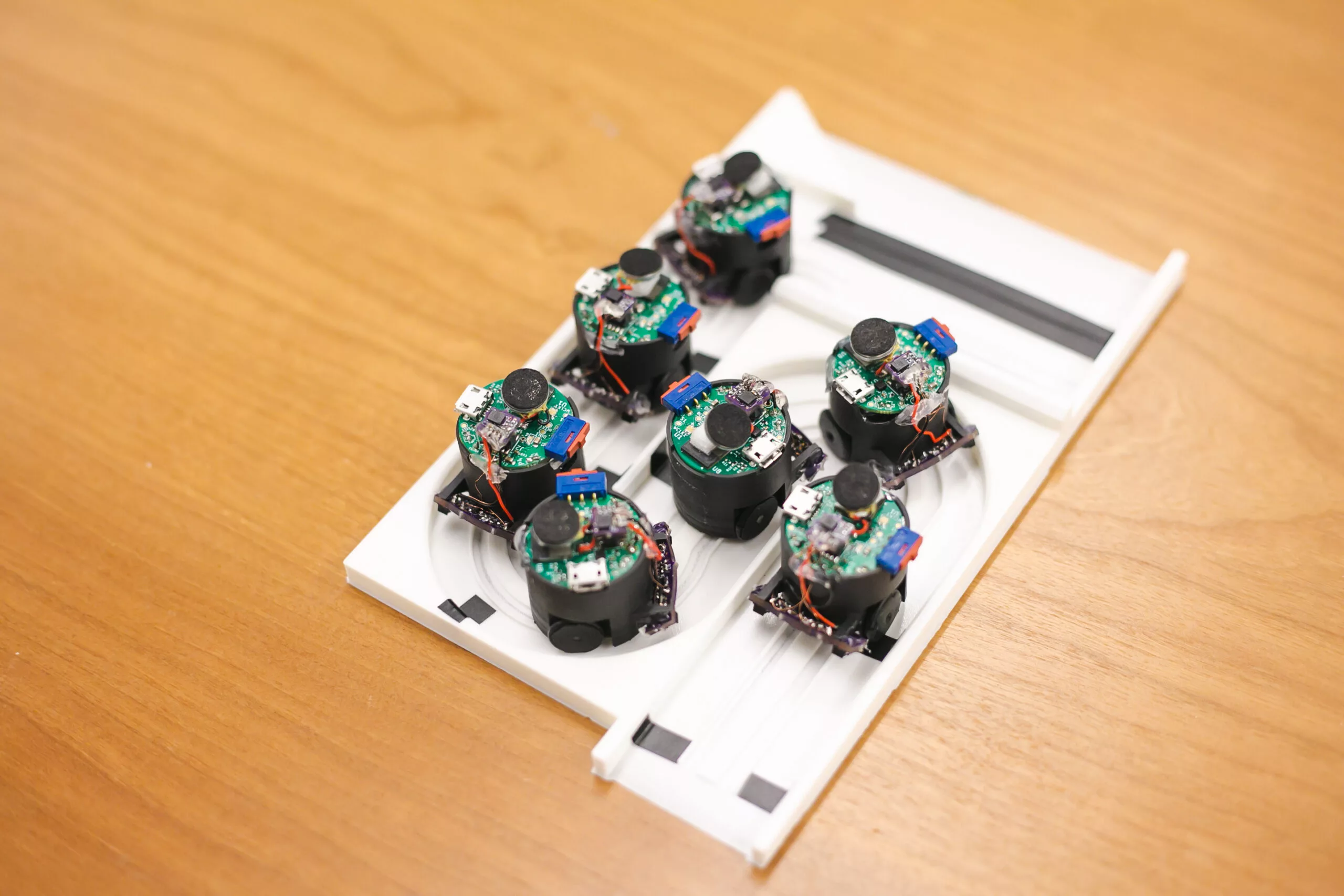

This idea in itself is not new, but University of Washington researchers have now presented a new take on the concept, using a swarm of seven little mic-bots on wheels, each the size of a fancy chocolate truffle, that autonomously deploy themselves from a charging station and create a self-optimizing array in the space available.

The robots use their inbuilt microphones and speakers to navigate around a table surface by sonar, dodging obstacles and distributing themselves as widely as possible to maximize the timing differences between mics. Unfortunately, this does mean they have to move about one at a time, but once in place, they do a pretty amazing job, as the team's demonstration video shows.

So what's the endgame here? The research team suggests a robotic array like this could function as a portable, self-deploying, sound-isolating mic array for live streaming from conference rooms, for example, with the robots theoretically doing a better job of dispersing themselves than a human might.

It's not going to be much use for two-way video calls, says the team, because while it does a good job, it currently takes about 1.82 seconds to process every three-second chunk of sound. The latency also means it's not going to be streaming clean audio of your conversation partner to your earphones in a noisy cafe any time soon – although both these applications might well come within reach as computing power and speed increase.
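The arithmetic behind that limitation can be sketched in a few lines of Python. The 1.82-second and three-second figures come from the team; the assumption that a full chunk must be recorded before processing starts is ours, as is the function name.

```python
def chunk_latency(chunk_seconds, processing_seconds):
    """Return (real_time_factor, worst_case_delay_seconds) for chunked processing.

    Assumes a whole chunk is buffered before any processing begins.
    """
    # Below 1.0 means processing keeps pace with incoming audio.
    real_time_factor = processing_seconds / chunk_seconds
    # The first sound in a chunk waits for the chunk to fill, then for processing.
    worst_case_delay = chunk_seconds + processing_seconds
    return real_time_factor, worst_case_delay


real_time_factor, worst_case_delay = chunk_latency(3.0, 1.82)
print(f"real-time factor: {real_time_factor:.2f}")
print(f"worst-case delay: {worst_case_delay:.2f} s")
```

Under that assumption the system keeps up with incoming audio, since it spends about 0.61 seconds of computing per second of sound, but the start of each chunk could emerge up to 4.82 seconds late. That is tolerable for a one-way stream and far too slow for live conversation.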

It could of course also be a very handy surveillance tool, eliminating the masking effect of crowd noise to record private conversations. And interestingly, the UW team says it might be handy for the opposite.

“It has the potential to actually benefit privacy, beyond what current smart speakers allow,” said doctoral student Malek Itani, co-lead author on the study. “I can say, ‘Don’t record anything around my desk,’ and our system will create a bubble 3 feet (0.9 m) around me. Nothing in this bubble would be recorded. Or if two groups are speaking beside each other and one group is having a private conversation, while the other group is recording, one conversation can be in a mute zone, and it will remain private.”
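As a rough illustration of the mute-zone idea, the Python sketch below drops any sound source whose estimated position falls inside a bubble of a given radius. It is a simplified, hypothetical stand-in rather than the researchers' system: it assumes each source has already been located on a 2D table plane, and the function name, source labels and coordinates are invented for the example.

```python
import math


def sources_outside_zone(sources, zone_center, zone_radius_m=0.9):
    """Keep only sources located outside the mute zone.

    sources: dict mapping a label to an estimated (x, y) position in metres.
    zone_center: (x, y) position of the mute zone's center in metres.
    """
    kept = {}
    for label, position in sources.items():
        # Anything inside the bubble is discarded, so it is never recorded.
        if math.dist(position, zone_center) > zone_radius_m:
            kept[label] = position
    return kept


# Hypothetical positions: one talker at the desk, one across the room.
sources = {"desk talker": (0.2, 0.1), "far talker": (2.0, 0.0)}
recorded = sources_outside_zone(sources, zone_center=(0.0, 0.0))
print(sorted(recorded))
```

Here only the far talker would be kept. In practice the hard part is the step this sketch skips: locating each voice and separating its audio stream in the first place.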

Realistically, static distributed mic arrays may start being implemented in smart room or smart home designs, where they might make it easy to isolate voice-control instructions from various areas. Like listening only to noises from the sofa to control the TV, perhaps, or maybe even picking out drinks orders from people standing at the bar in a noisy venue.

The paper is open access at the journal Nature Communications.

Source: University of Washington