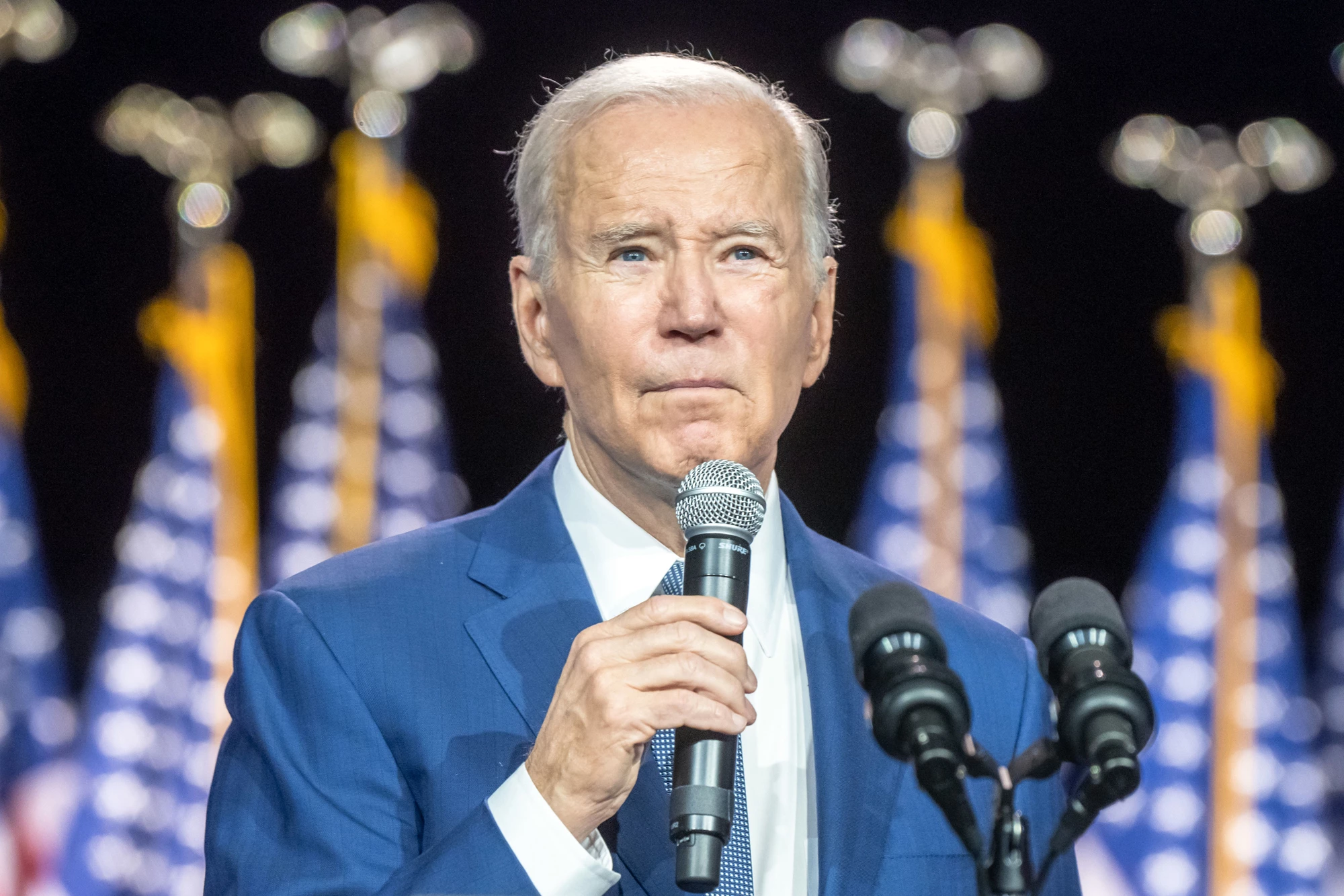

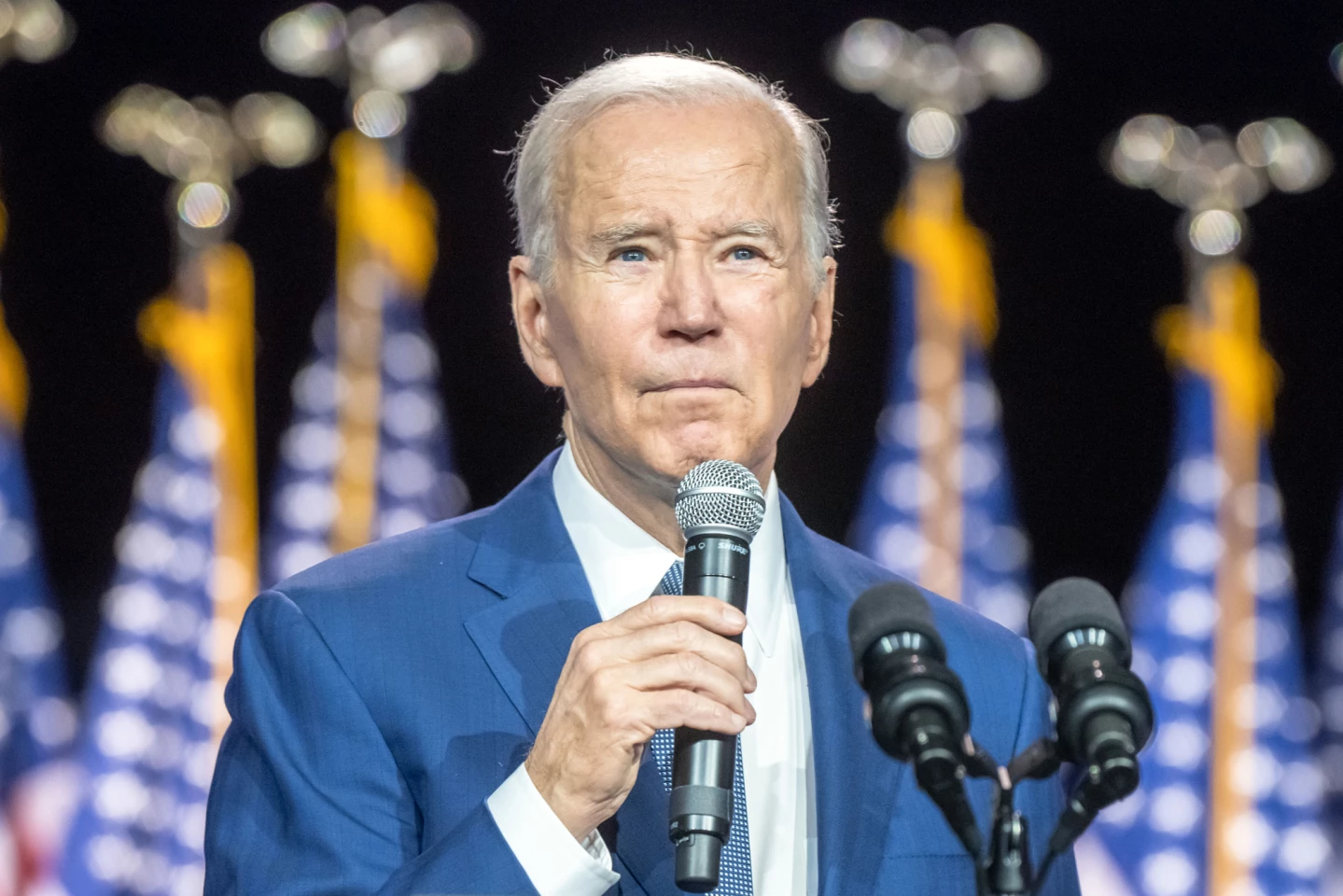

In the wake of US President Joe Biden seemingly calling New Hampshire residents to tell them not to vote in the state’s first-in-the-nation presidential primary, the Federal Communications Commission (FCC) announced on February 8 that, effective immediately, robocalls made using AI-generated voices are illegal.

When phones started ringing in New Hampshire homes on January 21, two days before the state’s 2024 presidential primary, many residents probably weren’t expecting to hear President Biden tell them to stay home and save their vote for the November election. Of course, it wasn’t the real Biden; it was a robocall using an AI-generated voice. But some recipients were likely duped.

In response to the hoax, on February 6, the FCC issued a cease-and-desist letter to Lingo Telecom, a Texas company that carried the robocalls on its phone network, and to another Texas entity, Life Corporation, which allegedly made the robocalls. Two days later, the Commission announced a unanimously adopted Declaratory Ruling that calls made with AI-generated voices are “artificial” and, therefore, fall under the restrictions of the Telephone Consumer Protection Act (TCPA). The ruling took effect the day it was announced.

“Bad actors are using AI-generated voices in unsolicited robocalls to extort vulnerable family members, imitate celebrities, and misinform voters,” said FCC Chairwoman Jessica Rosenworcel. “We’re putting the fraudsters behind these robocalls on notice. State Attorneys General will now have new tools to crack down on these scams and ensure the public is protected from fraud and misinformation.”

Previously, state attorneys general could only target the outcome of an AI-voice-generated robocall: the scam or fraud the robocaller attempted or carried out. With this ruling, using an AI-generated voice in a robocall is itself illegal, expanding the legal avenues available to law enforcement agencies to hold perpetrators accountable. Now, before placing a robocall that uses an AI-generated voice, a caller must have the express written consent of the person being called.

Robocalls have been annoying people for a long time without the assistance of AI. But rapid improvements in AI over the last few years mean that AI-voice-generated robocalls now sound far more realistic. In the hands of a ‘bad actor,’ that level of believability makes it more likely that someone receiving an AI-voice-generated call will hand over their personal details, Social Security number, or credit card number.

A video released about a year ago by software company ElevenLabs, showing off its voice conversion technology, gives you an idea of what AI is capable of, and the technology is only going to get better. The more it improves, the more difficult it will be to detect AI-generated content.

That someone generated a realistic-sounding replica of the voice of a figure as prominent as Biden shone a great big spotlight on the problem. While the FCC had already launched its Notice of Inquiry into robocalls by the time ‘Biden’ was making phone calls, the incident likely underscored for the Commission the threat that AI impersonation of political figures poses to democracy. The Associated Press reported that 73-year-old New Hampshire resident Gail Huntley was convinced the voice on the other end of the line was the President’s, realizing it was a scam only when what ‘Biden’ was saying stopped making sense.

“I didn’t think about it at the time that it wasn’t his real voice,” Huntley said. “That’s how convincing it was.”

If the fake Biden had at least some New Hampshirites convinced they shouldn’t vote in an upcoming election, what’s next?

On the day it announced its ruling prohibiting AI-generated robocalls, the FCC released an online guide for consumers, educating them about deepfakes and providing 12 tips on avoiding robocall and robotext scams. “You have probably heard about Artificial Intelligence, commonly known as AI,” the guide begins. “Well, scammers have too, and they are now using AI to make it sound like celebrities, elected officials, or even your own friends and family are calling.”

Those who violate the FCC’s ruling face steep fines, issued on a ‘per call’ basis, and the Commission can take steps to block calls from phone carriers that facilitate robocalls. In addition, the TCPA allows consumers and organizations to sue robocallers to recover damages for each unwanted call.

Time will tell whether the ruling thwarts AI-voice-generated robocallers.

Source: FCC