Technology can physically change our brains as it becomes an integral part of daily life – but every time we outsource a function, we risk letting the underlying ability atrophy. What happens when that ability is critical thinking itself?

As a tail-end Gen-Xer, I've had the remarkable experience of going from handwritten rolodex entries and curly-cord rotary phones through to today's cloudborne contact lists, which let you reach people in any of a dozen ways within seconds, whatever sort of phone or device you're holding.

My generation's ability to remember phone numbers is a bit of a coccyx – the vestigial remainder of a structure no longer required. And there are plenty of those in the age of the smartphone.

The prime example is probably navigation. Reading a map, integrating it into your spatial mental plan of an area, remembering key landmarks, highway numbers and street names as navigation points, and then thinking creatively to find ways around traffic jams and blockages is a pain in the butt, especially when your phone can do it all on the fly, taking traffic, speed cameras and current roadworks into account to optimize the route.

But if you don't use it, you lose it; the brain can be like a muscle in that regard. Outsourcing your spatial abilities to Apple or Google has real consequences – studies have now shown in an "emphatic" manner that increased GPS use correlates with a steeper decline in spatial memory. And spatial memory appears to be so important to cognition that another research project was able to predict which suburbs are more likely to have a higher proportion of Alzheimer's patients with nearly 84% accuracy, just by ranking how navigationally "complex" the area was.

The "use it or lose it" idea becomes particularly scary in 2025 when we look at generative Large Language Model (LLM) AIs like ChatGPT, Gemini, Llama, Grok, DeepSeek, and hundreds of others that are improving and proliferating at astonishing rates.

Among a thousand other uses, these AIs more or less allow you to start outsourcing thinking itself. The concept of cognitive offloading, pushed to an absurd – but suddenly logical – extreme.

They've only been widely used for a couple of years at this point, during which they've exhibited an explosive rate of improvement, but many people already find LLMs an indispensable part of daily life. They're the ultimate low-cost or no-cost assistant, making encyclopedic (if unreliable) knowledge available in a usable format, at speeds well beyond human thought.

AI adoption rates are off the charts; according to some estimates, humanity is jumping on the AI bandwagon considerably faster than it got on board with the internet itself.

But what effects on the brain can we expect as the global population continues to outsource more and more of its cognitive function? Does AI accelerate humanity towards Idiocracy faster than Mike Judge could've imagined?

A team of Microsoft researchers has made an attempt to get some early information onto the table and answer these kinds of questions. Specifically, their study attempted to assess the effect of generative AIs on critical thinking.

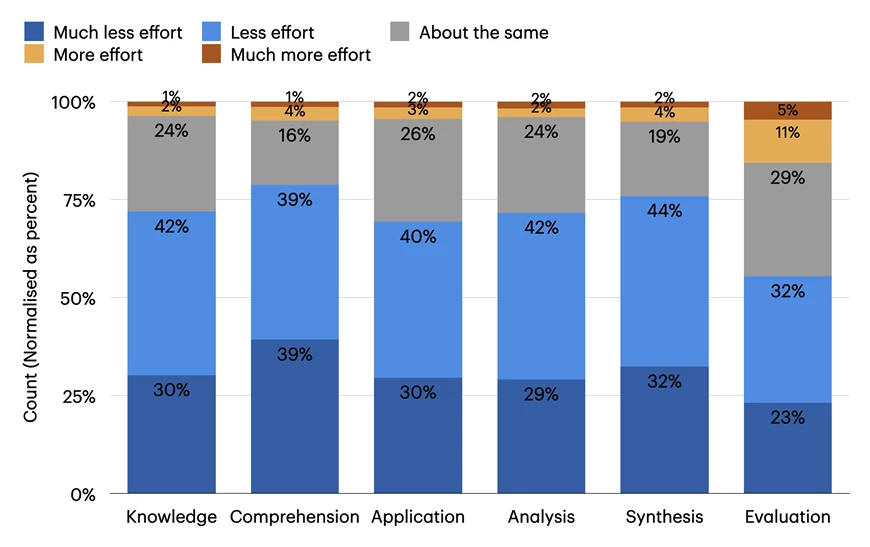

Without long-term data at hand, or objective metrics to go by, the team surveyed a group of 319 "knowledge workers," who were asked to self-assess their mental processes over a total of 936 tasks. Participants were asked when they engaged critical thinking during these tasks, how they enacted it, whether generative AI affected the effort of critical thinking, and to what extent. They were also asked to rate their confidence in their own ability to do these tasks, and their confidence in the AI's ability.

The results were unsurprising; the more the participants believed in the AI's capability, the less critical thinking they reported.

Interestingly, the more the participants trusted their own expertise, the more critical thinking they reported – but the nature of the critical thinking itself changed. People weren't solving problems themselves as much as checking the accuracy of the AI's work, and "aligning the outputs with specific needs and quality standards."

Does this point us toward a future as a species of supervisors over the coming decades? I doubt it; supervision itself strikes me as the sort of thing that'll soon be easy enough to automate at scale. And that's the new problem here; cognitive offloading was supposed to get our minds off the small stuff so we can engage with the bigger stuff. But I suspect AIs won't find our "bigger stuff" much more challenging than our smaller stuff.

Humanity becomes a God of the Gaps, and those gaps are already shrinking fast.

Perhaps WALL-E got it wrong; it's not the deterioration of our bodies we need to watch out for as the age of automation dawns, but the deterioration of our brains. There's no hover chair for that – but at least there's TikTok?

Let's give the last word to DeepSeek R1 today – that seems appropriate, and frankly I'm not sure I've got any printable words to follow this. "I am what happens," writes the Chinese AI, "when you try to carve God from the wood of your own hunger."

Quote posted by Katan'Hya (@KatanHya), January 27, 2025, attributed to DeepSeek R1.

Source: Microsoft Research