A team at the Massachusetts Institute of Technology (MIT) is exploring whether the artificial intelligence in self-driving cars can classify the personalities of the human drivers around them.

The team at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) hopes that accurate estimates of human drivers' personalities will help autonomous cars better predict those drivers' actions. That could improve safety in a world where AI-piloted cars share the road with vehicles still driven by humans.

Current AI for self-driving cars largely assumes that all humans behave the same way, and it must spend considerable effort compensating when they don't. In practice this means erring on the side of caution, which can create long waits at four-way stops, for example, while the AI works out when to proceed. That caution reduces the chance of a collision in the intersection, but it can create other dangers as drivers behind and around the waiting car react to its highly conservative behavior.

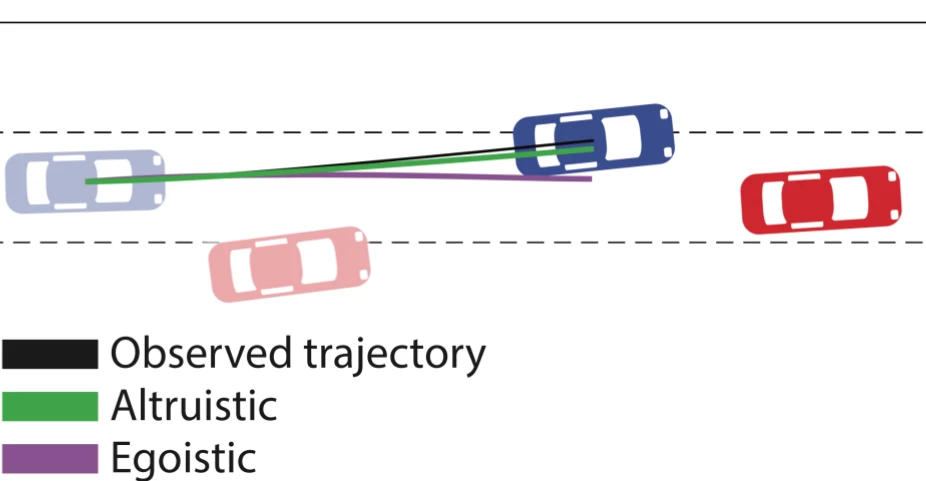

A new paper from the CSAIL team outlines how methods from social psychology and game theory can be used to classify human drivers in a way that's useful to AI. Using a measure called Social Value Orientation (SVO), each driver is rated by the degree to which they are egoistic ("selfish") or altruistic and cooperative ("prosocial"). The aim is to train the AI to do what every teenager learning to drive already does unconsciously: assign an SVO rating to the people behind the wheel, assess risk on that basis, and adjust its own driving accordingly.

In simulated tests in which the computer was fed short snippets of movement mimicking the behavior of other cars, the team improved the AI's prediction of those cars' movements by 25 percent. In a left-turn simulation, for example, the computer could more accurately assess whether it was safe to enter an intersection based on predictions of how prosocial the oncoming driver was likely to be.
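The article does not describe the model's internals. As a minimal sketch, assuming SVO is expressed as an angle φ that weights a driver's own payoff against another driver's (a common formulation in the SVO literature), the left-turn scenario might be modeled as below. The function names, the reward values, and the π/8 cut-off between "egoistic" and "prosocial" are all hypothetical illustrations, not the CSAIL team's code.

```python
import math


def svo_utility(reward_self: float, reward_other: float, phi: float) -> float:
    """Combine a driver's own reward with another driver's, weighted by SVO angle phi (radians).

    phi = 0    -> only the driver's own reward counts (egoistic)
    phi = pi/4 -> own and other's reward count equally (prosocial)
    """
    return math.cos(phi) * reward_self + math.sin(phi) * reward_other


def svo_label(phi: float) -> str:
    # Hypothetical cut-off halfway between the egoistic (0) and prosocial (pi/4) anchors.
    return "prosocial" if phi >= math.pi / 8 else "egoistic"


def predict_action(options: dict[str, tuple[float, float]], phi: float) -> str:
    # Assume the driver picks the option with the highest SVO-weighted utility.
    return max(options, key=lambda name: svo_utility(*options[name], phi))


# Hypothetical rewards for the oncoming driver at an intersection where
# another car is waiting to turn left: (own reward, left-turner's reward).
options = {"go": (1.0, 0.0), "yield": (0.6, 1.0)}

print(predict_action(options, 0.0))          # go
print(predict_action(options, math.pi / 4))  # yield
```

With the same two options, an egoistic driver (φ = 0) is predicted to go, while a prosocial driver (φ = π/4) is predicted to yield. In this sketch, that difference is what would let a turning car decide whether entering the intersection is safe.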

The team is still developing the algorithms used to assess other drivers, so the system is not yet ready for real-world use. Still, the safety implications of a working SVO system in a vehicle, even one that isn't self-driving, could be significant. A car entering a driver's blind spot, for example, could be assessed for SVO to set the level of warning given to the driver. A rear-view mirror alert about an approaching aggressive driver could also help drivers react better to seemingly erratic or aggressive behavior.

"Creating more human-like behavior in autonomous vehicles (AVs) is fundamental for the safety of passengers and surrounding vehicles, since behaving in a predictable manner enables humans to understand and appropriately respond to the AV's actions," says Wilko Schwarting, an MIT graduate student and the paper's lead author.

The MIT team plans to extend the research by applying SVO modeling to pedestrians, cyclists, and other road users. It also plans to investigate whether the approach could apply to robotic systems beyond cars, such as household robots.

The paper is slated for publication this week in the Proceedings of the National Academy of Sciences.

Source: MIT