Our knowledge of the large-scale structure of the Universe is gradually taking shape. However, our improved vision is mostly being statistically squeezed from huge data sets. Working backward from a statistical analysis to a putative fact about the (singular) Universe, to which statistics do not apply on a cosmological scale, is a dicey business. A case in point is a recent look at the biggest known structures in the Universe – large quasar groups.

A quasar is a very energetic active region at the center of a galaxy. It is typically about the size of the Solar System, with an energy output roughly 1000 times that of the entire Milky Way.

At present, the largest structures in the Universe appear to be collections of quasars that form large quasar groups (LQGs). LQGs span hundreds of megaparsecs (a megaparsec is a million parsecs, and a parsec is about 3.26 light years), and they have been proposed as the precursors of the sheets, walls, and filaments of galaxies and dark matter that fill the present-day Universe.

Early this year, Professor R.G. Clowes and his research group at the University of Central Lancashire in the UK reported that their analysis of quasar locations and distances from the Sloan Digital Sky Survey had identified a candidate for a very large quasar group (now referred to as the Huge-LQG) at a distance that required 9 billion years for its light to reach Earth.

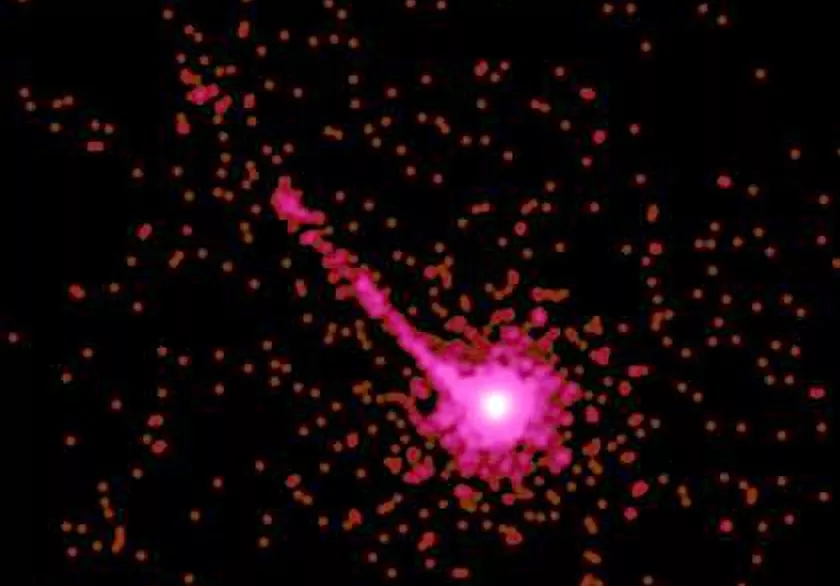

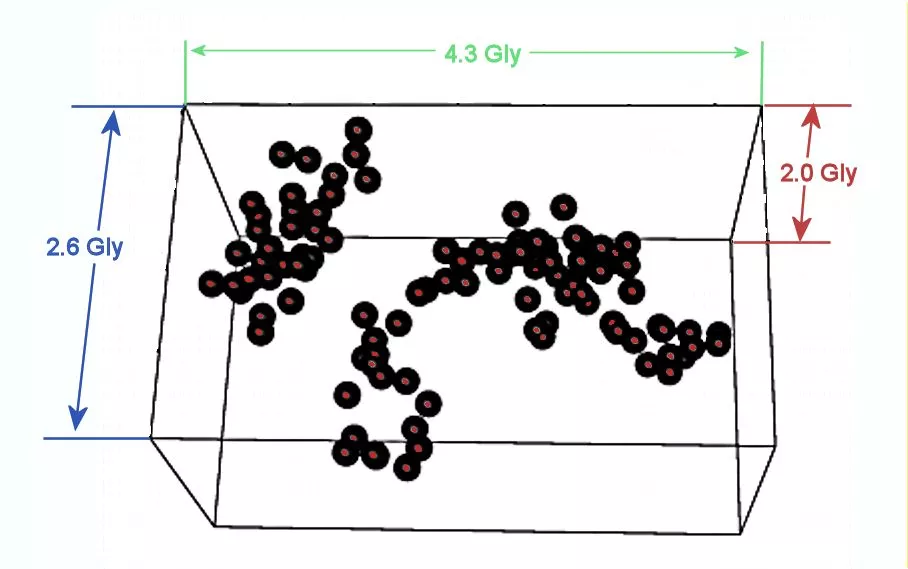

The Huge-LQG contains 73 quasars spread over a region measuring some 1.24 × 0.64 × 0.37 gigaparsecs, which would make it the largest known structure in the Universe. Their analysis suggested that the quasars in the cluster are closer together than would be expected if their positions were random.
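For a sense of scale, the quoted dimensions can be converted to light years using the figure of about 3.26 light years per parsec. The sketch below is a plain unit conversion in Python; the function name is hypothetical, and the result says nothing about how long light would take to cross the structure in an expanding universe.

```python
# 1 parsec is about 3.26 light years, so 1 gigaparsec (1e9 pc)
# is about 3.26 billion light years.
LY_PER_PARSEC = 3.26


def gpc_to_billion_ly(gpc):
    """Convert a length in gigaparsecs to billions of light years."""
    return gpc * LY_PER_PARSEC


huge_lqg_gpc = (1.24, 0.64, 0.37)  # dimensions quoted for the Huge-LQG
huge_lqg_bly = [round(gpc_to_billion_ly(d), 2) for d in huge_lqg_gpc]
print(huge_lqg_bly)  # [4.04, 2.09, 1.21]
```

So the long axis alone comes to roughly 4 billion light years.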

Let's take a quick look at how clusters of quasars were identified. A sphere of a given size (100 Mpc diameter spheres were used) is drawn about the location of a quasar. Any other quasars that fall inside that sphere are considered part of a cluster with the first quasar, and similar spheres are drawn about them. When no more quasars are caught within the latest generation of spheres, the extent of the cluster is defined by the combined volume of all its spheres. This is sometimes called a friends-of-friends algorithm.
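A minimal Python sketch of the friends-of-friends idea follows. It is not Clowes' code. It assumes each quasar is a point in a 3D Cartesian box and that a neighbour counts as a friend if it lies within `linking_length` of any current group member (playing the role of the sphere drawn about that quasar), and it uses a brute-force breadth-first search. The function name, parameter name, and toy coordinates are hypothetical.

```python
import math
from collections import deque


def friends_of_friends(points, linking_length):
    """Group 3D points into friends-of-friends clusters.

    Two points are friends if they lie within linking_length of each other;
    a group is every point reachable through a chain of friends.
    Brute force, O(n^2): fine for a sketch, too slow for a real survey.
    """
    group_of = [-1] * len(points)  # group label per point, -1 = not yet assigned
    groups = []
    for start in range(len(points)):
        if group_of[start] != -1:
            continue  # already swept into an earlier group
        label = len(groups)
        group_of[start] = label
        members = [start]
        queue = deque([start])
        # Breadth-first search: each pass "draws a sphere" around a member
        # and pulls in any unassigned point that falls inside it.
        while queue:
            i = queue.popleft()
            for j in range(len(points)):
                if group_of[j] == -1 and math.dist(points[i], points[j]) <= linking_length:
                    group_of[j] = label
                    members.append(j)
                    queue.append(j)
        groups.append(members)
    return groups


toy = [(0, 0, 0), (60, 0, 0), (120, 0, 0), (500, 500, 500)]
print(friends_of_friends(toy, 100))  # [[0, 1, 2], [3]]
print(friends_of_friends(toy, 50))   # [[0], [1], [2], [3]]
```

With a linking length of 100, the point at 120 joins the group through the point at 60 even though it is 120 units from the first point. That chaining is what lets friends-of-friends groups grow long and filamentary.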

This procedure is terribly sensitive to the sphere size chosen to search a region of space for clusters. The map of the Huge-LQG above (which also shows a second LQG at the upper left) draws each quasar inside a sphere two-thirds the size of the spheres used to define the cluster. Had spheres of that smaller size been used in the cluster search, the single cluster would have split into a number of smaller groupings. At the other extreme, if the sphere size chosen for the study were increased by only 20 percent, the algorithm would identify an LQG spanning the entire data set. It is difficult to know which set of clusters, if any, is real.
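The sensitivity to sphere size can be explored with a small experiment. The Python sketch below uses uniformly random points in a cube (hypothetical numbers, no real quasar data) and prints the size of the largest friends-of-friends group as the linking length grows. Because a longer linking length only ever adds links, the largest group can only grow; in random data like this it typically jumps from a handful of points to most of the sample over a fairly narrow range of linking lengths. The point count, box size, seed, and function name are all assumptions for illustration.

```python
import math
import random


def largest_group_size(points, linking_length):
    """Size of the largest friends-of-friends group (union-find version)."""
    parent = list(range(len(points)))

    def find(i):
        # Follow parent links to the group's root, halving the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) <= linking_length:
                parent[find(i)] = find(j)  # merge the two groups

    sizes = {}
    for i in range(len(points)):
        root = find(i)
        sizes[root] = sizes.get(root, 0) + 1
    return max(sizes.values())


# Hypothetical setup: 300 uniformly random points in a cube of side 1000
# (think Mpc), with a fixed seed so the run is repeatable.
rng = random.Random(42)
box = 1000.0
points = [tuple(rng.uniform(0, box) for _ in range(3)) for _ in range(300)]

for length in (60, 90, 120, 150, 180):
    print(f"linking length {length}: largest group has "
          f"{largest_group_size(points, length)} of {len(points)} points")
```

The exact numbers depend on the seed and on the density of points, which is part of the point: the "size" of the biggest structure found is as much a property of the chosen linking length as of the data.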

The Huge-LQG, if validly identified, was claimed to violate the Cosmological Principle (CP). Roughly speaking, the CP says that the Universe looks about the same from any position within it, so that there are no special locations. At first glance, the existence of such a large structure appears to violate that principle.

If so, nothing much would actually change in theoretical cosmology, as the CP only provides a starting point – the structures of more complex cosmologies are treated as perturbations around the homogeneous and isotropic universe described by the CP. In particular, this has no bearing on the occurrence (or not) of a Big Bang at the beginning of our Universe, despite the delighted claims of many online skeptics and "creation theory" sites.

A key issue, however, presents itself. Is the perceived clustering of the Huge-LQG of sufficiently low probability that it reflects physics, rather than randomness? What does that even mean when we have only the one Universe, making odds of multiple outcomes meaningless?

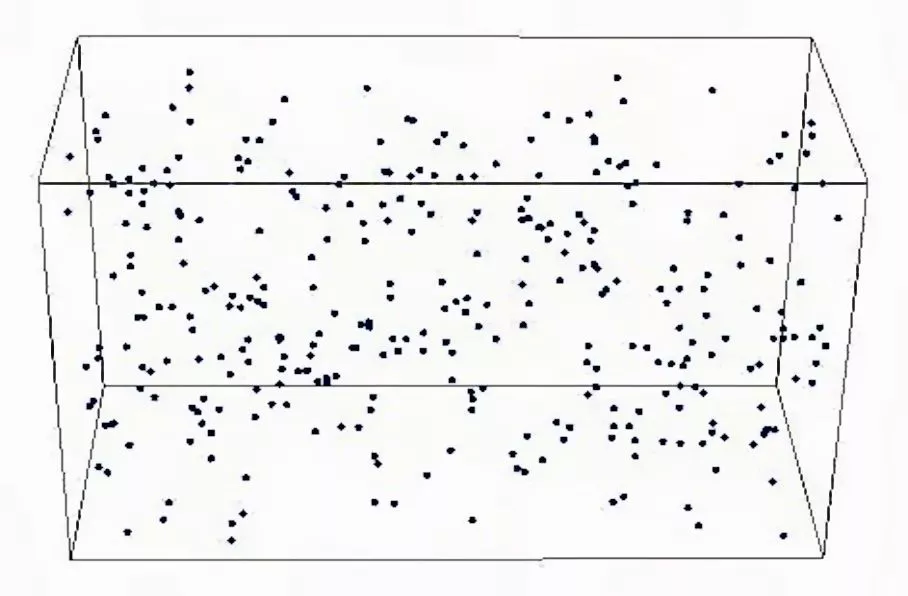

A post-doctoral researcher named Seshadri Nadathur, then at the University of Bielefeld, decided to take a closer look at the Huge-LQG. His map above reproduces Clowes' map of the Huge-LQG but includes all the quasars in the surrounding region, and it shows no obvious sign of the clustering that is so clear in Clowes' map.

After performing a number of statistical studies on the quasar data, and finding that small changes in the cluster-finding parameters produced extreme changes in the Huge-LQG's membership and shape, he decided to determine the probability that apparent clusters the size of the Huge-LQG would appear in a random assortment of quasars. He set up 10,000 regions identical in size to the one studied by Prof. Clowes and filled them with randomly distributed quasars having the same position statistics as the actual quasars in the sky.

He found that 850 (8.5 percent) of the 10,000 simulated, randomly populated regions contained clusters larger than the Huge-LQG. His conclusion was that, in the absence of better data, the Huge-LQG is best explained as the product of a cluster-finding algorithm, biased toward finding clusters, applied to a spatially random scattering of quasars.
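The logic of this kind of test can be sketched in a few lines of Python. This is not Nadathur's code. His random quasars matched the position statistics of the real survey and he used his own measure of cluster size, whereas this sketch assumes uniformly random positions in a cube and measures "larger" by member count; both are simplifications. The function names and parameter values are hypothetical, and the union-find helper is repeated so the block runs on its own. In this framing, Nadathur's result corresponds to a fraction of 850 / 10,000 = 0.085.

```python
import math
import random


def largest_group_size(points, linking_length):
    """Size of the largest friends-of-friends group (union-find version)."""
    parent = list(range(len(points)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) <= linking_length:
                parent[find(i)] = find(j)  # merge the two groups
    sizes = {}
    for i in range(len(points)):
        root = find(i)
        sizes[root] = sizes.get(root, 0) + 1
    return max(sizes.values())


def fraction_exceeding(observed_size, n_points, box, linking_length, trials, seed=0):
    """Fraction of random realizations whose largest group beats observed_size.

    Each realization scatters n_points uniformly in a cube of side box
    (a simplification: a real test would mimic the survey's selection).
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        pts = [tuple(rng.uniform(0, box) for _ in range(3)) for _ in range(n_points)]
        if largest_group_size(pts, linking_length) > observed_size:
            hits += 1
    return hits / trials


# Hypothetical parameters, chosen only so the sketch runs quickly.
frac = fraction_exceeding(observed_size=15, n_points=200, box=1000.0,
                          linking_length=100.0, trials=20)
print(f"{frac:.0%} of random realizations contain a larger group")
```

A small fraction would suggest the observed group is hard to get by chance; a fraction like 8.5 percent means that comparable "structures" turn up routinely in purely random data.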

What, if anything, does this mean? Nothing, in a way, as the argument on both sides goes backward from a statistical analysis of possible universes to what an observation means in this solitary Universe we call home. This brings up the concept of naturalness in science.

Most people feel more comfortable with an explanation whose predictions don't change drastically when its parameters are changed. For example, in Newton's theory of gravity, physical behavior doesn't change qualitatively when the gravitational constant is changed a bit; orbits just swing a little closer in or farther out. Perhaps the most famous reaction to arguments based on naturalness is Niels Bohr's irritated comment asking Einstein to stop telling God what to do.
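One way to make the Newtonian example concrete is a worked equation. It assumes a circular orbit of radius r about a mass M and holds the orbiting body's specific angular momentum h fixed while G changes; that choice is illustrative, not something the text specifies.

```latex
\frac{v^2}{r} = \frac{GM}{r^2}
\;\Rightarrow\; v^2 = \frac{GM}{r},
\qquad h = r v \;\Rightarrow\; h^2 = G M r
\;\Rightarrow\; r = \frac{h^2}{G M},
\qquad \frac{\delta r}{r} \approx -\,\frac{\delta G}{G}.
```

A 1 percent increase in G shrinks the orbit by about 1 percent: a small, smooth change rather than a qualitative one.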

On the other hand, when an explanation requires not only a physical mechanism but also a statistically unlikely set of parameters, believing it becomes harder. Such explanations are often called "fine-tuned," usually with derision.

Whether one embraces or eschews naturalness in physical theory, the Universe doesn't care one way or the other; it simply embodies the natural. Naturalness is a statistical, philosophical, and psychological notion that provides no authority for understanding a single entity like our Universe. Despite this, many scientists do use naturalness as a guide when making choices (usually when the guidance provided by actual data fades), and it may still lead to a path out of a foggy maze.

Good scientists are skeptics, especially of what they themselves believe. An important discovery or new concept is quickly studied and criticized by the herd, with the goal of refuting or refining. However, if the Universe says your description of its operations is correct, it doesn't matter if a committee of geniuses thinks you are wrong. The opposite is also true. What makes science work is that the final authority is the Universe, which cannot be swayed, influenced, or confused. That outbids intellectual consensus, sloth, error, deafness, and ignorance any day of the week.

Sources: University of Central Lancashire [PDF] and University of Bielefeld [PDF]