In addition to human divers equipped with sonar cameras, the U.S. Navy has trained dolphins and sea lions to search for bombs on and around vessels. All of these methods are expensive, and none performs well in every environment. Robots would seem to be the obvious answer, and underwater robots have been the focus of much research and development in recent years. Now researchers at MIT have developed new algorithms that vastly improve the navigation and feature-detecting capabilities of these robots.

Franz Hover, the Finmeccanica Career Development Assistant Professor in MIT's Department of Mechanical Engineering, and graduate student Brendan Englot developed the algorithms to program a robot called the Hovering Autonomous Underwater Vehicle (HAUV). Their ultimate goal is a completely autonomous robot that can navigate and map cloudy underwater environments with no prior knowledge of them, and detect mines as small as 10 cm in diameter.

Getting a robot to build a complete picture of a massive structure like a naval combat vessel, down to small features such as bolts, struts and any mines, is no easy task. And because a mine only 10 centimeters (3.9 in) in diameter can still seriously damage a ship, attention to detail is paramount.

“It’s not enough to just view it from a safe distance,” Hover says. “The vehicle has to go in and fly through the propellers and the rudders, trying to sweep everything, usually with short-range sensors that have a limited field of view.”

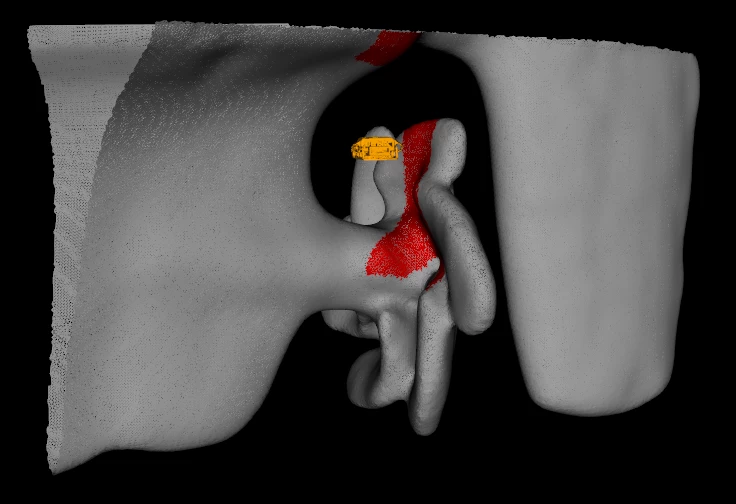

To sweep a ship’s hull in detail, the researchers took a two-stage approach. First, the robot swims a square around the hull at a safe distance of 10 meters (33 ft), using its sonar camera to gather data from which a grainy point cloud is built. A ship’s large propeller can be identified at this low resolution, but a small mine cannot.
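One way to picture how such a point cloud comes together: each sonar return is a range and a bearing measured from the vehicle, and placing those returns in a common world frame using the vehicle's position and heading turns them into 3D points. The sketch below is a simplified illustration, not the researchers' processing pipeline. It assumes a hypothetical planar sonar that reports `(range, bearing)` pairs in the horizontal plane at the vehicle's depth and a perfectly known vehicle pose; the function name `returns_to_points` is made up, and real imaging sonar also has an elevation ambiguity that this ignores.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def returns_to_points(
    x: float, y: float, depth: float, heading: float,
    returns: List[Tuple[float, float]],
) -> List[Point]:
    """Convert (range, bearing) sonar returns into world-frame points.

    Hypothetical simplification: the sonar scans a horizontal plane at the
    vehicle's depth, bearings are in radians measured counterclockwise from
    the vehicle's heading, and the pose (x, y, depth, heading) is exact.
    """
    points = []
    for rng, bearing in returns:
        angle = heading + bearing  # direction of the return in the world frame
        points.append((x + rng * math.cos(angle),
                       y + rng * math.sin(angle),
                       depth))
    return points


# Vehicle at the origin, 2 m deep, facing +y (heading of 90 degrees), seeing
# the hull 10 m dead ahead and 10 m away at 30 degrees to its left.
cloud = returns_to_points(0.0, 0.0, -2.0, math.pi / 2,
                          [(10.0, 0.0), (10.0, math.radians(30))])
for p in cloud:
    print(tuple(round(c, 2) for c in p))
```

Accumulating these points as the vehicle circles the hull builds up the cloud; its quality then depends on the sensor's resolution and on how well the vehicle's pose is known.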

The point cloud also doesn’t necessarily tell the robot where a ship’s structure begins and ends, which is a problem if the robot is to avoid colliding with the propellers. So the researchers adapted computer-graphics algorithms to the sonar data, turning the point cloud into a solid, three-dimensional “watertight” mesh model of the ship.
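“Watertight” has a specific meaning in computer graphics: the mesh is a closed surface with no holes, so it cleanly separates inside from outside, which is what lets a planner tell where the hull ends. A common test for a triangle mesh is that every edge is shared by exactly two triangles that traverse it in opposite directions. The sketch below only checks that property; it is not the reconstruction algorithm the researchers adapted, and the name `is_watertight` is hypothetical.

```python
from collections import Counter
from typing import Sequence, Tuple

Triangle = Tuple[int, int, int]  # indices of three mesh vertices


def is_watertight(triangles: Sequence[Triangle]) -> bool:
    """True if every directed edge appears exactly once and so does its reverse.

    That means each edge is shared by exactly two consistently oriented
    triangles, i.e. the surface is closed with no holes or dangling faces.
    """
    directed = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            directed[(u, v)] += 1
    # Counter returns 0 for a missing reverse edge without inserting it.
    return bool(triangles) and all(
        count == 1 and directed[(v, u)] == 1
        for (u, v), count in directed.items()
    )


# A tetrahedron with consistently oriented faces is closed.
tetra = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
print(is_watertight(tetra))       # True: closed surface
print(is_watertight(tetra[:-1]))  # False: one missing face leaves a hole
```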

With a solid model to work with, the robot moves on to the second stage: it swims closer to the ship, aiming to cover every point in the mesh at a spacing of 10 centimeters.
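Covering the mesh at a fixed spacing first means turning its surface into a set of target points. The sketch below shows one simple, hypothetical way to do that for a single triangle: lay a barycentric grid over it, choosing the number of subdivisions so that neighboring grid points are never more than `spacing` apart. Running it over every triangle (and merging points duplicated along shared edges) would give a target set for the close-range pass. The function name and approach are illustrative assumptions; the researchers' own sampling scheme may differ.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]


def sample_triangle(a: Vec, b: Vec, c: Vec, spacing: float = 0.10) -> List[Vec]:
    """Grid points on triangle abc with neighbors at most `spacing` meters apart."""
    longest = max(math.dist(a, b), math.dist(b, c), math.dist(c, a))
    n = max(1, math.ceil(longest / spacing))  # subdivisions along each edge
    points = []
    for i in range(n + 1):
        for j in range(n + 1 - i):
            s, t = i / n, j / n  # barycentric weights toward b and c
            points.append(tuple(a[k] + s * (b[k] - a[k]) + t * (c[k] - a[k])
                                for k in range(3)))
    return points


# A right triangle with 1 m legs: the 1.41 m hypotenuse needs 15 subdivisions.
pts = sample_triangle((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(len(pts))  # 136
```

Sizing the grid by the longest edge keeps every step along all three edge directions within the requested spacing, at the cost of some oversampling on thin triangles.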

The most obvious sweep pattern would cover one strip at a time, much like mowing a lawn, but the researchers came up with a more efficient approach: using optimization algorithms, the robot is programmed to sweep across the structures while taking their complicated 3D shapes into account. The researchers say this technique significantly shortens the path the robot needs to take to view the entire ship.
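Once a set of viewing positions has been chosen, ordering them into a short route is a variant of the traveling salesman problem. The sketch below uses a generic textbook heuristic as a stand-in: a greedy nearest-neighbor path refined by 2-opt, which repeatedly reverses a segment of the path whenever that makes it shorter. It is not the researchers' optimization method, and the viewpoint coordinates are random, made-up values.

```python
import math
import random
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]


def tour_length(points: Sequence[Vec], order: List[int]) -> float:
    """Length of an open path visiting the points in the given order."""
    return sum(math.dist(points[order[i]], points[order[i + 1]])
               for i in range(len(order) - 1))


def nearest_neighbor(points: Sequence[Vec], start: int = 0) -> List[int]:
    """Greedy path: always move to the closest unvisited viewpoint."""
    unvisited = set(range(len(points))) - {start}
    order = [start]
    while unvisited:
        last = points[order[-1]]
        nxt = min(unvisited, key=lambda i: math.dist(last, points[i]))
        order.append(nxt)
        unvisited.remove(nxt)
    return order


def two_opt(points: Sequence[Vec], order: List[int]) -> List[int]:
    """Reverse path segments while that strictly shortens the path (start fixed)."""
    best = order[:]
    improved = True
    while improved:
        improved = False
        for i in range(1, len(best) - 1):
            for j in range(i + 1, len(best)):
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                if tour_length(points, candidate) < tour_length(points, best) - 1e-12:
                    best = candidate
                    improved = True
    return best


random.seed(1)
viewpoints = [(random.uniform(0, 20), random.uniform(0, 5), random.uniform(-3, 0))
              for _ in range(40)]
greedy = nearest_neighbor(viewpoints)
refined = two_opt(viewpoints, greedy)
print(f"greedy: {tour_length(viewpoints, greedy):.1f} m, "
      f"2-opt: {tour_length(viewpoints, refined):.1f} m")
```

Because 2-opt only accepts strictly shorter paths, the refined route is never longer than the greedy one; recomputing the full length for each candidate keeps the code short but scales poorly, so a practical planner would evaluate only the two edges a reversal changes.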

The team has field-tested its algorithms by creating underwater models of the Curtiss, a 183-meter (600-ft) military support ship in San Diego, and the Seneca, an 82-meter (269-ft) cutter in Boston. Tests of the system are continuing this month in Boston Harbor. With the U.S. Navy also developing autonomous hull-cleaning robots, there may soon be a number of robots keeping each other company on and around the hulls of ships.

“The goal is to be competitive with divers in speed and efficiency, covering every square inch of a ship,” Englot says. “We think we’re close.”

Hover and his colleagues have detailed their approach in a paper to appear in the International Journal of Robotics Research.

The video below shows the 3D coverage path taken by the HAUV when mapping the SS Curtiss using the new algorithms.

Source: MIT