Researchers at Carnegie Mellon University have presented some remarkable audio captured by a new optical microphone system that uses cameras to see and reconstruct sonic vibrations. It can even cleanly isolate a single instrument playing in a group.

This kind of sonic isolation is extremely difficult even for high-end audio microphones, so to be able to achieve it using nothing but two cameras and a laser? It feels a bit like black magic. But the results, which you can see in the video embedded at the end of this piece, are stunning.

Here's the basic theory: sound is nothing but a series of pressure waves that travel through the air. Anything that makes sound is simply vibrating to create those pressure waves. An optical microphone is basically a camera system designed to monitor and interpret vibrations on the surface of a sound source – or even objects placed near a sound source, which vibrate in sympathy with the sound waves in the air around them.

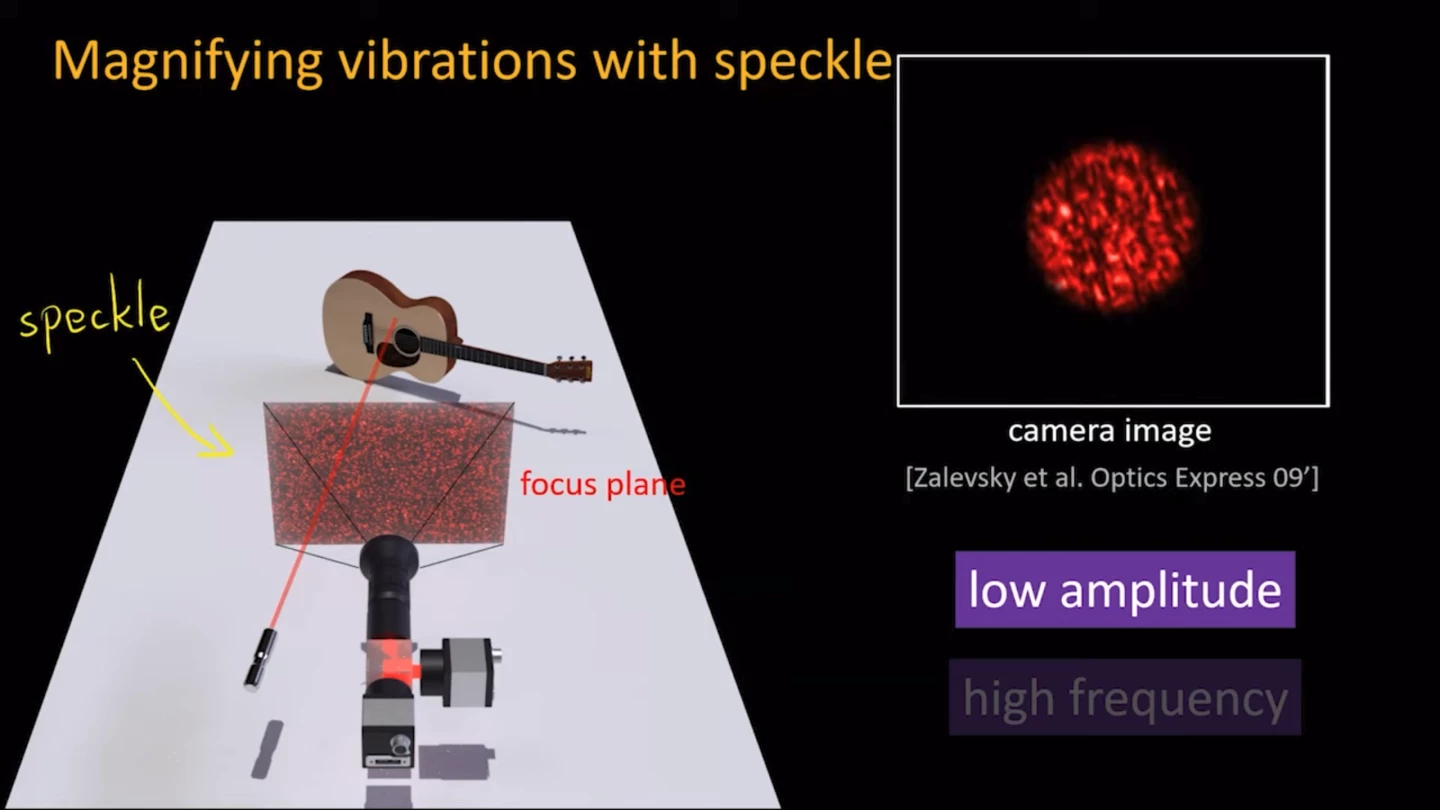

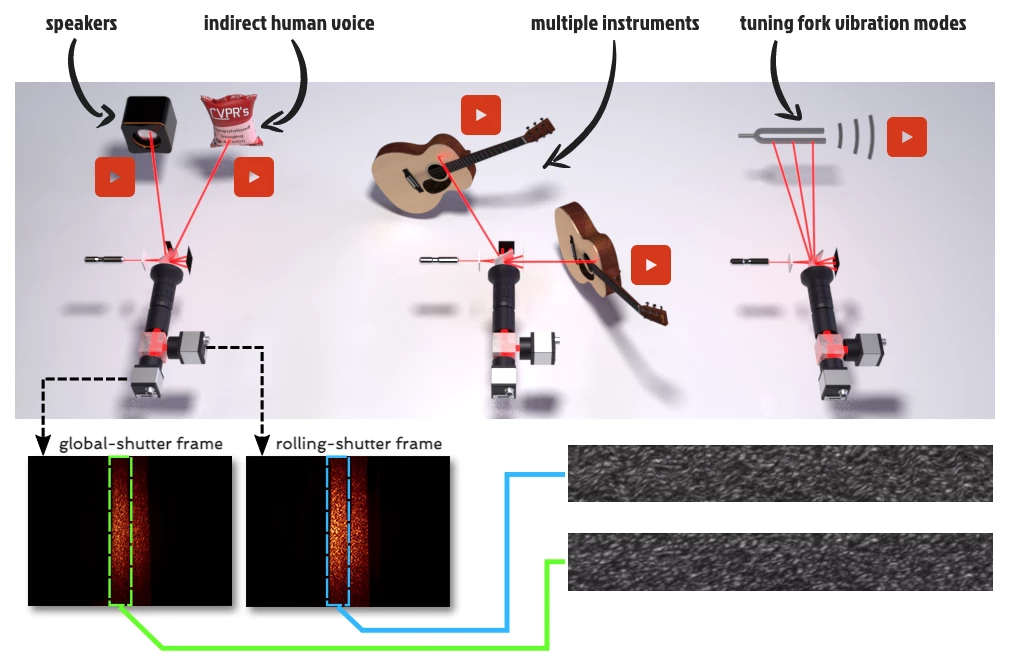

The Carnegie Mellon team's system shines a laser on the vibrating surface, creating a precise speckle pattern that distorts as the sound source vibrates. A pair of cameras records the changes in the speckle pattern at 63 frames per second, and software analyzes the speckle pattern variations in the footage across the two cameras to reconstruct an audio signal.
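For a rough feel of how software might turn a shifting speckle pattern into a motion trace, here is a minimal Python sketch. It is not the Carnegie Mellon team's algorithm. It assumes a one-dimensional speckle profile that simply slides sideways by whole pixels, and it uses plain cross-correlation to find each frame's offset. The names `estimate_shift` and `shift_pattern`, and the random test pattern, are hypothetical.

```python
import random


def center(values):
    """Subtract the mean so bright and dark speckles carry equal weight."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def estimate_shift(reference, observed, max_shift):
    """Return the whole-pixel shift that best aligns observed with reference."""
    ref = center(reference)
    obs = center(observed)
    n = len(ref)
    best_shift, best_score = 0, float("-inf")
    for shift in range(-max_shift, max_shift + 1):
        total, overlap = 0.0, 0
        for i in range(n):
            j = i - shift
            if 0 <= j < n:  # only compare pixels that overlap
                total += obs[i] * ref[j]
                overlap += 1
        score = total / overlap
        if score > best_score:
            best_shift, best_score = shift, score
    return best_shift


def shift_pattern(pattern, shift):
    """Simulate surface motion by sliding the pattern (with wrap-around)."""
    n = len(pattern)
    return [pattern[(i - shift) % n] for i in range(n)]


# Hypothetical data: a random "speckle" profile and a made-up motion trace.
rng = random.Random(0)
speckle = [rng.random() for _ in range(256)]
true_shifts = [0, 2, 3, 1, -2, -3, -1]
recovered = [
    estimate_shift(speckle, shift_pattern(speckle, s), max_shift=5)
    for s in true_shifts
]
```

In this toy setup, the list of per-frame shifts in `recovered` plays the role of a vibration trace. A real system would have to handle sub-pixel motion, two dimensions and noise.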

The 63-fps frame rate might seem counter-intuitive here. Human hearing can distinguish tones oscillating at somewhere between around 20 and 20,000 cycles per second, so even ignoring all the other challenges, a 63-fps limit on input data would seem to place an upper limit of 63 Hz, at best, on the sound this device can "see."
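Strictly speaking, the naive limit is even lower. By the Nyquist criterion, a sampler taking 63 readings per second can only represent tones up to 31.5 Hz unambiguously, and anything higher folds down to a false low frequency. A small Python sketch of that folding, with hypothetical helper names:

```python
def nyquist_limit(sample_rate_hz):
    """Highest tone a uniform sampler can represent without ambiguity."""
    return sample_rate_hz / 2.0


def aliased_frequency(tone_hz, sample_rate_hz):
    """Apparent frequency of a pure tone after uniform sampling."""
    folded = tone_hz % sample_rate_hz
    # Frequencies above the Nyquist limit mirror back into the lower half.
    return min(folded, sample_rate_hz - folded)


FRAME_RATE_HZ = 63.0  # one sample per frame, as with a plain 63-fps camera
concert_a = aliased_frequency(440.0, FRAME_RATE_HZ)
```

Sampled only once per frame, a 440 Hz concert A would show up as a slow 1 Hz wobble, which is why frame-by-frame video alone is not enough.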

Not so. In fact, this optical mic can read sound up to a remarkable 63,000 Hz thanks to some very clever use of the cameras involved. One camera uses a global shutter, meaning that it reads its entire image sensor at the same time for each frame. The other camera uses a rolling shutter, so it reads its sensor as a series of a thousand consecutive horizontal lines per frame; at 63 frames per second, that works out to around 63,000 line readings every second. The rolling shutter image therefore contains high-frequency information, which can be compared back to the global shutter image to account for things like the movement and tilt of a guitar as a musician plays. The software algorithm is clever enough to assemble a sound out of this information.
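The 63,000 figure is consistent with simply multiplying the frame rate by the number of lines per frame. The Python sketch below works through that arithmetic and treats each line as one time sample of a test tone. It assumes the lines are read out evenly across the whole frame period with no idle time between frames, which real sensors may not do, and the function names and the crude zero-crossing estimator are hypothetical stand-ins rather than the team's method.

```python
import math

FRAME_RATE_HZ = 63     # frames per second, from the article
ROWS_PER_FRAME = 1000  # rolling-shutter lines per frame, from the article


def row_sample_rate(frame_rate_hz, rows_per_frame):
    """Line readings per second if rows are spread evenly over each frame."""
    return frame_rate_hz * rows_per_frame


def row_timestamps(frame_index, frame_rate_hz, rows_per_frame):
    """Capture time of every row in one frame (assumes no readout gaps)."""
    frame_start = frame_index / frame_rate_hz
    row_period = 1.0 / row_sample_rate(frame_rate_hz, rows_per_frame)
    return [frame_start + row * row_period for row in range(rows_per_frame)]


def estimate_tone_hz(samples, timestamps):
    """Crude pitch estimate: count sign changes, two per cycle."""
    crossings = sum(
        1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0)
    )
    duration = timestamps[-1] - timestamps[0]
    return crossings / (2.0 * duration)


# Sample a 2,000 Hz test tone once per row within a single frame.
times = row_timestamps(0, FRAME_RATE_HZ, ROWS_PER_FRAME)
tone = [math.sin(2.0 * math.pi * 2000.0 * t) for t in times]
estimate = estimate_tone_hz(tone, times)
```

Under these assumptions, a single rolling-shutter frame holds enough samples for `estimate` to land close to 2,000 Hz, far above anything one reading per frame could capture.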

"We've invented a new way to see sound," said Mark Sheinin, lead author on the research paper and a post-doctoral research associate at the Illumination and Imaging Laboratory in Carnegie Mellon's Robotics Institute. "It's a new type of camera system, a new imaging device, that is able to see something invisible to the naked eye."

The team has tested this optical mic on guitar and violin, on a speaker cone, on tuning forks, and even on a Doritos bag sitting in front of a speaker and vibrating in sympathy with the ambient sound. They also used it to separate the audio from two guitars playing a duet, and from two speakers, each playing a different song beside each other.

"This system pushes the boundary of what can be done with computer vision," said co-author Matthew O'Toole, an assistant professor in the Robotics Institute. "This is a new mechanism to capture high speed and tiny vibrations, and presents a new area of research."

The team believes this tech could be used for much more than deciphering audio signals; vibration can be a key indicator of mechanical wear as faults develop in machinery, for example.

"If your car starts to make a weird sound, you know it is time to have it looked at," Sheinin said. "Now imagine a factory floor full of machines. Our system allows you to monitor the health of each one by sensing their vibrations with a single stationary camera."

Check out the optical mic setup and hear the results in the video below.

Source: Carnegie Mellon University