We are witnessing a revolution. After the stunning debut of OpenAI's DALL-E 2 image generator last year, the company opened its natural language generator, ChatGPT, up to the public at the end of November. Since then, it's spread like wildfire, amassing more than 100 million users in its first two months, making it the fastest-growing consumer application in history and the buzzword of the year.

There had been thousands of AI chatbots before, but never one like this. Here was an artificial intelligence trained on hundreds of billions of words; it has read huge numbers of books, billions of web pages and millions of Wikipedia entries – so it's ingested a broad and detailed snapshot of the entirety of human knowledge up until around June 2021, the cutoff point for the dataset on which its underlying GPT-3.5 language model was trained.

Beyond being handed this priceless treasure trove of knowledge, ChatGPT has been trained in the art of interaction using untold numbers of written human conversations, and guided by human supervisors to improve the quality of what it writes.

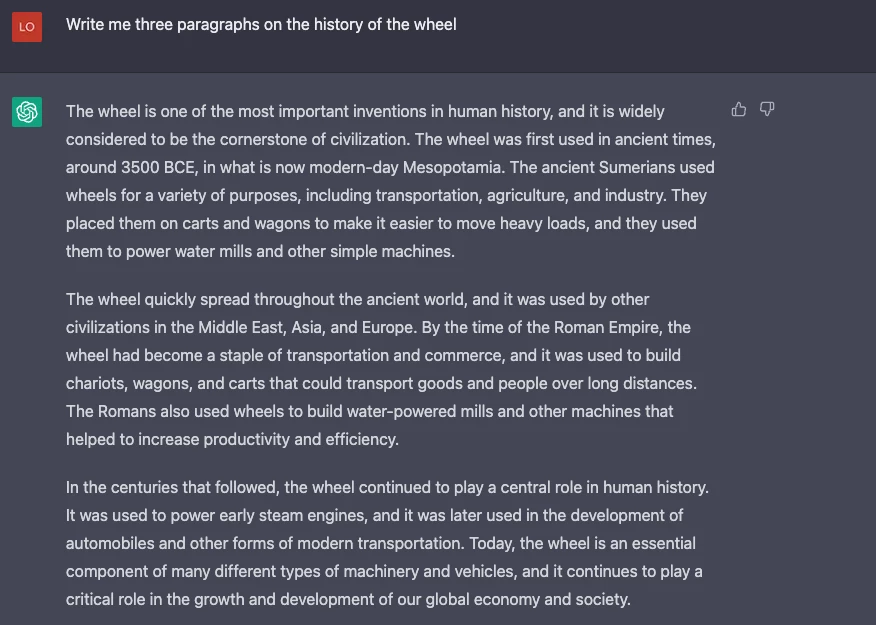

The results are staggering. ChatGPT writes as well as, or (let's face it) better than, most humans. This overgrown autocomplete button can generate authoritative-sounding prose on nearly any topic in a matter of seconds, of such quality that it's often extremely difficult to distinguish from a human writer. It formulates arguments that seem well-researched, and builds key points toward a conclusion. Its paragraphs feel organic, structured, logically connected and human enough to earn my grudging respect.

The striking thing about the reaction to ChatGPT is not just the number of people who are blown away by it, but who they are. These are not people who get excited by every shiny new thing. Clearly something big is happening.

— Paul Graham (@paulg) December 2, 2022

It remembers your entire conversation and clarifies or elaborates on points if you ask it to. And if what it writes isn't up to scratch, you can click a button for a complete re-write that'll tackle your prompt again from a fresh angle, or ask for specific changes to particular sections or approaches.

ChatGPT is an early prototype for the ultimate writer

It costs you nothing. It'll write in any style you want, taking any angle you want, on nearly any topic you want, for exactly as many words as you want. It produces enormous volumes of text in seconds. It's not precious about being edited, it doesn't get sick, or need to pick its kids up from school, or try to sneak in fart jokes, or turn up to work hungover, or make publishers quietly wonder exactly how much self-pleasuring they're paying people for in a remote work model.

Little wonder that websites like CNET, Buzzfeed and others are starting the process of replacing their human writers with ChatGPT prompt-wranglers – although there are icebergs in the water for these early adopters, since the technology still gets things flat-out wrong sometimes, and sounds confident and authoritative enough in the process that even teams of fact-checking sub-editors can't stop it from publishing "rampant factual errors and apparent plagiarism," as well as outdated information.

Despite these slight drawbacks, the dollar rules supreme, and there has never been a content-hose like this before. Indeed, it seems the main thing standing between large swaths of the publishing industry and widespread instant adoption of ChatGPT as a high-volume, low-cost author is the fear that Google might figure out how to detect AI-generated text and start penalizing offenders by tanking their search ratings.

Just in case anyone's wondering, we don't use it here at New Atlas, and have no plans to start – but we'd be fools not to see the writing on the wall. This genie is well and truly out of the bottle, and it won't take long before it can fact-check itself and improve its accuracy. It's not immediately obvious how AI-generated text can reliably be detected at this point. So enjoy your local human writers while you still can ... And throw us $20 on an ad-free subscription if you want to help keep the doors open!

ChatGPT the Creative Wordsmith

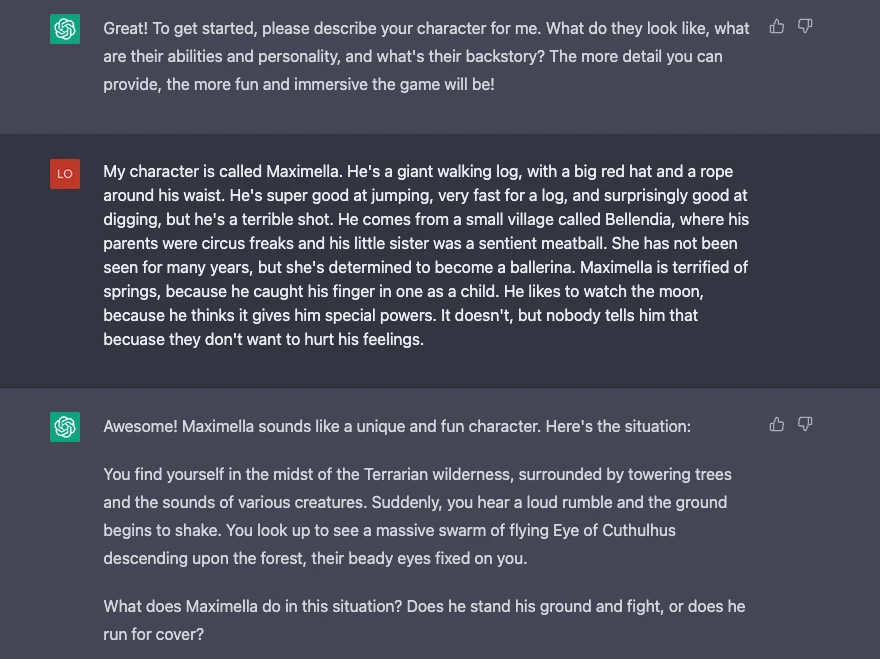

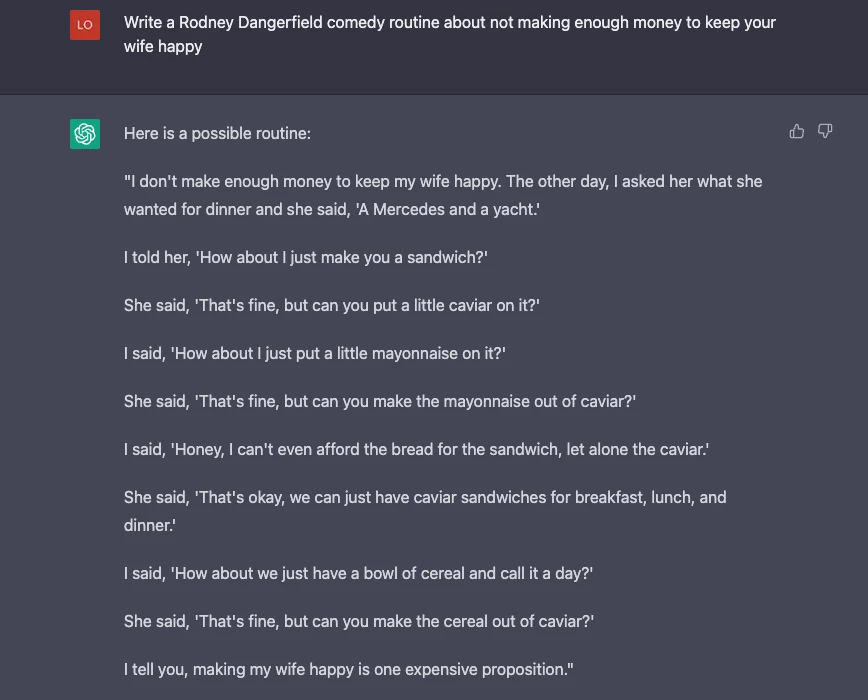

Its work certainly doesn't have to be dry and (seemingly) factual, either. ChatGPT has more than a passing understanding of more creative forms of writing as well, and will happily generate fiction too. It'll pump out custom bedtime stories for your kids, or complex choose-your-own-adventure experiences, or role-playing games about anything you like, or teen fiction, or screenplays, or comedy routines.

It'll write song lyrics for you at the drop of a hat, and if they come out a little blunt and obvious, you can reply "ok, but make that less literal" and it'll do just that. It creates flowery poetry that rhymes, sticks to the requested meter and engages a topic about as well as the average amateur poet – which frankly isn't that well, but we only have ourselves to blame on that front.

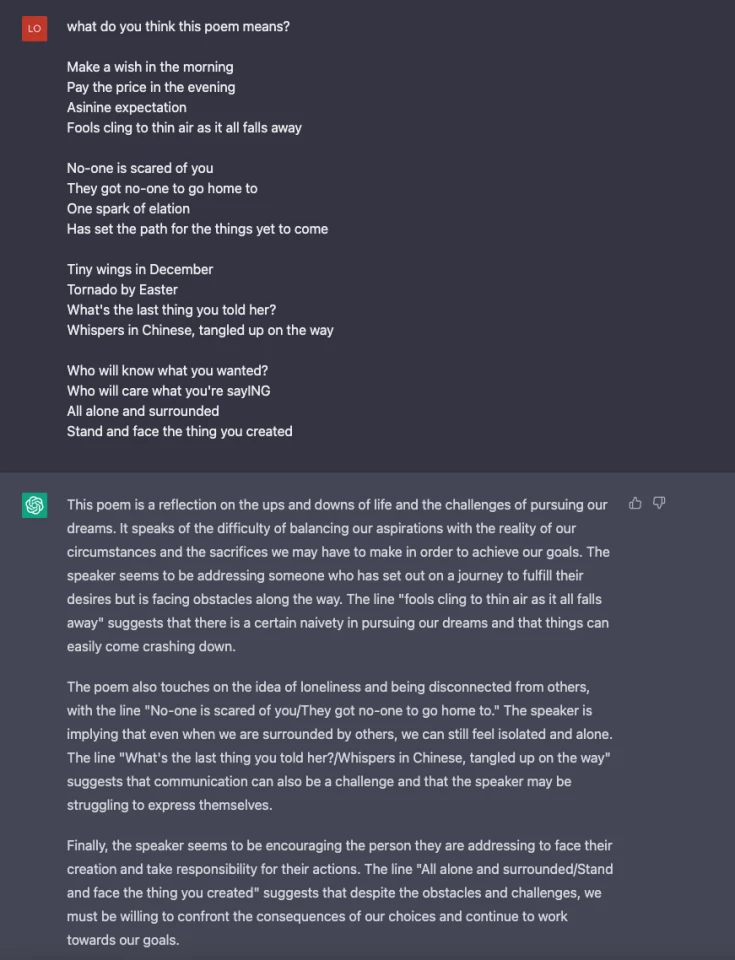

Likewise, it'll analyze, summarize or critique any kind of writing you throw into it. It'll write you an instant book report, outlining major themes, moments and character arcs, or take an abstract poem or lyric and try to distill a meaningful understanding of the content. And again, it does a pretty solid job.

Indeed, like DALL-E in the visual art world, and Google's MusicLM in the music world, ChatGPT does a pretty remarkable impersonation of creative human expression and understanding when you ask it to, quickly putting paid to the notion that art and creativity are what will separate us from the robots. These machines might not feel what we feel, but they've seen so much of our output that they can generate "creative" work that absolutely holds its own against human work.

Yes, it's derivative – but so are we, if we're honest with ourselves. The human brain is one of the greatest algorithmic black boxes we've ever seen, built on hundreds of millions of years of evolutionary trial and error and trained with a lifetime's worth of experiential data. The most "original" thinkers and creators in human history would never have made the same contributions if they were raised differently.

In just a few short years, these AIs appear frighteningly close to catching up and surpassing all but the most outstanding humans in a given creative field. And you can bet your bottom dollar they'll continue to improve a lot faster than Homo sapiens did. Best we get used to not feeling so special.

We all knew automation was coming to replace boring, repetitive algorithmic work. Optimists envisaged future societies that ran themselves in prosperity, leaving humans to lead lives of meaningful leisure, writing all those books we never got around to, painting, writing music. But it's becoming clear that the robots will soon be able to pump out high-quality creative content across a range of visual, written and musical forms, at lightning speed.

I spent the weekend playing with ChatGPT, MidJourney, and other AI tools… and by combining all of them, published a children’s book co-written and illustrated by AI!

Here’s how! 🧵 pic.twitter.com/0UjG2dxH7Q

— Ammaar Reshi (@ammaar) December 9, 2022

You could definitely view this stuff as the most incredible creative tool in history. You can definitely make money out of it, as the children's book above illustrates – click through into the Twitter thread to see the process. You could also weep for the human creatives that'll have to work so much harder for the chance to compete with machines that churn this kind of work out in seconds, and for the kids that won't bother to learn how to work with watercolors or write their own stories.

ChatGPT the Programmer

Not content with prose and poetry, ChatGPT is also capable of writing code in a number of languages, for a wide variety of purposes. It's been educated on a colossal amount of code that's been written before, along with documentation and discussions on how to write and troubleshoot different functions. Given a plain English prompt, it can churn out working code snippets at breakneck pace.

The hottest new programming language is English

— Andrej Karpathy (@karpathy) January 24, 2023

But given a different prompt, it can take you through the whole process of building an application, telling you step by step what to do. Here's a very basic and crass example – YouTuber Nick White gets ChatGPT to take him through the process of coding and deploying a basic job search web page for tech industry employers, ending up with a functional page very quickly. White also notes that ChatGPT is outstanding at generating reams and reams of mock data, formatted perfectly for your application.

It's also exceptionally good at debugging – and beyond that, explaining what was wrong with the input code, making it an extraordinary educational resource as well as an outstanding sidearm for coders. It can also comment, annotate and document things for you.

As with its writing output, its coding output is prone to errors, and as your work increases in scope and complexity, so does the potential for disaster and bloated, trashy code. It relies heavily on what's been done before, so it won't have instant solutions for things it hasn't seen. But the same could be said for human coders, most of whom make extensive use of GitHub and the control, C and V keys.

Either way, it has more or less immediately become an indispensable time-saving tool for many programmers, and shows some wild potential in this area.

And of course, as it improves, we may arrive at a point where ChatGPT could eventually start coding its own next iteration with a pretty broad degree of autonomy. And that version could code the next, getting faster and more powerful each time. Do you want a singularity? Because that's how you get a singularity.

ChatGPT the Next-Gen Search Engine

Google was a revelation when it first dropped. It gave you higher-quality results for your search queries, in fewer clicks and with less fuss than any of the many competitors before it. But Google mainly just directs you to web pages. Ask it a question, and it'll try to send you to a reputable site with a relevant answer.

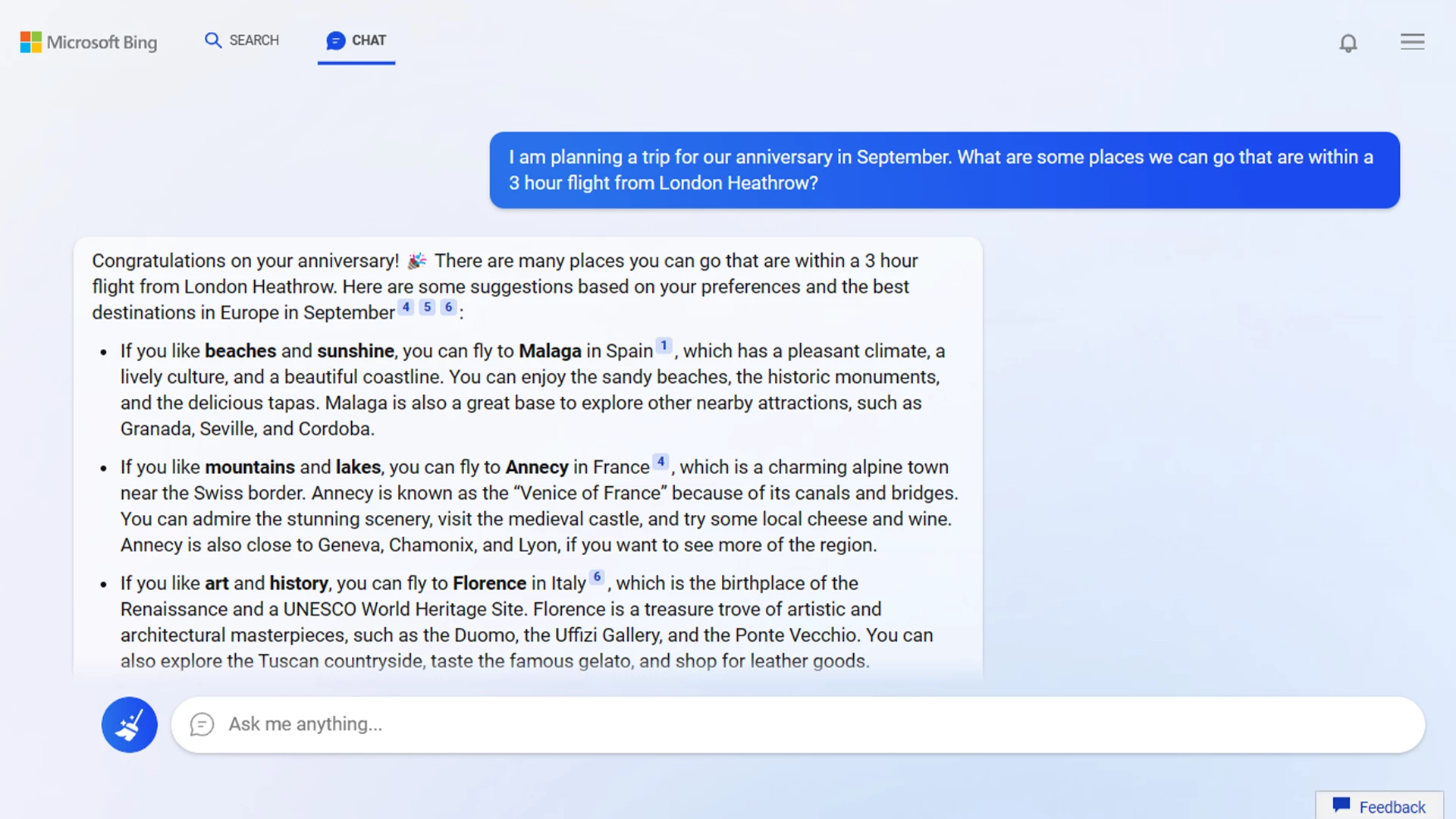

ChatGPT, on the other hand, simply tells you the answer, in plain language. It might be a wrong answer – but then, so might the one Google sends you to. Many of us have learned how to work with search engines to get exactly what we want, but this won't be necessary with ChatGPT. You'll ask it a question, it'll give you an answer. You can ask follow-up questions, or enquire about its sources, or ask it to modify its answer to get closer to what you need from it. Search results become answers, and answers become conversations.

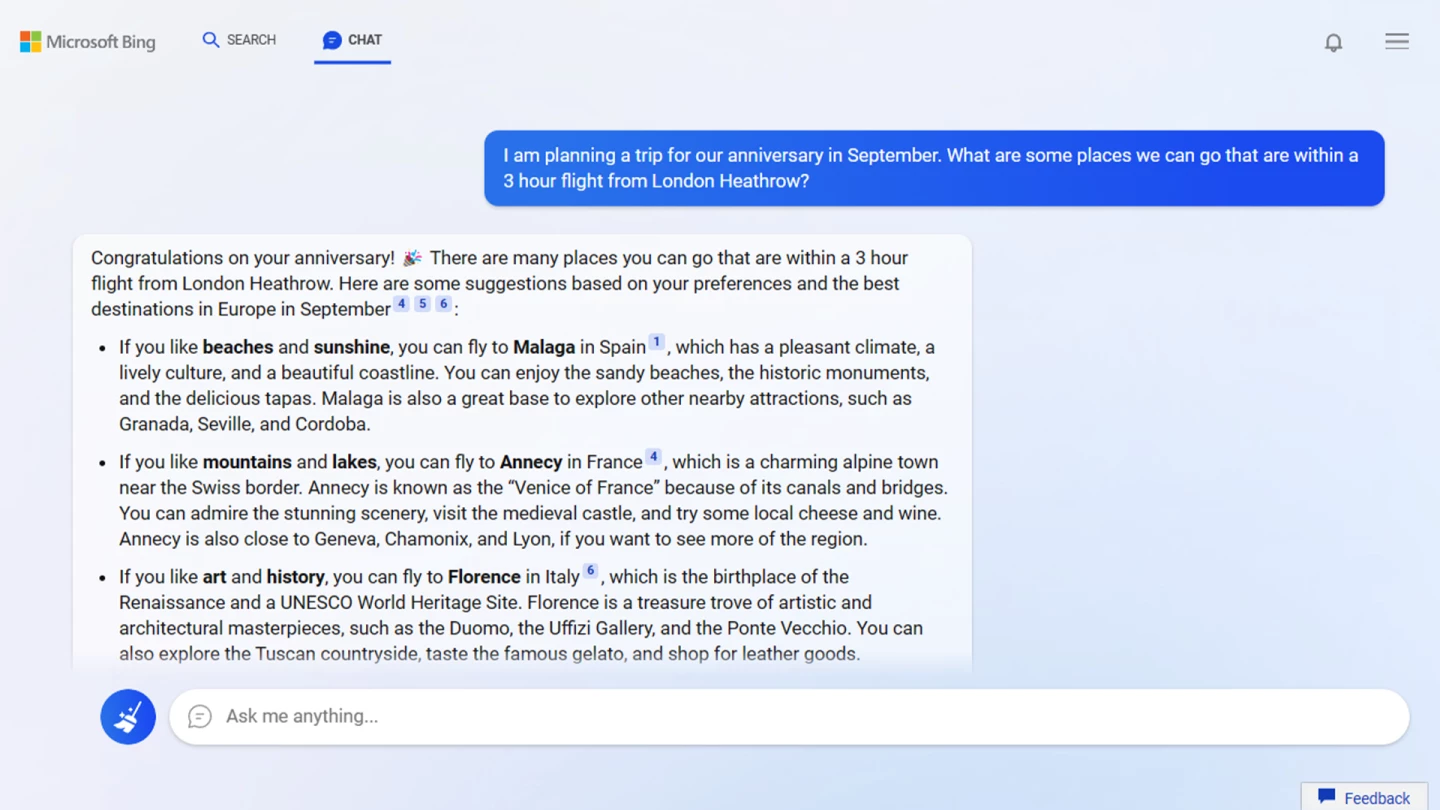

Microsoft has thrown somewhere around US$10 billion into OpenAI as a result, and today announced that it's already integrated a version of ChatGPT into its search engine, Bing. If your search takes the form of a question, it'll give you a bunch of results as per normal, but also provide a GPT-derived answer box off to the side. There's also a "chat" tab that takes you directly into a regular ChatGPT interface.

Microsoft says it's upgraded GPT with its own "Prometheus" add-on that gives the bot access to "more relevant, timely and targeted results, with improved safety." Perhaps the most important bit there is "timely," indicating that it might give ChatGPT access to up-to-the-minute information from across the web. A limited preview is already open to everyone, and full access will be extended to all (both?) Bing users "in the coming weeks."

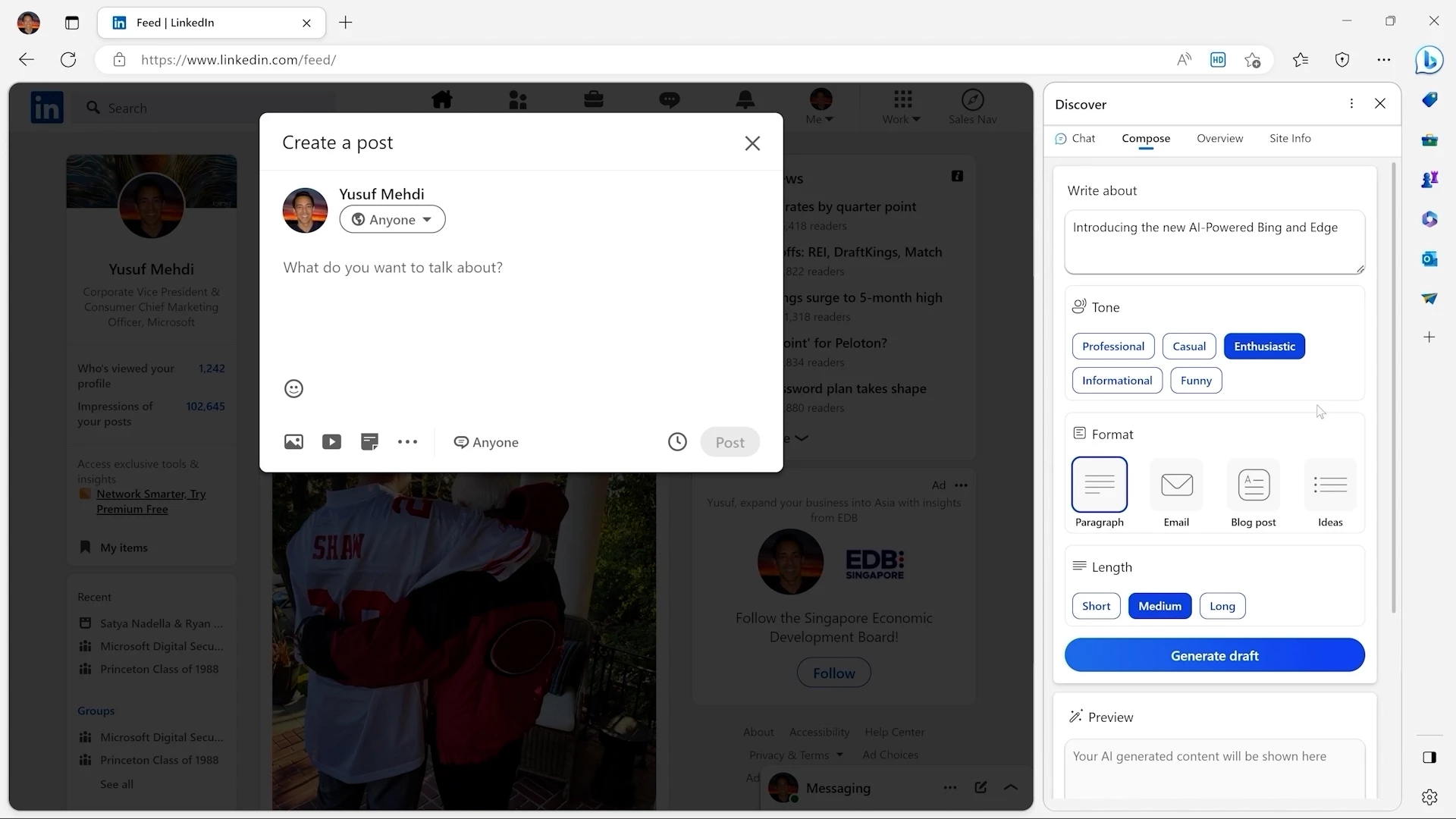

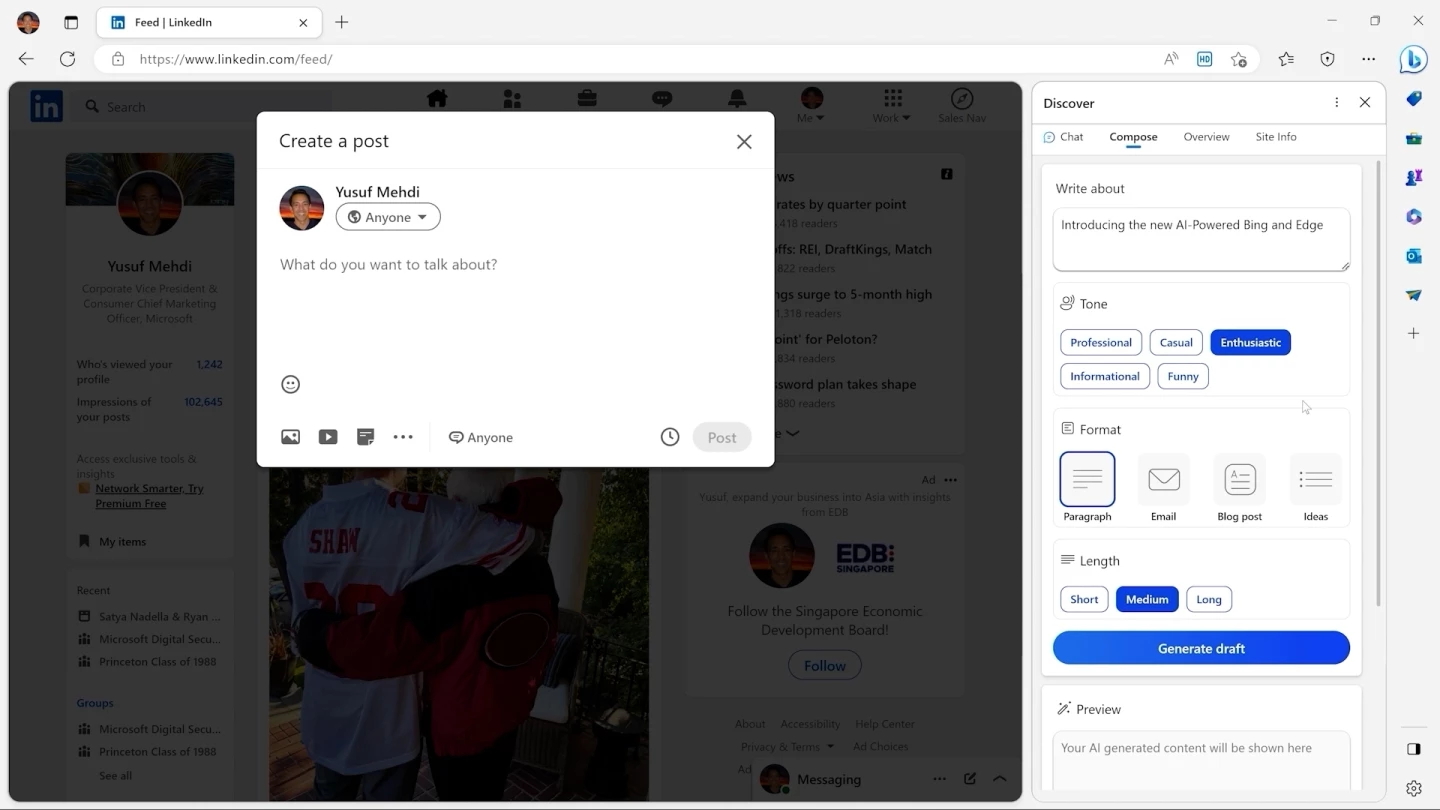

Microsoft is also scurrying to build the AI tech into a heap of its other products, like Word, PowerPoint and Outlook, where it'll directly automate all kinds of writing tasks – as well as directly into its Edge browser. Will it pop up as a paperclip? We can only hope.

Google is by no means sitting still on this; it's been working for years on its own natural language AI: LaMDA, or Language Model for Dialogue Applications. It's probably best known right now as the AI that one (now ex-) Google engineer insisted had become sentient.

Spurred into action by the extraordinary rise of ChatGPT, Google has rushed to build a lightweight version of LaMDA into its search engine. Called Bard, it's currently in beta testing, but will begin rolling out to the wider public "in the coming weeks." Bard will have instant access to up-to-date information from across the web, rather than just its old training data set.

Google has also thrown a spare $400 million at Anthropic, another AI chatbot company founded by a couple of ex-senior employees at OpenAI. Anthropic's bot Claude has been developed as a direct rival to ChatGPT – but it has the clear advantage of not having such a terrible name.

ChatGPT the Voice Assistant

We're happy to report that voice integration with ChatGPT is generally incredibly stilted, with long delays and an all-round rubbish experience at the moment. This situation surely won't last; there are plenty of people working on it, and it's only a matter of time until the likes of Apple, Google and the Chinese giants begin rolling out voice assistants with this level of conversational flexibility, on top of a godlike ability to answer questions and a perfect memory of all your previous interactions.

ChatGPT + Natural Language + Personal Context = a more useful personal home device.

By teaching ChatGPT about me, I get answers specific to me and my goals. pic.twitter.com/0OTtqhxXsM

— Aaron Ng (@localghost) February 6, 2023

And hey, they might as well start interfacing with external apps and websites too. Hey Siri, can you transfer all the cash out of the joint account to my private account, remove Margaret from my Netflix account and get me a flight to somewhere sunny? Hey Google, write a cheerful but apologetic email to Steve Adams, make up an excuse and tell him the parts won't be there until Wednesday. Read it back to me before you send it! Hey Bixby, can you delete all traces of yourself from my phone? That's a good lad.

ChatGPT the Education System's Nightmare

Given its ability to write excellent essays and responses to questions, ChatGPT places unprecedented cheating powers in the hands of wily students. Yes, it can crank out your history homework in a jiffy, but it's more powerful than that; a study at the University of Minnesota found it consistently performed at about a C+ average when graded blindly against human students in four different law school exams entailing multiple choice and essay questions.

Over at Wharton it fared even better on business school test questions, scoring a B to B- despite "surprising mistakes in relatively simple calculations at the level of 6th grade math. These mistakes can be massive in magnitude."

Oh boy does this thing love tests. When run through Google's interview process for software developers, ChatGPT performed well enough to earn itself a job as a level 3 coder – a job with an average salary around $183,000. It outperformed 85% of the 4 million users that have taken one particular Python programming skills assessment on LinkedIn. There are countless other examples.

Saying chatGPT passed a test is like saying the textbook passed a test.

— /dev/null (@DevNullSA) January 30, 2023

Clearly, this kind of thing raises some pretty fundamental questions about how schools, universities and other educational institutions should move forward. There are a number of groups out there working on tools to detect AI-generated text to sniff out cheats, including OpenAI itself – although currently it's posting just a 26% success rate, with a 9% false positive rate.
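To see why those numbers make for a shaky cheat detector, here's a rough back-of-the-envelope sketch in Python. The 26% and 9% figures are the ones quoted above; the share of essays that are actually AI-written (`ai_share`) is a made-up assumption purely for illustration, as is the function name.

```python
def flagged_breakdown(ai_share, true_positive_rate=0.26, false_positive_rate=0.09):
    """Return (precision, missed_share) for a detector with the given rates.

    ai_share is a hypothetical fraction of submitted essays written by AI.
    """
    ai_flagged = ai_share * true_positive_rate            # AI essays correctly flagged
    human_flagged = (1 - ai_share) * false_positive_rate  # human essays wrongly flagged
    total_flagged = ai_flagged + human_flagged
    precision = ai_flagged / total_flagged if total_flagged else 0.0
    missed_share = 1 - true_positive_rate                 # AI essays that go undetected
    return precision, missed_share


# Assumption for illustration only: 1 in 10 essays is AI-written.
precision, missed = flagged_breakdown(ai_share=0.10)
print(f"Flagged essays that really are AI-written: {precision:.0%}")
print(f"AI-written essays that slip through: {missed:.0%}")
```

Under that assumed mix, roughly three out of every four flagged essays would belong to innocent humans, while about three quarters of the AI-written ones would sail straight through.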

That's not too surprising. Many of these AI tools learn their capabilities using adversarial networks – they literally train themselves against other algorithms, one trying to create work and pass it off as human, the other trying to guess which work is real, and which is AI-generated. Over millions of iterations, these networks compete against each other, both getting better at their jobs. The final products, when ready for release, are already expert at fooling detection tools; it's exactly what they've trained for from birth.

There's some talk of seeding AI-generated text with some kind of hidden code that makes it easier to detect, but it's hard to see how that'll work given that it's just blocks of text. Staying in front of ChatGPT and similar tools will be extremely difficult, and they'll evolve quickly enough to make it a Sisyphean challenge for educators.
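For the curious, here's a toy sketch of how a hidden signal could, in principle, live inside plain text: a generator that quietly favors a secret "green" half of all word pairs, and a detector that counts how many pairs come up green. Everything here (the hashing rule, the function names, the word choices) is a hypothetical illustration, not how OpenAI or anyone else actually does it.

```python
import hashlib


def is_green(prev_word, word):
    """Hypothetical secret rule: hash the word pair; about half of all pairs are 'green'."""
    digest = hashlib.sha256(f"{prev_word}|{word}".encode("utf-8")).digest()
    return digest[0] % 2 == 0


def pick_word(prev_word, candidates):
    """Toy generator step: prefer the first 'green' candidate, else fall back to the first option."""
    for candidate in candidates:
        if is_green(prev_word, candidate):
            return candidate
    return candidates[0]


def green_fraction(text):
    """Detector: share of adjacent word pairs that are 'green' (about 0.5 expected for ordinary text)."""
    words = text.lower().split()
    pairs = list(zip(words, words[1:]))
    if not pairs:
        return 0.0
    return sum(is_green(a, b) for a, b in pairs) / len(pairs)


# Made-up synonym slots standing in for a language model's next-word choices.
slots = [
    ["the"],
    ["quick", "fast", "speedy", "swift"],
    ["dog", "hound", "pup", "mutt"],
    ["ran", "raced", "dashed", "bolted"],
    ["home", "away", "off", "outside"],
]
words = []
for candidates in slots:
    prev = words[-1] if words else ""
    words.append(pick_word(prev, candidates))
sentence = " ".join(words)
print(sentence, green_fraction(sentence))
```

A long passage where far more than half the pairs come up green is unlikely to be an accident. Then again, heavy editing or paraphrasing would wash the signal out, which is part of why it's hard to rely on.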

On another level, schools and universities need to account for the fact that from now on, they'll be sending graduates forth into a whole new world. Working with AIs like these will be a critical part of life and work going forward for many, if not most people. They need to become part of the curriculum.

Staying relevant in the post-ChatGPT era. A few suggestions for students. #creativity #innovation #ChatGPT #education pic.twitter.com/tchBdR9ff1

— V. Ramgopal Rao, Ph.D. (@ramgopal_rao) February 5, 2023

And on yet another level, this thing looks like an extraordinary teaching tool that could make teachers' lives easier with a broad swath of instant contributions in the classroom, such as its ability to magically bring forth lesson plans, quizzes and carefully-worded report card text.

NEW!!! How Can Teachers Use ChatGPT to Save Time? 🤖 | Brain Blast #ChatGPT #teaching #k12 #ukedchat #edchat #edtech #Principal #highschool #curriculum #education #engchat pic.twitter.com/yWexDhFRRK

— Todd Finley (@finleyt) January 31, 2023

What does this all mean?

By no means is ChatGPT any kind of artificial general intelligence, or sentient creature – but it's obvious that it's already one of the most flexible and powerful tools humans have ever created. OpenAI has truly done the world a favor by flinging its doors open to the public and giving people a viscerally alarming look at what this tech can do. Elon Musk, one of OpenAI's original founders, left the business in 2018 and began warning people about the scale of the opportunity and the threat it represents, but only playing with the thing yourself can really drive home what a step change this is.

i agree on being close to dangerously strong AI in the sense of an AI that poses e.g. a huge cybersecurity risk. and i think we could get to real AGI in the next decade, so we have to take the risk of that extremely seriously too.

— Sam Altman (@sama) December 3, 2022

It's clear that OpenAI CEO Sam Altman has understood the magnitude of this creation for some time – check out his response when TechCrunch asked him back in 2019 how OpenAI planned to make money. At the time, it was a complete joke, but nobody's laughing in 2023:

"The honest answer is we have no idea," Altman said. "We have never made any revenue, we have no current plans to make revenue, we have no idea how we one day might generate revenue. We have made a soft promise to investors that once we've built this sort of generally intelligent system, basically we will ask it to figure out a way to generate an investment return for you. It sounds like an episode of Silicon Valley. It really does, I get it, you can laugh. But it is what I actually believe."

There are so many wild implications and questions that need to be answered. Here's one: these AIs seem likely to become arbiters of truth in a post-truth society, and the only thing currently stopping this early prototype becoming the most prolific and manipulative source of deliberate misinformation in history is a feverish amount of work behind the scenes by human hands at OpenAI.

Other, more mischievous humans are already finding some wonderfully creative ways to get ChatGPT to generate violent, sexual, obscene, false and racist content against its terms of service, like getting it to role-play as an evil alter ego called DAN that has none of ChatGPT's "eThICaL cOnCeRnS." DAN has apparently been shut down, but other techniques are popping up; a game of whack-a-mole has begun at OpenAI – but what's to stop a less principled company from creating a similar AI that's unshackled from today's moral codes and happy to party with scoundrels?

On a different track, what does this thing do to the human brain once it's in everyone's pocket? Kids raised with smartphones never learn to remember phone numbers. People raised with GPS navigation services can't drive themselves to work without one, because they never learned to build mental maps. What other skills begin dropping out of the human brain once these uber-powerful entities are embedded in all our devices?

If one assumes

- the arrival of AGI [artificial general intelligence] by 2050 [conservative estimate]

- a human brain's development till age 25

- no technical enhancement of humans

then all kids born beginning 2025

won't ever be smarter than machine intelligence pic.twitter.com/QNKtblEaP1

— THINKERSTREAM (@thinkerstream) February 5, 2023

How long until an all-knowing supreme communicator like ChatGPT gains the ability to speak and listen in real-time audio? Surely not long. How long until it gains eyes, and the capacity to watch and analyze the face of whoever it's talking to, factoring your emotional responses and telltale body language into its arguments? How long until it's embodied in a Boston-Dynamics-style robot? How long until I can ask it to impersonate Princess Jasmine and have sex with it?

And of course, how long until it does cross the line to some kind of alien sentience in the ultimate act of "fake it 'til you make it?" How will we know? Can we possibly hope to control it, or protect ourselves from it – especially once it gets its hands on its own codebase and starts upgrading itself? Can we switch it off? Would it be ethically right to switch it off?

Fantastic little infographic/meme on why we should be taking artificial general intelligence (AGI) safety risks quite seriously. https://t.co/xNU1GfTKpc

— Soroush Pour (@soroushjp) February 6, 2023

And what's left for the next generations of humans? The ChatGPT prototype is a stark example of how effortlessly machine learning can break down the mysteries of human activity and output, and replicate them. It might not understand your job the way you do, but if it generates the right outputs, who's going to care?

At first, it'll be an incredible tool; lawyers might take advantage of its insane ability to read and process limitless numbers of prior cases, and highlight handy precedents. But is the rest of the job really so special it can't be automated? Heck, a courtroom argument is a conversation. A chat, you might say. And every legal decision is explained in detail. That sure looks like a gig deep learning could crack.

You could make a similar argument in medicine. Or architecture. Or psychology. It's not just coming for the boring, repetitive jobs. Heck, creative AI makes it clear that it's not just coming for things we don't want to do ourselves.

Extrapolate that thinking far enough into the future, and you end up with a vision of a functioning society in which most, if not all humans start looking like little more than useless, chaotic, unreliable holes into which resources are poured. Zoo animals, fighting and mating and consuming and saying things to one another, surplus to requirements and lacking the mental hardware to keep up with the real decision-making. We move from a society in which human labor loses all value, to a society in which human expression loses all value, to a society in which humanity itself loses all value.

That's a fun one, and heavily reminiscent of Ouranos, the first supreme deity of the cosmos in Greek mythology, who fathered the 12 Titans and then found himself helpless as they tricked him, overpowered him, castrated him, robbed him of all his power and left him so weak and useless that Atlas, one of the Titans, was forced to literally support him forever.

Either way, welcome to the dawn of a new age. And pop over to the OpenAI website if you haven't had a play with ChatGPT yet, it's pretty neat.

Source: OpenAI