If humanoid robots are ever going to fully integrate into society, they're going to need to get good at reading our emotional states and responding appropriately. A new wearable from researchers in Korea could help them do just that.

Robots are good at a great many things. They can lift impressive loads, learn amazingly fast, and even fly a plane.

But when it comes to truly getting us – understanding our messy human emotions, mood swings, and inner neediness – they're still about as good as a toaster is at making art (although some would argue that the perfect piece of toast is a kind of art, but we digress). This has been slowly changing over time though, and a new system announced by researchers from Korea's Ulsan National Institute of Science and Technology (UNIST) may just push the emotional intelligence of our tech ahead even faster.

A team there has created a stretchable wearable facial system that uses skin friction and vibration monitoring to evaluate human emotions and generate its own power. And yes, it is as weird as it sounds.

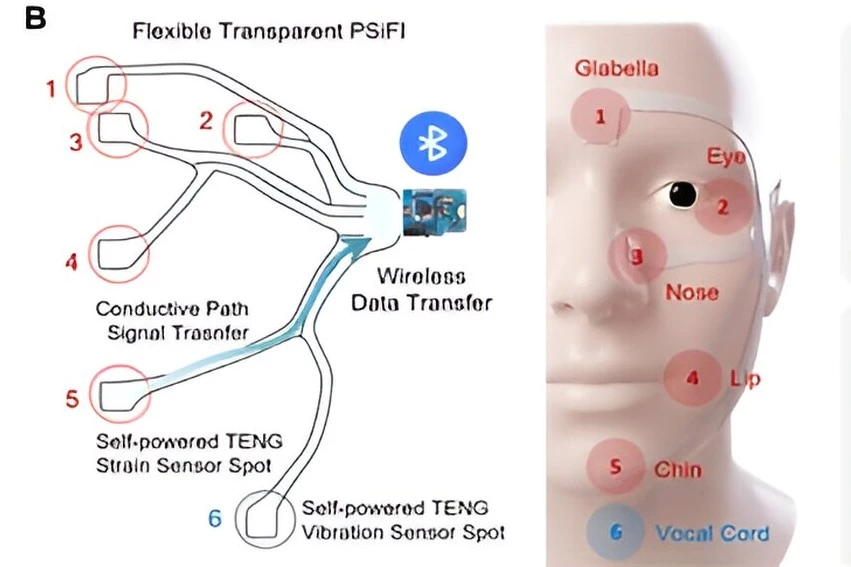

The wearable consists of a set of thin, clear, flexible sensors that adhere to the face on the left and right sides of the head. The bulk of each sensor sits between the eye and ear, and branches extend over and above each eye, down to the jaw, and around to the back of the head. The team says that the sensors can be custom-made to fit any face.

Once the sensors are in place, they connect to an integrated system that's been trained to decode human emotions based on the strain patterns in our faces and the vibrations of our voice. Unlike other systems that have used similar techniques, this one is completely self-powered: the stretching and skin friction of the sensor material generate its electricity through a triboelectric (friction-charging) principle. This means it can be worn all day (as if you'd want to!) without the worry of needing to recharge it. According to the UNIST researchers, this represents the first time a fully independent, wearable emotion-recognition system has ever been created.

While face-based stickers aren't likely to catch on as a daily wearable, the UNIST team incorporated their tech into VR environments, where it's a little easier to imagine it thriving. Picture more comprehensive VR headsets that could monitor our emotions and adjust our virtual worlds accordingly. In fact, during their testing process, the researchers used their new emotion-sensing system to deliver book, music, and movie recommendations in various virtual settings based on how the wearer was feeling.

You just get me

The UNIST work comes as the latest in a long line of efforts that seek to make technology more sensitive to the humans that use it.

We've seen a necklace that can read facial expressions to deduce our emotional states; a robotic head that can mirror human facial expressions; a smart speaker that analyzes your voice to gauge how you're feeling and suggests tunes to match; and an AI system that can help autonomous cars predict the actions of other drivers based on their personalities. There was even a 2015 effort that perhaps foreshadowed the new UNIST study: facial stickers that could help robots understand our emotions. Plus, who could forget the wild success of the emotion-reading Pepper robot from Japan, which was launched in 2015 and is now helping out in over 2,000 companies around the world?

As technologies get better at understanding our emotional states, not only will androids be better able to leverage our moods against us to take over the world (kidding), but such advances could break down some of the remaining walls between humans and robots.

Imagine the implications for medical companion robots for the elderly. Instead of an annoying bot that just rolls by three times a day urging you to take your meds or drink more water in a flat mechanical voice, such a machine could engage you in a conversation, gauge your mood, and employ the right kind of cajoling conversational strategy to overcome your stubborn resistance to self-care.

Emotionally smart robots could help children deal with bullying issues at school by vaporizing said bullies (again, we kid). But they could represent a safe place for kids to discuss topics that are too hard to talk about with human companions. Because such bots could keep their cool and, in a linguistic twist of irony, not have "their buttons pushed," they could deliver clear-headed advice in a way that a frustrated parent might not be able to.

In a more nefarious imagining, emotion-reading tech could act as a kind of advanced lie detector, decoding how a person really feels regardless of how they say they feel.

The ways in which emotionally intelligent technologies could impact our lives are nearly as limitless as the range of emotions we experience daily as a species. And, while wearing sticky sensors on our faces might not be the way forward, the UNIST work certainly adds another step on the mighty climb to machines that "just get us."

Or, as study lead Jiyun Kim puts it: "For effective interaction between humans and machines, human-machine interface (HMI) devices must be capable of collecting diverse data types and handling complex integrated information. This study exemplifies the potential of using emotions, which are complex forms of human information, in next-generation wearable systems."

Said study has been published in the journal Nature Communications.

Source: UNIST