We are living at the dawn of the general-purpose robotics age. Dozens of companies have now decided that it's time to invest big in humanoid robots that can autonomously navigate their way around existing workspaces and begin taking over tasks from human workers.

Most of the early use cases, though, fall into what I'd call the Planet Fitness category: the robots will lift things up, and put them down. That'll be great for warehouse-style logistics, loading and unloading trucks and pallets and whatnot, and moving things around factories. But it's not all that glamorous, and it certainly doesn't approach the usefulness of a human worker.

For these capabilities to expand to the point where robots can wander into any job site and start taking over a wide variety of tasks, they need a way of quickly upskilling themselves, based on human instructions or demonstrations. And that's where Toyota claims it's made a massive breakthrough, with a new learning approach based on Diffusion Policy that it says opens the door to the concept of Large Behavior Models.

Diffusion Policy is a concept Toyota has developed in partnership with Columbia Engineering and MIT, and while the details quickly become very arcane as you look deeper into this stuff, the group describes the general idea as, "a new way of generating robot behavior by representing a robot's visuomotor policy as a conditional denoising diffusion process." You can learn more and see some examples in the group's research paper.
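For readers curious what "conditional denoising diffusion" means in practice, here's a minimal Python/NumPy sketch in the style of the standard DDPM sampling loop. It is not Toyota's code. The noise schedule, the step count `T`, the two-dimensional action, and the helpers `demo_action` and `oracle_noise_predictor` are all hypothetical. In the real system, a trained neural network working from camera images and tactile sensing would take the place of the oracle. The idea is that the policy starts from pure random noise and repeatedly strips away its estimate of that noise, guided by what it currently observes, until a clean action is left.

```python
import numpy as np

# Hypothetical linear noise schedule over T diffusion steps.
T = 50
betas = np.linspace(1e-4, 0.2, T)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)


def demo_action(obs):
    """Hypothetical 'demonstrated' 2-D action for a scalar observation,
    standing in for what a human teleoperator did in that situation."""
    return np.array([np.sin(obs), np.cos(obs)])


def oracle_noise_predictor(x_t, obs, t):
    """Stand-in for a learned network eps_theta(x_t, obs, t).
    Because the toy demonstrations are deterministic, the ideal noise
    estimate has a closed form; a real policy has to learn it from data."""
    a_star = demo_action(obs)
    return (x_t - np.sqrt(alpha_bars[t]) * a_star) / np.sqrt(1.0 - alpha_bars[t])


def sample_action(obs, eps_model, rng):
    """DDPM-style reverse process: start from Gaussian noise and denoise it
    step by step into an action, conditioned on the observation."""
    x = rng.standard_normal(2)  # x_T ~ N(0, I)
    for t in reversed(range(T)):
        eps = eps_model(x, obs, t)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(2)
        else:
            x = mean  # no fresh noise is added on the final step
    return x


rng = np.random.default_rng(0)
action = sample_action(0.7, oracle_noise_predictor, rng)
print(action, demo_action(0.7))
```

Because the toy oracle knows the demonstrated action exactly, the loop ends on that action no matter what noise it starts from; a learned network only approximates the oracle.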

Essentially, where Large Language Models (LLMs) like ChatGPT ingest billions of words of human writing and teach themselves to write and code – and even reason, for god's sake – at a level astonishingly close to humans, Diffusion Policy lets a robotic AI watch how a human performs a given physical task in the real world, and then essentially program itself to perform that task in a flexible manner.

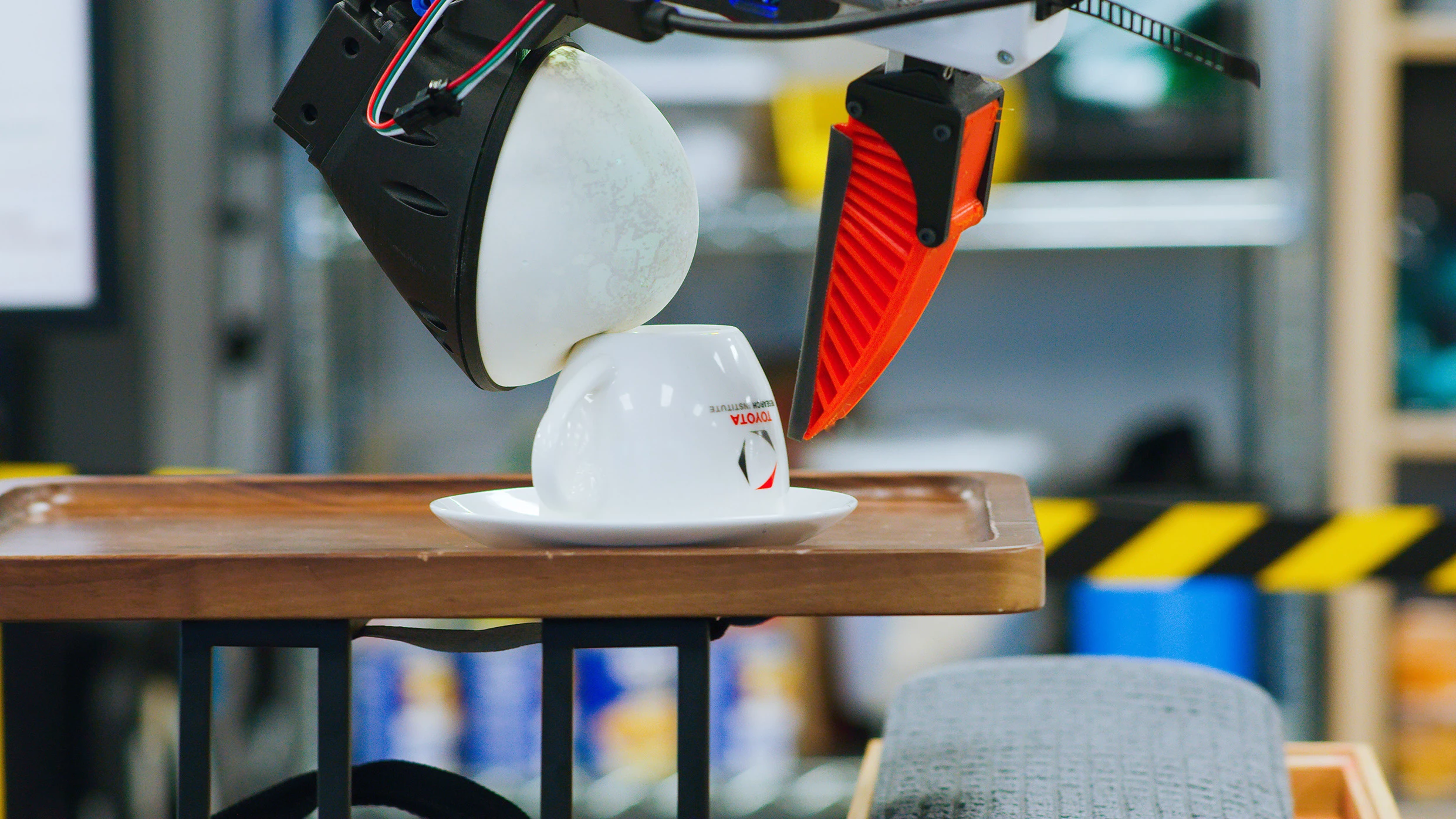

While some startups have been teaching their robots through VR telepresence – giving a human operator exactly what the robot's eyes can see and allowing them to control the robot's hands and arms to accomplish the task – Toyota's approach is more focused on haptics. Operators don't wear a VR headset, but they receive haptic feedback from the robot's soft, flexible grippers through their hand controls, allowing them in some sense to feel what the robot feels as its manipulators come into contact with objects.

Once a human operator has shown the robot how to do a task a number of times, under slightly different conditions, the robot's AI builds its own internal model of what success and failure look like, then runs thousands upon thousands of physics-based simulations of the task to home in on a set of techniques that get the job done.

"The process starts with a teacher demonstrating a small set of skills through teleoperation," says Ben Burchfiel, who goes by the fun title of Manager of Dextrous Manipulation. "Our AI-based Diffusion Policy then learns in the background over a matter of hours. It's common for us to teach a robot in the afternoon, let it learn overnight, and then come in the next morning to a working new behavior."
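Burchfiel doesn't detail what that overnight learning involves. Assuming it follows the standard DDPM-style noise-prediction objective used to train denoising diffusion models, each training step looks roughly like the sketch below: take a demonstrated action, corrupt it with a random amount of noise, then score how well the model recovers that noise given the observation. The schedule, the toy "demonstrations" and the two placeholder models are hypothetical; actual training would adjust a network's weights to push this loss down.

```python
import numpy as np

# Hypothetical linear noise schedule over T diffusion steps.
T = 50
betas = np.linspace(1e-4, 0.2, T)
alpha_bars = np.cumprod(1.0 - betas)


def denoising_loss(eps_model, observations, actions, rng):
    """Noise-prediction loss on a batch of (observation, action) demonstrations."""
    n = len(actions)
    t = rng.integers(0, T, size=n)              # random diffusion step per sample
    noise = rng.standard_normal(actions.shape)  # the noise the model must recover
    ab = alpha_bars[t][:, None]
    noisy_actions = np.sqrt(ab) * actions + np.sqrt(1.0 - ab) * noise
    predicted = eps_model(noisy_actions, observations, t)
    return np.mean((predicted - noise) ** 2)


def demonstrated_actions(obs):
    """Toy stand-in for what a teleoperator did in each observed situation."""
    return np.stack([np.sin(obs), np.cos(obs)], axis=1)


def zero_model(noisy_actions, observations, t):
    """An untrained model that always predicts zero noise."""
    return np.zeros_like(noisy_actions)


def perfect_model(noisy_actions, observations, t):
    """A model that already knows the demonstrations, so it can solve for the noise."""
    ab = alpha_bars[t][:, None]
    clean = demonstrated_actions(observations)
    return (noisy_actions - np.sqrt(ab) * clean) / np.sqrt(1.0 - ab)


rng = np.random.default_rng(0)
obs = rng.uniform(-1.0, 1.0, size=1000)
acts = demonstrated_actions(obs)
untrained_loss = denoising_loss(zero_model, obs, acts, rng)
trained_loss = denoising_loss(perfect_model, obs, acts, rng)
print(f"untrained: {untrained_loss:.3f}  ideal: {trained_loss:.2e}")
```

A model that ignores its inputs and always guesses zero scores roughly 1.0, the variance of the injected noise, while one that knows the demonstrated actions exactly scores essentially zero; training moves a real network from the former toward the latter.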

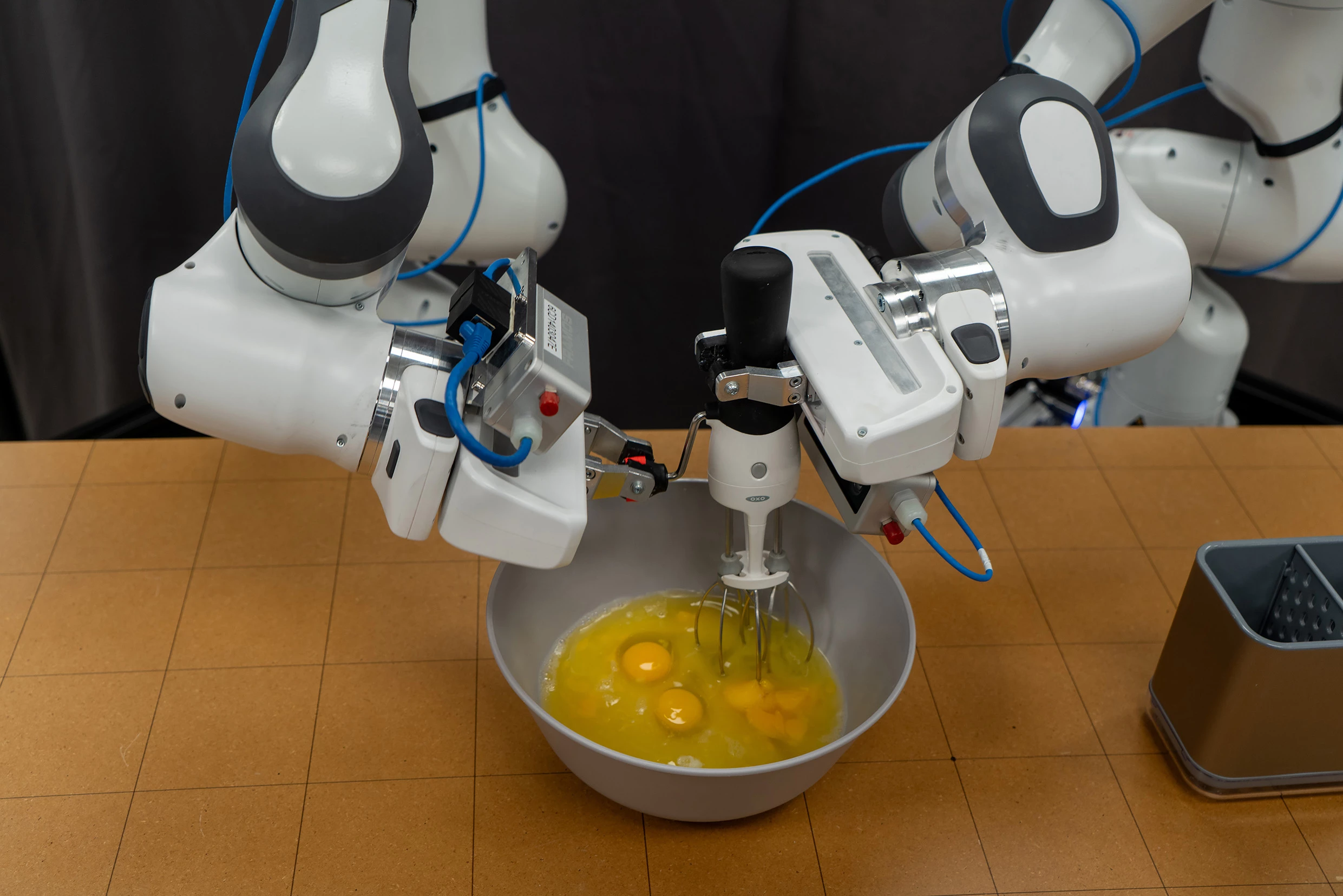

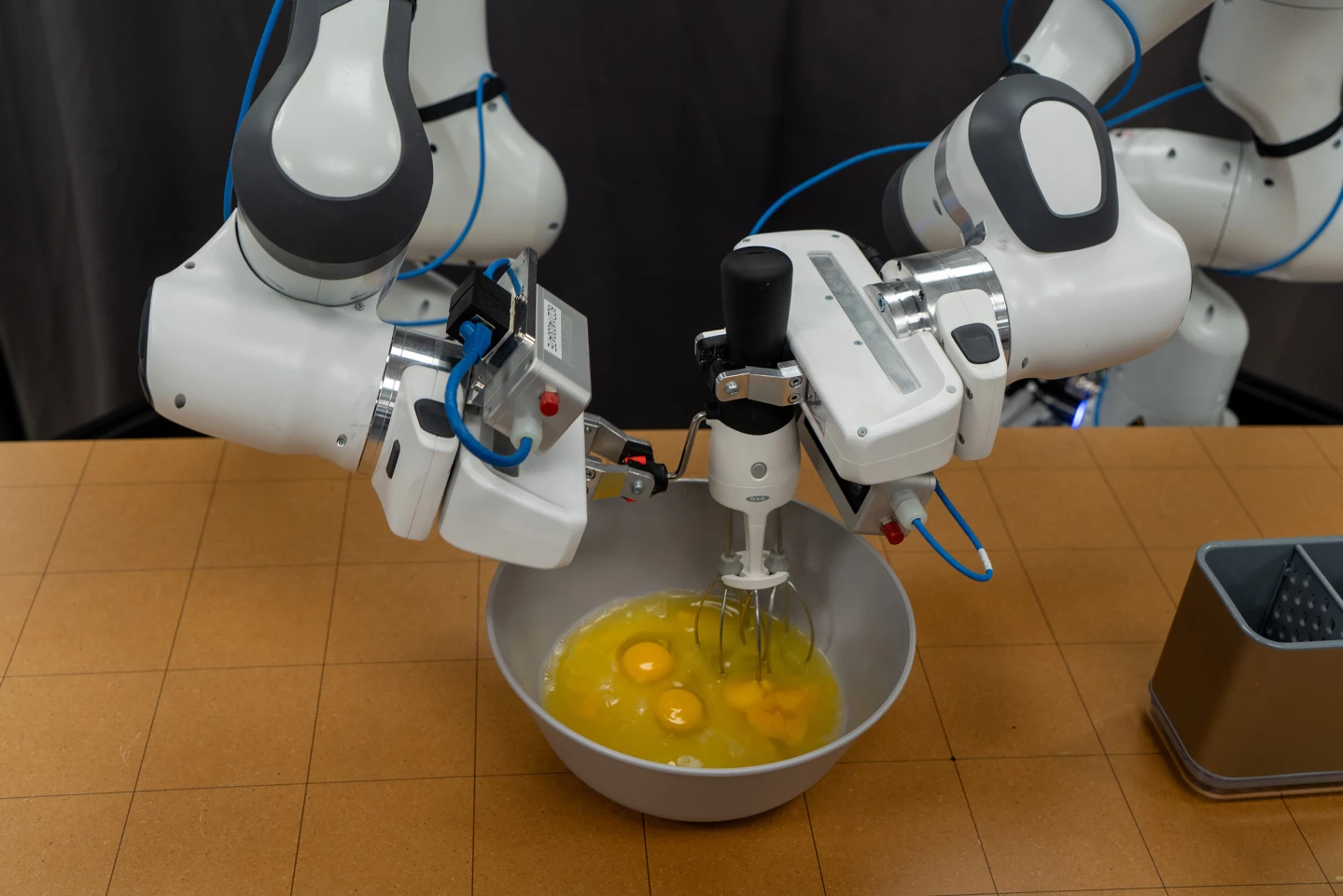

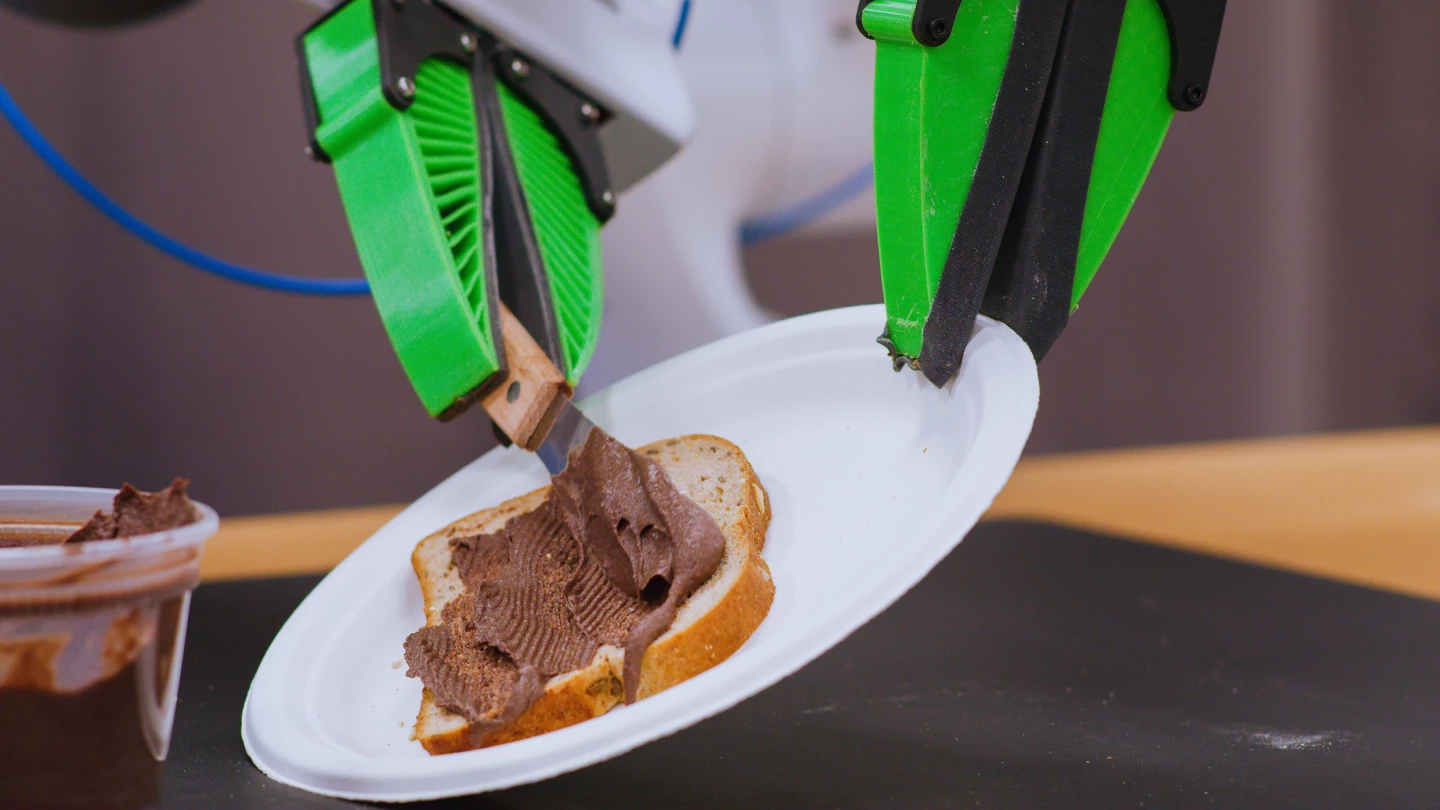

The team has used this approach to rapidly train the bots in upwards of 60 small, mostly kitchen-based tasks so far – each relatively simple for the average adult human, but each requiring the robots to figure out on their own how to grab, hold and manipulate different types of items, using a range of tools and utensils.

We're talking using a knife to evenly put a spread on a slice of bread, or using a spatula to flip a pancake, or using a potato peeler to peel potatoes. It's learned to roll out dough into a pizza base, then spoon sauce onto the base and spread it around with a spoon. It's eerily like watching young kids figure things out.

Toyota says it'll have hundreds of tasks under control by the end of the year, and it's targeting over 1,000 tasks by the end of 2024. Along the way, it's developing what it believes will be the first Large Behavior Model, or LBM – a framework that'll eventually expand to become something like the embodied robot equivalent of ChatGPT. That is to say, an entirely AI-generated model of how a robot can interact with the physical world to achieve certain outcomes, one that manifests as a giant pile of data completely inscrutable to the human eye.

The team is effectively putting in place the procedure by which future robot owners and operators in all kinds of situations will be able to rapidly teach their bots new tasks as necessary – upgrading entire fleets of robots with new skills as they go.

“The tasks that I’m watching these robots perform are simply amazing – even one year ago, I would not have predicted that we were close to this level of diverse dexterity,” says Russ Tedrake, VP of Robotics Research at the Toyota Research Institute. “What is so exciting about this new approach is the rate and reliability with which we can add new skills. Because these skills work directly from camera images and tactile sensing, using only learned representations, they are able to perform well even on tasks that involve deformable objects, cloth, and liquids — all of which have traditionally been extremely difficult for robots.”

Presumably, the LBM Toyota is currently constructing will require robots of the same type it's using now – custom-built units designed for "dextrous dual-arm manipulation tasks with a special focus on enabling haptic feedback and tactile sensing." But it doesn't take much imagination to extrapolate the idea into a framework that humanoid robots with fingers and opposable thumbs can use to gain control of an even broader range of tools designed for human use.

And presumably, as the LBM develops a more and more comprehensive "understanding" of the physical world across thousands of different tasks, objects, tools, locations, and situations, and it gains experience with a range of dynamic, real-world interruptions and unexpected outcomes, it'll become better and better at generalizing across tasks.

Every day, humanity's inexorable march toward the technological singularity seems to accelerate. Every step, like this one, represents an astonishing achievement, and yet each catapults us further toward a future that's looking so different from today – let alone 30 years ago – that it feels nearly impossible to predict. What will life be like in 2050? How much can you really put outside the range of possible outcomes?

Buckle up friends, this ride isn't slowing down.

Source: Toyota