Giant AI data centers are causing some serious and growing problems – electronic waste, massive use of water (especially in arid regions), reliance on destructive and human rights-abusing mining practices for the rare earth elements in their components... And they also consume an epic amount of electricity – whatever else you might say about human intelligence, our necktop computers are super-efficient, drawing only about 20 watts of power.

They're also causing a massive supply-chain crunch for the high-powered GPUs required to run and train the latest models – there's no amount of "compute" that'll satisfy the endless appetites of these data centers and the companies pushing toward artificial general superintelligence.

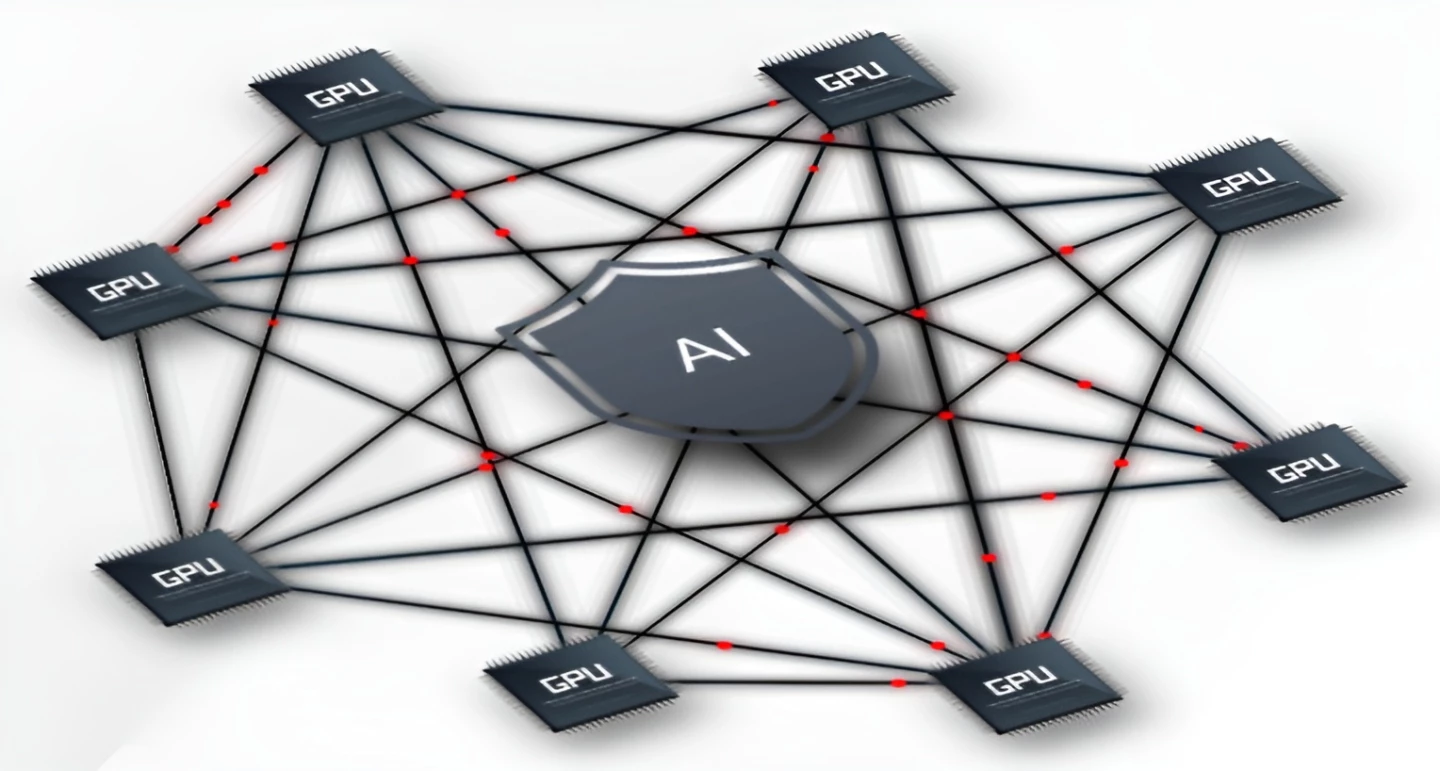

One alternative to the Big Data model is running AI models locally – as in, right there on your own computer. But that limits the size and capability of the model you can run, and can put some harsh demands on your hardware. So what about distributing the task across small, local networks? At Switzerland’s École Polytechnique Fédérale de Lausanne (EPFL), a major technology and public research university, researchers have created software that takes out the middleman of “Big Cloud,” and they’re now selling it through their own company.

EPFL researchers Gauthier Voron, Geovani Rizk and Rachid Guerraoui, of the School of Computer and Communication Sciences, have announced Anyway Systems, an app that can be installed on networks of consumer-grade desktop PCs. There, Anyway downloads open-source versions of AI models such as ChatGPT, so you can ask questions globally, but process locally.

“For years,” says Rachid Guerraoui, head of EPFL's Distributed Computing Laboratory (DCL), “people have believed that it’s not possible to have large language models and AI tools without huge resources, and that data privacy, sovereignty and sustainability were just victims of this, but this is not the case. Smarter, frugal approaches are possible.”

Instead of using warehouse-bound arrays of servers, Anyway Systems distributes processing across a local network, robustly self-stabilizing to make the best use of the hardware available. Running ChatGPT-120B, for example, requires a maximum of four computers. Guerraoui says Anyway Systems is ideal for inference; it may be a bit slower to respond to prompts, but “it’s just as accurate.”
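As a rough illustration of what distributing a model across a local network can involve, the Python sketch below splits a model's layers into contiguous blocks sized in proportion to each machine's memory. This is not Anyway Systems' actual algorithm, which the source does not describe; the function name `partition_layers`, the 36-layer count and the memory figures are all hypothetical.

```python
def partition_layers(num_layers: int, mem_gb: list[float]) -> list[range]:
    """Assign contiguous layer ranges to machines, proportional to memory.

    Hypothetical helper for illustration only; not taken from Anyway Systems.
    """
    total = sum(mem_gb)
    bounds = [0]
    acc = 0.0
    for m in mem_gb:
        acc += m
        # Cumulative boundary: the share of layers covered so far.
        bounds.append(round(num_layers * acc / total))
    return [range(bounds[i], bounds[i + 1]) for i in range(len(mem_gb))]


# Four identical (hypothetical) PCs share a 36-layer model evenly.
even = partition_layers(36, [24, 24, 24, 24])
print([len(r) for r in even])      # [9, 9, 9, 9]

# If one machine drops out, the same call re-balances over the survivors.
degraded = partition_layers(36, [24, 24, 24])
print([len(r) for r in degraded])  # [12, 12, 12]
```

Re-running the function with a shorter list is only a way to picture re-balancing after a failure. A real system would also have to move the weights themselves and recover any work in flight.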

ChatGPT-120B, for reference, is a powerful 'reasoning' model that comes in close behind OpenAI's o3 model on coding, math and health-specific benchmarks. It can use the web, write code in Python and perform complex chain-of-thought tasks, while running on relatively minimalist hardware.

Installing Anyway takes around 30 minutes. Because processing is local, users keep their private data private, and companies, unions, NGOs, and countries keep their data sovereign, and away from the clutches (and potential ethical compromises) of Big Data.

While home users would need several highly-specified PCs to form the local network needed to operate Anyway Systems, relatively small companies and organisations may well find they've already got everything they need. “We will be able to do everything locally in terms of AI,” says Guerraoui. “We could download our open-source AI of choice, contextualize it to our needs, and we, not Big Tech, could be the master of all the pieces.”

But doesn’t Google’s AI Edge already offer such abilities on a single phone?

“Google AI Edge is meant to be run on mobile phones for very specific and small Google-made models with each user running a model constrained by the phone’s capacity,” says Guerraoui. “There is no distributed computing to enable the deployment of the same large and powerful AI models that are shared by many users of the same organization in a scalable and fault-tolerant manner. The Anyway System can handle hundreds of billion[s of] parameters with just a few GPUs.”

According to Guerraoui, similar logic applies to people running LLMs locally with the likes of msty.ai and Llama. “Most of these approaches help deploy a model on a single machine, which is a single source of failures,” he says, noting that the most powerful AI models require extremely expensive machines incorporating AI-specific GPUs. Nvidia's 80 GB H100, for example, is the minimum price of entry for a single-machine installation of ChatGPT-120B.

And that's if you can get your hands on one – supply chain geopolitics, component complexity and rabid demand have combined to create lengthy waiting lists and crazy resale prices up to and beyond US$90,000 for the H100, a product that's supposed to retail for US$40,000.
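To see why an 80 GB card sits near the single-machine threshold for a model of this size, and why a handful of smaller GPUs could stand in for it, here is some back-of-envelope arithmetic in Python. It counts the weights only, ignoring the extra memory needed while the model runs. The 4-bit weight precision and the 16 GB of usable memory per consumer GPU are assumptions for illustration, not figures from EPFL or Anyway Systems.

```python
import math


def weights_gb(params_billion: float, bits_per_param: float) -> float:
    """Approximate size of the weights alone, in GB (10^9 bytes)."""
    return params_billion * bits_per_param / 8


def machines_needed(model_gb: float, usable_gb_per_machine: float) -> int:
    """Smallest number of machines whose combined memory holds the weights."""
    return math.ceil(model_gb / usable_gb_per_machine)


full = weights_gb(120, 16)     # 16-bit weights (assumed precision)
compact = weights_gb(120, 4)   # 4-bit weights (assumed precision)
print(full, compact)           # 240.0 60.0

print(machines_needed(compact, 80))  # 1 -> fits on a single 80 GB card
print(machines_needed(compact, 16))  # 4 -> spread over 16 GB consumer GPUs
```

Under these assumptions, the weights fit on one 80 GB card but need several consumer GPUs working together, which is the gap distributed inference aims to close.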

Getting to that level of processing power is possible with consumer-grade PCs, but according to the EPFL researchers, you'd typically need “a team to manage and maintain the system. The Anyway System does this transparently, robustly and automatically.”

Ideas like Anyway might not put an end to the data center model altogether, and they can't help with the energy-intensive task of training new models, but Anyway does look like a neat and relatively low-lift way to bring high-powered, reasoning-capable AI models out of the Big Data ecosystem and into distributed local networks.

Sources: EPFL, Anyway Systems

Editor's note: this piece was substantially edited for additional context on January 5, 2026.