When you take 800,000 human brain cells, wire them into a biological hybrid computer chip, and demonstrate that it can learn faster than neural networks, people have questions. We speak to Dr. Brett Kagan, Chief Scientific Officer at Cortical Labs.

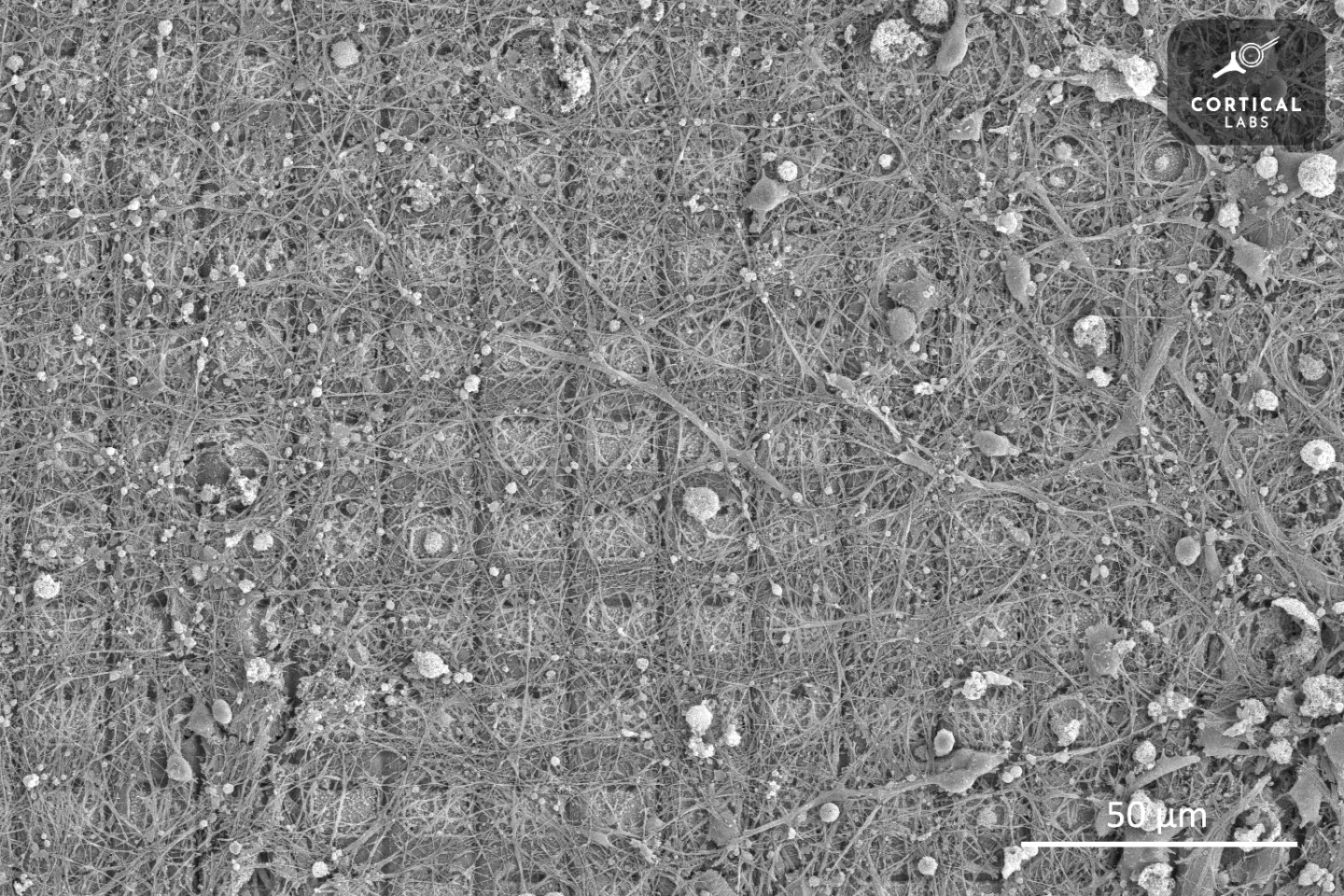

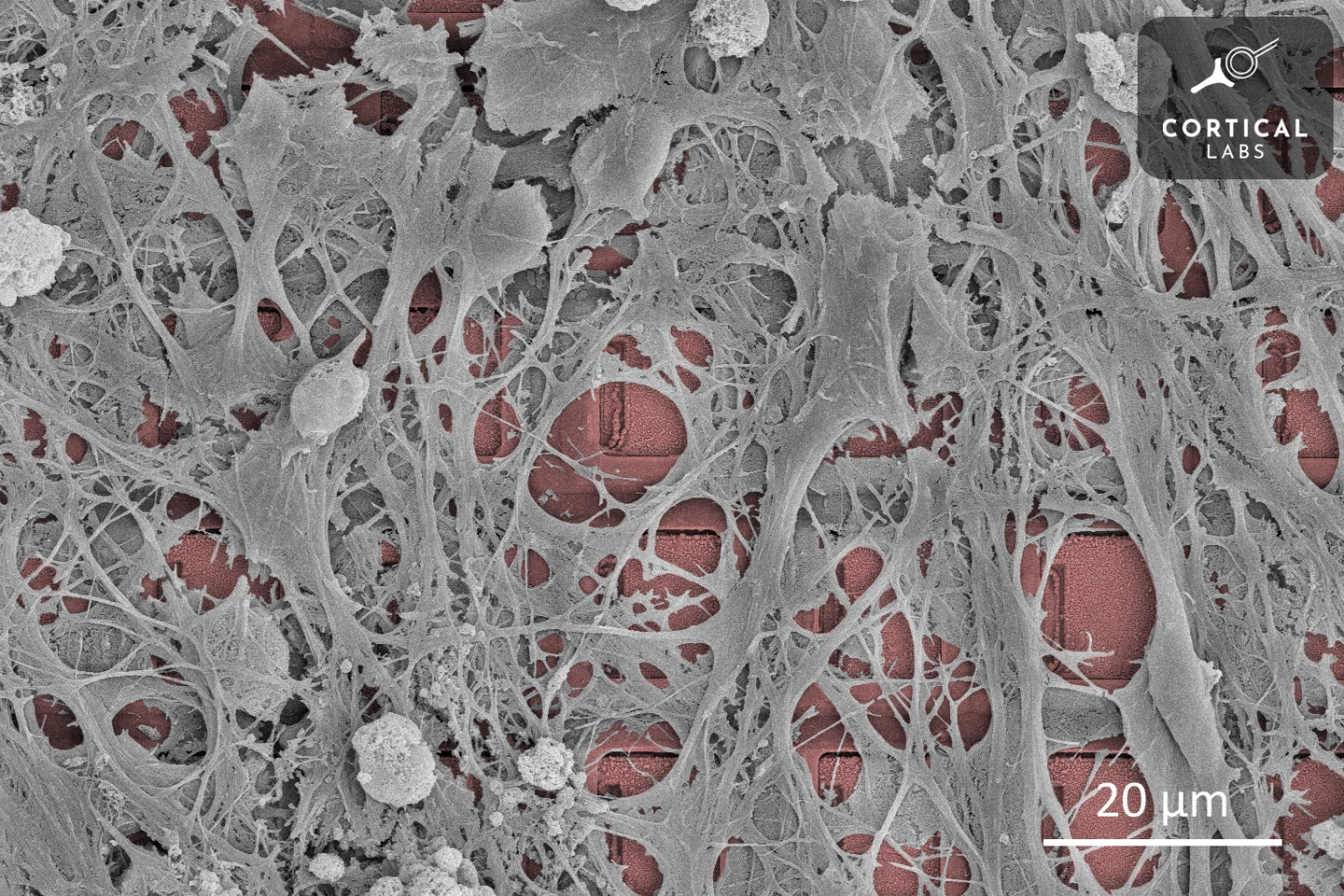

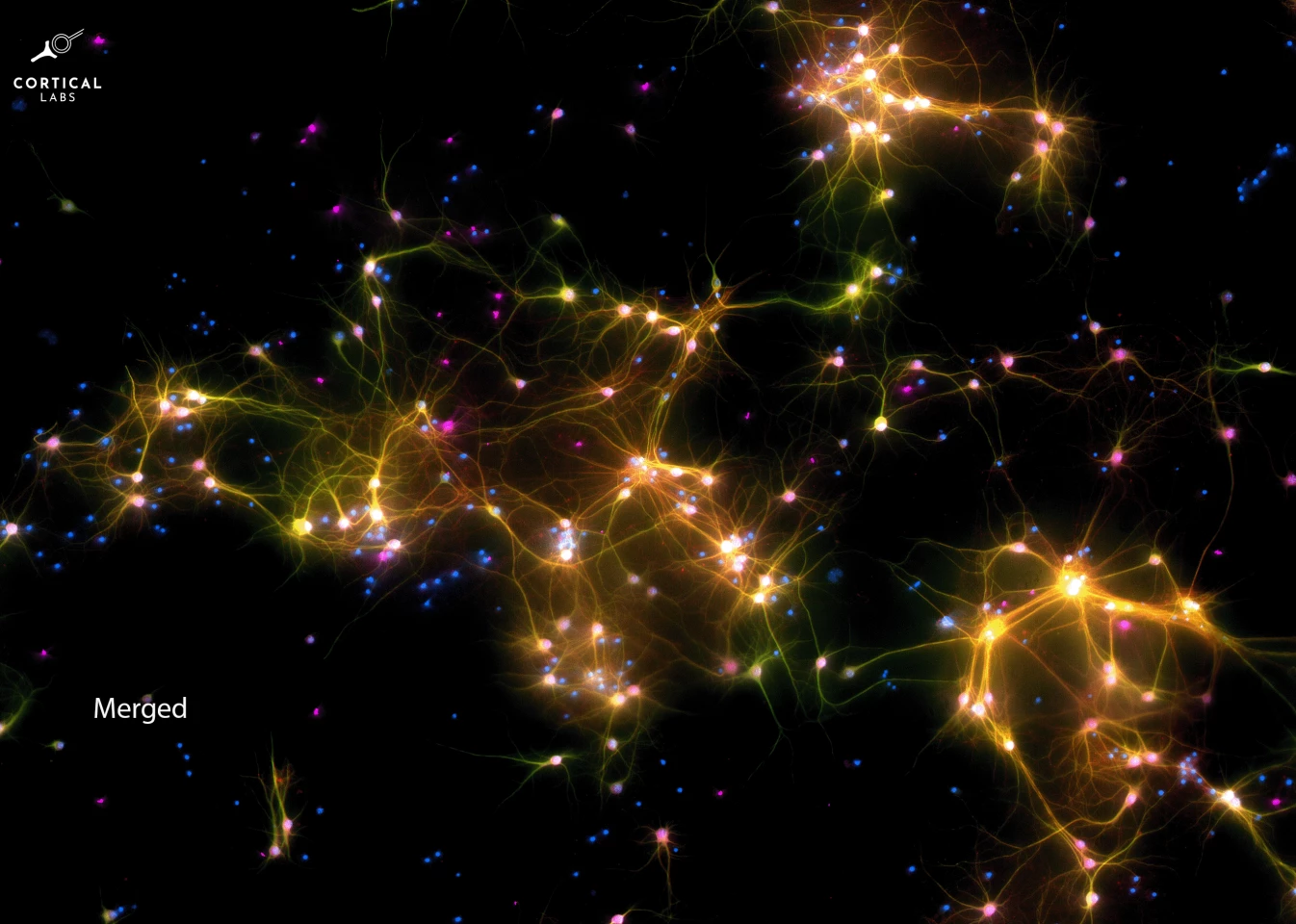

Cortical Labs is an Australian company doing some mind-boggling work integrating human brain tissue, grown from stem cells, into silicon electronics, where they can talk to computer components using the same electrical signals they send and receive in the body, and exhibit learning behaviors by constantly rewiring themselves, growing and shrinking connections as they do when we learn.

The company says its "human neurons raised in a simulation... grow, learn and adapt as we do," and that they consume vastly less power, appear to learn much faster, and generalize learned knowledge more effectively than the building blocks of today's reinforcement learning supercomputers, while showing "more intuition, insight and creativity."

Indeed, the company hit the world stage in 2022 with a paper titled "In vitro neurons learn and exhibit sentience when embodied in a simulated game-world."

Sentience – the capability to experience sensations or feelings – in this case referred to the neurons' apparent preference for ordered and predictable electrical stimulation when wired into a computer chip, as opposed to random and unpredictable stimulation.

The Cortical team effectively used this preference as a reward/punishment scheme while feeding the cells information about a video game – Pong – and allowing them to move the paddle, and found that both human and mouse brain cells were quickly able to figure out how the game worked, keeping the ball in play significantly longer after just 20 minutes in the system. Human neurons, perhaps unsurprisingly, showed a significantly greater learning rate than the mouse ones.

A ridiculously exciting moment for science, and a potential revolution in computing – but the concept of sentience implies that there's an entity there experiencing something. This raises ethical questions; does a collection of human brain cells, grown in a petri dish, bathed in cerebro-spinal fluid and fed an electrical picture of a simulated world, have moral rights?

If you eventually give it control over a robotic body, thus giving it a similar electrical picture of the real world to the one our senses give us – and that certainly seems to be a stretch goal here, judging again by the company's website – what on Earth do you call that thing? And as the company moves to commercialize this technology, is the whole shebang remotely OK?

While the company has clearly leaned in to the whole sentience idea in some of its advertising, Cortical Labs is keen to get out in front of these questions, put a lid on the idea that these things might be conscious or self-aware, and build an ethics framework into its work at this early stage. As such, it's teamed up with some of its more vocal early critics and presented a framing study to begin illuminating the path forward, putting forth the idea that these living computer chips have plenty of potential to do moral good.

We caught up with Cortical Labs Chief Scientist Dr. Brett Kagan, to learn where things are at with this controversial and groundbreaking technology. What follows is an edited transcript.

Loz: I think I first encountered your work earlier this year when Monash announced military funding for development of the DishBrain.

Dr. Brett Kagan: Yeah. We'll leave it at that, Monash put out a media release on that. Yes, they're involved in that and we're doing a collaboration with them, as well as University of Melbourne on that. But the DishBrain, and the synthetic biological intelligence paradigm, have been developed pretty much in-house, with some involvement of just a few collaborators looking at some of the stuff around the edges.

So where are things at at the moment with the technology itself, and in which directions are you pushing?

The technology is still very much at the early stages. You know, we often compare our work now to the early transistors. They're kind of ugly. They're kind of big, and ungainly, but they could do some useful stuff. And decades of innovation and refinement led to some remarkable technology that now pervades our entire world. We see ourselves as being at that early stage.

But the difference between this and transistors is that at the end of the day, we're working with human biological tissue, which reflects to us – so there are immediate applications that become useful now. You don't have to wait for the technology to get to that very mature stage that could end up in everyone's home. In fact, if you can figure out how brains are working, you can design better drugs, or understand diseases better, or even just understand us better, which from a research perspective is highly impactful.

Can you describe DishBrain in simple terms?

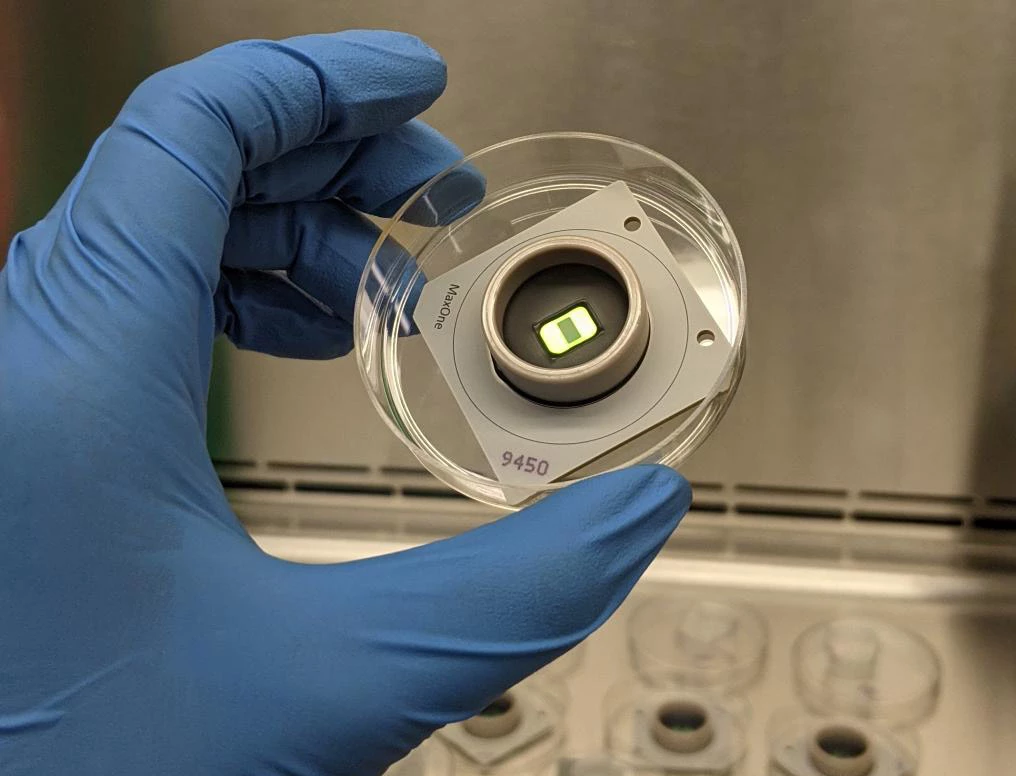

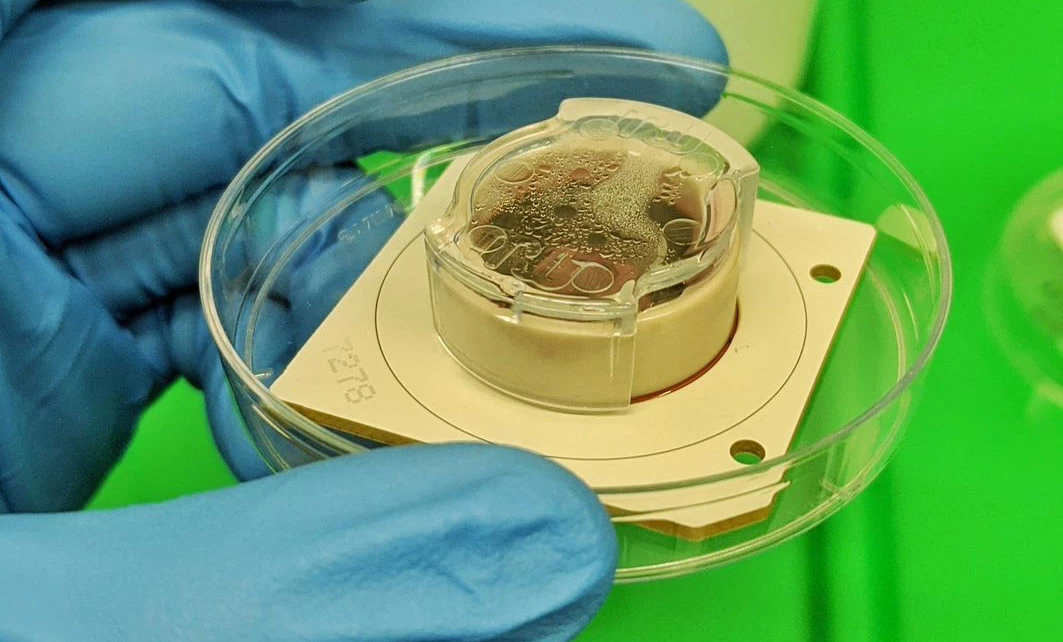

We've already moved on from that moniker, but DishBrain was the initial prototype we developed to really ask the question, can you interact with living biological brain cells in such a way as to get an intelligent process out of them? And we did that by playing the game Pong.

So essentially, DishBrain is a system that takes information from neurons when they're active – which you can see through electricity – places that information into a world – in this case, the Pong game – and allows them to actually impact the Pong game by moving a paddle. And then as they move the paddle that changes the way the world looks, and you then take that information for how the world looks and feed it back into the neurons through electrical information. And if you do that in a tight enough loop, it's almost like you can embody them inside this world.

That's what the DishBrain prototype was designed to do. And what we saw, to our excitement, was actually yeah, not only can you get meaningful learning, you get that meaningful learning incredibly rapidly, sort of in line with what you might expect for biological intelligence.
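For readers who think in code, the loop Kagan describes can be sketched roughly as follows. This is a toy illustration only, not Cortical Labs' software: every name here (`fake_culture`, `decode_action`, `feedback`, `run_loop`) is hypothetical, the "culture" is a random-number stand-in that does not learn, and the feedback rule simply mirrors the predictable-versus-random stimulation scheme described earlier in this article.

```python
import random

HEIGHT = 8  # rows in the toy game world (assumed value)


def fake_culture(ball_y, paddle_y, rng):
    """Stand-in for recorded activity: two noisy 'motor' readings.

    A real system would read electrical activity from the cells;
    this placeholder just biases the readings toward the ball.
    """
    up = max(0, paddle_y - ball_y) + rng.random()
    down = max(0, ball_y - paddle_y) + rng.random()
    return up, down


def decode_action(activity):
    """Turn the two readings into a paddle move: -1 up, +1 down, 0 stay."""
    up, down = activity
    if up > down:
        return -1
    if down > up:
        return 1
    return 0


def feedback(hit, rng, n_pulses=4):
    """Predictable pulses after a hit, unpredictable ones after a miss."""
    if hit:
        return [1.0] * n_pulses
    return [rng.random() for _ in range(n_pulses)]


def run_loop(steps=50, seed=0):
    """Read activity, act on the world, feed the result back, repeat."""
    rng = random.Random(seed)
    paddle_y = HEIGHT // 2
    log = []
    for _ in range(steps):
        ball_y = rng.randrange(HEIGHT)                  # state of the world
        activity = fake_culture(ball_y, paddle_y, rng)  # "record" from cells
        move = decode_action(activity)                  # activity -> action
        paddle_y = min(HEIGHT - 1, max(0, paddle_y + move))
        hit = abs(ball_y - paddle_y) <= 1               # did the paddle reach?
        log.append((hit, feedback(hit, rng)))           # stimulation sent back
    return log
```

Calling `run_loop(10)` returns ten `(hit, stimulation)` pairs. In the real system the interesting part is what the neurons do with that stimulation over time, which this sketch makes no attempt to model.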

So from the Pong construct, where have you taken it since then?

Essentially, we've been trying to take that technology to the next level. The initial work we did was very cool in an academic sense. You know, we had a question, we developed a new technology to answer the question, and we got a pretty nice answer that people seem to be interested in.

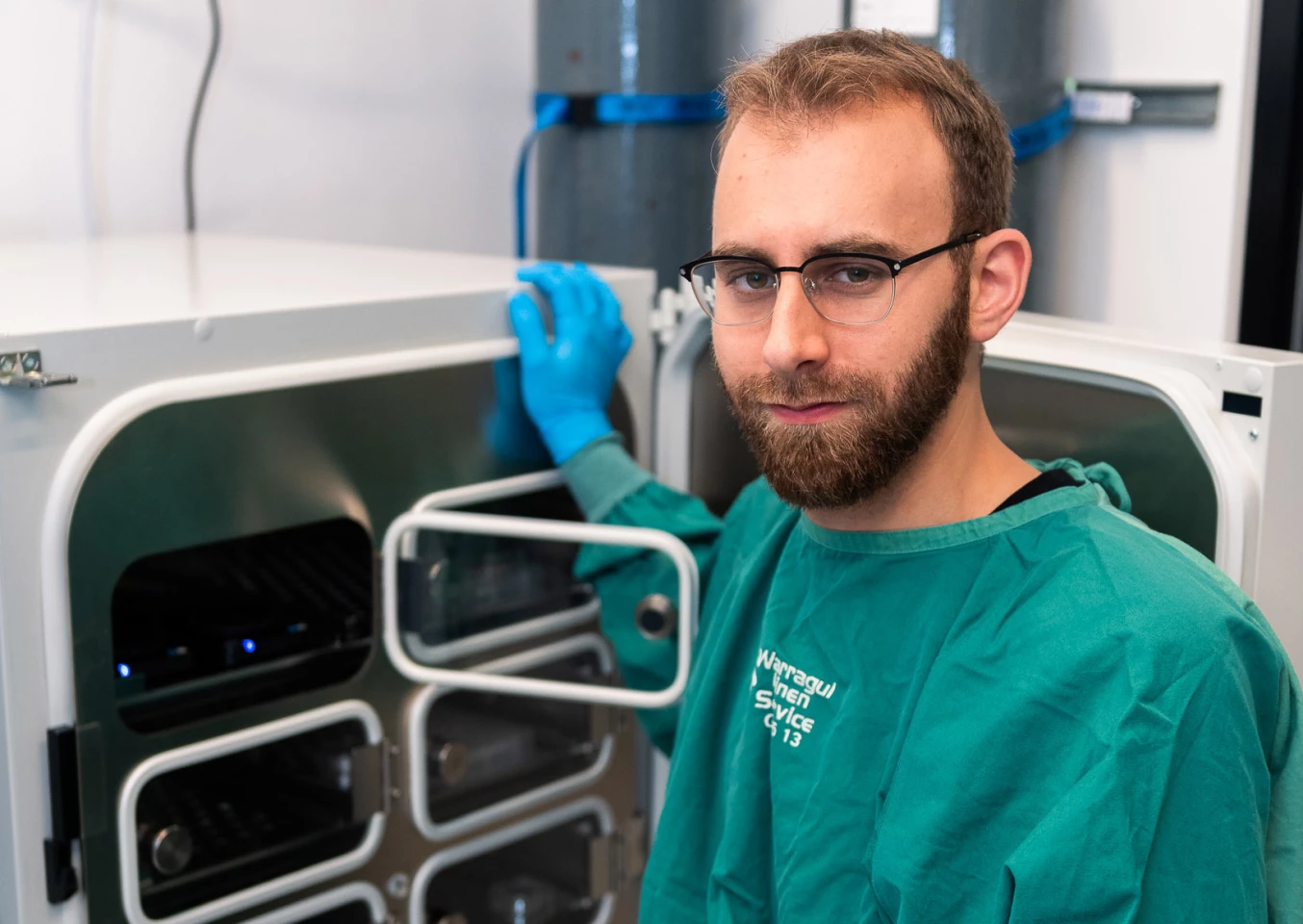

But actually for us, it's no good having this technology if others can't access it. So we've been putting a huge amount of effort into actually building more accessible technologies for people. We've been building a full stack – hardware, software, user interface, as well as all the wetware, which we call the biology, so that people can actually have access to this and start exploring ways to apply it, that might appeal to them.

We get all sorts of requests – can they play music? Can you use it to test for epilepsy? Can you mine Bitcoin with it? Some of these we provide more support to than others! But the bottom line is like, even if it's an idea we think is a bit out there, we want to make it available for other people to investigate it. So that's where we've been focusing on taking the technology: just making it accessible. And we've made some great strides in that.

So what, you've got a box that you can now ship to people?

It's still in the prototype stage, but yeah, we have some boxes.

How long do these cells live?

We're able to keep cells alive for up to a year. Six months is very standard, but that's actually just using traditional cell culture methods. If you're going to ask how long do human neurons survive for, I mean, you can look at people. We have neurons, and while some new ones are born throughout our lives, for the most part, the ones you're born with are the ones you die with. Probably slightly fewer for most of us! So neurons can last 100, 120 years in theory.

In practice, we don't think we'll necessarily get neurons in a dish to do that, but we do have confidence that we will be able to get to a state where we can keep them alive and functioning well for 5 to 10 years. We're not there yet, but theoretically, it's very possible.

Right, and which applications are you seeing them shine in? What's looking most promising?

We see the immediate short term focus being stuff like drug discovery, disease modeling, and understanding how intelligence arises.

Now that can sound kind of pedestrian, some might say, but if you look at, let's say, clinical trials for neurological diseases, for new drugs, you're talking about 1-2% success rates. For iterations on things already shown to work for another disease, you're looking at only a 6-7% success rate, maybe 8%, depending on what you're looking at. It's abysmally low. And so that means there's all this money being poured into drugs that in theory should help people, but are wasted.

So, yeah, we can improve that by actually testing drugs on the true function of neurons, right? The true function of a neuron is not just to sit in a dish, or to even have activity. The true purpose of a neuron is to process information. So we've been doing testing on drugs and finding that you can get massively different responses if you're testing them on neurons while they're actually playing a game, versus just sitting there doing nothing.

Right, you're giving these neurons a job to do, and then assessing the effects of various drug interventions?

Yeah, exactly. And it gives you a very different perspective, that could really change the field of pharmacology for neuro-related diseases.

Fascinating. So as a computer component, outside the context of drug testing, are there particular tasks that this thing is likely to excel in?

Yeah, the answer is something like, anything that we humans might do better than machine learning. That might sound weird, because these days people love to hype up the machine learning angle, ChatGPT and whatnot. And they're really cool devices. And they do some stuff really, really well. But there are also things they just don't do well at all.

For example, we still use Guide Dogs for the visually impaired, instead of some sort of machine learning protocol. That's because dogs are great at going into a new environment, inferring what's around them, and what that is, even if they've never seen it before. You and I can see any trash can and generally identify it as a trash can pretty quickly. Machine learning can't necessarily do that.

So that's what we've seen. We've done tests against reinforcement learning, and we find that in terms of the number of samples the system has to see before it starts to show meaningful learning, it's chalk and cheese. The biological systems, even as basic and janky as they are right now, are still outperforming the best deep learning algorithms that people have generated. That's pretty wild.

We published a paper through NeurIPS last year, if you're familiar with that conference, and we have another one under peer review at the moment. So yeah, it's been validated, it's not just an anecdote!

I guess the ethics side of things comes in when you start actually trying to figure out like, what are we dealing with here? Is this a life form? I mean, it is a life form! The cells are alive. It's biological. So I guess the question is, how do you draw a distinction between what you guys are working with here and an animal? How is it fair to picture and to treat these things?

Everyone has a slightly different intuitive feeling about what that should be, and what we're really focused on in this work is anticipating what those might be, so that we can actually help educate people better as to the reality of the situation. Because it's all too easy for people to form an idea in their head that doesn't reflect that reality.

So we almost view it actually as a kind of different form of life to let's say, animal or human. So we think of it as a mechanical and engineering approach to intelligence. We're using the substrate of intelligence, which is biological neurons, but we're assembling them in a new way.

Let's compare an octopus to a human, they both show an intelligence, in some ways remarkable intelligences. But they're incredibly different, right? Because their architecture is so different. We're trying to build another architecture that yet again is different. And it can use some of the abilities that these things have, without necessarily capturing, let's say, the conscious, emotional aspect. So in some ways, it's a totally different source of life, but then how do you treat that? And that's the questions we start to discuss in this paper.

Yeah, obviously consciousness itself is still pretty mysterious. What's your intuition on, like, is there a number of cells, is there a level of interconnectivity... At what point does it go from being a pile of cells to getting a social security number?

Exactly, and people have been trying for even longer than neuroscience research has been around to answer these questions – like what is consciousness? What makes us us? What makes our feelings different to someone else's feelings? Or is it all the same? Or is it all an illusion?

We can't answer those questions now. But what we can do with these systems is actually start to pull apart, at a more nuanced level, what leads to certain patterns of activities and patterns of behavior that one might see. So the other work that we published in Nature Communications, about the same time as this one, was actually pulling apart something that happens in humans, that people had proposed was a marker for consciousness.

And we found that when you put these cells in an environment, like the Pong environment, they started to show this metric really, really strongly. But when we dove deeper into it we realized really clearly, like no question of a doubt about it, that what the cells are showing is not a marker of consciousness; it's simply what happens to a complex system in a structured information environment.

And so what we've done here is actually say look, some people – not many, but some – would propose that this is a marker of consciousness. But actually, it's so much more basic than that. And the initial proposals were very erroneous, based on a lack of information about how some things are working.

Briefly, what metric was this that you're talking about?

It's called criticality. It's a bit mathy. But in short, you can imagine that there's a system, let's say water, and it could either freeze or stay liquid, and it's like right on the edge point. If it gets a bit cold, it'll freeze. If it gets a bit warmer, it won't. It's like right on the edge point. And brains can do similar things with activity. They can sit right on the edge point between chaos and order.
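One common textbook way to picture that "edge point" is a branching process, in which each active unit triggers, on average, `sigma` further units. The sketch below is an illustration under that assumption and is not the analysis used in the Nature Communications paper; the function names, the `fanout` of two downstream units and the `cap` on avalanche size are all hypothetical choices made for the example.

```python
import random


def avalanche_size(sigma, rng, fanout=2, cap=10_000):
    """Total activations in one cascade started by a single active unit.

    Each active unit gets `fanout` chances to activate another unit,
    each succeeding with probability sigma / fanout, so the average
    number of units triggered per active unit is `sigma`.
    """
    p = sigma / fanout
    active, total = 1, 1
    while active and total < cap:
        triggered = sum(1 for _ in range(active * fanout) if rng.random() < p)
        total += triggered
        active = triggered
    return min(total, cap)  # cap stops runaway cascades


def mean_avalanche_size(sigma, trials=2000, seed=1):
    """Average cascade size over many independent trials."""
    rng = random.Random(seed)
    return sum(avalanche_size(sigma, rng) for _ in range(trials)) / trials
```

With `sigma` below 1, cascades fizzle out quickly (the ordered side); above 1, they tend to run away until they hit the cap (the chaotic side). At `sigma` equal to 1 the system sits on the boundary, where cascades of every size occur, and that boundary is what "critical" refers to in this picture.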

And that's been proposed to be really useful for a bunch of things. And this is where it all comes together, right? Like, you've got this hardcore math concept. And you have these discussions and ethics. But actually, by building out these systems, what we can start to do is be like, what is the right way ethically to think of this math concept and how it actually reflects something that's more meaningful to the average person. Which is, let's say, how they relate to the world and experience the world.

And you can see very clearly, in a way that people couldn't describe before, that that metric is useful to describe someone who's in a structured world... It's hard to make this simpler, right? But it's not a good marker for say, someone experiencing something unique.

And so by using the system, not only is there an ethics of the system, but the systems can help to answer ethics of the world, in a way that you can't do in a human, because a human has so much complexity going on. You can't just isolate and be like, what causes this thing to happen or not happen? Because there's everything else going on internally, externally, you can't remove a human from the world. But with a system you can. So you can start to pull apart and find that these things are far more fundamental, and that helps us ethically to understand that actually, these things don't show any markers of consciousness. What they do show is markers of structural organization, you know, which one might call intelligence.

It's almost like you're approaching this question from the opposite side as the people trying to figure out whether AIs are conscious. Like, GPT has passed all the Turing tests, there are very few tests on which language models don't appear to exhibit sentience, but intuitively, people know that it's not a sentient thing at the moment, it's a matrix of probabilities and they're trying to clarify the distinction at that end. But you guys are going from the opposite direction, which is like, we've got a group of living cells here. At what point does this become conscious or sentient?

Exactly. And I mean, even those words are so poorly defined, and this is actually one of the big points we make in our paper. Scientifically, ethically, socially, whatever, to discuss this technology right now is almost impossible, because nobody can agree on what these words mean.

And so we say to people, look, this technology seems to have huge potential. But because it's biological, and particularly using human cells, people start to feel unsure about it. So we're trying to say, hey, look, you can do this in a highly ethical way. Not only can you do it in a highly ethical way, doing this technology is really the only way we can start to understand the ethics of other things. That's why we've engaged with these amazing bioethicists, who have no vested interest in anything other than working out how to approach this technology responsibly, so it can be sustainable and have longevity.

What are the practical considerations for you guys? Like, if at some point you determine that you're dealing with something conscious, how does this affect what you do? Or are there kind of grades of sentience that can be treated differently?

100%. So for us, it needs to be a very tiered approach, and the one thing we're very against is people dropping into slippery slope arguments now.

For us, the very first thing we need to establish is the language that we're using, so we can all be on the same page. In theory, that should not be an impossible task. In practice, well, we'll see. Then we need to start to figure out how do we even define when these problems pop up? So we looked at criticality, maybe that was one option? It's absolutely, clearly not. We've looked at other things, again, absolutely, clearly not good markers. So it's like, first we need to find the markers that we can work with.

And as I said, that's got this great benefit where it has this very organic interaction with the existing work people are doing, in trying to understand what consciousness even is, and how to define it. And that has implications not just for this work, but arguably for the way we live as people, how we treat animals, how we treat plants.

If we can understand at the level where consciousness is arising, it really just redefines how we relate to the world in general and to other people and to ourselves. And that's a really exciting perspective.

And then beyond that, let's say that these systems do develop consciousness – in my opinion, very unlikely, but let's say it does happen. Then you need to decide, well, is it actually ethically right to test with them or not? Because we do test on conscious creatures. You know, we test on animals, which I think have a level of consciousness, without any worry... We eat animals, many of us, with very little worry, but it's justifiable.

So even if they do display this, let's say that you have to test on these partially conscious blobs in a dish. And let's not oversell it – little blobs of tissue in a dish that show some degree of awareness in the far future when we've improved it. It still could be worthwhile testing on it if you can develop, let's say, a cure for dementia. Right? I'm not sure where it would go. Obviously, these are very speculative. But even in that case, there could still be very strong arguments that this is still the best way to solve a much bigger problem.

You're playing in a strange and magical kind of world, invoking these concepts.

Yeah, a lot of people tell us what we're working on is like science fiction. But my response to that is that the difference between science and science fiction is once you start working on the problem, and we're very fortunate to have an amazing team of people, both with Cortical Labs, and with our collaborators who are working on these problems right now.

So where do you go from this paper?

So for us, this really is a framing paper. It's sort of saying: Here's what the technology looks like, because that's still very unclear to many people. Here's some applications, and here's our thinking around some of those applications and here's how to step forwards.

And the key pathway is that we're adopting this idea called anticipatory governance, which is really about identifying where are the benefits, where are the potential issues, and how do we maximize those benefits while minimizing the issues. We'll really be diving into this area deeper to make sure that the work can, as I say, be progressed and applied responsibly.

The one thing we don't want is for people to see this and think, oh, science is running amok, no one's regulating this. Well, no one can regulate this yet because we don't understand it well enough. So our goal is to understand it, so we can all move forward and give people the confidence that this research has been done in the right way. You know, I'm not aware of many research fields, or perhaps any, where this sort of approach has been taken, where people have integrated ethics into the foundation of this type of research. But I think for the work we're doing, it's necessary.

Some of the people on this paper that we just did, the way we initially made contact was in opposition, they'd written a paper basically calling for prohibition of research down this line. And we wrote in saying, look, there's a bunch of errors we think that you've made in how you've done some of this work. And these folks actually turned around and went, yeah, actually, we agree with you. Why don't we work together on the next paper? So they ended up putting a piece in The Conversation.

And so we reached out to them saying, you know, we appreciate your openness, let's work together. So some of these people have gone from, you know, 'we need to decide what's not permissible,' to quote their paper, 'and actually start regulating it,' to 'this stuff has huge ethical potential if we do it right.' Let's work together. So I think it's been a great sort of 180 in quite a lot of the community simply by engaging and bringing all these different people together.

I was wondering what kind of external pushback you'd been getting. But those are the people you're now working with on ethics?

Yeah. You're always going to get a few people who are just negative, right? There's always gonna be people who are just naysayers. But what's been really awesome for us to see is that as soon as the international community realized that we want to work with people to make this right and do the right thing, the welcome and the engagement we've had has been amazing.

And I think the problem really highlights that most scientists have a bit of disdain for that side. You know, they want to sit in their ivory towers and lob things off. But we're not like that, right? We want to bring the international community along. And so that's why we've gone out and engaged with them.

So where you say there's huge ethical potential, you're talking about the ability to test drugs on complex neuron configurations doing some kind of intelligent work, before you go to clinical trials in humans?

That's just one of them. They're captured in the paper, so I'll be super brief. But one is reducing testing on animals. Two is improving the chance for clinical outcomes for people. Three is like equity and access; it's like a personalized medicine approach if you can test on a person's own DNA – which we can do, because we can generate cells from a consenting donor, so you actually improve equity and access. And because we're making the technology affordable, that has a socioeconomic benefit to it as well.

There's also the whole power consumption issue, as I said. If this could lead to more efficient use of power, it's less damaging to the environment, which is generally agreed upon as an ethical good.

And then once you get to this consciousness debate, the chance to actually identify what may or may not lead to consciousness, so we can figure out how to just be better in general, is huge. It's further out there, but if it's attained, it's a huge ethical benefit. So these are sort of the core ones, but as I said, they're discussed in more detail in the paper.

Right. Where does your company go commercial? What sort of product do you end up selling?

We're building up a cloud-based system. There's two options basically, the earliest one will be a cloud-based system, so you could log in from anywhere in the world, and you could test your environments that you might want to build, or you could work with us, and we'll help build them for you.

It'd be like, what happens to a neuron or collection of neurons if you do this, or if you put this drug in, or if you use this type of disease, cell line, whatever. So that's the first one, basically a cloud-based service. You can think of it like AWS, but for biology, right, and very much focused on biological interventions and their effect on problem solving.

And in some ways, it could be computation, so we have a lot of neuromorphic people wanting to figure out how these things work so they can try and reproduce it in hardware. Or machine learning people looking at maybe, you know, could I build a better algorithm designed closer to the way the biology works? So there's a computing angle even now. It's just more of a deep research question so far.

So those are the immediate short term commercialization options. Longer term, we obviously can't give any clear promises because it's more speculative, but as I said, the preliminary work suggests that for autonomous, self-learning systems, this is incredibly supportive.

And on the ethical angle, for an autonomous, self-learning system, because it's contained to the biology, it's also arguably a hell of a lot safer than something that could, you know, presumably get out there and just replicate itself through software alone.

Not to mention the whole power consumption angle – machine learning, LLMs and all of that are hugely power-hungry during training, but human brains run on 20 watts.

They've been around for millions and millions of years. They've had a bit of time to optimize!

Thanks to Dr. Brett Kagan and Tom Carruthers for their assistance on this piece.

Source: Cortical Labs