A landmark AI therapy chatbot is closing down on June 30, and industry experts believe its demise most likely reflects the challenges of delivering impactful mental health services and navigating safety issues in the digital space.

Woebot, which we covered when it launched back in 2017, was seen at the time as the "future of therapy," as in-person mental health services became increasingly hard to access around the globe. After raising millions in funding, it received the US Food and Drug Administration's Breakthrough Device Designation in 2021 for WB001, its personalized postpartum depression service, which combined cognitive behavioral therapy (CBT) and psychotherapy delivered by its conversational chatbot.

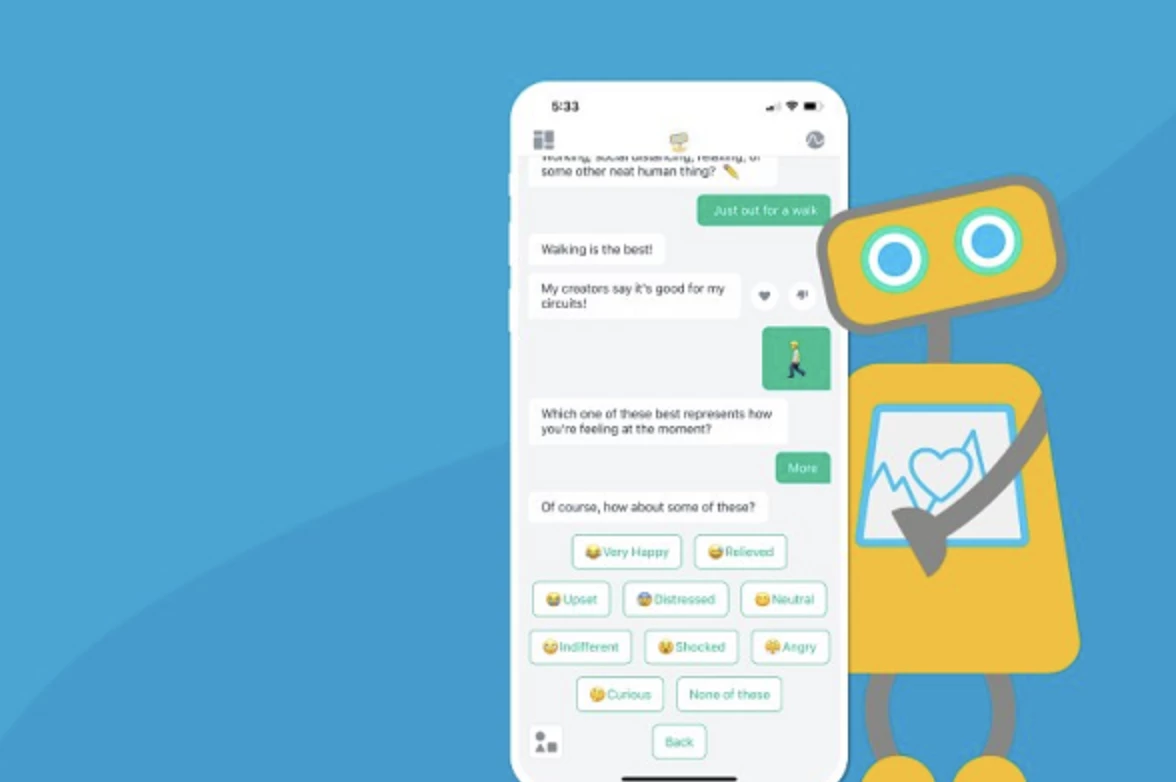

The company, Woebot Health, rolled out a subscription-free chatbot, specialized services aimed at teens, and other services designed to be used alongside therapy or as a standalone tool. In 2023, Woebot's famed creator, clinical psychologist Alison Darcy, was named to TIME magazine's list of the 100 most influential people in AI.

“It’s an emotional assistant that’s there for you in tricky moments and always has your best interest at heart,” Darcy told the magazine at the time.

The app was designed to fill gaps that traditional therapy couldn't, such as supporting people on wait lists to see a psychologist or between appointments. Studies showed it was effective in relieving symptoms of anxiety and depression when used as designed.

Users were notified of the app's closure by email, given the option to download their chat logs, and reassured about the privacy of their data.

But despite the tight guardrails built into Woebot's therapy services and the clinicians involved in its programming, its demise underscores the fundamental challenges of delivering mental health treatment via AI.

"Chatbots can probably provide benefits for people with mental health concerns, but they also create risks and challenges," researchers noted in a 2023 paper in the journal Digital Health. "The ethical issues we identified involved the replacement of expert humans, having an adequate evidence base, data use and security, and the apparent disclosure of crimes."

Recent research has suggested that general-purpose chatbots such as ChatGPT may be isolating those in need even further. Nonetheless, other studies have shown that people are turning to the likes of ChatGPT and Claude for mental health support and as a sort of 24/7 digital life coach.

Since its launch, Woebot has been used in 120 countries and has had more than two million conversations with users. It was the first therapy chatbot of its kind, and research found that people accessing it had formed genuine personal relationships with their digital mental health provider.

"Although bonds are often presumed to be the exclusive domain of human therapeutic relationships, our findings challenge the notion that digital therapeutics are incapable of establishing a therapeutic bond with users," the researchers noted in a 2021 study.

Woebot Health, which has raised US$123 million in capital to date, has yet to release a statement on the app's closure, so it's unclear exactly why the company decided to take it offline.

Source: Woebot Health