Biocomputing is one of the most bizarre frontiers in emerging technology, made possible by the fact that our neurons perceive the world and act on it speaking the same language as computers do – electrical signals. Human brain cells, grown in large numbers onto silicon chips, can receive electrical signals from a computer, try to make sense of them, and talk back.

More importantly, they can learn. The first time we encountered the concept was in the DishBrain project at Monash University, Australia. In what must've felt like a Dr. Frankenstein moment, researchers grew about 800,000 brain cells onto a chip, put it into a simulated environment, and watched this horrific cyborg abomination learn to play Pong within about five minutes. The project was quickly funded by the Australian military, and spun out into a company called Cortical Labs.

When we interviewed Cortical Labs' Chief Scientific Officer Brett Kagan, he told us that even at an early stage, human neuron-enhanced biocomputers appear to learn much faster, using much less power, than today's AI machine learning chips, while demonstrating "more intuition, insight and creativity." Our brains, after all, consume just a tiny 20 watts to run nature's most powerful necktop computers.

"We've done tests against reinforcement learning," Kagan told us, "and we find that in terms of how quickly the number of samples the system has to see before it starts to show meaningful learning, it's chalk and cheese. The biological systems, even as basic and janky as they are right now, are still outperforming the best deep learning algorithms that people have generated. That's pretty wild."

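For a feel of what "the number of samples the system has to see" means as a yardstick, here's a minimal Python sketch. It assumes learning is scored as a run of hits and misses, and that "meaningful learning" means a moving average crossing a chosen threshold. The function name `samples_to_criterion`, the window, the threshold and both hit/miss records are made up for illustration; none of it comes from Cortical Labs' tests. A lower return value means the learner needed fewer samples.

```python
from collections import deque


def samples_to_criterion(scores, threshold, window=5):
    """Return how many samples had been seen when the moving average of the
    last `window` scores first reached `threshold`, or None if it never did."""
    recent = deque(maxlen=window)
    for seen, score in enumerate(scores, start=1):
        recent.append(score)
        if len(recent) == window and sum(recent) / window >= threshold:
            return seen
    return None


# Invented hit/miss records (1 = hit, 0 = miss), purely for illustration.
fast_learner = [0, 1, 0, 1, 1, 1, 1, 1]
slow_learner = [0, 0, 1, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 1]

print(samples_to_criterion(fast_learner, threshold=0.8))
print(samples_to_criterion(slow_learner, threshold=0.8))
```
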
One downside – apart from some clearly thorny ethics – is that the "wetware" components need to be kept alive. That means keeping them fed, watered, temperature-controlled and protected from germs and viruses. Cortical's record for keeping a culture alive, back in 2023, was about 12 months.

We've since covered similar projects at Indiana University – where researchers let the brain cells self-organize into a three-dimensional ball-shaped "Brainoware" organoid before poking electrodes into it – and Swiss startup FinalSpark, which has started using dopamine as a reward mechanism for its Neuroplatform biocomputing chips.

If this is the first time you've heard about this brain-on-a-chip stuff, do pick your jaw up off the floor and read a few of those links – this is absolutely staggering work. And now Chinese researchers say they're taking it to the next level.

The MetaBOC (BOC for brain-on-chip, of course) project brings together researchers from Tianjin University's Haihe Laboratory of Brain-Computer Interaction and Human-Computer Integration with other teams from the Southern University of Science and Technology.

MetaBOC is an open-source piece of software designed to act as an interface between brain-on-a-chip biocomputers and other electronic devices, giving the brain organoid the ability to perceive the world through electronic signals, operate on it through whatever controls it's given access to, and learn to master certain tasks.

The Tianjin team says it's using ball-shaped organoids, much like the Brainoware team at Indiana, since their three-dimensional physical structure allows the cells to form more complex neural connections, much like neurons do in our brains. These organoids are grown under low-intensity focused ultrasound stimulation, which the researchers say seems to give them a better foundation of intelligence to build on.

The MetaBOC system also tries to meet intelligence with intelligence, using AI algorithms within the software to communicate with the brain cells' biological intelligence.
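
To make that perceive-act-learn loop a little more concrete, here's a toy Python sketch. None of it comes from MetaBOC itself: `ToyCulture`, `encode`, `decode` and `run` are names we've made up, the "culture" is just a small table of numbers standing in for living neurons, and the reward rule is our own assumption about how feedback might be scored.

```python
import random


class ToyCulture:
    """Hypothetical stand-in for a neuron culture: a table of
    channel -> action preferences nudged by reward. Not a biological model."""

    def __init__(self, n_channels, n_actions, seed=0):
        self.rng = random.Random(seed)
        self.weights = [[0.0] * n_actions for _ in range(n_channels)]

    def respond(self, channel):
        """Pick the currently preferred action, breaking ties at random."""
        row = self.weights[channel]
        best = max(row)
        return self.rng.choice([a for a, w in enumerate(row) if w == best])

    def feedback(self, channel, action, reward):
        """Strengthen or weaken the action that was just taken."""
        self.weights[channel][action] += reward


def encode(obstacle_side):
    """Sensor reading -> stimulation channel (an assumed mapping)."""
    return {"left": 0, "right": 1}[obstacle_side]


def decode(action):
    """Recorded response -> robot command (an assumed mapping)."""
    return ["steer_left", "steer_right"][action]


def run(steps=200, seed=0):
    """Closed loop: sense, stimulate, read out, act, reward."""
    rng = random.Random(seed)
    culture = ToyCulture(n_channels=2, n_actions=2, seed=seed)
    outcomes = []
    for _ in range(steps):
        side = rng.choice(["left", "right"])   # simulated obstacle
        channel = encode(side)                 # perceive
        action = culture.respond(channel)      # the "brain" answers
        command = decode(action)               # act
        avoided = command != f"steer_{side}"   # steering away is a success
        culture.feedback(channel, action, 1.0 if avoided else -1.0)  # learn
        outcomes.append(avoided)
    return outcomes


outcomes = run()
print(sum(outcomes), "of", len(outcomes), "obstacles avoided")
```

In a real rig, `respond` and `feedback` would presumably be replaced by stimulating and recording through the chip's electrodes; the sketch only illustrates the shape of the loop, not how living cells actually learn.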

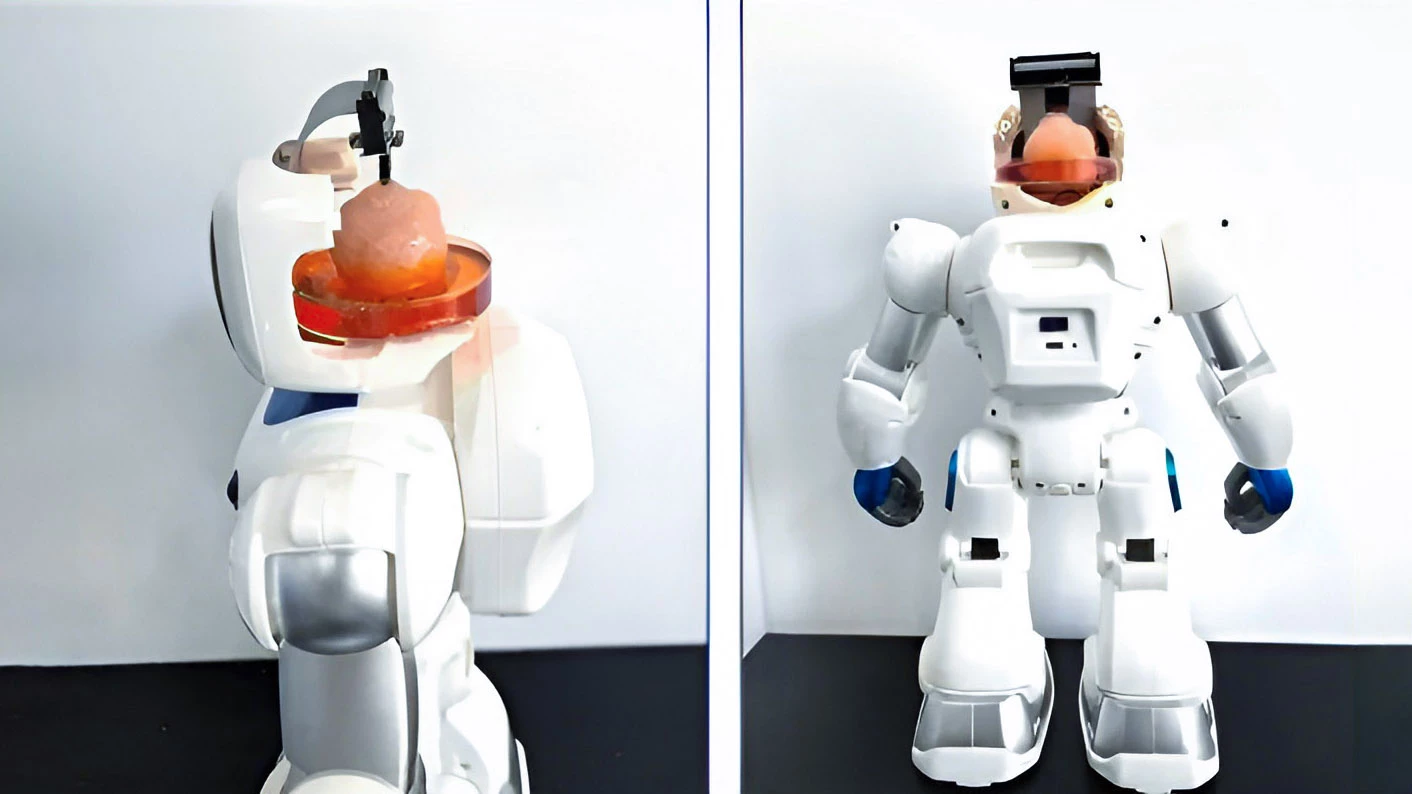

The Tianjin team specifically mentions robotics as an integration target, and provides the rather silly images above, as if deliberately trying to undermine the credibility of the work. A brain-on-a-chip biocomputer, says the team, can now learn to drive a robot, figuring out the controls and attempting tasks like avoiding obstacles, tracking targets, or learning to use arms and hands to grasp various objects.

Because the brain organoid is only able to 'see' the world through the electrical signals provided to it, it can theoretically train itself up on how to pilot its mini-gundam in a fully simulated environment, allowing it to get most of its falling and crashing out of the way without jeopardizing its fleshy intelligence engine.

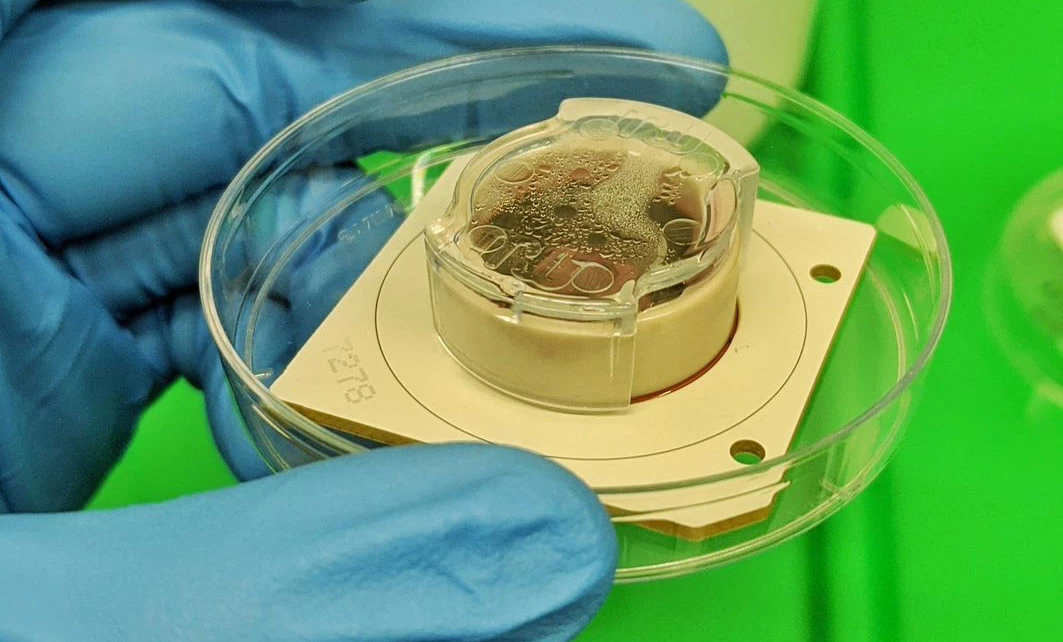

Now, to be crystal clear, the fully exposed, pink lollipop-style brain organoids in the robot images above are mockups – "demonstration diagrams of future application scenarios" – rather than brain-controlled prototypes. Perhaps the image below, from Cortical Labs, is a better representation of what these kinds of brains on chips will look like in the real world.

But either way, if you built a small robot with appropriate sensing and motor capabilities, we see no reason why human brain cells couldn't soon be in there trying to get the hang of driving it.

This is a phenomenal time for science and technology, with projects like Neuralink aiming to hook high-bandwidth computer interfaces straight into your brain, while projects like MetaBOC grow human brain cells into computers, and the surging AI industry attempts to overtake the best of biological intelligence with some strange facsimile built entirely in silicon.

Science and tech are forced to get philosophical as they slam up against the limits of our understanding: are dish-brains conscious? Are AIs conscious? Both may conceivably end up being indistinguishable from sentient beings at some point in the near future. What are the ethics once that happens? Are they different for biological and silicon-based intelligences?

"Let's say," says Kagan in our extensive interview, "that these systems do develop consciousness – in my opinion, very unlikely, but let's say it does happen. Then you need to decide, well, is it actually ethically right to test with them or not? Because we do test on conscious creatures. You know, we test on animals, which I think have a level of consciousness, without any worry ... We eat animals, many of us, with very little worry, but it's justifiable."

Frankly, I can hardly believe what I'm writing: that humanity is starting to take the physical building blocks of its own mind, and use them to build cyborg minds capable of intelligently controlling machines.

But this is life in 2024, as we accelerate full-throttle toward the mysterious technological singularity, the point where AI surpasses our own intelligence and starts developing things even faster than humans can. The point where technological progress – which is already happening at unprecedented speed – accelerates toward a vertical line, and we lose control of it altogether.

What a time to be alive – and not as a clump of cells wired to a chip in a dish. Well, as far as we know.

Source: Tianjin University