Born April 6th, 1986, Adcock is already a veteran technology entrepreneur at the relatively tender age of 37, having successfully sold employment website Vettery for US$110 million, then founded key eVTOL aircraft contender Archer and taken it public on the NYSE within four years at a valuation of $2.7 billion.

Now, he's taking a swing at the future of labor with humanoid robotics startup Figure, which launched in 2022, quickly raised $70 million and assembled a crack team of 60-odd robot wranglers and AI specialists with experience at companies like Boston Dynamics, Tesla, Google DeepMind and Apple's secretive Special Projects Group, where one not-so-secret secret was a self-driving car effort. The Figure 01 robot is already up and walking around, and preparing to make its public debut.

We spoke to Adcock last week, and published the first of our interview pieces yesterday, in which we discuss the future of human society and human rights in a world where human labor loses all its value, and whether Figure is getting involved with military projects.

Today's instalment is a little more down to Earth, covering some of the practicalities of building, training and deploying humanoids. What follows is an edited transcript.

Loz: So Figure. Humanoid general purpose robots. It looks like the sky's the limit here. When you've got people taking bets on when there's going to be more humanoids than humans... It looks like you can go as big as you want, if you can get these things working.

Brett Adcock: Yeah, for sure!

So were you keeping an eye on this space during your time at Archer?

When I left Archer, I took a little reset, and thought, like, what's the most impact I could have? And I think this humanoid thing is really real in a big way. I think we've really seen batteries and motors get much more power- and energy-dense than ever before. I think we've seen compute for AI systems get really good and I think we've seen locomotion controls for bipedal walking get really good.

So I'm pretty strongly convinced this is the right decade to make it work. And we have an enormous labor shortage happening here in the world. The humanoids can get in there and just do all the grunt work for humanity that we don't want to do. I think it could be a pretty useful technology.

So it's as simple as that, it's AI, plus what Atlas demonstrated in terms of walking and running and jumping and locomotion, plus batteries and motors. That's what made you think the time is right?

I think this is the right time to make this work. I think this is gonna happen. And similar to Archer, there were a bunch of players on the field. We came in like the new kid on the block. A really good, solid team, guys from Boston Dynamics, Apple's self-driving car program, Google DeepMind, Tesla. We're running really fast, and we're building a really good product.

The robot's walking around the lab every day, it's phenomenal. I think within the next 24 months or so, we'll see humanoid robots in real applications out in the world.

Figure is now 60 people!

Here are the backgrounds of the last few hires:

→ Google Deepmind (AI)

→ Dexterity (AI)

→ Boston Dynamics (Controls)

→ Joby Aviation (ME)

→ Tesla (EE Design)

→ Tesla (EE Integration)

→ UMich Ph.D. (Controls)

→ Berkeley Ph.D. (Controls)

— Brett Adcock (@adcock_brett) September 13, 2023

In this business, what's the split between hardware and software?

Longer term, it's definitely more software and AI. In the early days, there's a lot of hardware we need to design. One thing I've realized, which maybe contradicts a lot of conventional wisdom, is that you have to have really good hardware in robotics to have a good product.

I think people assume you can just go and buy the hardware and it'll be fine. It doesn't really work like that in real life. The hardware is extremely difficult to do really well with high reliability. So we design almost all of our hardware ourselves at this point. So right now it's a mix, half and half. But I think over time the software teams will outpace the hardware teams.

Over the last couple of years, it seems like there's a gold rush developing in humanoid robots, a flood of entrants to the field. Probably since Tesla got involved. When you look at the other humanoids on the market, what are you looking for? What's significant in a humanoid robot?

The first thing I look at is: are they commercial or R&D? Boston Dynamics, for example, is a research group. The second is, do they have a bipedal robot walking? Not every group has a walking robot. I think walking is extremely hard. I think it also validates that you have the right software and hardware systems.

I think the third thing is: do they have capable hands that can grab items? There's really no value to a humanoid if it just walks around. The value is really moving objects. This could be doing real work in a commercial setting, like moving bins and totes and packages, or it could be working in your home folding laundry or doing something else, but either way you really need to touch the world and interact with it.

Then there's having a good handle on the AI systems for robotics. And then there's things like, is there a decent enough size team and capital to support the endeavor? Those are kind of my five things, the yes-or-no questions I ask when I look at this market.

It's interesting that Hyundai, now the owners of Boston Dynamics, doesn't appear to be going after a commercial humanoid product.

They've commercialized Spot and Stretch, but yeah, Atlas is still in a research innovation group, not in a commercial group.

I guess it raises the question, how much value is there in the bipedal part of things? Obviously, humanoids can get up stairs and go to most of the spaces that humans can, but for most of the early use cases, they won't have to. They'll be on big, flat factory and warehouse floors, that sort of thing. You don't need humanoids there.

I guess it's about scaling out. These weird rolling, balancing things like Stretch might be better at those early jobs, but they won't scale out to handle all the different jobs humans can do.

Yeah, I don't know. I mean, we certainly think we can put humanoids next to humans all over the world. So I don't know what Boston Dynamics' view is. We're seeing a tremendous demand from clients to put humanoids into applications. And I think I would have to assume the demand is much higher than what you're seeing for things like quadrupeds.

So, use cases. It's gonna come down to use cases. We're probably gonna see them launch in what I call Planet Fitness-type gigs where they pick things up and put things down. Maybe that's unloading trucks, loading them back up, picking orders, putting them on pallets, that sort of stuff. But at what point do you move past the 'pick things up and put things down' kind of task?

Honestly, if we can just pick things up and put things down, that's a massive market, to move bins and boxes and packages. We think that could ship millions of robots throughout the world. There are obviously a lot of other applications in retail, medicine and other areas that would be a little bit different from that, but I think we can demonstrate those applications in the coming years.

I don't think we're really at a point where we have to wait five years to demonstrate it doing things other than packages. We can spend time on doing more dexterous solutions. Toyota just put a video out, showing their robots spreading peanut butter on toast, and doing more dexterous manipulation work that you might see in a consumer household.

So yeah, I think there's certainly technology here today to be able to demonstrate that work. But if you can't do it reliably, if it can't ship to your home and do the job well every day... I think that's where the real focus is for companies like Figure. And that's just gonna take time for us to do. Even moving boxes and bins, we're gonna start doing that this year and into next year, but it'll take us time to do that really reliably, 24/7, without any humans ever stepping in to fix things.

So demonstrating it'll work for one day is the first task, then for a week, and then for a month. So we have milestones we need to reach, and certainly the trajectory looks really fast. The humanoid space is on an exponential curve. A lot of the work that's been done over the last 10 years has been really research-driven. And we're seeing a lot of the work now move to commercially driven work. So you have us, Tesla, groups like Agility out there trying to make it happen commercially.

Training-wise, how do you teach these things to do a task?

One of the best ways we've found is through human demonstrations. It's really no different than what happens in your self-driving Tesla. They take video feeds of a human driving, and the system learns how to handle certain situations. Like, how to slow down in front of a stop sign, how to merge, things like that.

So there are many ways we can do training. We can do it synthetically through simulation, we can do it through human demonstrations. We can do it through the robot itself doing actions and learning from whether those actions went well or not. So, there are many different ways to do robot learning, but we do a lot of human demonstrations. And we're also trying to get robots out to do real application work so they can learn for themselves how to do things.

So when you say human demonstrations, is the robot just watching a human do things, or are they operating the robot in VR?

Teleoperating the robot and instructing it on how to pick up a bin, or box, or an object on the table. Doing that over and over again in different poses. And teaching the robot what success looks like, and what a bad outcome looks like. You're labeling that information. You're feeding that into a neural net and you're doing training like that. So that's been a really rich environment. Google's done a lot of work in this space, and Toyota Research Institute's done a lot of work there.

It's working for us really well. But nothing's gonna be better than having a fleet of robots out in the world learning. That's gonna be the best. Like, we're gonna have 10,000 robots out doing applications all day, and learning from those applications.
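Figure hasn't said how its models are built, so the following is only a rough illustration of the general idea Adcock describes: record teleoperated demonstrations, label each one as a good or bad outcome, and train a policy to imitate the good ones. As a deliberate simplification, the neural net is swapped for a nearest-neighbour lookup, and the `Demo` record and the `train` and `act` functions are hypothetical names, not anything from Figure, Google or Toyota Research Institute.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Demo:
    """One teleoperated demonstration (hypothetical record format)."""
    observation: tuple[float, ...]  # e.g. where the robot saw the bin
    action: tuple[float, ...]       # e.g. the grasp target the operator commanded
    success: bool                   # human label: good or bad outcome


def train(demos: list[Demo]) -> list[Demo]:
    """Stand-in for training: keep only demonstrations labeled successful."""
    return [d for d in demos if d.success]


def act(policy: list[Demo], observation: tuple[float, ...], k: int = 1) -> tuple[float, ...]:
    """Imitate: average the actions of the k successful demos closest to this observation."""
    if not policy:
        raise ValueError("no successful demonstrations to imitate")
    nearest = sorted(policy, key=lambda d: math.dist(d.observation, observation))[:k]
    n_dims = len(nearest[0].action)
    return tuple(sum(d.action[i] for d in nearest) / len(nearest) for i in range(n_dims))


demos = [
    Demo((0.0, 0.0), (0.0, 0.1), True),
    Demo((1.0, 0.0), (1.0, 0.1), True),
    Demo((0.5, 0.0), (9.0, 9.0), False),  # a bad outcome: excluded from training
]
policy = train(demos)
print(act(policy, (0.9, 0.0)))  # (1.0, 0.1)
```

A real system would presumably replace the lookup with a network trained on camera images and joint states, but the basic flow of demonstrate, label, filter and imitate would be similar.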

A swarm learning kind of situation.

Your kid learns how to do something after they fail like 100 times. They're like little reinforcement learning agents. But once our robot learns how to do stuff, every robot will know how to do it. We can share that with the fleet and have a fleet learning system. So it's really about trying to train the robot on what everything means, and how to do this stuff.

For meaning, we can use large language models, which we'll be doing. It's like, how do we semantically understand what's happening in the world? We can go through language. We can ask a chatbot, you know, how to clean up a Coke can if it's spilling. It knows what to do; it's great at giving step-by-step instructions. So those are things that help ground the robot in real-life scenarios and real-life understanding.

And then, you know, getting the robot to do applications in the real world so it can learn and perceive an indoor warehouse. We'll use that data to do learning on as well.

I imagine you're going to need to ask your early customers for some patience, if you're expecting the robots to learn by failure!

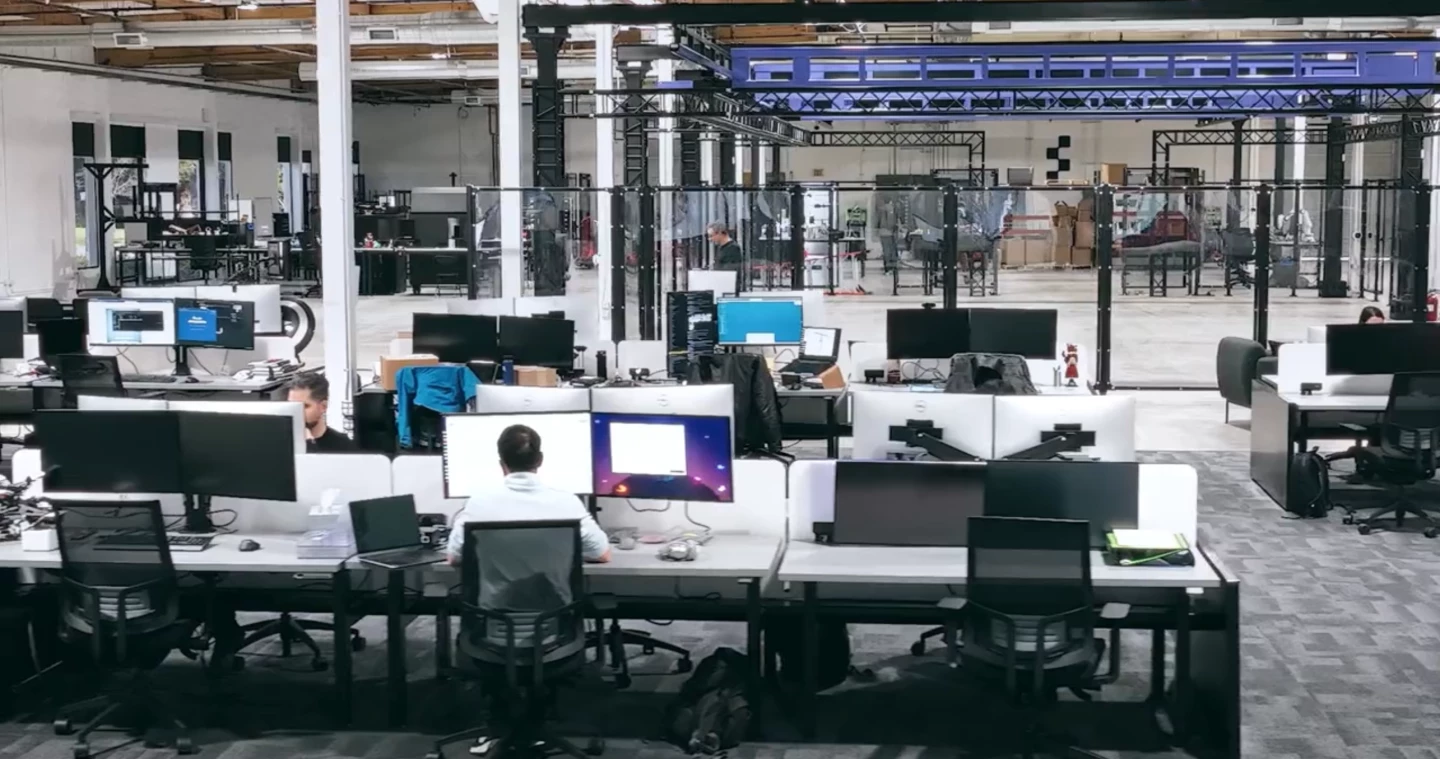

Yeah, I think we'll be failing a lot in the lab. We don't really want to be expecting to fail on our customer sites. But we want to be failing a lot in the lab. That's where we want to be pushing the boundaries, which means we'll see a lot of failure cases. We have a full warehouse built in the middle there. That's our training ground, here in our office where everybody's sitting, and where we can run through testing over and over again.

Like a live version of those blooper videos from Boston Dynamics, they're always a favorite.

They do bloopers like the best of them!

Do you see this as a system that you would train up to do one job, and then just have it do that for the remainder of its service life, or is it something that you would see as being repurposed?

We should be able to do software updates to the robot and have it learn how to do new things.

Just like its eyes flicker, "I know kung fu," that kind of thing?

My Tesla does a software update every two weeks. So do the apps on your phone. We'll just have a software update on the robot. It'll then know how to do palletization or unloading trucks, and the next year it'll learn how to, you know, jump off the back of a UPS truck, grab a package and drop it off.

Are all of the robots going to know all the things, or are there task-based downloads?

At some point it should know how to do everything without any downloads.

How big of a hard drive do you need to save all of human behavior?

We have the same level of compute and graphics that your self-driving car would have in the torso of the humanoid. We can also call out to the cloud whenever the robot needs more information. It can just query the cloud and access basically unlimited compute or storage. And then we'll have stuff locally on the robot that knows how to do things.

Have you set Figure up differently to Archer?

Yeah, so Figure... I'd like to not go public too early. I think that's gonna be really important. And I think ultimately structuring the board the right way would be really important here. So I have a really strong board. And finding the right partners along the way is really important. My investors that are with me now – and I was the largest investor in the Series A – are just phenomenal folks that really want to do this for the long haul. So having the right folks around the table is also going to be really important; they're in it for the next 10-20 years. They're not in it to cash out in the next year or two. This is gonna be a marathon, I think, a long time – and we need the right corporate structure to allow for that, basically.

Check out the first part of this interview here, and stay tuned for the third part in the coming days, covering Adcock's early days as a tech entrepreneur and the path that led him to Figure. Many thanks to Brett Adcock and Crystal Bentley for their assistance on this piece.

Source: Figure