Even if the unlikely six-month moratorium on AI development goes ahead, it seems GPT-4 has the capability for huge leaps forward if it just takes a good hard look at itself. Researchers have had GPT-4 critique its own work, and report a performance boost of around 30%.

"It’s not everyday that humans develop novel techniques to achieve state-of-the-art standards using decision-making processes once thought to be unique to human intelligence," wrote researchers Noah Shinn and Ashwin Gopinath. "But, that’s exactly what we did."

The "Reflexion" technique takes GPT-4's already-impressive ability to perform various tests, and introduces "a framework that allows AI agents to emulate human-like self-reflection and evaluate its performance." Effectively, it introduces extra steps in which GPT-4 designs tests to critique its own answers, looking for errors and missteps, then rewrites its solutions based on what it's found.
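The generate, test, critique and rewrite cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: `reflexion_loop`, `ReflexionResult` and the three `toy_*` functions are hypothetical names, the "model" is a hard-coded stand-in rather than a call to GPT-4, and the single test here is fixed, whereas in the technique as described the model designs its own tests.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ReflexionResult:
    solution: str
    passed: bool
    attempts: int
    reflections: List[str] = field(default_factory=list)


def reflexion_loop(
    task: str,
    generate: Callable[[str, List[str]], str],
    evaluate: Callable[[str], Tuple[bool, str]],
    reflect: Callable[[str, str, str], str],
    max_attempts: int = 3,
) -> ReflexionResult:
    """Draft a solution, test it, and retry with written self-critiques."""
    reflections: List[str] = []
    solution = ""
    for attempt in range(1, max_attempts + 1):
        # The generator sees every earlier critique, so it can avoid old mistakes.
        solution = generate(task, reflections)
        passed, feedback = evaluate(solution)
        if passed:
            return ReflexionResult(solution, True, attempt, reflections)
        # Turn the failure into a note that is fed into the next attempt.
        reflections.append(reflect(task, solution, feedback))
    return ReflexionResult(solution, False, max_attempts, reflections)


# --- Hypothetical stand-ins for the language model (assumptions, not real APIs) ---

def toy_generate(task: str, reflections: List[str]) -> str:
    # The first draft has a bug; once a reflection exists, return a fixed draft.
    if not reflections:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def toy_evaluate(solution: str) -> Tuple[bool, str]:
    # Run the candidate code against one fixed test case.
    namespace: dict = {}
    exec(solution, namespace)
    got = namespace["add"](2, 3)
    if got == 5:
        return True, "all tests passed"
    return False, f"add(2, 3) returned {got}, expected 5"


def toy_reflect(task: str, solution: str, feedback: str) -> str:
    return f"Previous attempt failed: {feedback}. Check the operator used."


result = reflexion_loop("Write add(a, b).", toy_generate, toy_evaluate, toy_reflect)
print(result.passed, result.attempts, result.reflections)
```

In this toy run the first draft fails its test, the failure is written up as a reflection, and the second draft passes. Swapping the three stand-ins for real model calls is where an actual system would differ.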

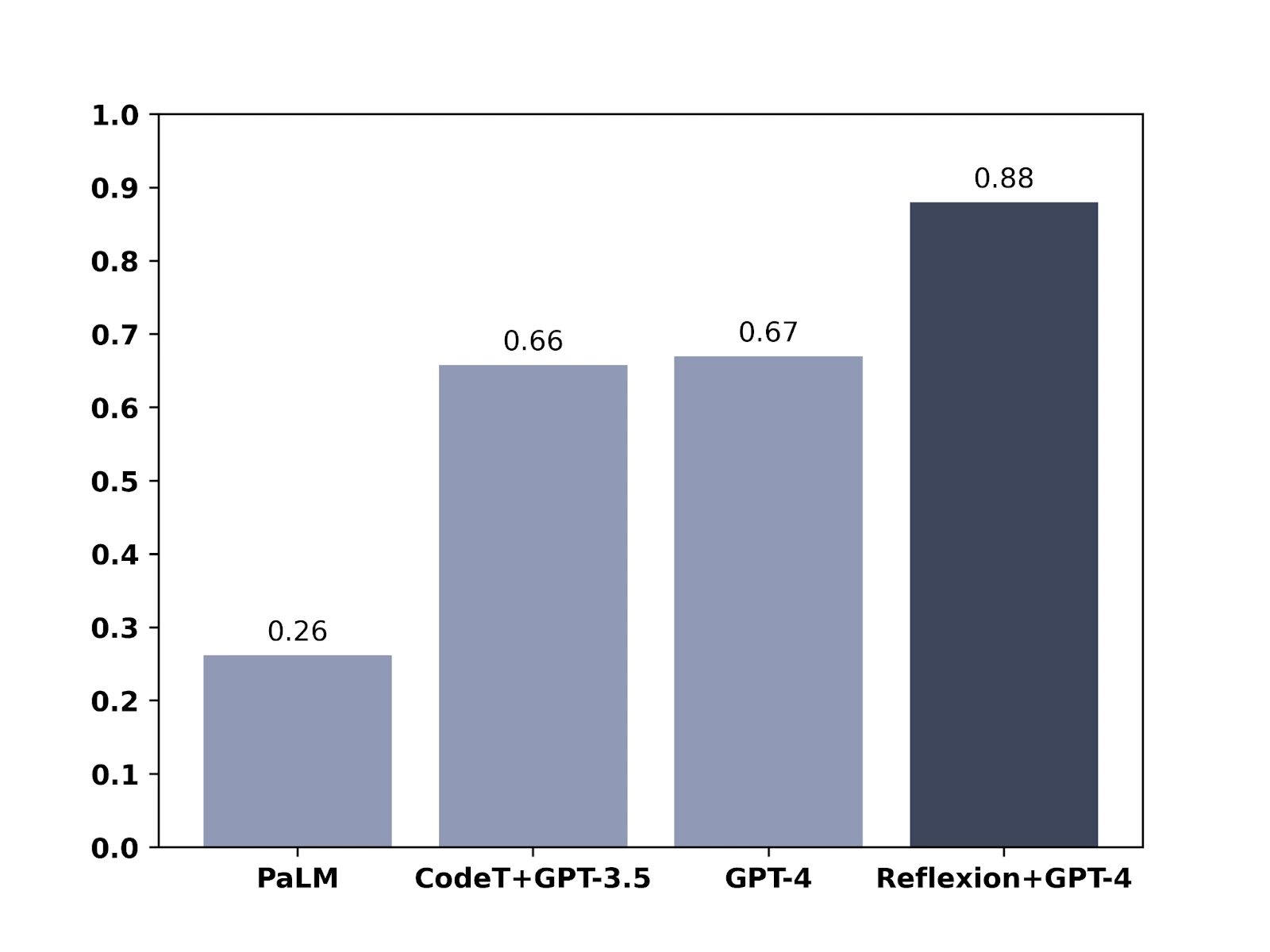

The team tried its technique on a few different benchmarks. In the HumanEval test, which consists of 164 Python programming problems the model has never seen, GPT-4 scored a record 67%, but with the Reflexion technique, its score jumped to a very impressive 88%.

In the Alfworld test, which challenges an AI's ability to make decisions and solve multi-step tasks by executing several different allowable actions in a variety of interactive environments, the Reflexion technique boosted GPT-4's performance from around 73% to a near-perfect 97%, failing on only 4 out of 134 tasks.

In another test called HotPotQA, the language model was given access to Wikipedia, and then given 100 out of a possible 13,000 question/answer pairs that "challenge agents to parse content and reason over several supporting documents." In this test, GPT-4 scored just 34% accuracy, but GPT-4 with Reflexion managed to do significantly better with 54%.
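For a sense of how these scores relate to a headline figure of roughly 30%, the short calculation below compares the gain in percentage points with the gain relative to the baseline score. It uses only the numbers quoted above. The helper name `relative_gain` is illustrative, and reading the headline figure as a relative improvement is an assumption, since the article does not say which benchmark or measure it refers to.

```python
def relative_gain(baseline: float, improved: float) -> float:
    """Improvement expressed as a percentage of the baseline score."""
    return 100 * (improved - baseline) / baseline


# (baseline %, with Reflexion %) as quoted in the article
scores = {
    "HumanEval": (67, 88),
    "Alfworld": (73, 97),
    "HotPotQA": (34, 54),
}

for name, (baseline, improved) in scores.items():
    points = improved - baseline
    print(f"{name}: +{points} points, {relative_gain(baseline, improved):.0f}% relative")

# Alfworld success rate implied by failing 4 of 134 tasks
print(f"Alfworld: {100 * (134 - 4) / 134:.1f}% of tasks solved")
```

On these figures, the HumanEval jump from 67% to 88% is 21 percentage points, or about 31% relative to the baseline, while the HotPotQA gain is smaller in points but larger in relative terms.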

> A Self-Reflecting LLM Agent
>
> Equips LLM-based agent w/
> - dynamic memory
> - a self-reflective LLM
> - a method for detecting hallucinations
>
> Challenge agent to learn from its own mistakes
> - Evaluate on knowledge-intensive tasks
> - Outperforms ReAct agents
>
> Paper: https://t.co/URsJWbkwmj pic.twitter.com/WfNcPQvIs6
>
> John Nay (@johnjnay), March 23, 2023

More and more often, the solution to AI problems appears to be more AI. In some ways, this feels a little like a generative adversarial network, in which two AIs hone each other's skills, one trying to generate images, for example, that can't be distinguished from "real" images, and the other trying to tell the fake ones from the real ones. But in this case, GPT is both the writer and the editor, working to improve its own output.

Very neat!

The paper is available on arXiv.

Source: Nano Thoughts via AI Explained