Thomas Edison once described genius as "one percent inspiration and 99 percent perspiration" – but AI systems like MetaGPT can already reduce that sweat to nearly nothing when it comes to coding and deploying simple apps and websites.

Large Language Models (LLMs) can "understand" natural language prompts with incredible insight and subtlety, and they can write highly effective code in several programming languages, too. But in their raw question-and-answer form – such as ChatGPT – they don't exactly just go away and get the job done for you.

That's why people are building a million apps around these AIs, constraining their vast abilities into single-function machines. An app built around a single type of task can do a lot of background work preparing and assisting the AI in its job, and trying to ensure a high quality output.

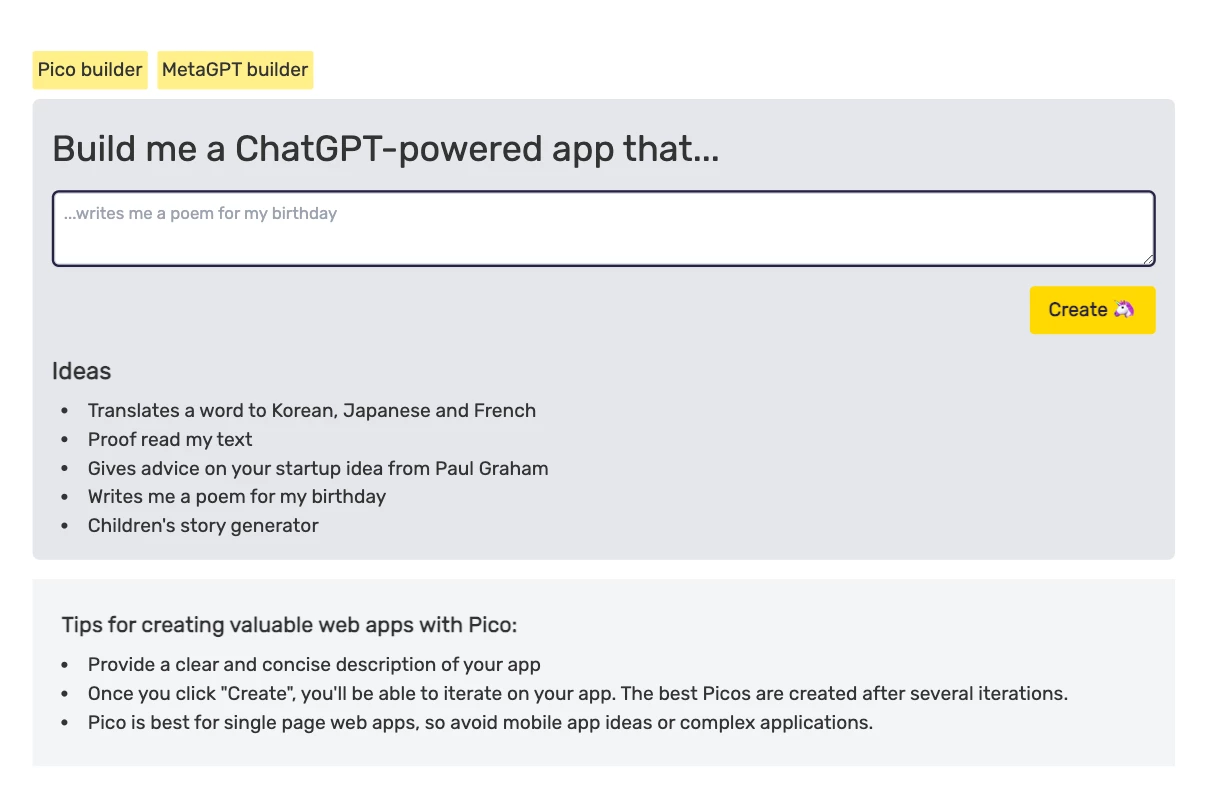

Pico's MetaGPT is such an app, designed to help you create your own functional web apps. You type in what you want the app to do, what inputs it will ask users for, and what outputs it'll give them. You can specify certain design parameters. It'll then attempt to build that app for you using a GPT model, and deploy it so you can test it right there in your browser.

You can then request changes, as if you're talking directly to your app designer, and it'll go away and rebuild the site, doing its best to incorporate those changes. These apps are not just built by GPT; they can also access GPT themselves, which is perfect for generating all kinds of responses. You can see the extremely simple process in this video:

At this point, it seems to work fairly decently – provided you keep things simple. I had it make me an app that asks for your favorite song, then insults you on the basis of your selection. It seems good at creating forms, and using them to prompt GPT. It appears to be capable of generating some dynamic graphics, as well as tables and whatnot.
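To give a rough idea of what "using a form to prompt GPT" can look like, here is a minimal Python sketch of the favorite-song app. It is an illustration only, not MetaGPT's actual code, which isn't public as far as this article goes. The names `build_prompt`, `handle_form` and `fake_model`, the `favorite_song` field, and the prompt wording are all assumptions made up for this example, and the model call is replaced by a stand-in function so the sketch runs offline.

```python
from typing import Callable


def build_prompt(favorite_song: str) -> str:
    """Turn one form field into a natural-language prompt (hypothetical template)."""
    song = favorite_song.strip()
    if not song:
        raise ValueError("favorite_song must not be empty")
    return (
        "A user says their favorite song is "
        f"\"{song}\". Write a short, playful insult based on that choice."
    )


def handle_form(form: dict[str, str], ask_model: Callable[[str], str]) -> str:
    """Read the submitted form, build the prompt, and return the model's reply."""
    prompt = build_prompt(form.get("favorite_song", ""))
    return ask_model(prompt)


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call (assumption), so the sketch needs no API key."""
    return f"[model reply to a {len(prompt)}-character prompt]"


if __name__ == "__main__":
    print(handle_form({"favorite_song": "Never Gonna Give You Up"}, fake_model))
```

In a real app, `fake_model` would be swapped for a call to whichever hosted model the app uses. The point is simply that the form's job is to collect a few fields and slot them into a prompt.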

But while it's unclear what its limits are, it's clear that it's pretty limited. And not just by the GPT model itself, which can often just make things up, but in terms of what it's actually capable of implementing. For example, I'm not sure that submitted forms are actually emailed anywhere, even if you ask for that, and single-page applications seem to be as much as it can handle right now.

And as soon as you try to make things much more complex, either by asking it to draw on outside information, or run a user through multiple pages, or complete multi-step processes, it starts crapping out – or worse, building you something that simply doesn't work, and telling you nothing. It takes some iterative feedback fairly well, but other requested changes can break things that worked in a previous version.

As with all LLM-based technologies, MetaGPT is a toddler in this regard, just a first step toward the LLM-derived anything-machines of tomorrow. But as always, it makes me get all philosophical. So indulge me for a moment...

Let's say within five or 10 years, these things are building apps and websites more or less as you'd want them, at much higher levels of complexity and speed, and that they're capable of interacting flawlessly with other online services to do all sorts of things. You'd be able to create near-instant, disposable, single-use applications to suit your purpose of the moment. From inspiration to results, no perspiration required.

In that kind of a world, what is the value of an idea? When anyone can see your app, copy it as quickly as they can describe it, and tweak it into their own personal version without needing to understand programming at all, does the value of an idea plunge to zero? Are IP holders really going to pick through the custom-written code for every individual clone looking for things they can sue the user for? Well, maybe – since they can presumably simply deploy their own tireless AIs to do the sleuthing and the litigation on their behalf.

But if it's not possible or practical to protect ideas from bulk ground-up replication, will innovators leave the field, since there's no longer much of an incentive to put in the time to develop and refine a market-leading idea? We're getting into some odd territory here, friends.

Head over to MetaGPT to have a crack at building a pilot app yourself.

Source: MetaGPT