Researchers at the University of Minnesota have done away with all that tedious joystick work by developing a mind-controlled quadcopter. It may seem like the top item on next year’s Christmas list, but it also serves a very practical purpose. Using a skullcap fitted with a Brain Computer Interface (BCI), the University's College of Science and Engineering hopes to develop ways for people suffering from paralysis or neurodegenerative diseases to employ thought to control wheelchairs and other devices.

The aim of the Minnesota team, led by biomedical engineering professor Bin He, is to develop thought-control devices that work reliably at high speed without the need for surgical implantation. This means extensive real-world testing. Though spectacular, flying is actually a very simple activity: it takes place in a three-dimensional environment without the complications of obstacles and terrain that a ground vehicle encounters. That’s one of the reasons why it was possible to make a plane that flies on autopilot decades before a self-driving car was even considered. So for developing mind-controlled devices, something like a quadcopter is an inexpensive option because it is relatively easy to control the variables of the experiment.

The quadcopter used was an AR Drone 1.0 built by Parrot SA of Paris, France. It is configured to fly with forward motion pre-set and the operator is able to use mind control to make it go left or right or up and down. A video camera mounted on the front provides a field of view pointing directly forward, an arrangement designed to promote a sense of embodiment in the operator and enhance feedback.

The key feature of the mind-controlled quadcopter was the non-invasive BCI skullcap. Invasive BCIs are used for controlling robot limbs and have shown some success, but embedding them is a major surgical undertaking with risks of infection and rejection.

Non-invasive versions can avoid these problems, and we've already seen projects that use this approach for controlling wheelchairs and robotic appendages.

Even if the ultimate goal is an implanted interface, a non-invasive BCI can help in the process by allowing the patient to become familiar with a BCI before the procedure, especially for a progressive neurodegenerative disorder, such as amyotrophic lateral sclerosis, where early implantation isn’t warranted.

The skullcap BCI is based on electroencephalography (EEG). Inside the cap are 64 electrodes that record tiny currents of electrical activity in the brain. These are usually very complex and chaotic, which is why you can’t strap someone to an EEG machine and read their mind, but brain activity in the motor centers of the cerebral cortex can be more readily identified. The system uses closed-loop sensing, processing and actuation. The cap picks up the signals, which are conveyed to a computer for processing, and the output takes the form of commands sent to the quadcopter’s control system over WiFi.
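For readers who think in code, the sense, process and actuate cycle can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the article does not describe the team's software, so the function names, the two-signal input, the crude mean-power measure and the threshold are all hypothetical stand-ins, and the WiFi link is replaced by a plain callback.

```python
def mean_power(window):
    """Average squared amplitude of one window of samples (a crude activity measure)."""
    return sum(x * x for x in window) / len(window)


def decode(left_hand_signal, right_hand_signal, threshold):
    """Processing step: report which of the two signals exceeds the threshold.

    Hypothetical simplification; a real EEG decoder would use filtered,
    calibrated features rather than raw mean power.
    """
    return (
        mean_power(left_hand_signal) > threshold,
        mean_power(right_hand_signal) > threshold,
    )


def closed_loop(frames, threshold, send):
    """Run sense -> process -> actuate once per frame."""
    for left_hand_signal, right_hand_signal in frames:  # sensing (stubbed with lists)
        state = decode(left_hand_signal, right_hand_signal, threshold)  # processing
        send(state)  # actuation (stand-in for the wireless command link)


# Usage with made-up numbers: the second frame carries a strong first signal.
log = []
frames = [
    ([0.1, -0.1, 0.1], [0.1, 0.1, -0.1]),
    ([2.0, -2.0, 2.0], [0.1, 0.1, -0.1]),
]
closed_loop(frames, threshold=1.0, send=log.append)
# log is now [(False, False), (True, False)]
```

Passing `send` in as a callback keeps the loop independent of how commands actually reach the aircraft, which is the part the article says happens over WiFi.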

"It’s completely noninvasive," says Karl LaFleur, a senior biomedical engineering student involved in the project. "Nobody has to have a chip implanted in their brain to pick up the neuronal activity."

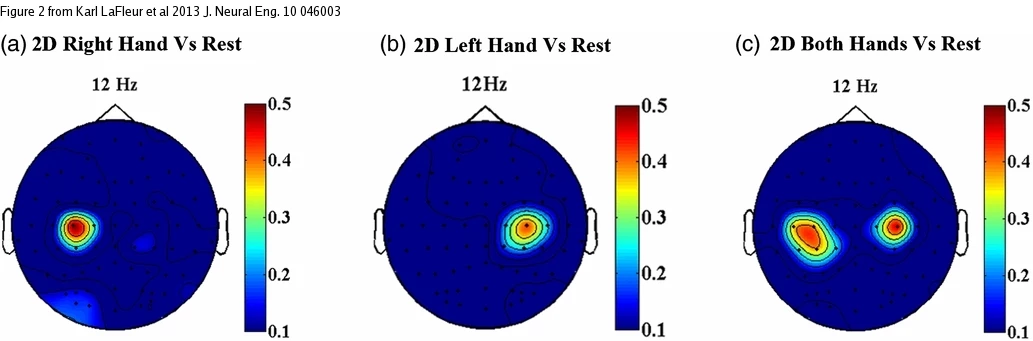

The experiment used five subjects – three women and two men in their twenties. Other subjects manipulated the quadcopter using a conventional keyboard to act as a control group. The test subjects were trained to control the quadcopter by imagining opening or closing their fists. Imagining making a left-hand fist causes the brain to fire activity in the area of the motor cortex controlling the left hand; the system detects this and tells the quadcopter to turn left. Imagining a right-hand fist makes it turn right, and imagining making both fists makes it go up and then down again.
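The mapping from imagined fists to flight commands described above is simple enough to write down. The following Python sketch is an assumption-laden illustration, not the team's code: `command_for` and its command strings are hypothetical names, and the "then down again" part of the both-fists behaviour is not modelled because the article does not say what triggers the descent.

```python
def command_for(left_fist: bool, right_fist: bool) -> str:
    """Map detected imagined-fist states to a steering command.

    Follows the article's description: left fist turns left, right fist
    turns right, both fists climb. "none" (no steering input) is an
    assumption added for the case where neither fist is imagined.
    """
    if left_fist and right_fist:
        return "up"
    if left_fist:
        return "left"
    if right_fist:
        return "right"
    return "none"


# Usage: forward motion is pre-set, so only steering is decided here.
for state in [(True, False), (False, True), (True, True), (False, False)]:
    print(state, "->", command_for(*state))
```

Checking the both-fists case first matters: otherwise an operator imagining both fists would be read as a left turn.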

This is something of a first because it required precise mapping of the brain. "We were the first to use both functional MRI (Magnetic resonance imaging) and EEG imaging to map where in the brain neurons are activated when you imagine movements," LaFleur says. "So now we know where the signals will come from."

The training involved working with simulators that resembled an old Pong game from the 1970s. The subjects had to learn to move a cursor on a screen left and right, then to move it up and down as well. Once they’d mastered this, they were set to controlling a simulated quadcopter in a virtual environment.

In the final experiment, a standard-size university gymnasium was kitted out with two large balloon rings suspended from the ceiling. The object of the exercise was to fly the quadcopter through the rings.

The operators faced away from the flying area, so the view from the quadcopter’s camera was their only feedback on performance. The results showed a success rate of over 90 percent in navigating the course once the system had been calibrated and the subjects had familiarized themselves with the layout.

"Our study shows that for the first time, humans are able to control the flight of flying robots using just their thoughts sensed from a non-invasive skull cap," says Bin He. "It works as good as invasive techniques used in the past.

"We envision that they’ll use this technology to control wheelchairs, artificial limbs or other devices. Our next step is to use the mapping and engineering technology we've developed to help disabled patients interact with the world. It may even help patients with conditions like autism or Alzheimer’s disease or help stroke victims recover. We’re now studying some stroke patients to see if it’ll help rewire brain circuits to bypass damaged areas."

The findings of the team were published in the Journal of Neural Engineering.

Source: University of Minnesota via BBC