Researchers at Meta have developed a wristband that translates your hand gestures into commands to interact with a computer, including moving a cursor, and even transcribing your handwriting in the air into text. It could make today's personal devices a lot more accessible to people with reduced mobility or muscle weakness, and even unlock new ways for people to control their gadgets effortlessly.

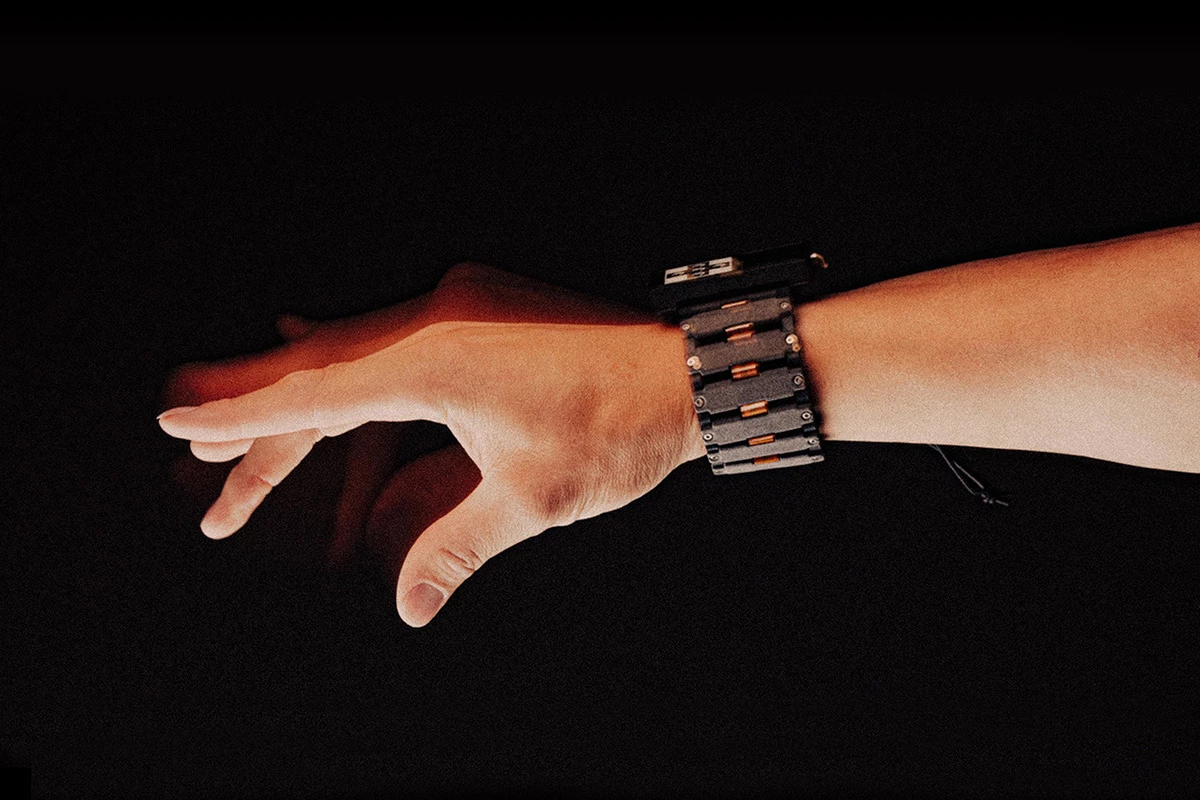

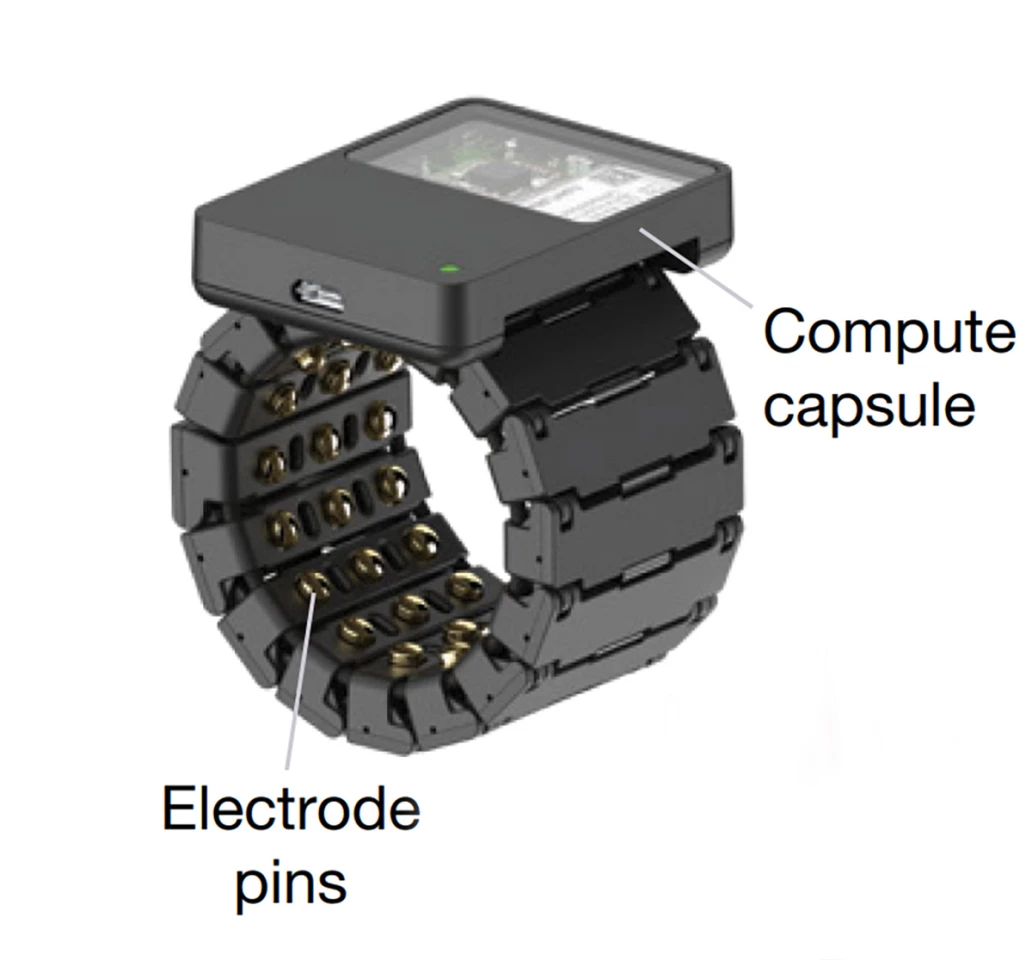

In a paper published in Nature this week, the Reality Labs team described its sEMG-RD (surface electromyography research device), which uses sensors to translate electrical motor nerve signals that travel through the wrist to the hand into digital commands that you can use to control a connected device.

Those signals are essentially your brain telling your hand to perform actions you've decided to carry out, so you can think of them as intentional instructions.

Meta began working on this years ago. In 2021, a team including Thomas Reardon, who joined Reality Labs in 2019 as its director of neuromotor interfaces, prototyped an electromyography-based gesture control device. At that time, Meta was keen to develop this tech to enhance interactions in augmented reality experiences, and initially aimed to enable simple interactions like replicating a single mouse click. Reardon led the work documented in this paper as well.

There have also been many other attempts to build similar systems, including a 2023 device that used barometric-pressure sensors to recognize 10 different hand gestures, and the Mudra Band, which claims to use Surface Nerve Conductance to control your Apple Watch with simple gestures.

The sEMG-RD tech goes quite a bit further. You can not only control an onscreen cursor in a one-dimensional mode (like a laser pointer), but also navigate through an interface and select items using finger pinches, thumb swipes, and thumb taps. You can even enter text by mimicking handwriting at a decent 20.9 words per minute. That last one is especially neat, considering that typing on a phone keyboard averages about 36 words a minute.

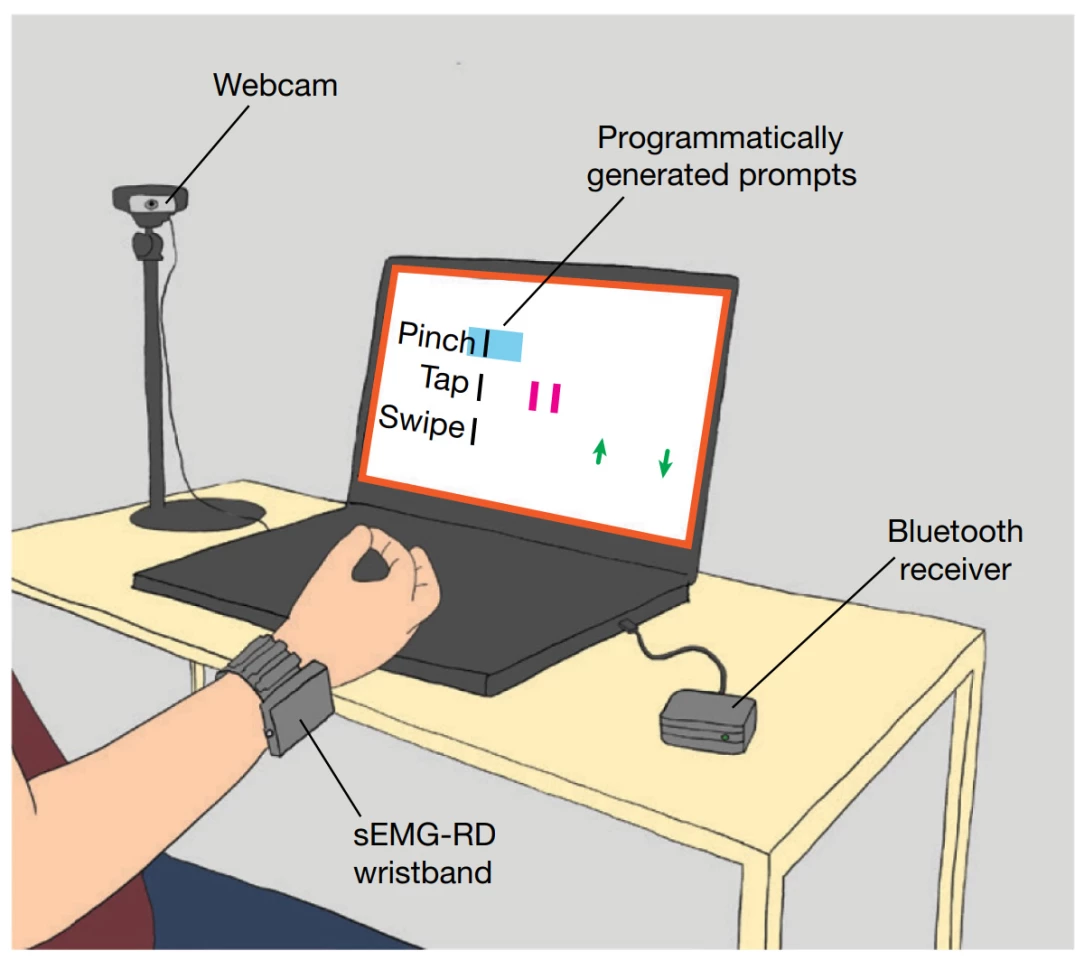

What's more, this system doesn't need to be calibrated for each individual before they use it – although it can be fine-tuned for greater personalization. The team developed a method to capture training data from study participants at scale, and ran it through a neural network to transform raw signals into commands accurately, regardless of who was using the wearable.

The researchers used training data from thousands of participants, piped through their deep learning system, to create generic decoding models that accurately interpret input across different people. That removes the need to tune sEMG-RD to each individual, so it can be deployed widely, and people can start using the wearable interface as quickly as they can get the hang of it, much as people without disabilities can use a computer mouse without first calibrating it to the way they move their hands.

The team believes this tech could be developed further to directly detect the intended force of a gesture, and find use in more nuanced controls for cameras and joysticks. It could also reduce the already small physical effort required to operate phones and other digital devices. Perhaps even more exciting, though, is the possibility of exploring novel interactions we don't yet have names for, by leveraging different muscle synergies or sending new signals for the wristband to interpret.

Source: Meta via Scimex