Anthropic's Claude 3.5 Sonnet has introduced a feature called Artifacts: an interactive preview window beside the chat window that serves as a collaborative workspace.

What does that mean?

You're no longer restricted to plain text going back and forth. You can attach files and images for Claude to interpret and build on. You can also request SVG images (Scalable Vector Graphics, basically web-friendly pictures), which can go straight into code or be turned into slideshows, or into a presentation for your boss on Monday morning. You can even make playable games to entertain yourself or your kids on a rainy day. Your imagination is the limit.

I tried it myself, and within 10 minutes, without writing a single line of code, I had a vertically scrolling jumping game. The main character resembled The Sky Knight from the 1982 arcade game Joust, happily hopping upward from branch to branch. Two minutes later, I'd broken the code trying to add a timer and run out of free messages, and Claude prompted me to pay for a subscription.

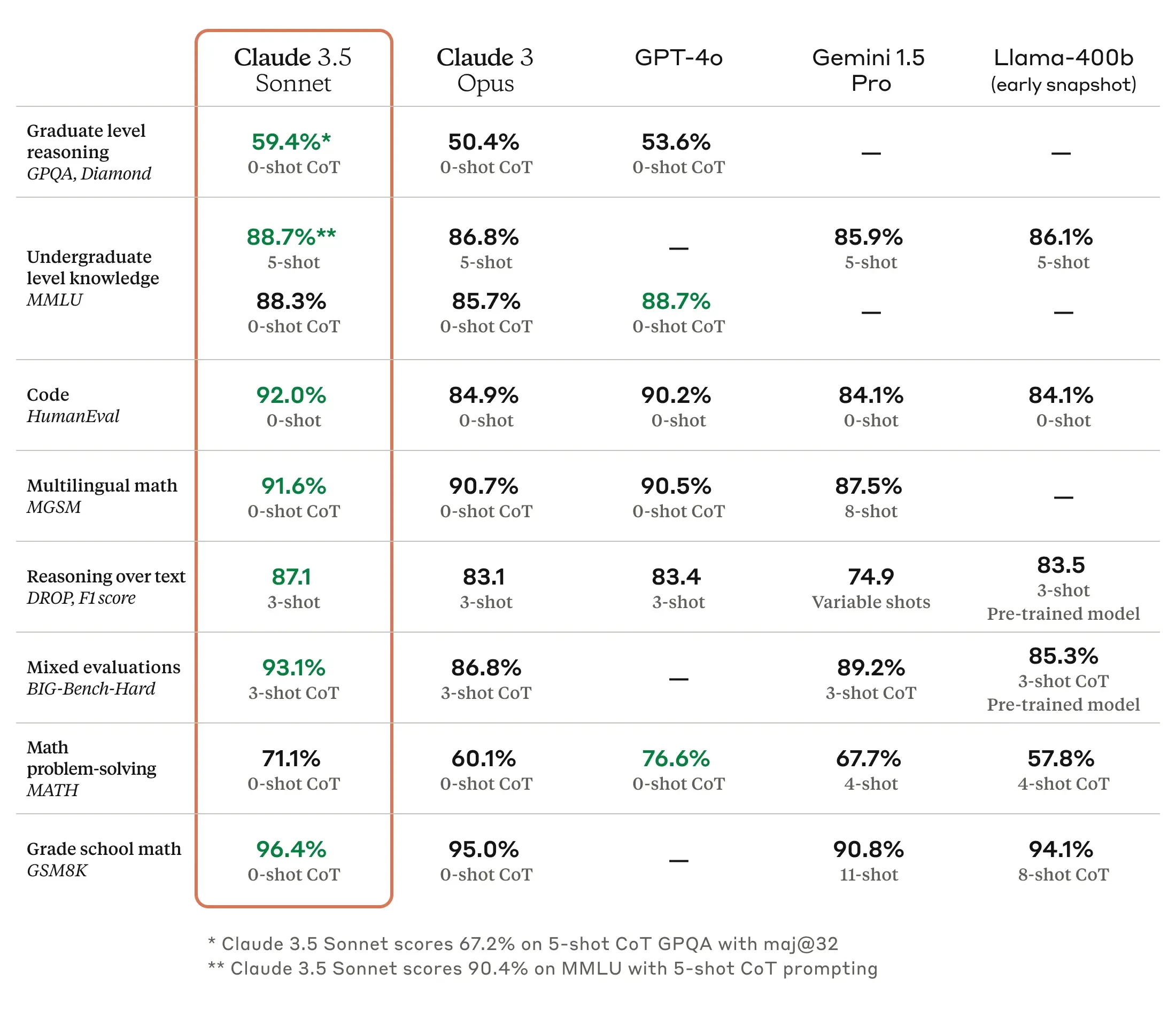

Games and code are just a fraction of what Anthropic's latest chat AI release can do. According to Anthropic, it outperforms the company's previous top model, Claude 3 Opus, while running at twice its speed. Anthropic's benchmark figures also show it beating GPT-4o, Gemini 1.5 Pro, and Meta's Llama 3 on nearly every test.

It's also cheaper to run. Sonnet costs US$3 per million input tokens and $15 per million output tokens, with a 200,000-token context window. For comparison, GPT-4o costs $5 per million input tokens and $15 per million output tokens.
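To see what those per-million prices mean in practice, here is a minimal Python sketch that estimates the bill for a workload. It assumes only the list prices quoted above; the dictionary keys are illustrative labels rather than official API model identifiers, and the workload size is hypothetical.

```python
# List prices quoted above, in US dollars per million tokens.
# Keys are illustrative labels, not official API model IDs.
PRICES = {
    "claude-3.5-sonnet": {"input": 3.00, "output": 15.00},
    "gpt-4o": {"input": 5.00, "output": 15.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in dollars for the given token counts."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 2 million tokens sent, 500,000 tokens generated.
    for model in PRICES:
        print(f"{model}: ${estimate_cost(model, 2_000_000, 500_000):.2f}")
```

Because both models charge the same for output, the savings come entirely from input tokens, so workloads that send a lot of text (long documents, big code files) and get short answers back benefit most.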

For scale, Shakespeare's complete works run to about 900,000 words, or roughly 1.2 million tokens, which is about six times what fits in Sonnet's context window.

What is a token?

A token is the basic unit of text that a large language model (LLM) reads and writes. It could be a single character, like the letter "X", or an entire word, like "the", though a token is generally about four characters long, depending on the LLM. The context window is how many tokens the LLM can take into account at once, including your previous messages and its own replies, when formulating its next response. Two hundred thousand tokens comes out to about 150,000 words, or 500 pages of text.
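The rules of thumb above (about four characters per token, and 200,000 tokens for roughly 150,000 words, i.e. about 0.75 words per token) can be turned into a quick back-of-the-envelope estimator. This Python sketch is a heuristic only, not a real tokenizer: actual token counts depend on each model's tokenizer, and the function names are hypothetical.

```python
import math

# Rules of thumb from the text; real counts vary by model and tokenizer.
CHARS_PER_TOKEN = 4
WORDS_PER_TOKEN = 0.75     # 200,000 tokens is roughly 150,000 words
CONTEXT_WINDOW = 200_000   # Claude 3.5 Sonnet's context window, in tokens


def estimate_tokens_from_chars(char_count: int) -> int:
    """Rough token estimate from a character count."""
    return math.ceil(char_count / CHARS_PER_TOKEN)


def estimate_tokens_from_words(word_count: int) -> int:
    """Rough token estimate from a word count."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def fits_in_context(token_count: int, window: int = CONTEXT_WINDOW) -> bool:
    """True if the estimated tokens fit in a single context window."""
    return token_count <= window


def windows_needed(token_count: int, window: int = CONTEXT_WINDOW) -> int:
    """How many full context windows the text would need to be split across."""
    return math.ceil(token_count / window)


if __name__ == "__main__":
    shakespeare = estimate_tokens_from_words(900_000)
    print(f"Shakespeare: ~{shakespeare:,} tokens, "
          f"fits in one window: {fits_in_context(shakespeare)}, "
          f"windows needed: {windows_needed(shakespeare)}")
```

Run against the Shakespeare figure, the estimate lands at about 1.2 million tokens, so the complete works would have to be split across six full context windows.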

Source: Anthropic