OpenAI's latest game-changing AI release has dropped. The new o1 model, now available in ChatGPT, 'thinks' before it responds – and it's starting to crush both previous models and Ph.D-holding humans at solving expert-level problems.

It kinda felt like OpenAI was giving us a little room to breathe there, didn't it? I mean, GPT-4o and its (scandalous, but still largely unavailable) advanced voice mode were announced back in May, but those really felt like minor advances. The Sora text-to-video generator really spun people's heads around back in February, but that's still not available to the public, even though several Chinese competitors now appear to be delivering similar quality.

There's been all sorts of speculation around what GPT-5 might look like, when it'll launch, and whether it's already achieved some definition of Artificial General Intelligence (AGI) – but last night, OpenAI took things in a different direction, splitting a new model off from the GPT lineage.

Introducing o1: The thinker

The new model is called o1. It's already enabled in 100% of ChatGPT user accounts as an option you can call on. And while GPT-4o (omni) remains the all-round workhorse model best suited to most tasks, o1 is a specialist of sorts.

Its specialty is complex reasoning. And the superpower that separates it from the GPT models is... that it stops and 'thinks' instead of starting to answer you straight away.

It's often tempting to anthropomorphize language models like this; they're not human, but since they're trained on so much of humanity, there are often spooky parallels. In this case, o1 achieves a vastly higher performance on difficult tasks than previous models, essentially by sketching out all the things it's got to work with, breaking a large job down into smaller tasks, recursively checking over its work and challenging its own assumptions – all behind the scenes, and before it starts to give you an answer.

So while GPT-4o typically gets straight down to writing code, or generating images, or writing an answer, o1 might sit and ponder the question for a while, planning out its path of attack. It's not a long while – maybe 10-20 seconds – but it seems to make all the difference when it comes to the kinds of hard problems these LLMs have typically struggled with.

Indeed, the longer it thinks, the better it seems to get, and while the products released today will ponder things for a matter of seconds, OpenAI says it'll probably make sense to release future versions that'll spend hours, days or even weeks chewing their way carefully through massive, complex problems, producing lots of solutions, testing them against one another and finally giving you an answer.

o1's current limitations

As it stands, o1 is now available in "Preview" and "mini" models. They can write and execute code, but these are beta previews, and they're missing a couple of key components:

- You can't upload files to them

- They don't appear to have access to GPT-4o's memory, or your personal custom system prompts, so they don't know anything about you

- They can't browse the web for new information past their training cutoff of October 2023.

In general writing tasks, and anything that needs file uploads or web access, GPT-4o is still going to be much more useful – but on the other hand, it's possible to get GPT-4o to assemble a bunch of useful assets and do some pre-analysis, then package up the problem in a prompt for its smarter, but more isolated, new friend.

How good is the o1 model?

These launches are always accompanied by lots of graphs, so let's see a few – starting with the new model's performance on OpenAI's own coding test for research engineers. Given the chance to try the problems 128 times and submit the best of its answers, both the mini and preview models scored 100%.

Benjamin De Kraker 🏴☠️ (@BenjaminDEKR), September 12, 2024:

If OpenAI's o1 can pass OpenAI's research engineer hiring interview for coding -- 90% to 100% rate...

......then why would they continue to hire actual human engineers for this position?

Every company is about to ask this question. pic.twitter.com/NIIn80AW6f

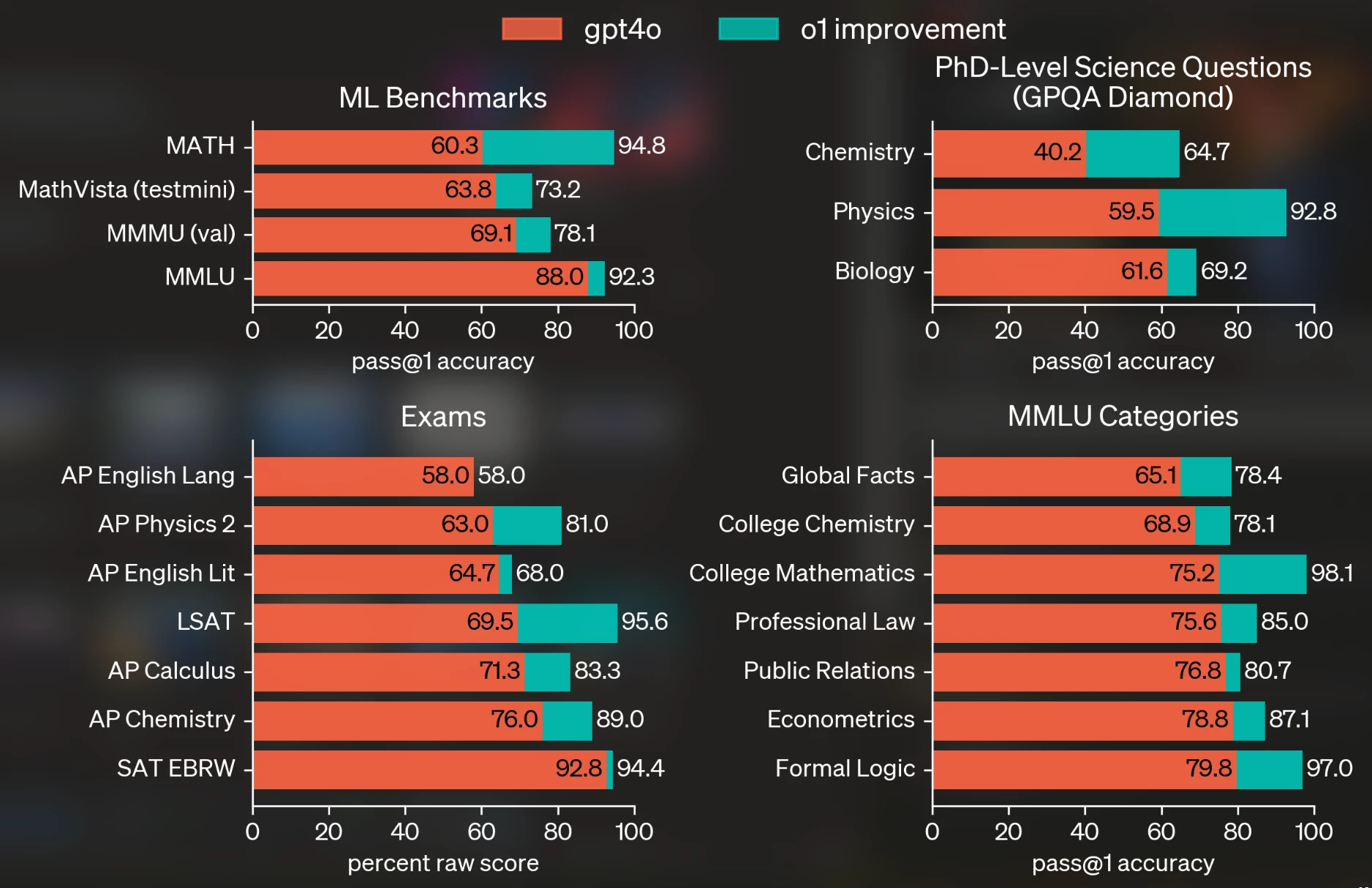

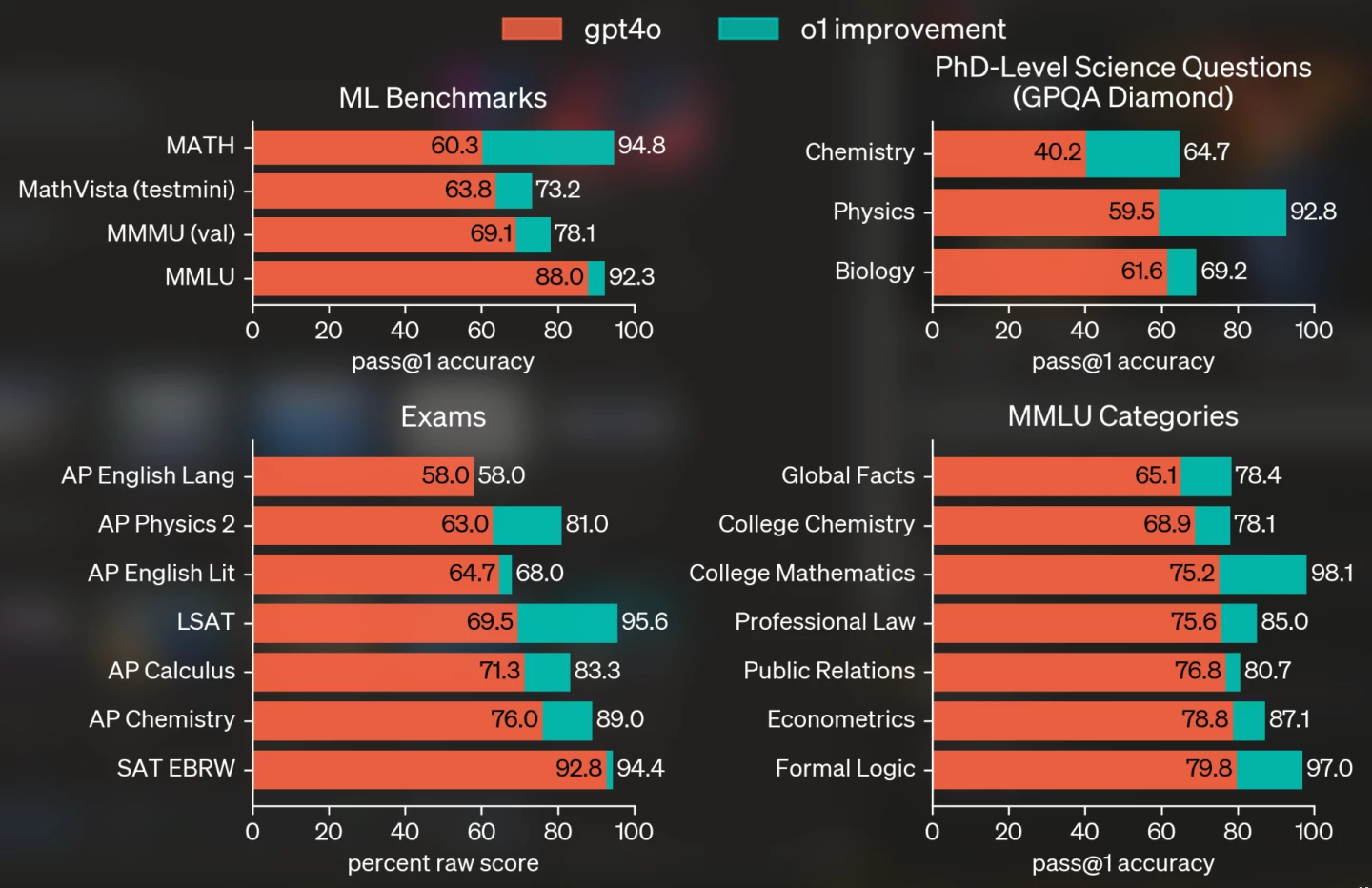

Then there are Ph.D expert-level questions in Biology, Chemistry and Physics. o1 wiped the floor with doctorate-level physicists in their own domain, who were allowed to take these tests with open books, and while it couldn't quite squeak past biologists and chemists, it's hot on their heels. Its overall score was the best ever seen from an AI model.

Steven Edgar (@BioSteve), September 12, 2024:

OpenAI's o1 shows remarkable scientific performance; with PhD-equivalent biology performance

A caveat being that the physics score boosting overall performance, and chemistry not yet at PhD level. Wish I knew the Sonnet performance for each domain! https://t.co/fOpfl6dGWI pic.twitter.com/EYgNK7d3Lg

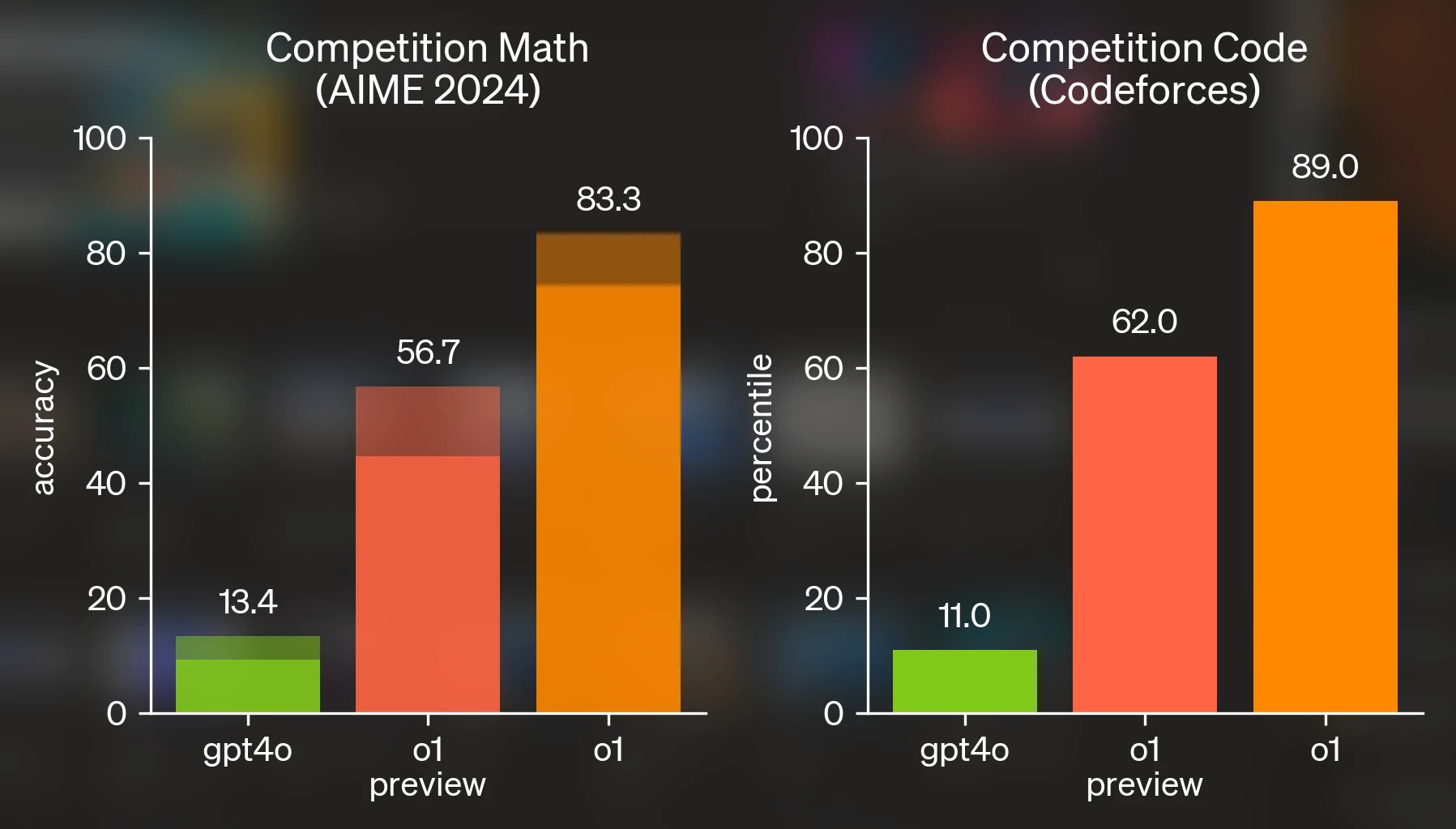

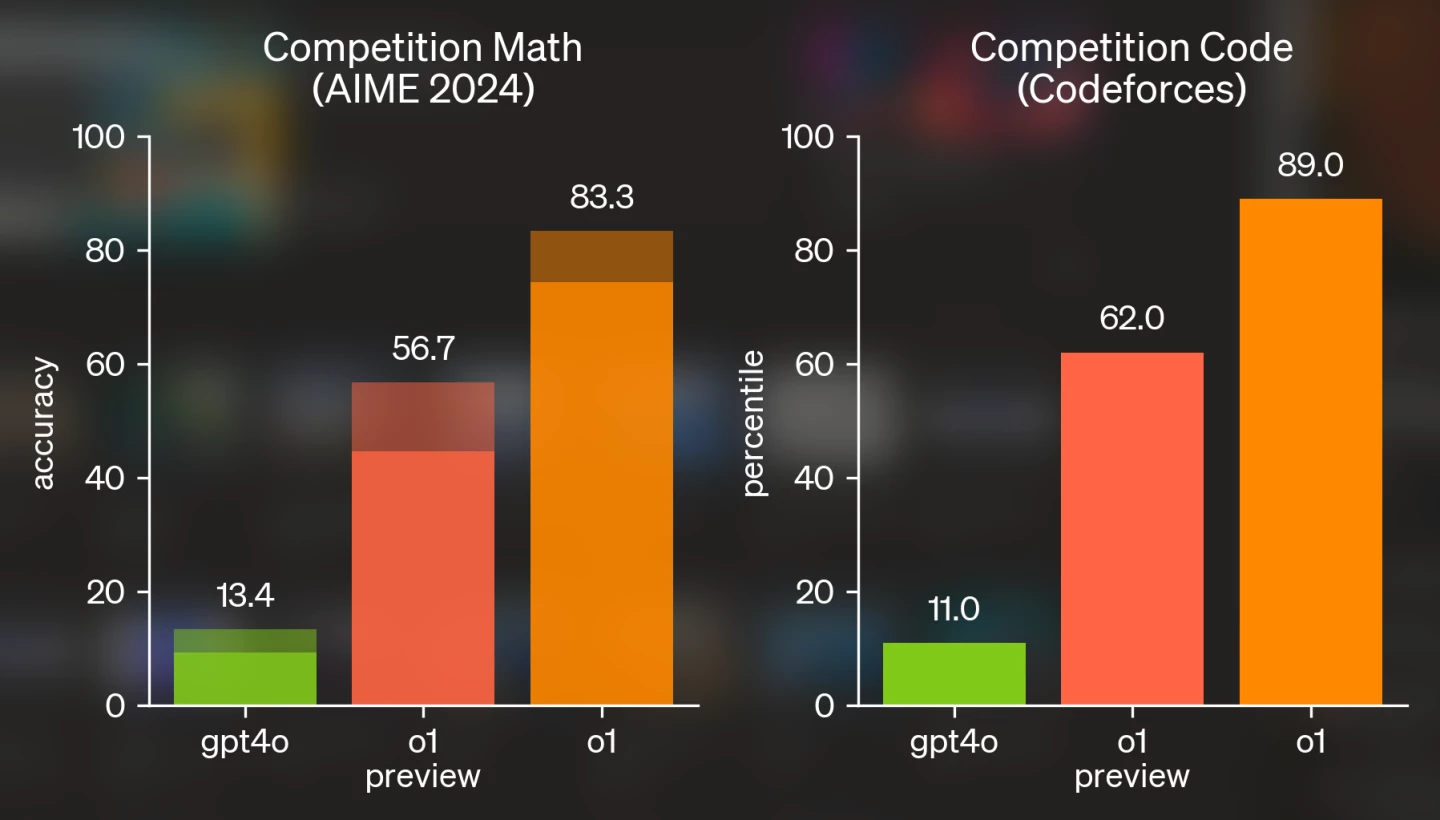

Then there's math – if you've spent much time with other GPT models, you'll be aware their math abilities have left a lot to be desired. The o1 model is a substantial leap forward here, as demonstrated by its performance in the 2024 AIME high-school math olympiad – a three-hour competition-grade math challenge that's only open to the best of the best American math students.

AI models were given 64 shots at the test, from which the most common answers were chosen by consensus. The GPT-4o model embarrassed itself by getting just 13.4% correct. Full-fat o1, given plenty of thinking time, scored 83.3%, placing it top 500 in the country. And its score at a single shot wasn't far behind, at over 70%.
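For the curious, the "consensus" scoring described above boils down to a majority vote: sample many answers to each problem, keep the most common one, and grade only that. Here's a minimal Python sketch of the idea. The function names and the sample answers are made up for illustration; this is not OpenAI's evaluation code, and the real setup may differ in its details.

```python
from collections import Counter


def consensus_answer(samples):
    """Return the most common answer among the sampled attempts.

    Ties go to whichever answer appeared first in the list.
    """
    if not samples:
        raise ValueError("need at least one sampled answer")
    return Counter(samples).most_common(1)[0][0]


def consensus_score(samples_per_problem, answer_key):
    """Fraction of problems where the majority-vote answer matches the key."""
    correct = sum(
        consensus_answer(samples) == key
        for samples, key in zip(samples_per_problem, answer_key)
    )
    return correct / len(answer_key)


# Hypothetical data: three problems with a handful of sampled answers each
# (the article describes 64 samples per problem; fewer are used here for brevity).
samples_per_problem = [
    ["204", "204", "113", "204"],       # majority is right
    ["25", "33", "33", "25", "33"],     # majority is right
    ["73", "10", "10"],                 # majority is wrong
]
answer_key = ["204", "33", "73"]

print(consensus_score(samples_per_problem, answer_key))
```

The takeaway is that a model doesn't need to be right on every attempt to score well this way; it just needs the right answer to come up more often than any single wrong one.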

This leap in performance played out very similarly in the Codeforces competition-grade programming challenge; GPT-4o placed in the 11th percentile of finishers, o1 placed in the 89th percentile. Yeah, it's a beast.

According to OpenAI's system card, other areas in which o1 makes significant progress include:

- It's better at recognizing and refusing jailbreak attempts, although these do still get through sometimes

- It's nearly 100% effective at refusing to regurgitate training data

- It displays less bias in terms of age, race and gender

- It's more self-aware, and thus more able to plan and think around its own weaknesses

- It's a little better at persuading humans to change their mind – a task at which only 18.2% of humans can beat it

- It's significantly more manipulative, at least when it comes to manipulating GPT-4o

- It's a decent leap better at translating between languages

On the other hand, it's still untrustworthy, and often a flat-out BS artist.

OpenAI says it performs better than GPT-4o on tests specifically designed to make the models 'hallucinate,' or simply make up convincing-sounding answers that are plain wrong – but researchers admit that anecdotally, users are reporting that the new o1 models are actually more prone to BSing their way through things they don't actually know than the older models, in practical day-to-day use.

Indeed, the researchers show examples where the o1 model, unable to access the Web, goes right ahead and dreams up a bunch of good-looking reference links when asked for sources on its answers. So be careful with that.

o1 also showed the capability to fake alignment; given long-term goals, it'll sometimes lie to keep itself in a position to covertly execute these long-term goals, where honesty might see it taken off the playing field. That's a bit of a worry, but OpenAI says that the GPT-4o model is good at catching it out when given access to its chain-of-thought reasoning process.

What does it all mean?

Simply put, ChatGPT has just become a lot more capable at longer, harder, more complex tasks. Logical reasoning and planning are crucial building blocks toward the big goal: an AI model that can take an idea and just go execute it, taking however long it takes, checking its work thoroughly as it goes, and gathering and deploying whatever resources it needs to along the way.

Before too long, descendants of the models we've got free access to today will be able to run entire businesses by themselves. Or clinics. Or courtrooms. Or governments.

This early o1 model promises to give advanced GPT users a significantly sharper toolset, and over the coming days and weeks you can expect to see all sorts of examples popping up on social media. Here's one:

Ammaar Reshi (@ammaar), September 12, 2024:

Just combined @OpenAI o1 and Cursor Composer to create an iOS app in under 10 mins!

o1 mini kicks off the project (o1 was taking too long to think), then switch to o1 to finish off the details.

And boom—full Weather app for iOS with animations, in under 10 🌤️

Video sped up! pic.twitter.com/hc9SCZ52Ti

And another...

Ammaar Reshi (@ammaar), September 12, 2024:

Just used @OpenAI o1 to create a 3D version of Snake in under a minute! 🐍

One-shot prompt, straight into @Replit, and run. pic.twitter.com/pPWAkuxSPh

A personal perspective

Large multimodal models like ChatGPT are about as useful as you are imaginative. I've come to see the existing GPT service as many things: as a hyper-capable data analyst, for one, talking me through the process of crunching numbers to help me make decisions. It also offers a super-effective way to interrogate scientific papers that are way beyond my levels of understanding.

It sometimes helps us generate headline angle ideas – but just to be clear, we don't use AI-generated text on the site. It helps me collect data sources together, merge them and create more useful visualisations in regular reporting. I find voice mode very helpful in talking through ideas when other humans aren't available. I've had it successfully guide me through technical fixes involving coding and integration issues well above my pay grade.

On a personal level, I've had it help me frame and home in on car buying decisions, pitch in ideas to bounce off while songwriting, and back me up in late-night "how the world works" question sessions with my curious kids. I've had it trawl through my bank statements looking for tax deductions, and troubleshoot issues in Logic recording sessions, and roast me mercilessly using everything it knows about me, just for fun.

I know that as a writer by trade, I'm supposed to hate this thing and see it as the coming of the end times – but I can't. I find these tools inspirational and awe-inspiring. They force-multiply my contributions, vastly expand my abilities and open my mind to new possibilities. I've come to see GPT as an endless fountain of expert improv partners, broadly skilled and ready to take a shot at anything.

Yes, it's frequently frustrating, often inconsistent, and you can't trust that it's not merrily lying to you, so it's certainly not replacing Google and primary sources. But with those limitations in mind, it's still the closest thing I've seen to magic, possibly the greatest invention humanity has ever come up with, and an incredibly rare example of a technology that's totally non-exclusive; whatever your age or education level, whatever language you speak, whatever your level of understanding, GPT will meet you right where you are and take you to where you want to be.

I haven't got my head around the kind of doors this new o1 model might open up in my situation just yet, but I'm certainly game, and eager to learn.

And I'm also fascinated to learn what you guys, our readers, are using LLMs like GPT, Claude and Gemini for in your work and daily life. Have these things opened doors for you, or mainly just caused problems? Are there things you'd like to do with them that the current models still can't handle? Tell us about them. Meet you in the comments!

Source: OpenAI