Convolutional neural networks, or CNNs, are the workhorses behind many of AI's greatest hits, like spotting faces in photos, reading handwriting, or translating languages. They're masters at pattern recognition, scanning raw data with tiny filters (called kernels) to pick out meaningful features, kind of like a digital magnifying glass that highlights what matters.

But this clever filtering comes at a steep cost. Most of the energy CNNs use goes into these convolution operations, which slide each filter across every pixel of an image, like running a marathon through the whole picture. It's powerful, but not exactly efficient.
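To get a feel for why that adds up, here is a rough back-of-the-envelope sketch that counts the multiply-accumulate steps in a single convolutional layer. The layer sizes are hypothetical (a 28×28 grayscale digit image, six 5×5 filters, no padding, stride 1) and are not taken from the study.

```python
def conv_macs(height, width, kernel_size, in_channels, out_channels):
    """Count multiply-accumulates for one conv layer (no padding, stride 1)."""
    out_h = height - kernel_size + 1   # positions the filter can occupy vertically
    out_w = width - kernel_size + 1    # positions the filter can occupy horizontally
    macs_per_output = kernel_size * kernel_size * in_channels
    return out_h * out_w * macs_per_output * out_channels


# Hypothetical layer: 28x28 single-channel image, six 5x5 filters.
print(conv_macs(28, 28, 5, 1, 6))  # 86400
```

Even this tiny illustrative layer needs tens of thousands of multiplications for one small image, and real networks stack many much larger layers.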

As AI systems grow bigger and hungrier, this brute-force method is starting to strain the system. Data centers are feeling the heat, literally, with rising power demands sparking concerns about an "AI recession," where the cost of keeping up could slow innovation.

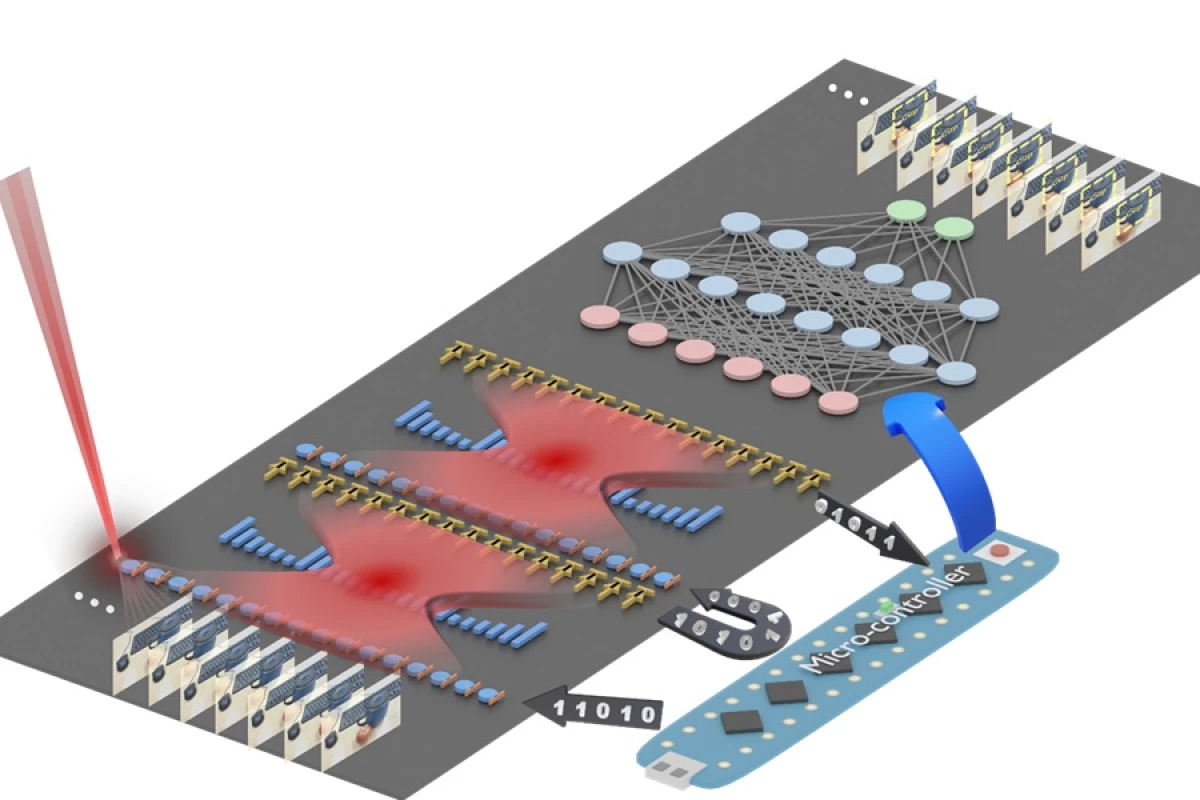

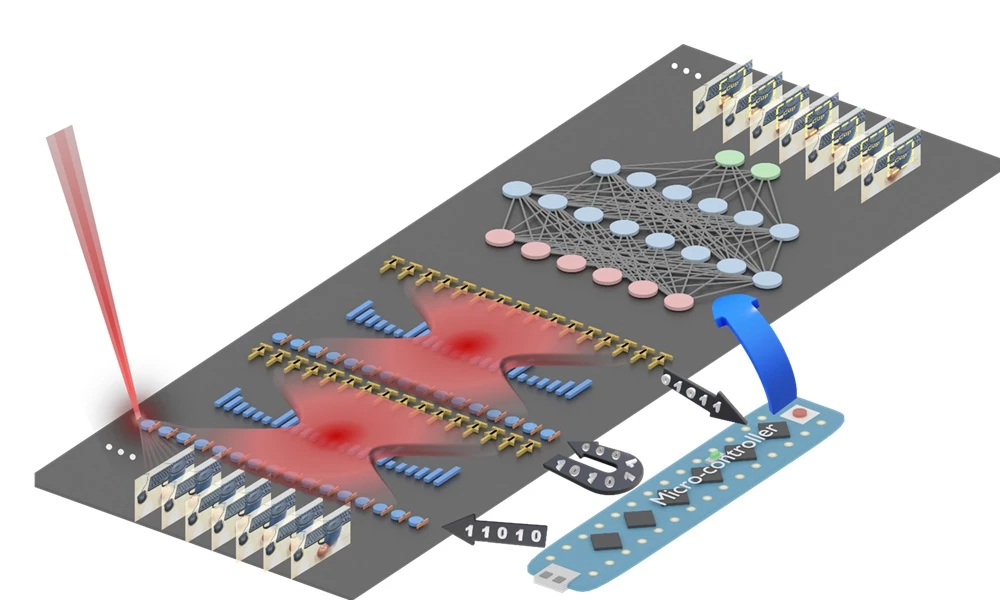

To tackle the growing energy demands of AI, researchers at the University of Florida have built something dazzling. Their new chip, called a photonic joint transform correlator (pJTC), swaps electricity for light to handle one of AI's most power-hungry jobs.

What makes it special is how it rewrites the rules of speed and efficiency. Instead of crawling along with the liquid crystals or micromirrors that traditional optical processors rely on, the pJTC programs data and filters at blistering GHz speeds. The chip cleverly repurposes trusted photonic components from optical transceivers and adds a fresh twist: on-chip silicon photonic Fresnel lenses that perform Fourier transforms (FT), doing complex light-based math right on the chip.

And then there's the laser magic. With chip-integrated lasers, the pJTC can juggle multiple computations at once using different colors of light, a technique called spectral multiplexing. Thanks to photonic wire bonding, it’s also compact and sleek.

In tests, the prototype chip correctly identified handwritten digits with 98% accuracy, rivaling traditional electronic processors.

Instead of crunching numbers the usual way, the chip transforms machine learning data into laser light. This light then travels through tiny Fresnel lenses etched into the chip, which bend and shape it to perform complex math, like a light-powered calculator. Once the math is done, the light is turned back into a digital signal, and voilà, the AI task is complete.
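The light path described above can be mimicked numerically. What follows is a minimal sketch of the general joint transform correlator idea, not the team's actual design: the signal and the filter sit side by side in one input, a Fourier transform stands in for the first lens, a photodetector records intensity, and a second transform yields the filter response. It is 1D for brevity, and the function name `jtc_correlate` and the spacing choices are assumptions made for illustration.

```python
import numpy as np


def jtc_correlate(signal, kernel):
    """Simulate a 1D joint transform correlator (illustrative only)."""
    signal = np.asarray(signal, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    ls, lk = signal.size, kernel.size
    if lk > ls:
        raise ValueError("kernel must not be longer than signal")

    # Place kernel and signal side by side, far enough apart that the
    # cross-correlation term does not overlap the autocorrelation terms.
    sep = ls + lk
    n = 2 * (sep + ls)
    joint = np.zeros(n)
    joint[:lk] = kernel
    joint[sep:sep + ls] = signal

    spectrum = np.fft.fft(joint)        # first lens: Fourier transform
    intensity = np.abs(spectrum) ** 2   # detector records intensity only
    # Second transform. The intensity is real and symmetric, so forward
    # and inverse FFT differ only by a scale factor here.
    plane = np.fft.ifft(intensity).real

    # The sliding-filter response sits at an offset equal to the separation.
    return plane[sep:sep + ls - lk + 1]


s = np.array([3.0, 4.0, 5.0, 1.0, 0.0, 2.0])
k = np.array([1.0, 2.0, -1.0])
print(np.allclose(jtc_correlate(s, k), np.correlate(s, k, mode="valid")))
```

The result matches what a conventional sliding-window filter would compute, which is the operation a CNN layer applies. In an optical version the two transforms come essentially for free as light passes through the lenses.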

Hangbo Yang, a research associate professor in Volker J. Sorger's group at the University of Florida and a co-author of the study, underscored the breakthrough: "This is the first time anyone has put this type of optical computation on a chip and applied it to an AI neural network. We can have multiple wavelengths, or colors, of light passing through the lens at the same time. That's a key advantage of photonics."

The research, published in Advanced Photonics, was conducted in collaboration with the Florida Semiconductor Institute, UCLA, and George Washington University. Study leader Volker J. Sorger, from the University of Florida, noted that chip manufacturers such as NVIDIA already use optical elements in some parts of their AI systems, which could make it easier to integrate this new technology.

"In the near future, chip-based optics will become a key part of every AI chip we use daily," Sorger suggested. "And optical AI computing is next."

By using multiple wavelengths of light at once, this photonic architecture can crunch data with incredible efficiency, hitting performance levels that leave traditional chips in the dust: up to 305 trillion operations per second per watt (TOPS/W) and 40.2 trillion operations per second per square millimeter (TOPS/mm²).
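One way to read the efficiency figure: operations per second per watt is the same as operations per joule, so inverting it gives the energy spent on each operation. The short sketch below does that arithmetic for the reported peak value. The one-billion-operation workload is a hypothetical example, and real workloads would not necessarily run at peak efficiency.

```python
def energy_per_op_joules(tops_per_watt):
    """Convert TOPS/W into joules per operation (1 W = 1 J/s)."""
    ops_per_joule = tops_per_watt * 1e12
    return 1.0 / ops_per_joule


per_op = energy_per_op_joules(305.0)          # reported peak efficiency
print(f"{per_op * 1e15:.2f} fJ per operation")  # 3.28 fJ per operation

# Hypothetical workload of one billion operations at peak efficiency.
hypothetical_ops = 1e9
print(f"{per_op * hypothetical_ops * 1e6:.2f} microjoules")  # 3.28 microjoules
```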

What does that mean in the real world?

It means this tiny, energy-smart chip could supercharge AI across the board, from nimble edge devices and high-performance computing rigs to sprawling cloud services. Its ability to perform convolutions with far less computational drag opens the door to faster, smarter AI in everything from self-driving cars to medical scans.


Source: SPIE--International Society for Optics and Photonics