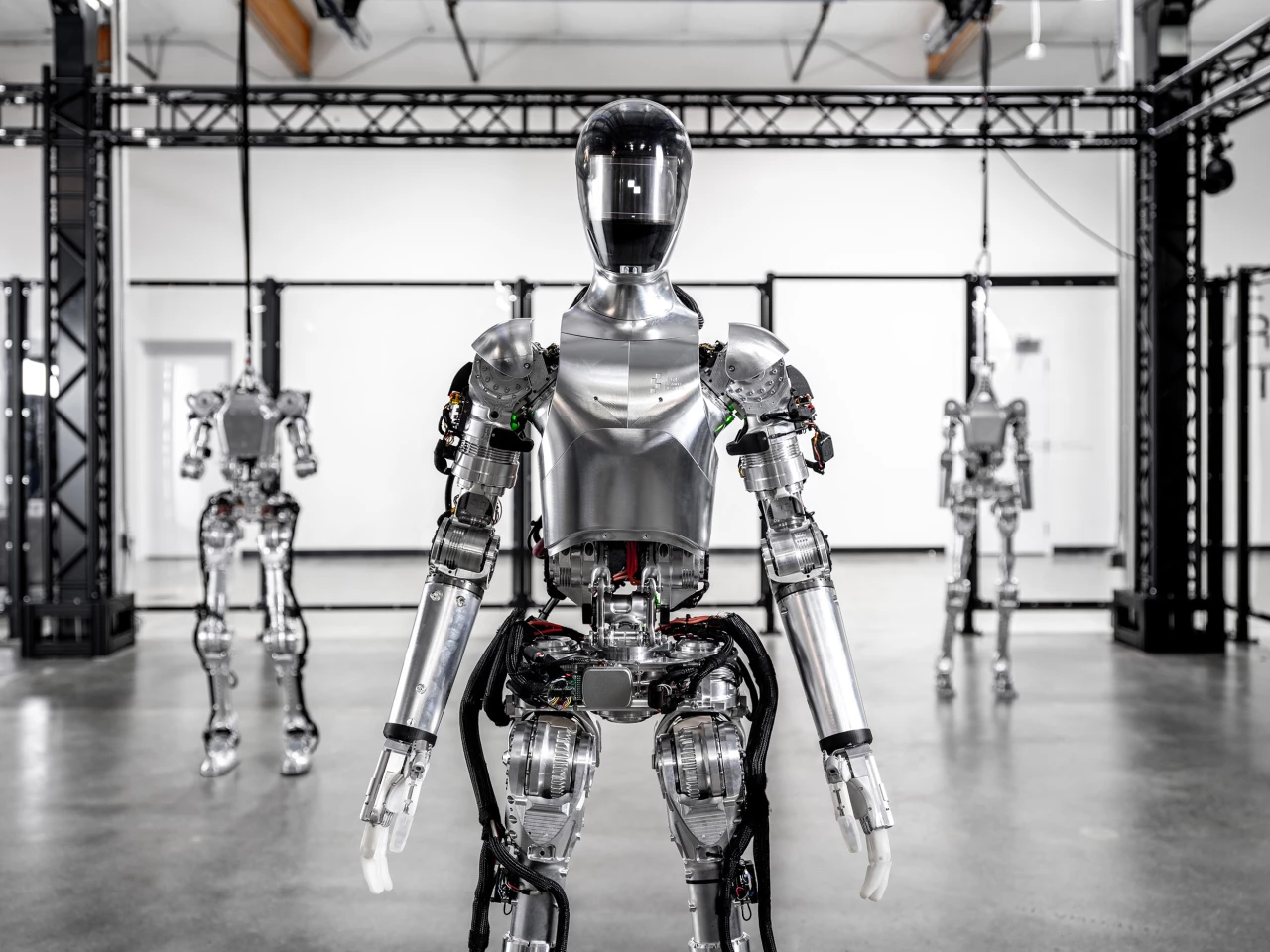

After just 12 months of development, Figure has released video footage of its humanoid robot walking – and it's looking pretty sprightly compared to its commercial competition. It's our first look at a prototype that should be doing useful work within months.

Figure is taking a bluntly pragmatic approach to humanoid robotics. It doesn't care about running, jumping, or doing backflips; its robot is designed to get to work and make itself useful as quickly as possible, starting with easy jobs involving moving things around in a warehouse-type environment, and then expanding its abilities to take over more and more tasks.

Staffed by 60-odd humanoid and AI industry veterans whom founder Brett Adcock lured away from leading companies like Boston Dynamics, Google DeepMind, Tesla and Apple, Figure is hitting the general-purpose robot worker space with the same breakneck speed that Adcock's former company Archer showed when it arrived late to the eVTOL party.

Check out the video below, showing "dynamic bipedal walking," which the team achieved in less than 12 months. Adcock believes that's a record for a brand new humanoid initiative.

It's a short video, but the Figure prototype moves relatively quickly and smoothly, compared to the somewhat unsteadier-looking gait demonstrated by Tesla's Optimus prototype back in May.

And while Figure's not yet ready to release video of it, Adcock tells us the robot is doing plenty of other things too: picking things up, moving them around and navigating the world, all autonomously, though not yet all at the same time.

The team's goal is to show this prototype doing useful work by the end of the year, and Adcock is confident that they'll get there. We caught up with him for a video chat, and what follows below is an edited transcript.

Loz: Can you explain a little more about torque-controlled walking, as opposed to position- and velocity-based walking?

Brett Adcock: There are two different styles that folks have used over the years. Position and velocity control basically dictates the angles of all the joints. It's pretty prescriptive about how you walk, tracking the center of mass and center of pressure, so you get a very Honda ASIMO style of walking. They call it ZMP, zero moment point.

It's kind of slow; they're always centering the weight over one of the feet. It's not very dynamic, in the sense of being able to put pressure on the world and really understand and react to what's happening. The real world's not perfect; it's always a little bit messy. Torque control lets us measure torque, or moments, in the joints themselves: every joint has a little torque sensor in it. That allows us to be more dynamic in the environment, because we can understand what forces we're putting on the world and react to them instantaneously. It's much more modern, and we think it's the path toward human-level performance in a very complex world.
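For readers who want the engineering distinction Adcock is drawing, here's a minimal Python sketch under loudly simplified assumptions: a single joint, the textbook cart-table (linear inverted pendulum) model with a constant center-of-mass height, and hypothetical function names, gains and numbers. It is not Figure's controller. The first two functions show the ZMP idea, where the walker keeps the zero moment point inside the support foot; the others show a torque-controlled joint commanding a torque rather than an angle, and a joint torque sensor flagging contact when the measured torque disagrees with what the model expected.

```python
"""Toy illustration of ZMP walking vs. torque-controlled joints.

All names, gains and numbers are hypothetical; this is not Figure's software.
"""

G = 9.81  # gravitational acceleration, m/s^2


def zmp_x(com_x: float, com_height: float, com_accel_x: float) -> float:
    """Zero moment point (m) along x in the cart-table model.

    Assumes the center of mass (CoM) stays at a constant height, which gives
    p = x - (z_c / g) * x_ddot.
    """
    return com_x - (com_height / G) * com_accel_x


def zmp_is_stable(zmp: float, heel_x: float, toe_x: float) -> bool:
    """ZMP-style walking keeps the ZMP inside the support foot's footprint."""
    return heel_x <= zmp <= toe_x


def impedance_torque(q: float, dq: float, q_target: float,
                     stiffness: float, damping: float,
                     gravity_torque: float) -> float:
    """Joint torque command (Nm) for a simple joint-impedance law.

    Instead of dictating an angle, the controller asks the motor for a torque:
    a spring toward q_target, a damper on joint velocity dq, plus a
    feedforward term that cancels gravity on the link.
    """
    return stiffness * (q_target - q) - damping * dq + gravity_torque


def contact_detected(measured_torque: float, expected_torque: float,
                     threshold: float) -> bool:
    """Flag contact when the joint torque sensor disagrees with the model.

    If the leg meets the ground (or a box) earlier than planned, the measured
    torque departs from the torque the model predicted for free motion.
    """
    return abs(measured_torque - expected_torque) > threshold


if __name__ == "__main__":
    # CoM 0.9 m high, accelerating forward at 1 m/s^2: the ZMP moves behind the CoM.
    p = zmp_x(com_x=0.0, com_height=0.9, com_accel_x=1.0)
    print(f"ZMP x = {p:.3f} m, inside foot: {zmp_is_stable(p, -0.10, 0.15)}")

    tau = impedance_torque(q=0.0, dq=0.0, q_target=0.1,
                           stiffness=100.0, damping=5.0, gravity_torque=2.0)
    print(f"commanded torque = {tau:.1f} Nm")
    print("contact:", contact_detected(measured_torque=15.0,
                                       expected_torque=tau, threshold=2.0))
```

Turning the stiffness way up makes the impedance law behave much like stiff position control; keeping it moderate and leaning on the torque sensor is what lets a controller respond to forces instead of simply holding angles.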

Is it analogous to the way that humans perceive and balance?

We sense torque and pressure on objects; we can touch the ground and understand that we're touching the ground, things like that. When you work with positions and velocities, you don't really know whether you're making an impact with the world.

We're not the only ones; torque-controlled walking is probably what all the newest groups are doing. Boston Dynamics does it with Atlas. It's at the bleeding edge of what the best groups in the world have been demonstrating. So this isn't the first time it's been demonstrated, but it's really difficult on both the software and hardware side. What's interesting for us is that very few groups going after the commercial market have robots with hands that are dynamically walking through the world.

This is the first big check for us – to be able to technically show that we're able to do it, and do it well. And the next big push for us is to integrate all the autonomous systems into the robot, which we're doing actively right now, so that the robot can do end-to-end applications and useful work autonomously.

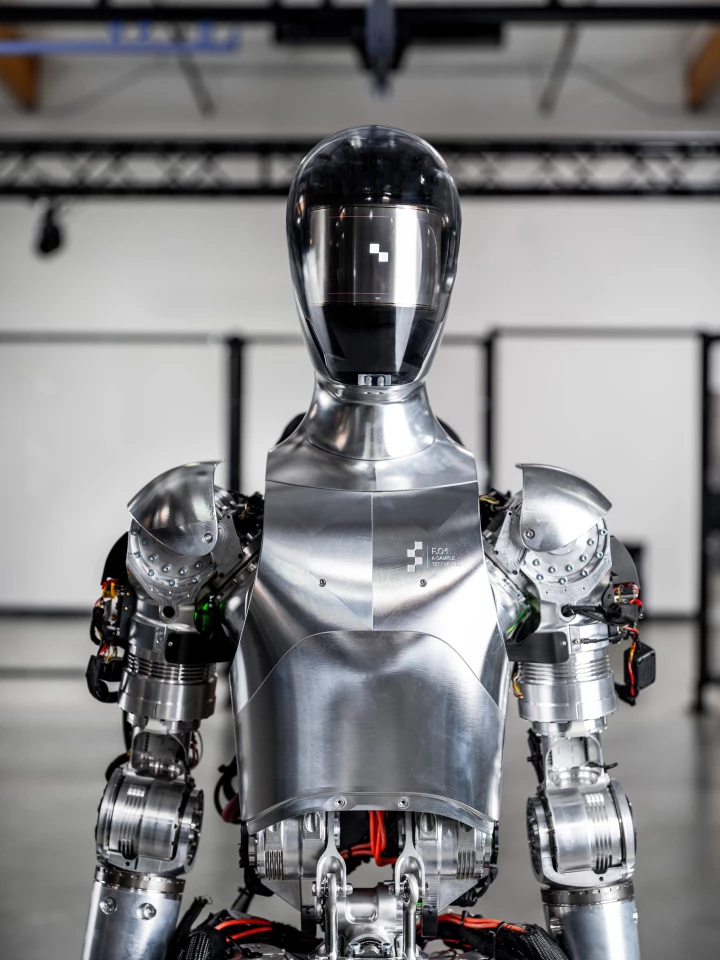

Alright, so I've got some photos here of the robot prototype. Are these hands close to the ones that are already doing dynamic gripping and manipulation?

Yep. Those are our in-house designed hands, with silicone soft-grip fingertips. They're the hands that we'll use as we go into a production environment too.

Okay. And what's it able to do so far?

We've been able to do both single-handed and bimanual manipulation, grabbing objects with two hands and moving them around. We've been able to do bimanual manipulation of boxes and bins and other assets that we see in warehouse and manufacturing environments. We've also done single-handed grips of different consumer applications, so like, bags and chips and other types of things. And we've done those pretty successfully in our lab at this point.

Right. And this is via teleoperation, or have you got some autonomous actions running?

Both. Yeah, we've done teleoperation work, mostly from an AR training perspective, and then most of the work we've done is with fully end-to-end systems that are not teleoperated.

Okay, so it's picking things up by itself, and moving them around, and putting them down. And we're talking at the box scale here, or the smaller, more complex item level?

We've done some complex items and single-handed grabs, but we've done a bunch of work on being able to grab tote bins and other types of bins. So yeah, I would say bins, boxes, carts, individual consumer items, we've been able to grab those successfully and move them around.

And the facial screen is integrated and up and running?

Yeah, based on what the robot's actually doing, we display a different type of application and design language on the screen. We've done some early work on human-machine interaction, around how we're going to show the humans in the world what the robot is actively doing and what it'll be doing next. You want to know the robot's powered on, and when it's actively in a task, you want to know what it plans to do after that task. We're looking at communicating intent via video and potentially audio.

Right, you might have it speaking?

Yep. It might want to know what to do next and might want commands from you. You might want to ask it, like, why are you doing this right now? And you might want a response back.

Right, so you're building that stuff in already?

We're trying to! We're doing early stuff there.

So what are the most powerful joints, and what kind of torque are those motors putting out?

The knee and hip have over 200 newton-meters of torque.

Okay. And apart from walking, can it squat down at this point?

We're not going to show it yet, but it can squat down, we've picked up bins and other things now, and moved them around. We have a whole shakeout routine with a lot of different movements, to test range of motion. Reaching up high and down low...

Morning yoga!

Tesla's doing yoga, so maybe we'll be a yoga instructor.

Maybe the Pilates room is free! Very cool. So this is the fastest you're aware of that any company has managed to get a robot up and walking by itself?

Yeah, I mean if you look at the time we've spent engineering this robot, it's been about 12 months. I don't know of anybody that's gotten here better or faster. I happen to think it's probably one of the fastest in history to do it.

Okay. So what are you guys hoping to demonstrate next?

Next is for us to be able to do end-to-end commercial applications; real work. And to have all our autonomy and AI systems running on board. And then be able to move the kinds of items around that are central to what our customers need. Building more robots, and getting the autonomy working really well.

Okay. And how many robots have you got fully assembled at this point?

We have five units of that version in our facility, at different maturity levels. Some are just the torso, some are fully built, some are walking, some are close to walking, things like that.

Gotcha. And have you started pushing them around with brooms yet?

Yeah, we've done a decent amount of push-recovery testing... It always feels weird pushing the robot, but yeah, we've done a decent amount of that work.

We better push them while we can, right? They'll be pushing us soon enough.

Yeah, for sure!

Okay, so it's able to recover from a push while it's walking?

We haven't done that exact thing, but you can push it when it's standing. The focus really hasn't been on making it robust to large disturbances while walking. It's mostly been to get the locomotion control right, then get this whole system doing end-to-end application work, and then we'll probably spend more time on more robust push recovery and other things like that into early next year. We'll get the end-to-end applications running, and then we'll mature them: make them higher performance, make them faster, and then more robust, basically. We want you to see the robot doing real work this year; that's our goal.

Gotcha. Can it pick itself up from a fall at this stage?

We have designed it to do that, but we haven't demonstrated that.

It sounds like you've got most of the major building blocks in place, to get those early "pick things up and put them down" kind of applications happening. Is it currently capable of walking while carrying a load?

Yeah, we've actually walked while carrying a load already.

Okay. So what are the key things you've still got to knock over before you can demonstrate that useful work?

It's really about stitching everything together well and making sure that the perception systems can see the world and that we don't collide with it: the knees don't hit things when we're going to grab them, and the arms aren't colliding with objects in the world. We have manipulation and perception policies on the AI side that we want to integrate into the system to do it fully end-to-end.

There are a lot of little things to look at. We want to do better motion planning and other types of control work to make it far more robust, so it can do things over and over again and recover from failures. So there's a whole host of smaller things that we're trying to do well and stitch together. We've demonstrated the first basic end-to-end application in our lab, and we just need to make it far more robust.

What was that first application?

It's a warehouse and manufacturing-related objective... Basically, moving objects around our facility.

In terms of SLAM and navigation, perceiving the world, where's that stuff at?

We're localizing now in a map that we're building in real time. We have perception policies running in real time, including occupancy and object detection: building a little 3D simulation of the world and labeling objects to know what they are. This is what your Tesla does when it's driving down the road.

And then we have manipulation policies for grabbing objects, and behaviors that help stitch all of that together. We have a big board on how to integrate all these streams and make them work reliably on the robot we've developed over the last year.
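Figure hasn't said how its occupancy representation works. For a sense of what "occupancy" means in this context, here's a minimal Python sketch of the standard textbook technique, a log-odds occupancy grid, kept 2D for brevity. The grid size, the 0.7/0.3 sensor probabilities and the class name are illustrative assumptions. Each camera frame marks cells the robot saw through as more likely free and cells where it saw a surface as more likely occupied, and repeated observations accumulate.

```python
"""Minimal 2D log-odds occupancy grid (textbook approach, not Figure's).

Grid size, sensor probabilities and names are illustrative assumptions.
"""
import math

L_OCC = math.log(0.7 / 0.3)   # log-odds added when a cell is observed occupied
L_FREE = math.log(0.3 / 0.7)  # log-odds added when a cell is observed free


class OccupancyGrid:
    def __init__(self, width: int, height: int) -> None:
        # 0.0 log-odds corresponds to p = 0.5, i.e. "unknown".
        self.log_odds = [[0.0] * width for _ in range(height)]

    def update(self, free_cells, occupied_cells) -> None:
        """Fuse one observation: cells seen through vs. cells where a surface was seen."""
        for x, y in free_cells:
            self.log_odds[y][x] += L_FREE
        for x, y in occupied_cells:
            self.log_odds[y][x] += L_OCC

    def probability(self, x: int, y: int) -> float:
        """Convert a cell's log-odds back to an occupancy probability."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.log_odds[y][x]))


if __name__ == "__main__":
    grid = OccupancyGrid(5, 5)
    # Two frames of the same view: open floor at x = 0..2, an obstacle at (3, 2).
    for _ in range(2):
        grid.update(free_cells=[(0, 2), (1, 2), (2, 2)], occupied_cells=[(3, 2)])
    print(f"obstacle cell: {grid.probability(3, 2):.2f}")
    print(f"floor cell:    {grid.probability(1, 2):.2f}")
    print(f"unseen cell:   {grid.probability(4, 4):.2f}")
```

Working in log-odds turns each update into a simple addition, which is one reason the approach is popular for maps that have to be rebuilt continuously as the robot moves.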

Right. So it's totally camera-based, is it? No time-of-flight kind of stuff?

As of right now, we're using seven RGB cameras. It's got 360-degree vision, it can see behind it, to the sides, it can look down.

So in terms of the walking video, is that one-to-one speed?

It's one to one, yeah.

It moves a bit!

It's awesome, right?

What sort of speed is it at this point?

Maybe a meter a second, maybe a little less.

Looks a bit quicker than a lot of the competition.

Yep. Looks pretty smooth too. So yeah, it's pretty good, right? It's probably some of the best walking I've seen from any humanoid. Obviously Boston Dynamics has done a great job on the non-commercial research side; PETMAN is probably the best humanoid walking gait of all time.

That was the military looking thing, right?

Yeah, someone dressed him up in a gas mask.

He had some swagger, that guy! Are your leg motors and whatnot sufficient to start getting it running at some point, jumping, that sort of stuff? I know that's not really in your wheelhouse.

We don't want to do that stuff. We don't want to jump and do parkour and backflips and box jumps. Like, normal humans don't do that. We just want to do human work.

Thanks to Figure's Brett Adcock and Lee Randaccio for their assistance with this story.

Source: Figure