Two new technologies let a pair of glasses track the wearer's eye movements and read their facial expressions, respectively. Both systems use sonar instead of cameras, for better battery life and increased user privacy.

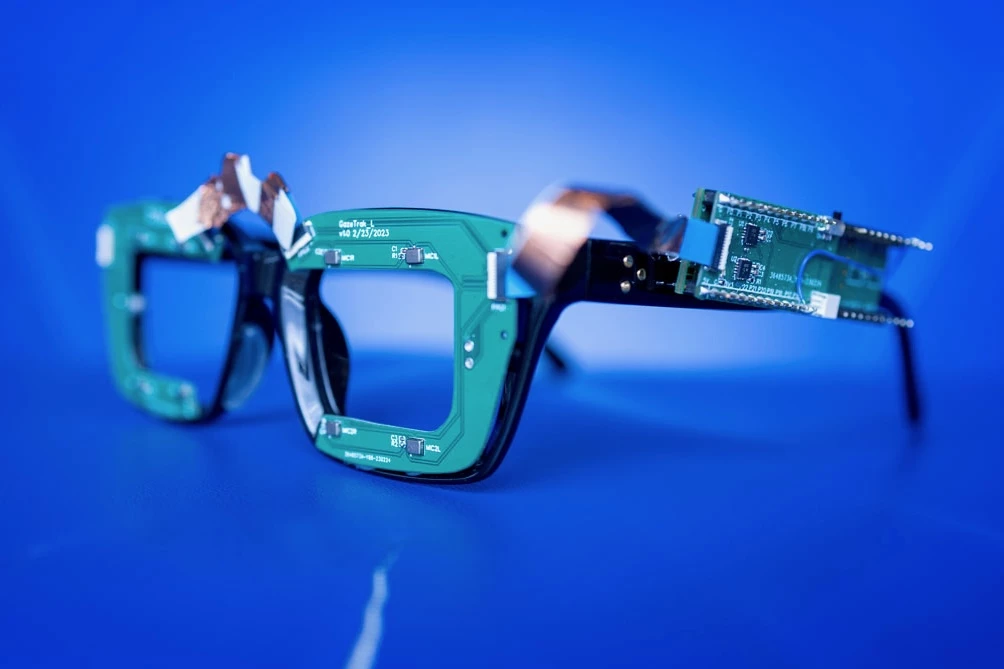

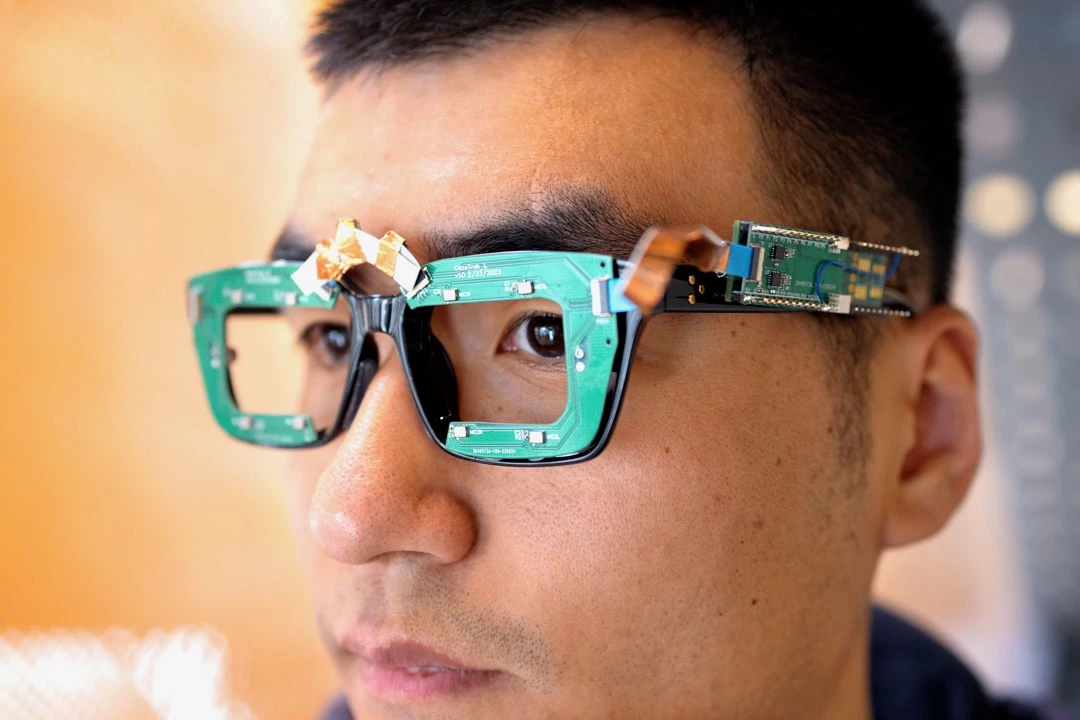

Known as GazeTrak and EyeEcho, the technologies are being developed at Cornell University by a team led by Information Science doctoral student Ke Li.

Both setups could be incorporated into third-party smartglasses or VR headsets, where they would use far less power than camera-based systems. They also wouldn't capture any images of the user's face.

GazeTrak presently utilizes one speaker and four microphones arranged around the inside of each lens frame on a pair of glasses (for a total of two speakers and eight mics). The speakers emit pulsed inaudible sound waves which echo off the eyeball and get picked up by the microphones.

Because human eyeballs aren't perfect spheres, each echo takes a different amount of time to reach each of the mics, depending on which way the eyeball is facing.

AI-based software running on a wirelessly linked smartphone or laptop continuously analyzes those millisecond differences, making it possible to track the direction of the user's gaze. Importantly, the technology isn't adversely affected by loud background noise.
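To make the echo-timing idea concrete, here is a minimal Python sketch of how a delay between an emitted pulse and its echo could be measured at several microphones. It is not the researchers' pipeline, which relies on a trained AI model. The sampling rate, pulse shape, per-microphone delays and all function names are assumptions made up for illustration, and the toy echo is noise-free.

```python
import math

SAMPLE_RATE_HZ = 48_000      # assumed sampling rate (not from the article)
SPEED_OF_SOUND_M_S = 343.0   # approximate speed of sound in air near 20 °C


def make_pulse(n_samples=32, freq_hz=20_000.0):
    """Hann-windowed sine burst standing in for one inaudible pulse (hypothetical shape)."""
    return [
        0.5 * (1 - math.cos(2 * math.pi * i / (n_samples - 1)))
        * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE_HZ)
        for i in range(n_samples)
    ]


def simulate_echo(pulse, delay_samples, total_samples=128, gain=0.5):
    """Place an attenuated copy of the pulse at the given delay (noise-free toy model)."""
    received = [0.0] * total_samples
    for i, value in enumerate(pulse):
        received[delay_samples + i] += gain * value
    return received


def estimate_delay(received, pulse):
    """Return the lag (in samples) where cross-correlation with the pulse peaks."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(received) - len(pulse) + 1):
        score = sum(received[lag + j] * p for j, p in enumerate(pulse))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def delay_to_path_m(delay_samples):
    """Convert a delay in samples to the total path length travelled, in metres."""
    return delay_samples / SAMPLE_RATE_HZ * SPEED_OF_SOUND_M_S


pulse = make_pulse()
true_delays = [10, 12, 15, 11]  # made-up per-microphone delays for one gaze direction
estimated = [estimate_delay(simulate_echo(pulse, d), pulse) for d in true_delays]
print(estimated)
```

In a setup like this, the pattern of delays across the four microphones, rather than any single value, is what would change as the eyeball rotates. A learned model could then map that pattern to a gaze direction.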

In its current proof-of-concept form, GazeTrak isn't as accurate as conventional camera-based eye-tracking wearables. That said, it consumes only about 5% as much power as such devices. The scientists state that if a GazeTrak system were to use a battery of the same capacity as that of the existing Tobii Pro Glasses 3, it could run for 38.5 hours, compared with 1.75 hours for the Tobii glasses.
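The two runtime figures can be checked against the power claim with a quick calculation. The sketch below assumes, as the comparison does, identical battery capacity, and additionally assumes a constant power draw, so that runtime is inversely proportional to power. The variable names are illustrative only.

```python
# Runtime figures quoted in the article, for a battery of the same capacity.
tobii_runtime_h = 1.75
gazetrak_runtime_h = 38.5

# With equal capacity and constant draw, runtime is inversely proportional to power.
runtime_ratio = gazetrak_runtime_h / tobii_runtime_h
power_fraction = tobii_runtime_h / gazetrak_runtime_h

print(f"GazeTrak runs {runtime_ratio:.0f}x longer")
print(f"GazeTrak draws about {power_fraction * 100:.1f}% of the power")
```

Under those assumptions the runtimes imply a 22-fold difference, or roughly 4.5% of the power draw, which is consistent with the rounded 5% figure.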

Additionally, the researchers state the system's accuracy should improve dramatically as the technology is developed further.

EyeEcho also sends out sound waves and receives their echoes, although it does so using one speaker and one microphone located next to each of the glasses' two arm hinges (for a total of two speakers and two mics).

In this case, subtle movements of the facial skin are what affect the amount of time that elapses between each pulse being emitted and its echo being detected. The AI software matches these time differences to specific skin movements, which are in turn matched to specific facial expressions.

After just four minutes of training on each of 12 test subjects' faces, the system proved to be highly accurate at reading their expressions, even when they were performing a variety of everyday activities in different environments.

Ke Li and colleagues previously developed a similar expression-reading system called EarIO, in which the speakers and mics are integrated into earphones. Compared to that setup, EyeEcho is said to offer better performance using less training data, and its accuracy remains stable over a longer period of time.

"There are many camera-based systems in this area of research or even on commercial products to track facial expressions or gaze movements, like Vision Pro or Oculus," said Li. "But not everyone wants cameras on wearables to capture you and your surroundings all the time."

Papers on GazeTrak and EyeEcho will be presented later this year, and can currently be accessed via arXiv.

And as if these systems weren't enough, Cornell scientists previously created yet another face-reading sonar technology that could be built into smartglasses. Named EchoSpeech, it monitors the wearer's lips to read words that the person silently speaks.

Source: Cornell University