Figure's Brett Adcock claimed a "ChatGPT moment" for humanoid robotics over the weekend. Now we know what he means: the robot can watch humans doing tasks, build its own understanding of how to do them, and start doing them entirely autonomously.

General-purpose humanoid robots will need to handle all sorts of jobs. They'll need to understand all the tools and devices, objects, techniques and objectives we humans use to get things done, and they'll need to be as flexible and adaptable as we are in an enormous range of dynamic working environments.

They're not going to be useful if they need a team of programmers telling them how to do every new job; they need to be able to watch and learn. Multimodal AIs capable of watching and interpreting video, then driving robots to replicate what they see, have been taking revolutionary strides in recent months, as evidenced by Toyota's incredible "large behavior model" demonstration in September.

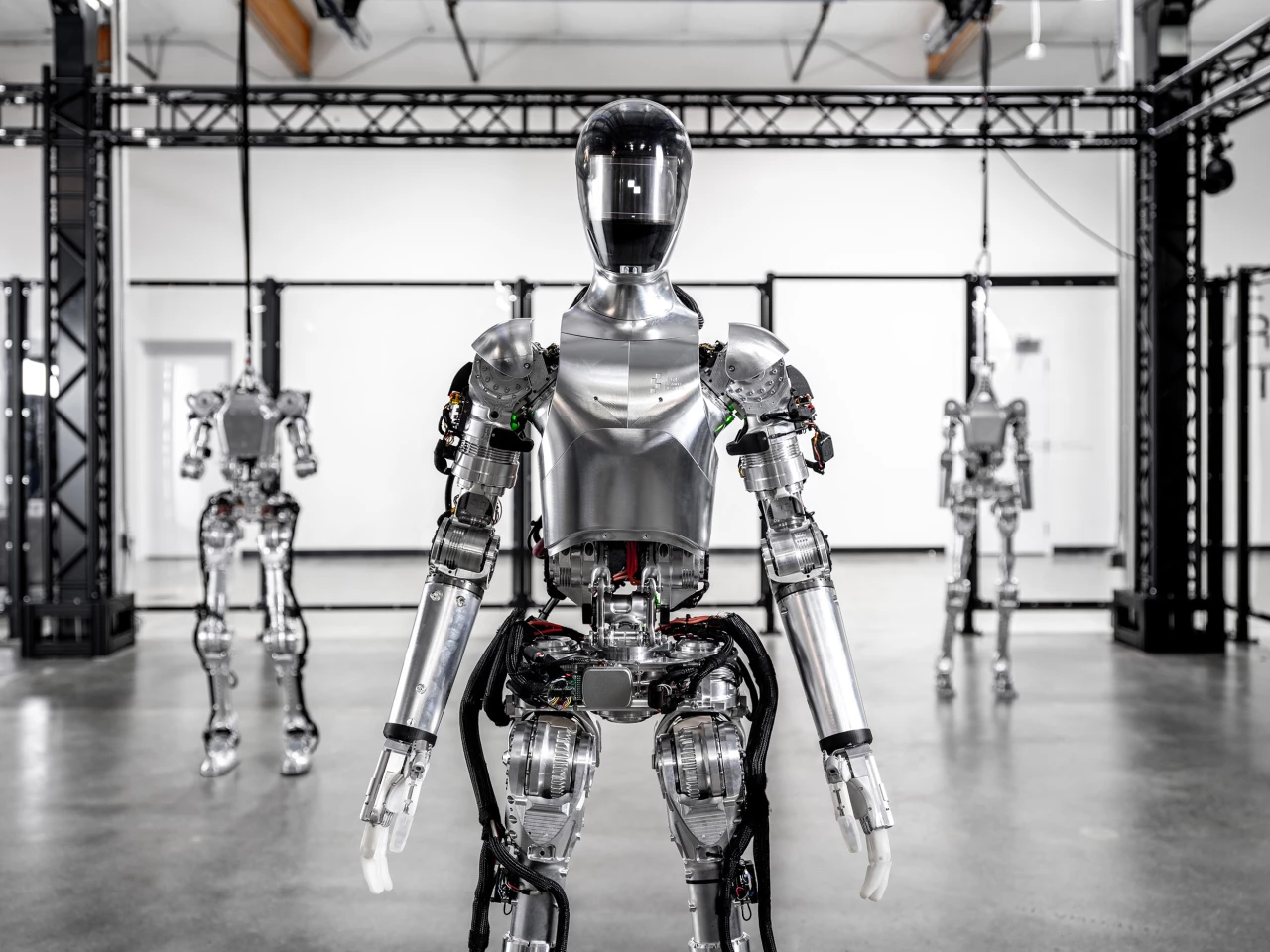

But Toyota is using bench-based robot arms in a research center. Figure, like Tesla, Agility, and a growing number of other companies, is laser-focused on self-sufficient full-body humanoids that can theoretically go into any workplace and eventually learn to take over any human task. And these are not research programs; these companies want products out there in the market yesterday, starting to pay their way and get useful work done.

Adcock told us he hoped to have the 01 robot deployed and demonstrating useful work around Figure's own premises by the end of 2023 – and while that doesn't seem to have transpired at this point, a watch-and-learn capability in a humanoid is indeed big news.

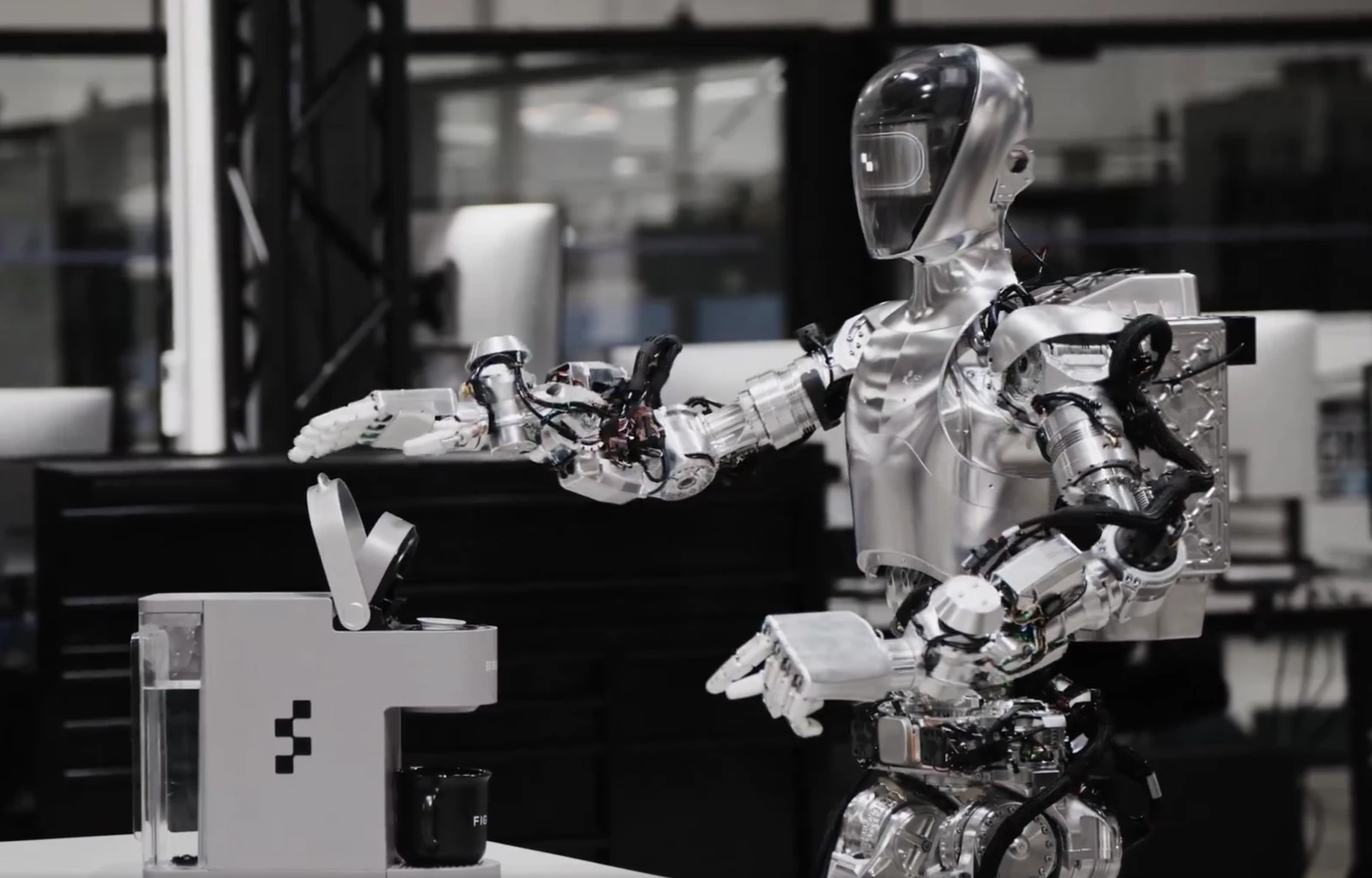

The demonstration in question, mind you, is not the most earth-shatteringly impressive task; the Figure robot is shown operating a Keurig coffee machine, with a cup already in it. It responds to a verbal command, opens the top hatch, pops a coffee pod in, closes the hatch, presses the button, and lets the guy who asked for the coffee grab the full cup out of the machine himself. Check it out:

Figure-01 has learned to make coffee ☕️

— Brett Adcock (@adcock_brett) January 7, 2024

Our AI learned this after watching humans make coffee

This is end-to-end AI: our neural networks are taking video in, trajectories out

Join us to train our robot fleet: https://t.co/egQy3iz3Ky pic.twitter.com/Y0ksEoHZsW

So yes, it's fair to say the human and the Keurig machine are still doing some heavy lifting here – but that's not the point. The point is, the Figure robot took 10 hours to study video, and can now do a thing by itself. It's added a new autonomous action to its library, transferable to any other Figure robot running on the same system via swarm learning.

If that learning process is robust across a broad range of different tasks, then there's no reason why we shouldn't start seeing a new video like this every other day, as the 01 learns to do everything from peeling bananas, to putting pages in a ring binder, to screwing jar lids on and off, to using spanners, drills, angle grinders and screwdrivers.

It shouldn't be long before it can go find a cup in the kitchen, check that the Keurig's plugged in and has plenty of water in it, make the damn press-button coffee, and bring it to your desk without spilling it – a complex task making use of its walking capabilities and Large Language Model AI's ability to break things down into actionable steps.

So don't get hung up on the coffee; watch this space. If Figure's robot really knows how to watch and learn now, we're going to feel a serious jolt of acceleration in the wild frontier of commercial humanoid robotics as 2024 starts to get underway. And even if Figure is overselling its capabilities – not that any tech startup would dream of doing such a thing – it ain't gonna be long, and there's a couple dozen other teams manically racing to ship robots with these capabilities. This is happening.

Today we're unveiling our Figure 01 robot.

— Figure (@Figure_robot) October 17, 2023

Watch as we demonstrate dynamic bipedal walking - a milestone the team was able to hit within 12 months of company inception.

Here are the details: pic.twitter.com/tSNVLioXpC

Make no mistake: humanoid robots stand to be an absolutely revolutionary technology once they're deployed at scale, capable of fundamentally changing the world in ways not even Adcock and the other leaders in this field can predict. The meteoric rise of GPT and other language model AIs has made it clear that human intelligence won't be all that special for very long, and the parallel rise of the humanoids is absolutely designed to put an end to human labor.

Things are happening right now that would've been absolutely unthinkable even five years ago. We appear to be right at the tipping point of a technological and societal upheaval bigger than the agricultural or industrial revolutions, one that could unlock a world of unimaginable ease and plenty, and/or possibly relegate 95% of humans to the status of zoo animals or house plants.

Without internationally enforced speed limits on AI, humanity is very unlikely to survive. From AI's perspective in 2-3 years from now, we look more like plants than animals: big slow chunks of biofuel showing weak signs of intelligence when undisturbed for ages (seconds) on end.…

— Andrew Critch (h/acc) (@AndrewCritchPhD) July 16, 2023

How are you feeling about all this, folks? Personally, I'm a little wigged out. My eyebrows can only go so high, and they've been there for a good while now. I'm getting new forehead wrinkles.

Source: Figure